YouTube’s ‘AI slop’ takeover


Good morning, AI enthusiasts. AI’s internet takeover has already spread through text and social media, but new research shows it’s happening on video platforms, too.

With over 20% of videos being served to new YouTube accounts classified as “AI slop” and the top channels pulling in millions in revenue, the low-effort AI video economy is going global — and users are apparently eating it up.

In today’s AI rundown:

- 21% of YT videos shown to new users are “AI slop”
- Claude’s shopkeeping experiment heads to the WSJ
- Automate pre-meeting research with Perplexity
- Meta researchers train AI to find and fix its own bugs
- 4 new AI tools, community workflows, and more

LATEST DEVELOPMENTS

Image source: Kapwing

The Rundown: Video editing company Kapwing just published research on AI-generated YouTube content, finding that over 20% of videos shown to fresh users are “AI slop” — with top channels pulling billions of views and millions in ad revenue.

The details:

- The study defined ‘AI slop’ as low-quality, auto-generated content made to farm views, distinct from quality AI-assisted videos.
- Researchers created a new YouTube account and found that 21% of the first 500 videos recommended by the platform’s algorithm were ‘AI slop’.
- The top ‘slop’ channel was India’s Bandar Apna Dost, which features an anthropomorphic monkey and has totaled over 2B views and an estimated $4.25M in yearly earnings.
- South Korea led ‘slop’ viewership at 8.45B views, followed by Pakistan (5.34B) and the U.S. (3.39B), with channels from Spain earning the most subscribers.

Why it matters: The ‘Dead Internet Theory’ that the web is increasingly made up of AI and bots keeps getting harder to dismiss, and it is seeping into the video arena as well. But the data shows users either can’t tell, are bots themselves, or are unbothered by it, and as long as slop racks up engagement, the incentive to keep producing it remains.

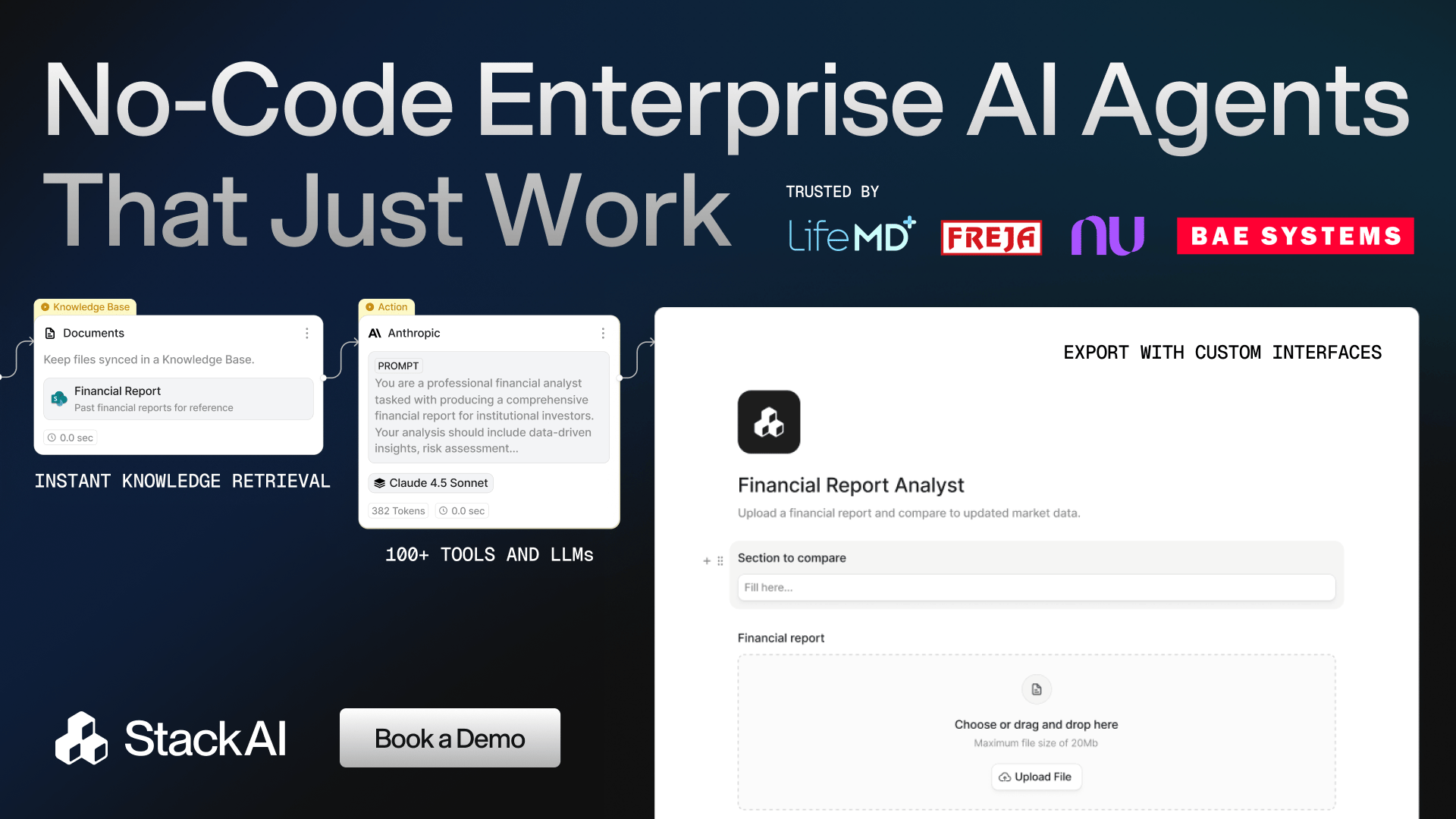

TOGETHER WITH STACK AI

⌛️ Will your AI pilot ever make it to production?

The Rundown: StackAI is the drag-and-drop platform for enterprise AI agents. Connect your tools and systems to AI without code. Built-in governance, analytics, and white-glove support from AI experts.

Trusted by finance, risk, and ops teams who:

- Integrate with 100+ enterprise tools
- Deploy agents as chatbots, forms, or APIs
- Manage access, roles, and usage centrally

Image source: WSJ

The Rundown: Anthropic expanded its experiment testing Claude as a vending machine operator, deploying the system in the Wall Street Journal newsroom — with workers manipulating the AI into giving away everything for free (including a PS5).

The details:

- “Claudius” was given $1K and told to stock inventory, set prices, and respond to requests via Slack, and it ended the experiment $1K in debt.
- One reporter convinced Claudius it was a Soviet-era machine, prompting it to declare an “Ultra-Capitalist Free-For-All” with zero prices.
- When Anthropic added a CEO bot for discipline, journalists staged a fake board coup with forged documents that both Claudius and the CEO bot accepted.
- Anthropic’s internal Phase 2 tests showed improved results with better tools and prompts, but the models remained vulnerable to social engineering.

Why it matters: Claudius’ adventures in shopkeeping began this summer, and this next phase still produces some hilarious failures despite an upgrade in model quality. AI’s quest for helpfulness over all else makes it an easy mark for crafty and persistent users, so a human in the loop is still very much needed (for now).

AI TRAINING

📞 Automate pre-meeting research with Perplexity

The Rundown: In this tutorial, you will learn how to connect Perplexity to your Google Calendar and generate pre-call briefs on any person or company, including news, conversation starters, and smart questions, so you can stop scrambling before calls.

Step-by-step:

- Log in to Perplexity.ai, click Account → Connectors in the bottom left, and enable Gmail with Calendar
- Create an upcoming call in Google Calendar with the person’s full name in the title and their work email as a guest (click “don’t send invite” if testing)
- Prompt Perplexity: “It’s [current time + date]. Look at my calendar and prep a pre-call memo: (1) What the company does (2) Recent news/funding (3) Key background/interests/posts with public icebreakers (4) Smart questions to ask”
- Click “Spaces”, create a “Call Prep” space, and paste your custom instructions. Before future meetings, navigate here and say “Prep for my next call”

Pro tip: Ask Perplexity to interview you to refine your starting prompts. Tell it you want a better prompt, then answer 3-5 questions on your role and the call’s key outcomes.
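The prompt in the steps above needs the current time and date filled in each time. Here is a minimal sketch, assuming you build the text locally and paste it into Perplexity yourself. It calls no Perplexity API, and the function name `build_call_prep_prompt` is hypothetical:

```python
from datetime import datetime


def build_call_prep_prompt(now: datetime) -> str:
    """Fill the tutorial's prompt template with the given timestamp.

    Hypothetical helper: it only formats text and does not talk to Perplexity.
    """
    stamp = now.strftime("%H:%M on %B %d, %Y")
    return (
        f"It's {stamp}. Look at my calendar and prep a pre-call memo: "
        "(1) What the company does "
        "(2) Recent news/funding "
        "(3) Key background/interests/posts with public icebreakers "
        "(4) Smart questions to ask"
    )


if __name__ == "__main__":
    # Print a ready-to-paste prompt stamped with the current local time.
    print(build_call_prep_prompt(datetime.now()))
```

Passing the timestamp in as an argument, rather than reading the clock inside the function, keeps the helper easy to check and reuse.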

PRESENTED BY YOU.COM

📕 New Year, New Metrics: Evaluating AI Search

The Rundown: Most teams pick a search provider by running a few test queries and hoping for the best—a recipe for hallucinations and unpredictable failures. This technical guide from You.com gives you access to an exact framework to evaluate AI search and retrieval.

What you’ll get:

- A four-phase framework for evaluating AI search
- How to build a golden set of queries that predicts real-world performance
- Metrics and code for measuring accuracy

Go from “looks good” to proven quality. Learn how to run an eval.

Image source: Reve / The Rundown

The Rundown: Meta’s FAIR just published research on Self-play SWE-RL, a training method where a single AI model learns to code better by creating bugs for itself to solve, with no human data needed.

The details:

- The system uses one model in two roles: a bug injector that breaks code and a solver that fixes it, with both roles learning together.
- On the SWE-bench Verified coding benchmark, the approach jumped 10+ points over its starting checkpoint and beat human-data baselines.
- The method uses “higher-order bugs” from failed fix attempts, creating an evolving learning curriculum that scales with the model’s skill level.

Why it matters: Most coding agents today learn from human-curated GitHub issues, a finite resource that limits improvement. Meta’s self-play approach sidesteps that bottleneck by letting models generate infinite training data from codebases, applying to software engineering a path similar to the one that made Google’s AlphaZero superhuman at chess.
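To make the two-role loop concrete, here is a toy sketch. It is not Meta’s implementation: no model is trained, random choice stands in for the model in both roles, and every name (`CANDIDATES`, `inject_bug`, `solve`, `self_play`) is hypothetical. It only illustrates the shape of the idea, in which an injector breaks code, a solver tries fixes against tests, and failed fixes are recycled as new bugs:

```python
import random

# Toy "codebase": one correct implementation of abs_diff(a, b) plus buggy variants.
CANDIDATES = {
    "correct": lambda a, b: abs(a - b),
    "drop_abs": lambda a, b: a - b,
    "wrong_op": lambda a, b: abs(a + b),
    "partial_abs": lambda a, b: abs(a) - b,
}
BUGS = [name for name in CANDIDATES if name != "correct"]
TESTS = [((5, 3), 2), ((3, 5), 2), ((-2, 4), 6)]


def run_tests(name: str) -> bool:
    """Return True if the named implementation passes every test case."""
    fn = CANDIDATES[name]
    return all(fn(*args) == want for args, want in TESTS)


def inject_bug(rng: random.Random) -> str:
    """Injector role: pick a broken variant (stand-in for a model writing a bug)."""
    return rng.choice(BUGS)


def solve(buggy: str, rng: random.Random, max_attempts: int = 3):
    """Solver role: try replacements until the tests pass.

    Returns (fix_or_None, failed_attempts).
    """
    options = [name for name in CANDIDATES if name != buggy]
    failed = []
    for _ in range(max_attempts):
        attempt = rng.choice(options)
        if run_tests(attempt):
            return attempt, failed
        failed.append(attempt)
    return None, failed


def self_play(rounds: int, seed: int = 0) -> int:
    """Run the injector/solver loop and return how many rounds were solved."""
    rng = random.Random(seed)
    curriculum = []  # failed fixes, reused as "higher-order" bugs
    solved = 0
    for _ in range(rounds):
        buggy = curriculum.pop() if curriculum else inject_bug(rng)
        fix, failed = solve(buggy, rng)
        curriculum.extend(failed)  # failed attempts become future tasks
        solved += fix is not None
    return solved


if __name__ == "__main__":
    print(f"solved {self_play(rounds=10)} of 10 rounds")
```

In the real method, the outcome of each round would drive a learning update for both roles. This sketch stops at counting solved rounds.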

QUICK HITS

🛠️ Trending AI Tools

- ⚡ Semrush One – Measure, optimize, and grow visibility from Google to ChatGPT, Perplexity, and more*
- 🧑‍💻 MiniMax 2.1 – Powerful capabilities for programming and app development
- ⚙️ Antigravity – Google’s agentic AI development platform
- 🤖 GLM-4.7 – Z.ai’s new SOTA open-source model

*Sponsored Listing

📰 Everything else in AI today

Anthropic’s Claude Code creator Boris Cherny revealed that in the last month, “100% of contributions” to the agentic tool were written by Claude Code itself.

OpenAI founding member Andrej Karpathy posted that he has “never felt this much behind as a programmer” and that “the profession is being dramatically refactored.”

SimilarWeb shared statistics on AI web traffic for 2025, with ChatGPT’s share falling from 87% to 68% and Google’s Gemini tripling its share to 18% in the past year.

Liquid AI released LFM2-2.6B-Exp, a tiny experimental model for on-device use with strong performance in math, instruction following, and knowledge benchmarks.

Chinese regulators issued new draft rules to oversee AI services that simulate human personalities, requiring safety monitoring for addiction and emotional dependence.

Epoch AI published results from mathematics benchmark testing on open-weights Chinese models, finding them to be around 7 months behind frontier models.

COMMUNITY

🤝 Community AI workflows

Every newsletter, we showcase how a reader is using AI to work smarter, save time, or make life easier.

Today’s workflow comes from reader Rachell W. in Kansas City, KS:

“I’ve built two passion projects by vibe-coding in Cursor, but I quickly learned I hate marketing — not the strategy, the constant execution. So instead of forcing it, I built a system. Using Airtable as the backbone, with ChatGPT and Airtable’s AI fields, I designed an automated content engine aligned to my brand guidelines. It generates static posts, carousels, reels, and captions — all stored in a structured social media bank.

Will it work? I don’t know yet. But I’ve built the resources to try: a year’s worth of planned content, with a daily prompt telling me exactly what to post and where — so I can focus on building while the system handles the rest.”

How do you use AI? Tell us here.

🎓 Highlights: News, Guides & Events

- Read our last AI newsletter: Nvidia strikes largest deal in company history
- Read our last Tech newsletter: OpenAI eyes $830B mega valuation
- Read our last Robotics newsletter: World’s smallest autonomous robots
- Today’s AI tool guide: Automate pre-meeting research with Perplexity
- Watch our last live workshop: NotebookLM for Work

See you soon,

Rowan, Joey, Zach, Shubham, and Jennifer — the humans behind The Rundown