You Don’t Need GPT-5 for Agents: The 1.2B Model That Beats Giants

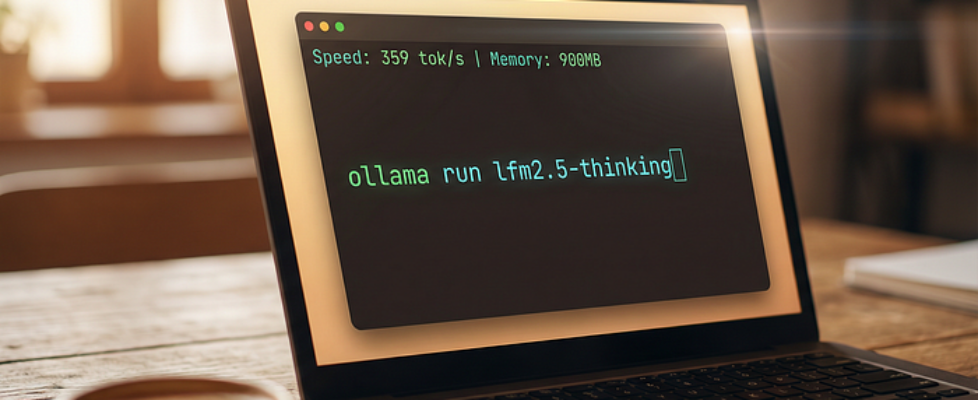

Author(s): MohamedAbdelmenem

Originally published on Towards AI.

Forget GPT-5 for agent tasks. LFM 2.5 runs at 359 tokens/sec in 900MB. Here’s why it works and how to fine-tune it for your use case.

1400x overtraining. 900MB memory. 359 tokens/sec. Three lines of code. Zero cloud round trips. Infinite agents. Start here.

Made by the author.

The article discusses the performance of the Liquid LFM 2.5 AI model, emphasizing its efficiency in tasks that normally require significantly larger models. It highlights how this smaller model surpassed traditional scaling laws, achieving faster inference speeds and lower operational costs, thus reshaping expectations in AI economics. The author argues that speed and task efficiency are now more critical than raw size or training costs, signaling a transformative shift in how AI agents are developed and deployed in real-world applications.

Read the full blog for free on Medium.