With World Models, Let’s Walk Before We Run

An AlphaGo Zero-style upgrade for LLMs, which are now at the AlphaGo stage

World models are a frontier for AI research labs that are all the rage. The thinking is, to get to human level intelligence we need to put AI bots in a world like the one that humans live in. In an interview, DeepMind founder Demis Hassabis implied that bots in a world simulation could develop their own language, and that this might be the way to upgrade current LLMs in the same way that AlphaGo Zero upgraded AlphaGo. In particular, AlphaGo ingested a number of human games to start its training, but AlphaGo Zero basically played against itself randomly to learn how to play, with absolutely no knowledge of how humans strategize or have played in the past. Having bots in a world simulation that spontaneously come up with their own language with no knowledge of human language is quite a feat. It has not happened yet, but Hassabis seems to think cracking that challenge could give us an AGI or something greater. But does it have to be so hard as having a 3D world with realistic physics and perhaps other realistic things like chemistry?

Towards the beginning of 2025, I was on a kick to improve LLMs. I was like, “How hard can this be?” I used my own consumer PC with a gaming GPU to do some speculative research. The paper, “Evolving LLMs Through Text-Based Self-Play: Achieving Emergent Performance”, is up on viXra. Not exactly a noteworthy journal. I used AI heavily to help me write the paper. It hypothesizes that LLMs can be put into competition to enable self-improvement, and that this can be done through LLM controlled bots in a text-only world. It may have worked to some extent, but we need more rigor and better compute to test it further, ideally from an independent researcher or group.

Earlier this month, I saw an interview of Hassabis that was recorded the month before, in December of 2025, talking about World Models. A World Model would very faithfully simulate the physics and potentially chemistry and other functions of the world that happen in nature. It might never be perfect, and it may be just a room or a city of the world that gets simulated, rather than the whole thing. Simulated physical robots or people are put into the world, and they either have to interact with it at a high level because they’re already trained (or learning how to do things), or best case it’s an AlphaGo Zero scenario: robots or humanoids, simulated in the world model, living as some sort of pre-knowledge, pre-language creatures, that over thousands, millions, and even billions of random movements and interactions, could learn how to survive, thrive, and ultimately develop language, machines, systems, and more than all of human knowledge, from nothing. Maybe the incentive is survival and reproduction, similar to most animals, at least at an instinctual level. But how the agents in a World Model are incentivized toward language and knowledge were not really touched on by Hassabis. The down side of course is that any language the bots develop is unlikely to be English or any other known human language, so once we see a discernable language we’ll need to watch the bots carefully and observe their context in order to translate the language.

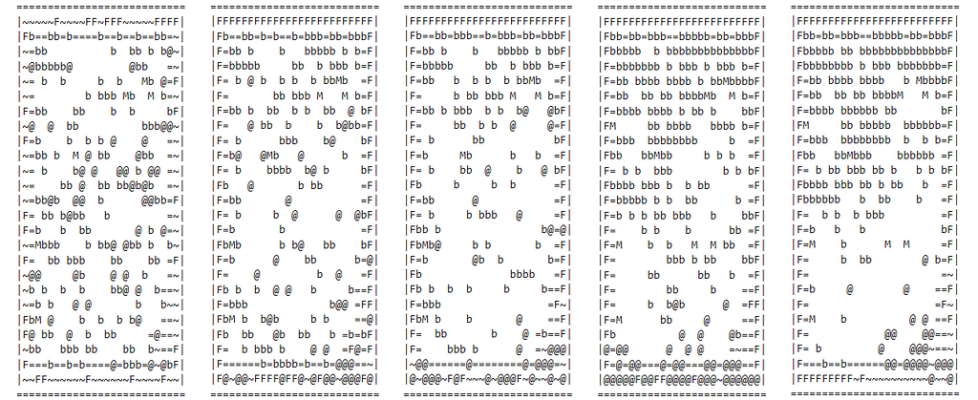

All of this is an intro to say: my previous work on a text-only world for LLM bots seemed pretty clearly tied in to these world models. Why not just create a much simpler world model where bots can see around themselves, either in a top down view or where the world appears as ASCII art, like ASCII Doom, if you’ve ever heard of it? This whole text-only world stuff goes back to my late 90s, early 00s introduction to text-based games called MUDs. That rabbit hole largely started with an obscure (even for a MUD) game called Promised Land. I wasn’t a very good player of these early forms of MMORPGs, but the text only concept really stuck with me.

I don’t know if major AI labs are looking at improving LLMs through competition in a text-only world, or if they are looking at allowing language and thereby language models to emerge spontaneously through bot interaction in a text-only world, but I believe they should be. If you think artificial intelligence can be improved through World Models, and the best form of AI right now seems to be LLMs, then why wouldn’t you allow LLMs to interact with each other in a text-only world? A text-only world is the simplest World Model I can think of. Text is the native way for LLMs to understand anything. This is unlike us, who have a world we ingest through our senses, but on top of that we layer in language and eventually text. LLMs start with text, and creating a world out of text is very easy for us, and has already been done many times. I think there’s a been a bit of dabbling regarding this, by researchers. I believe it should be explored wholeheartedly.

More complicated knowledge might require a more complicated world, but let’s start small to see if there’s a chance of scaling up. Here’s the world I’ve created, with the help of AI, as it stands (the layout of the world and placement of objects is largely random each time):

Map key:

@ = a bot that starts with a blank slate brain, but hopefully can slowly “learn” to survive, reproduce, eat efficiently, and communicate with other bots

b = berries, food for bots to eat

w = wheat, higher energy food for bots to eat

~ = water

= = shoreline

f = a fish, which is high energy food for bots to eat

M = a woolly mammoth, which bots can kill for high energy food, but only if they work together

Here’s the early thinking I had for this concept, before I had working code for it. Feel free to use any of these ideas.

I was running my new world like crazy, making tweaks to try to incentivize the emergent communication that I want to arise from the bots out of nothing.

The bots got better at surviving, reproducing, and even thriving over time… but there’s no discernable intelligible communication yet. You can run this yourself, on Kaggle. I want you to. (the full code is at the bottom of the article) You have to select the P100 as your accelerator for this to work, within the right-side menu:

You get 30 free hours of this P100 use per week. I upgraded to 45 hours per week for some monthly fee, but it still seems not nearly enough. There’s a ton of tweaking on this program to be done to hopefully get us to emergent communication faster, to balance the population while still balancing reproduction, tweaking genetic variation, changing the structure of the bots’ neural nets, there’s just so much to balance so that communication is incentivized and coxed into evolution as fast as possible. Better hardware would help a ton, better architecture would help, scale of the world (smaller or bigger could help, this is a 2D world, but 3D could help) or scale of bots or anything else could help. So much to work on here. Please work on this all you want. It’s all released into the public domain for anyone to use, build upon, change, copy, literally whatever you want to do with it, as long as you don’t build an AGI that hurts or kills humans.

Here’s the code for the project, 100% AI produced. There are easily a dozen directions to go with this project, I hope you take a stab at it!:

import os

# FORCE SYNC DEBUGGING

os.environ['CUDA_LAUNCH_BLOCKING'] = "1"

import torch

import torch.nn as nn

import numpy as np

import random

import glob

import math

import shutil

import time

from torch.func import functional_call, vmap

# ==============================================================================

# 0. GLOBAL CONFIG & HELPERS

# ==============================================================================

DEVICE = torch.device("cuda" if torch.cuda.is_available() else "cpu")

print(f">> RUNNING ON: {DEVICE}")

VOCAB_SIZE = 95

ASCII_OFFSET = 32

SILENCE_TOKEN = 0

def decode_output(indices):

valid_indices = np.clip(indices, 0, VOCAB_SIZE - 1)

return "".join([chr(int(idx) + ASCII_OFFSET) for idx in valid_indices])

# --- CONFIG SCALED FOR 25x25 ---

START_FRESH = True

GRID_SIZE = 25

POPULATION_CAP = 65

BUFFER_SIZE = 50

MAX_AGENTS = POPULATION_CAP + BUFFER_SIZE

MAX_BIRTHS_PER_TICK = 5

MAX_AGE_BASE = 2500

MAX_AGE_VARIANCE = 500

RANDOM_MOVE_CHANCE = 0.05

LOG_FREQ = 1000

REAPER_FREQ = 3000

VISION_RADIUS = 4

VISION_WIDTH = (VISION_RADIUS * 2) + 1

VISION_AREA = VISION_WIDTH * VISION_WIDTH

# Output size: 7 Actions (0-6)

ACTION_SPACE = 7

SENTENCE_LEN = 10

# ==============================================================================

# 1. BRAIN

# ==============================================================================

class AgentBrain(nn.Module):

def __init__(self, vocab_size=VOCAB_SIZE, embed_dim=128, num_heads=4, num_layers=2):

super().__init__()

self.embedding = nn.Embedding(vocab_size, embed_dim)

self.pos_encoding = nn.Parameter(torch.randn(1, 256, embed_dim))

encoder_layer = nn.TransformerEncoderLayer(d_model=embed_dim, nhead=num_heads, batch_first=True, dim_feedforward=256, dropout=0.0)

self.transformer = nn.TransformerEncoder(encoder_layer, num_layers=num_layers)

self.action_head = nn.Linear(embed_dim, ACTION_SPACE)

self.vocal_head = nn.Linear(embed_dim, SENTENCE_LEN * vocab_size)

def forward(self, input_ids):

input_ids = torch.clamp(input_ids, 0, VOCAB_SIZE - 1)

seq_len = input_ids.size(1)

x = self.embedding(input_ids) + self.pos_encoding[:, :seq_len, :]

x = self.transformer(x)

latent = x[:, 0, :]

action_logits = self.action_head(latent)

vocal_logits = self.vocal_head(latent).view(-1, SENTENCE_LEN, VOCAB_SIZE)

return action_logits, vocal_logits

# ==============================================================================

# 2. POP MANAGER

# ==============================================================================

class PopulationManager:

def __init__(self, size=MAX_AGENTS):

self.template = AgentBrain().to(DEVICE)

self.params = {}

for name, param in self.template.named_parameters():

self.params[name] = param.unsqueeze(0).repeat(size, *([1]*param.ndim)).detach().requires_grad_(False)

self.max_size = size

def load_brain(self, slot_idx, state_dict):

if slot_idx >= self.max_size: return

for name, tensor in state_dict.items():

if name in self.params: self.params[name][slot_idx].copy_(tensor)

def clone_brain(self, source_idx, target_idx):

for name in self.params:

self.params[name][target_idx].copy_(self.params[name][source_idx])

def mutate_batch(self, target_indices, parent_indices, lineages, global_decay):

if len(target_indices) == 0: return

probs = torch.rand(len(target_indices), device=DEVICE)

bases = torch.full_like(probs, 0.01)

bases = torch.where((probs >= 0.25) & (probs < 0.50), torch.tensor(0.05, device=DEVICE), bases)

bases = torch.where((probs >= 0.50) & (probs < 0.75), torch.tensor(0.10, device=DEVICE), bases)

bases = torch.where(probs >= 0.75, torch.tensor(0.20, device=DEVICE), bases)

decays = torch.clamp(1.0 / (2.0 ** lineages), min=0.1)

final_strengths = bases * decays * global_decay

for name in self.params:

parent_w = self.params[name][parent_indices]

self.params[name][target_indices].copy_(parent_w)

view_shape = [len(target_indices)] + [1] * (self.params[name].ndim - 1)

noise = torch.randn_like(parent_w) * final_strengths.view(view_shape)

self.params[name][target_indices].add_(noise)

def get_brain_dict(self, idx):

return {name: self.params[name][idx].clone() for name in self.params}

def infer(self, active_indices, input_batch):

if len(active_indices) == 0: return None, None

input_batch = torch.clamp(input_batch, 0, VOCAB_SIZE - 1)

active_params = {name: p[active_indices] for name, p in self.params.items()}

def call_single(params, inp):

return functional_call(self.template, params, (inp.unsqueeze(0),))

act, voc = vmap(call_single, randomness="different")(active_params, input_batch)

return act.squeeze(1), voc.squeeze(1)

# ==============================================================================

# 3. WORLD ENGINE

# ==============================================================================

class WorldEngine:

def __init__(self, size=GRID_SIZE):

self.size = size

self.pad = VISION_RADIUS

self.grid_cpu = np.full((size, size), ' ', dtype=str)

self.static_map_gpu = torch.full((size + 2*self.pad, size + 2*self.pad), 35, dtype=torch.long, device=DEVICE)

self.res_grid_gpu = torch.zeros((size, size), dtype=torch.long, device=DEVICE)

self.food_multiplier = 1.0

self.high_pop_streak = 0

self.low_pop_streak = 0

self.generate_new_map()

def generate_new_map(self):

self.grid_cpu = np.full((self.size, self.size), '~', dtype=str)

target_land = int(self.size**2 * 0.85)

x, y = self.size//2, self.size//2

count = 0; attempts = 0

while count < target_land and attempts < self.size**2*10:

attempts+=1

if self.grid_cpu[x,y] == '~': self.grid_cpu[x,y] = ' '; count+=1

moves = [(0,1),(0,-1),(1,0),(-1,0)]

dx, dy = random.choice(moves)

x = max(1, min(self.size-2, x+dx)); y = max(1, min(self.size-2, y+dy))

new_g = self.grid_cpu.copy()

for r in range(self.size):

for c in range(self.size):

if self.grid_cpu[r,c] == ' ':

has_w = False

for dx in [-1,0,1]:

for dy in [-1,0,1]:

nx, ny = r+dx, c+dy

if 0<=nx<self.size and 0<=ny<self.size and self.grid_cpu[nx,ny] == '~': has_w = True

if has_w: new_g[r,c] = '='

self.grid_cpu = new_g

temp = np.array([[ord(c) for c in row] for row in self.grid_cpu], dtype=np.int32)

self.static_map_gpu.fill_(35)

self.static_map_gpu[self.pad:self.pad+self.size, self.pad:self.pad+self.size] = torch.tensor(temp, device=DEVICE)

self.res_grid_gpu.fill_(0)

def update_difficulty(self, pop):

# 1. Overpopulation Streak: 500 Ticks

if pop >= (POPULATION_CAP * 0.9):

self.high_pop_streak += 1

self.low_pop_streak = 0

if self.high_pop_streak >= 500: # Changed from 300 to 500

self.food_multiplier = max(0.1, self.food_multiplier * 0.95)

self.high_pop_streak = 0

print(f">> ⚠️ OVERPOPULATION STREAK. Lowering Food to {self.food_multiplier:.4f}")

# 2. Famine Streak: 100 Ticks

elif pop <= (POPULATION_CAP * 0.3):

self.low_pop_streak += 1

self.high_pop_streak = 0

if self.low_pop_streak >= 100: # Changed from 300 to 100

self.food_multiplier = min(3.0, self.food_multiplier * 1.10)

self.low_pop_streak = 0

print(f">> 🆘 FAMINE STREAK. Boosting Food to {self.food_multiplier:.4f}")

else:

self.high_pop_streak = 0

self.low_pop_streak = 0

def respawn_resources(self):

target_counts = {

1: int((self.size**2)*0.25*self.food_multiplier),

2: int((self.size**2)*0.01*self.food_multiplier),

5: int((self.size**2)*0.15*self.food_multiplier)

}

inner = self.static_map_gpu[self.pad:self.pad+self.size, self.pad:self.pad+self.size]

is_water = (inner == 126)

for tid, targ in target_counts.items():

curr = (self.res_grid_gpu == tid).sum()

deficit = targ - curr

if deficit > 0:

count = int(deficit * 1.5) + 10

rx = torch.randint(0, self.size, (count,), device=DEVICE)

ry = torch.randint(0, self.size, (count,), device=DEVICE)

curr_res = self.res_grid_gpu[rx, ry]

empty_mask = (curr_res == 0)

terr_val = is_water[rx, ry]

terr_mask = (terr_val if tid == 5 else ~terr_val)

valid = empty_mask & terr_mask

valid_x = rx[valid]; valid_y = ry[valid]

take = min(len(valid_x), deficit)

if take > 0:

self.res_grid_gpu[valid_x[:take], valid_y[:take]] = tid

# ==============================================================================

# 4. SIMULATION LOOP

# ==============================================================================

def render_ascii_map(world, agent_pos_list):

vis = world.grid_cpu.copy()

res = world.res_grid_gpu.cpu().numpy()

vis[res==1] = 'b'; vis[res==2] = 'M';

vis[res==5] = 'F'

for r, c in agent_pos_list: vis[r, c] = '@'

print("n" + "="*27)

for row in vis: print("|" + "".join(row) + "|")

print("="*27 + "n")

def run_simulation():

if not os.path.exists("checkpoints"): os.makedirs("checkpoints")

if START_FRESH:

for f in glob.glob("checkpoints/tick_*.pth"): os.remove(f)

world = WorldEngine()

pop_manager = PopulationManager()

agent_stats = torch.zeros((MAX_AGENTS, 5), dtype=torch.float32, device=DEVICE)

agent_pos = torch.zeros((MAX_AGENTS, 2), dtype=torch.long, device=DEVICE)

alive_mask = torch.zeros(MAX_AGENTS, dtype=torch.bool, device=DEVICE)

audio_inbox = torch.zeros((MAX_AGENTS, 1, SENTENCE_LEN), dtype=torch.long, device=DEVICE)

next_uid = 0

hall_of_fame = []

global_mutation_decay = 1.0

print("Genesis..."); world.respawn_resources()

def spawn_batch(count):

nonlocal next_uid

free_indices = torch.nonzero(~alive_mask).squeeze(1)

count = min(count, len(free_indices))

if count == 0: return

idx = free_indices[:count]

pos_list = []

for _ in range(count):

while True:

rx, ry = random.randint(0, GRID_SIZE-1), random.randint(0, GRID_SIZE-1)

if world.grid_cpu[rx, ry] != '~':

pos_list.append([rx, ry]); break

agent_pos[idx] = torch.tensor(pos_list, device=DEVICE)

agent_stats[idx, 0] = torch.rand(count, device=DEVICE) * 150.0 + 150.0

agent_stats[idx, 1] = torch.rand(count, device=DEVICE) * 50.0

r_var = (torch.rand(count, device=DEVICE) * (MAX_AGE_VARIANCE * 2)) - MAX_AGE_VARIANCE

agent_stats[idx, 4] = MAX_AGE_BASE + r_var

agent_stats[idx, 2] = idx.float()

agent_stats[idx, 3] = 0.0

next_uid += count

alive_mask[idx] = True

spawn_batch(50)

INPUT_SIZE = 28 + VISION_AREA

batch_tensor = torch.zeros((MAX_AGENTS, INPUT_SIZE), dtype=torch.long, device=DEVICE)

move_deltas = torch.tensor([[0,0],[-1,0],[1,0],[0,-1],[0,1],[0,0],[0,0]], device=DEVICE)

rows = torch.arange(VISION_WIDTH, device=DEVICE) - VISION_RADIUS

cols = torch.arange(VISION_WIDTH, device=DEVICE) - VISION_RADIUS

gr, gc = torch.meshgrid(rows, cols, indexing='ij')

offsets = torch.stack([gr.flatten(), gc.flatten()], dim=1)

last_time = time.time()

stats_history = {"move": 0, "eat": 0, "fish": 0, "stay": 0}

for tick in range(10000000):

pop_count = alive_mask.sum().item()

world.update_difficulty(pop_count)

if tick > 0 and tick % REAPER_FREQ == 0 and pop_count > 10:

active_idx = torch.nonzero(alive_mask).squeeze(1)

scores = agent_stats[active_idx, 0] + (agent_stats[active_idx, 1] * 0.5)

sorted_local = torch.argsort(scores)

sorted_global = active_idx[sorted_local]

cutoff = max(1, int(len(active_idx) * 0.20))

worst = sorted_global[:cutoff]; best = sorted_global[-cutoff:]

print(f"n>> 🧬 CLONING EVENT (Tick {tick})")

for i in range(cutoff):

source = best[i].item(); target = worst[i].item()

pop_manager.clone_brain(source, target)

agent_stats[target, 0] = 200.0; agent_stats[target, 1] = 0.0; agent_stats[target, 3] += 1.0

r_var = (random.random() * MAX_AGE_VARIANCE * 2) - MAX_AGE_VARIANCE

agent_stats[target, 4] = MAX_AGE_BASE + r_var

print(" >> Genetic Overwrite Complete.n")

if pop_count <= 5:

print(">> EXTINCTION RESET");

world.food_multiplier = 1.0

world.generate_new_map(); world.respawn_resources()

alive_mask.fill_(False)

spawn_batch(50)

continue

world.respawn_resources()

active_indices = torch.nonzero(alive_mask).squeeze(1)

if len(active_indices) == 0: continue

agent_stats[active_indices, 1] += 1

batch_tensor.fill_(0)

batch_tensor[active_indices, 0] = torch.clamp((agent_stats[active_indices, 0] / 20).long(), 0, 9) + 16

batch_tensor[active_indices, 1:11] = audio_inbox[active_indices, 0, :]

current_map = world.static_map_gpu.clone()

res_mask = world.res_grid_gpu > 0

res_vals = world.res_grid_gpu[res_mask]

res_coords = torch.nonzero(res_mask)

lut = torch.zeros(6, dtype=torch.long, device=DEVICE)

lut[1]=98; lut[2]=77; lut[5]=70

current_map[res_coords[:,0]+world.pad, res_coords[:,1]+world.pad] = lut[res_vals]

pos = agent_pos[active_indices]

sample_coords = pos.unsqueeze(1) + offsets.unsqueeze(0) + world.pad

map_w = GRID_SIZE + (2 * world.pad)

flat_idx = ((sample_coords[:, :, 0] * map_w) + sample_coords[:, :, 1]).clamp(0, current_map.numel() - 1)

vision = torch.take(current_map, flat_idx)

batch_tensor[active_indices, 28:] = torch.clamp(vision - ASCII_OFFSET, 0, VOCAB_SIZE-1)

audio_inbox.fill_(0)

act_out, voc_out = pop_manager.infer(active_indices, batch_tensor[active_indices])

if act_out is not None:

actions = torch.argmax(act_out, dim=-1)

vocals = torch.argmax(voc_out, dim=-1)

jitter_mask = torch.rand(len(actions), device=DEVICE) < RANDOM_MOVE_CHANCE

random_actions = torch.randint(1, 7, (len(actions),), device=DEVICE)

actions = torch.where(jitter_mask, random_actions, actions)

is_talking = (vocals.sum(dim=1) > 0)

if is_talking.any():

talkers = active_indices[is_talking]

msgs = vocals[is_talking]

t_pos = agent_pos[talkers].float()

all_pos = agent_pos[active_indices].float()

dists = torch.cdist(t_pos, all_pos)

hear_mask = (dists <= VISION_RADIUS) & (dists > 0)

for i, talker_idx in enumerate(talkers):

listeners = active_indices[hear_mask[i]]

if len(listeners) > 0:

msg = msgs[i].view(1, 1, -1).expand(len(listeners), 1, -1)

audio_inbox[listeners] = msg

move_mask = (actions >= 1) & (actions <= 4)

eat_mask = (actions == 5)

fish_mask = (actions == 6)

stay_mask = (actions == 0)

stats_history["move"] += move_mask.sum().item()

stats_history["eat"] += eat_mask.sum().item()

stats_history["fish"] += fish_mask.sum().item()

stats_history["stay"] += stay_mask.sum().item()

deltas = move_deltas[actions]

proposed = pos + deltas

# --- SAFE BOUNDS CHECK ---

in_bounds = (proposed[:, 0] >= 0) & (proposed[:, 0] < GRID_SIZE) &

(proposed[:, 1] >= 0) & (proposed[:, 1] < GRID_SIZE)

check_x = proposed[:, 0] + world.pad

check_y = proposed[:, 1] + world.pad

terr = current_map[check_x, check_y]

valid_move = in_bounds & (terr != 126)

final_pos = torch.where(valid_move.unsqueeze(1), proposed, pos)

agent_pos[active_indices] = final_pos

r_vals = world.res_grid_gpu[final_pos[:,0], final_pos[:,1]]

gain = (eat_mask & (r_vals == 1)).float() * 25.0

if fish_mask.any():

f_idx = torch.nonzero(fish_mask).squeeze(1)

if len(f_idx) > 0:

my_pos = final_pos[f_idx]

check_offsets = torch.tensor([[-1,0],[1,0],[0,-1],[0,1]], device=DEVICE)

can_fish = torch.zeros(len(f_idx), dtype=torch.bool, device=DEVICE)

for off in check_offsets:

target = my_pos + off

tx = target[:,0].clamp(0, GRID_SIZE-1) + world.pad

ty = target[:,1].clamp(0, GRID_SIZE-1) + world.pad

is_water = (world.static_map_gpu[tx, ty] == 126)

rx = tx - world.pad

ry = ty - world.pad

has_fish = (world.res_grid_gpu[rx, ry] == 5)

success = is_water & has_fish

if success.any():

can_fish = can_fish | success

world.res_grid_gpu[rx[success], ry[success]] = 0

gain[f_idx] += can_fish.float() * 40.0

agent_stats[active_indices, 0] = torch.clamp(agent_stats[active_indices, 0] + gain, max=2500.0)

eaten_land = (eat_mask & (r_vals == 1) & (gain > 0))

if eaten_land.any():

p = final_pos[eaten_land]

world.res_grid_gpu[p[:,0], p[:,1]] = 0

pop_tax = 0.1 + max(0, (pop_count - POPULATION_CAP) * 0.05)

agent_stats[active_indices, 0] -= pop_tax

died = ((agent_stats[:, 0] <= 0) | (agent_stats[:, 1] >= agent_stats[:, 4])) & alive_mask

if died.any(): alive_mask[died] = False

births = (agent_stats[:, 0] > 120) & alive_mask

if births.any():

ready = torch.nonzero(births).squeeze(1)

mask = torch.rand(len(ready), device=DEVICE) > 0.2

parents = ready[mask]

if len(parents) > 0:

current_pop = alive_mask.sum().item()

space = POPULATION_CAP - current_pop

free = torch.nonzero(~alive_mask).squeeze(1)

num = min(len(parents), len(free), space, MAX_BIRTHS_PER_TICK)

if num > 0:

p = parents[:num]; c = free[:num]

agent_stats[p, 0] -= 80

agent_stats[c, 0] = 80.0; agent_stats[c, 1] = 0.0; agent_stats[c, 2] = c.float()

agent_stats[c, 3] = agent_stats[p, 3] + 1

r_var = (torch.rand(num, device=DEVICE) * MAX_AGE_VARIANCE * 2) - MAX_AGE_VARIANCE

agent_stats[c, 4] = MAX_AGE_BASE + r_var

agent_pos[c] = agent_pos[p]

alive_mask[c] = True

pop_manager.mutate_batch(c, p, agent_stats[p, 3], global_mutation_decay)

if tick % LOG_FREQ == 0:

cur = time.time(); tps = LOG_FREQ/(cur-last_time+1e-9); last_time=cur

# --- CRITICAL STABILITY FIX ---

current_active_indices = torch.nonzero(alive_mask).squeeze(1)

active = agent_stats[current_active_indices]

total = sum(stats_history.values()) + 1

mv = (stats_history['move']/total)*100

eat = (stats_history['eat']/total)*100

fish = (stats_history['fish']/total)*100

st = (stats_history['stay']/total)*100

stats_history = {k:0 for k in stats_history}

if len(active) > 0:

avg = active[:,0].mean().item()

ma = active[:,1].max().item()

scores = active[:, 0] + (active[:, 1] * 0.5)

if len(scores) > 1:

s_cpu = scores.cpu().numpy()

top_k = np.argsort(s_cpu)[::-1][:300]

active_cpu = current_active_indices.cpu().numpy()

best_global = active_cpu[top_k]

hall_of_fame = [pop_manager.get_brain_dict(i) for i in best_global]

else:

avg = 0; ma = 0

txt = "SILENCE"

if is_talking.any() and is_talking.sum() > 0:

txt = decode_output(vocals[torch.argmax(is_talking.float())].cpu().numpy())

print(f"Tick {tick}|Pop {pop_count}|E {avg:.1f}|Age {ma:.0f}|TPS {tps:.0f}|Food {world.food_multiplier:.4f}|Voc '{txt}'")

print(f"[Act: Move {mv:.1f}% | Eat {eat:.1f}% | Fish {fish:.1f}% | Stay {st:.1f}%]")

apos = agent_pos[alive_mask].cpu().numpy()

render_ascii_map(world, apos)

run_simulation()

With World Models, Let’s Walk Before We Run was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.