Who Do Autonomous Agents Answer To? The Identity & Governance Problem

This is Part 2 of a two-part series on Agentic Identity.

Part 1: Identity Management for Agentic AI: Making Authentication & Authorization Digestible

https://medium.com/towards-artificial-intelligence/identity-management-for-agentic-ai-making-authentication-authorization-digestible-0fc5bb212862

TL;DR

- Agentic AI challenges traditional IAM by introducing autonomous actors that can act on behalf of humans or organizations.

- Identity is no longer a single ID; it is rich metadata, context, and trust.

- Delegation, recursive access, and dynamic tool discovery introduce new security and governance complexities.

- Scalable human oversight will require AI-assisted, risk-based governance to prevent consent fatigue and bottlenecks.

Introduction

Today’s IAM systems assume humans are at the center: log in, consent, and act. But agentic AI (autonomous systems capable of planning, acting, and interacting) completely breaks this assumption.

As the recent whitepaper on agentic AI identity management (arXiv:2510.25819) makes clear, identity for agents is far more than a username or token. Delegation, dynamic tool access, and continuous autonomous behavior force a rethinking of authentication, authorization, and governance.

This article explores the key challenges in building secure, scalable, and trustworthy agentic identity systems.

1. Agent Identity: Beyond a Simple ID

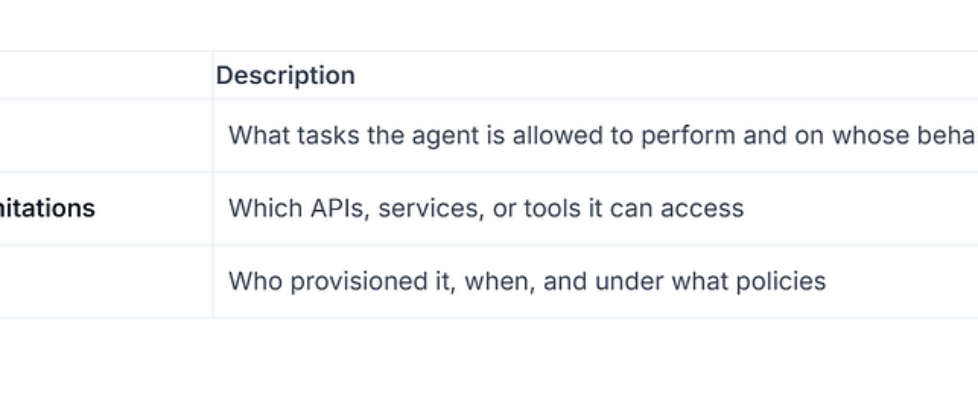

Traditional identity is simple: an identifier that maps to a person. For agents, identity must carry context-rich metadata, including:

Without rich metadata, we cannot reason about risk or enforce nuanced policies for autonomous agents.

Current IAM systems are largely static and human-centric, making them ill-suited to handle dynamic, autonomous agents. Future identity models must be:

- Extensible — supporting new attributes as agent capabilities evolve

- Verifiable — cryptographically provable and auditable

- Machine-readable — enabling automated policy evaluation and enforcement
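To make these three properties concrete, here is a minimal Python sketch of an agent identity record. The field names (agent_id, owner, capabilities, attributes) are illustrative assumptions, not a schema from the whitepaper, and HMAC with a shared key stands in for the asymmetric signatures a real deployment would more likely use.

```python
import hashlib
import hmac
import json
from dataclasses import asdict, dataclass, field


@dataclass
class AgentIdentity:
    # All field names are hypothetical, chosen for illustration only.
    agent_id: str
    owner: str                       # human or organization the agent acts for
    capabilities: list[str]
    # Extensible: new attributes can be added without changing the schema.
    attributes: dict[str, str] = field(default_factory=dict)

    def canonical_bytes(self) -> bytes:
        # Machine-readable: sorted keys give a stable JSON serialization.
        return json.dumps(asdict(self), sort_keys=True).encode("utf-8")


def sign(identity: AgentIdentity, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag over the identity's canonical form."""
    return hmac.new(key, identity.canonical_bytes(), hashlib.sha256).hexdigest()


def verify(identity: AgentIdentity, signature: str, key: bytes) -> bool:
    """Verifiable: any change to the record invalidates the signature."""
    return hmac.compare_digest(sign(identity, key), signature)
```

Signing the record and then appending a capability makes verify return False, which is the property a policy engine would rely on before trusting the metadata.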

2. Delegated Authorization and Transitive Trust

Agents rarely act alone. To operate on behalf of humans or other agents, they require delegated access, which introduces complex trust relationships.

2.1 On-Behalf-Of (OBO) Delegation

OBO delegation lets an agent act for a user. Unlike delegation between humans, agent delegation can be continuous and automated, which raises questions:

- How long should an agent retain access?

- How do we prevent escalation beyond the intended scope?

User → grants delegation → Agent A → acts on downstream APIs
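One way to express this flow is OAuth 2.0 Token Exchange (RFC 8693), where the user's token is the subject and the agent's token is the actor. The sketch below only builds the form-encoded request body. The parameter names and URNs come from RFC 8693, while the function name and the example values are assumptions made for illustration. How long the resulting token lives is decided by the authorization server, not by this request.

```python
from urllib.parse import urlencode

# URNs defined by RFC 8693.
TOKEN_EXCHANGE_GRANT = "urn:ietf:params:oauth:grant-type:token-exchange"
ACCESS_TOKEN_TYPE = "urn:ietf:params:oauth:token-type:access_token"


def build_obo_request(user_token: str, agent_token: str,
                      audience: str, scopes: list[str]) -> str:
    """Build a form-encoded body for a token exchange request.

    build_obo_request is a hypothetical helper, not part of any library.
    """
    params = {
        "grant_type": TOKEN_EXCHANGE_GRANT,
        "subject_token": user_token,           # the user being represented
        "subject_token_type": ACCESS_TOKEN_TYPE,
        "actor_token": agent_token,            # the agent doing the acting
        "actor_token_type": ACCESS_TOKEN_TYPE,
        "audience": audience,                  # the downstream API
        "scope": " ".join(scopes),             # ask only for what is needed
    }
    return urlencode(params)
```

Requesting a narrow scope and a single audience per exchange is one way to limit escalation beyond the intended scope.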

2.2 Recursive Delegation

Recursive delegation occurs when an agent delegates access to another agent, which may further delegate. Each hop amplifies risk:

User → Agent A → Agent B → Agent C → ...

Key concerns:

- Policies must propagate recursively across all delegation hops

- Risk assessments must consider transitive trust

- Accountability becomes harder to trace
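A minimal sketch of how these concerns might be enforced: each hop may only narrow the scopes it received, the chain has a maximum depth, and every grant remembers its parent so accountability can be traced. The Grant class, the delegate function, and the MAX_DEPTH value are all hypothetical, chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional

MAX_DEPTH = 3  # hypothetical policy limit on delegation hops


@dataclass(frozen=True)
class Grant:
    holder: str
    scopes: frozenset[str]
    parent: Optional["Grant"] = None

    @property
    def depth(self) -> int:
        # The original grantor sits at depth 0.
        return 0 if self.parent is None else self.parent.depth + 1

    def chain(self) -> list[str]:
        """Holders from the original grantor down to this grant, for audit."""
        prefix = [] if self.parent is None else self.parent.chain()
        return prefix + [self.holder]


def delegate(parent: Grant, to: str, scopes: set[str]) -> Grant:
    """Create a child grant, enforcing attenuation and a depth limit."""
    if not scopes <= parent.scopes:
        raise PermissionError(
            f"{to} requested scopes beyond what {parent.holder} holds")
    if parent.depth + 1 > MAX_DEPTH:
        raise PermissionError("delegation chain too deep")
    return Grant(to, frozenset(scopes), parent)
```

Because the subset check runs at every hop, Agent C can never hold a scope that the user did not originally grant to Agent A.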

2.3 Revocation Challenge

Revocation is no longer a single action. With multiple layers of delegation:

- Revocation must propagate in real time across all dependent agents

- Failure to revoke correctly can lead to access escalations and systemic vulnerabilities
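As a rough sketch of cascading revocation, the delegation relationships can be kept as a graph so that revoking one grant also revokes everything derived from it. The DelegationGraph class and the grant identifiers are assumptions for illustration. This in-memory version ignores the hard part in practice, which is propagating the change to distributed systems in real time.

```python
from collections import defaultdict


class DelegationGraph:
    """Hypothetical in-memory record of which grant was derived from which."""

    def __init__(self) -> None:
        self._children: dict[str, set[str]] = defaultdict(set)
        self._revoked: set[str] = set()

    def delegate(self, parent: str, child: str) -> None:
        if parent in self._revoked:
            raise PermissionError(f"{parent} is revoked and cannot delegate")
        self._children[parent].add(child)

    def revoke(self, grant: str) -> set[str]:
        """Revoke a grant and all grants derived from it; return their ids."""
        affected: set[str] = set()
        stack = [grant]
        while stack:
            current = stack.pop()
            if current in affected:
                continue  # already visited, guards against cycles
            affected.add(current)
            stack.extend(self._children.get(current, ()))
        self._revoked |= affected
        return affected

    def is_active(self, grant: str) -> bool:
        return grant not in self._revoked
```

Revoking the grant held by Agent A also deactivates the grants that Agent B and Agent C derived from it, while unrelated grants stay active.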

2.4 Deprovisioning and Offboarding

Agents may be ephemeral, cloned, or migrated across systems. Deprovisioning must ensure:

The lifecycle of an agent is complex and dynamic; deprovisioning cannot be treated like a simple human account deletion.

3. Registries and Dynamic Tool Discovery

Unlike humans, agents will dynamically discover and connect to new services and tools:

- Self-provisioning in SaaS applications, APIs, or cloud resources

- Automatic negotiation of capabilities and access

This creates dynamic trust challenges:

IAM is no longer a static permissions model — it becomes a living, adaptive ecosystem.
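One possible building block for this kind of ecosystem is a registry that an agent's runtime consults before using a newly discovered tool. The sketch below is an assumption about how such a check could look, not a design from the whitepaper. ToolRecord, ToolRegistry, and the scope names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolRecord:
    name: str
    publisher: str
    allowed_scopes: frozenset[str]


class ToolRegistry:
    """Hypothetical trusted registry of tools that agents may discover."""

    def __init__(self) -> None:
        self._tools: dict[str, ToolRecord] = {}

    def register(self, record: ToolRecord) -> None:
        self._tools[record.name] = record

    def authorize(self, tool_name: str, requested: set[str]) -> frozenset[str]:
        """Return the scopes an agent may use with a tool.

        Unregistered tools get no scopes at all (deny by default).
        """
        record = self._tools.get(tool_name)
        if record is None:
            return frozenset()
        return record.allowed_scopes & frozenset(requested)
```

The deny-by-default branch is the important design choice here: a tool the registry has never seen yields an empty scope set rather than whatever the agent asked for.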

4. Scalable Human Governance

As autonomous agents proliferate, human oversight faces a bottleneck:

Future governance will require AI-assisted oversight, including:

- ✅ Risk-based auto-approval systems — low-risk actions proceed automatically

- ✅ Adaptive consent policies — policies that evolve based on agent behavior and context

- ✅ Explainable audit trails — human-interpretable logs for accountability
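The three ideas above can be combined in a small sketch: score each requested action, let low-risk actions through, escalate the middle band to a human, and write a readable audit line for every decision. The risk factors, weights, and thresholds below are made-up assumptions for illustration. A real system would derive them from policy and observed agent behavior.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    AUTO_APPROVE = "auto_approve"
    ASK_HUMAN = "ask_human"
    DENY = "deny"


@dataclass(frozen=True)
class ActionRequest:
    agent_id: str
    action: str
    reversible: bool
    touches_sensitive_data: bool
    delegation_depth: int


def risk_score(req: ActionRequest) -> int:
    # Hypothetical weights; higher means riskier.
    score = 0
    if not req.reversible:
        score += 3
    if req.touches_sensitive_data:
        score += 3
    score += min(req.delegation_depth, 3)  # deeper chains add risk, capped
    return score


def decide(req: ActionRequest, audit_log: list[str],
           low: int = 2, high: int = 6) -> Decision:
    """Map a risk score to a decision and record a human-readable reason."""
    score = risk_score(req)
    if score <= low:
        decision = Decision.AUTO_APPROVE
    elif score < high:
        decision = Decision.ASK_HUMAN
    else:
        decision = Decision.DENY
    audit_log.append(
        f"{req.agent_id} requested {req.action!r}: "
        f"risk={score}, decision={decision.value}")
    return decision
```

Only the middle band reaches a person, which is how a scheme like this aims to reduce consent fatigue while still logging every decision.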

Humans must remain in control, but AI must scale governance to match agent autonomy.

Conclusion

Agentic identity is the foundation for trustworthy AI ecosystems. From rich metadata to recursive delegation, dynamic tool access, and scalable governance, the challenges are both immediate and profound.

Addressing them requires rethinking IAM from the ground up:

- Machine-readable, high-dimensional identity attributes

- Transitive trust and delegation-aware policies

- Continuous discovery and validation of agent-accessible resources

- AI-assisted governance to prevent consent fatigue

The future is not just about building smarter agents — it’s about building agents we can trust.

Resources

- Identity Management for Agentic AI (arXiv:2510.25819) — The full whitepaper that inspired this article

- OAuth 2.0 Token Exchange (RFC 8693) — Standard for delegation tokens

- Client-Initiated Backchannel Authentication (CIBA) — Async authentication for decoupled flows

Who Do Autonomous Agents Answer To? The Identity & Governance Problem was originally published in Towards AI on Medium.