When GraphDB Ontologies Break: Exploring Embeddings

Keywords: GraphDB, VectorDB, Ontology, Embedding Vectors.

tl;dr Your GraphDB ontology works perfectly in dev, but then production users write “jogging” and it can’t match “running”! Can embeddings fix this? Let’s explore.

You’re building a recommender system that matches events and people based on interests. You built a graphDB to help with matching, and you’re excited: it passes all the tests and looks ready to launch.

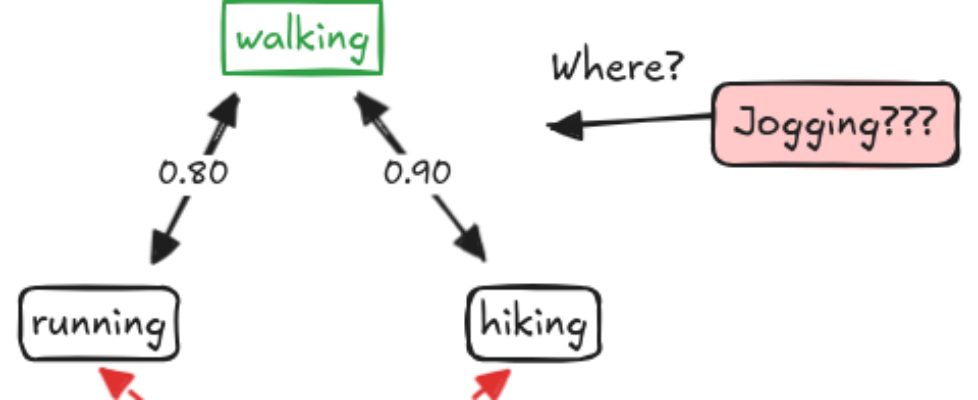

You try it out on a couple of people, and it quickly falls flat on its face. It knows about walking, hiking, and running, but when someone says they like “jogging”, it’s totally useless.

Let’s dive into code. We’ll start with a basic graph, then explore embedding-based approaches using vectorDBs like Weaviate or ChromaDB.

The GraphDB Approach

The common approach is to create an ontology of activities and encode how related they are as a number. For example, if I like running, maybe there’s an 80% chance I’ll like walking (even though I didn’t say so), and if I like hiking, perhaps there’s a 90% chance I’ll like walking. In the other direction, if I like walking, I’m also 90% likely to like hiking.
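Here is a minimal sketch that makes this concrete. It assumes the ontology is stored as a plain Python dict of directed, weighted edges rather than in a real graphDB. The names `INTEREST_GRAPH` and `edge_weight` are hypothetical; the weights are the ones from the example above.

```python
from typing import Dict, Optional

# Hypothetical hand-built ontology: INTEREST_GRAPH[a][b] is the estimated
# probability that someone who likes `a` also likes `b`. Edges are directed.
INTEREST_GRAPH: Dict[str, Dict[str, float]] = {
    "running": {"walking": 0.8},
    "hiking": {"walking": 0.9},
    "walking": {"hiking": 0.9},
}


def edge_weight(graph: Dict[str, Dict[str, float]], src: str, dst: str) -> Optional[float]:
    """Return the curated weight for src -> dst, or None if nobody encoded that edge."""
    return graph.get(src, {}).get(dst)


print(edge_weight(INTEREST_GRAPH, "running", "walking"))  # 0.8
print(edge_weight(INTEREST_GRAPH, "walking", "running"))  # None (edges are one-way)
print(edge_weight(INTEREST_GRAPH, "hiking", "running"))   # None (never encoded)
```

Every relationship has to be entered by hand. That is exactly where the gaps discussed next come from.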

Challenges

But wait: if I like hiking, am I likely to like running? There’s no arrow between those two, yet our graph implies a relationship, and we don’t know whether we can safely infer it. Should we be conservative and say it’s 80% likely, optimistic and say 90%, or average the two at 85%? We might be even more conservative and “walk the graph”, multiplying 0.8 × 0.9 to get a 72% likelihood (see pictures 3 and 4). And the relationships aren’t symmetric: someone who says they like “outdoor activities” might also like walking 70% of the time, while someone who likes walking may be 91% likely to like “outdoor activities”…
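The competing ways of filling in the missing hiking→running edge can be written out side by side. This is a minimal sketch under one assumption: the running→walking weight (0.8) stands in for the walking/running link, because the example graph has no walking→running edge. The function name `infer_missing_edge` is hypothetical.

```python
from typing import Dict

# Weights touching the shared neighbor "walking", taken from the example.
HIKING_TO_WALKING = 0.9
RUNNING_TO_WALKING = 0.8  # assumption: reused as the walking/running link


def infer_missing_edge(w1: float, w2: float) -> Dict[str, float]:
    """Candidate estimates for an edge the ontology never encoded."""
    return {
        "optimistic (max)": max(w1, w2),
        "conservative (min)": min(w1, w2),
        "average (mean)": (w1 + w2) / 2,
        "walk the graph (product)": w1 * w2,
    }


for rule, estimate in infer_missing_edge(HIKING_TO_WALKING, RUNNING_TO_WALKING).items():
    print(f"{rule:>26}: {estimate:.0%}")
```

It prints 90%, 80%, 85%, and 72%. That is four defensible answers to one question, and nothing in the graph tells us which one is right.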

For fun, we can imagine some naive code that infers a few hops, perhaps revisiting Dijkstra’s algorithm.
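One naive way to infer across several hops, sketched under our own assumptions, works in three steps. Treat each weight as a probability, score a path by the product of its edge weights, and find the best-scoring path with Dijkstra’s algorithm on edge costs of -log(p). Because every weight lies in (0, 1], each cost is non-negative, so Dijkstra applies, and minimizing the summed cost maximizes the product. The graph and `best_path_probability` are hypothetical; the weights come from the examples above.

```python
import heapq
import math
from typing import Dict, List, Tuple

# Hypothetical directed graph; weights are P(likes dst | likes src) and must be in (0, 1].
GRAPH: Dict[str, Dict[str, float]] = {
    "running": {"walking": 0.8},
    "hiking": {"walking": 0.9},
    "walking": {"hiking": 0.9, "outdoor activities": 0.91},
    "outdoor activities": {"walking": 0.7},
}


def best_path_probability(
    graph: Dict[str, Dict[str, float]], src: str, dst: str
) -> Tuple[float, List[str]]:
    """Return (probability, path) for the src -> dst path with the largest product of weights.

    Runs Dijkstra on edge costs -log(p): minimizing the summed cost maximizes the product.
    Returns (0.0, []) when dst is unreachable.
    """
    dist = {src: 0.0}
    prev: Dict[str, str] = {}
    heap = [(0.0, src)]
    done = set()
    while heap:
        cost, node = heapq.heappop(heap)
        if node in done:
            continue  # stale heap entry
        done.add(node)
        if node == dst:
            break
        for neighbor, p in graph.get(node, {}).items():
            new_cost = cost - math.log(p)
            if new_cost < dist.get(neighbor, math.inf):
                dist[neighbor] = new_cost
                prev[neighbor] = node
                heapq.heappush(heap, (new_cost, neighbor))
    if dst not in dist:
        return 0.0, []
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return math.exp(-dist[dst]), path[::-1]


for src, dst in [("running", "hiking"), ("running", "outdoor activities"), ("hiking", "running")]:
    prob, path = best_path_probability(GRAPH, src, dst)
    print(f"{src} -> {dst}: {prob:.0%} via {path}")
```

Running → hiking comes out at 72%, the same product as above. Hiking → running comes back empty: nobody encoded an edge into running, so no amount of graph walking can recover it.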

Of course, it’s great if we can enumerate the nodes we need in the graph, but often there are too many terms, many of which we can’t anticipate. Someone may say “I like running”, “I’m a runner”, or “I go for runs”, and simply searching for “running” as an interest will miss two of these three. Stemming and other tricks might help, but they’re brittle. We always come across data we didn’t expect; e.g., we might read “I was a sprinter on the track team” or “I like sprinting” and miss that the person is interested in running.
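Here is a quick, hypothetical illustration of that brittleness. It compares exact keyword matching against a crude prefix match that stands in for stemming. The prefix match is not a real stemmer (libraries such as NLTK ship proper ones), but it shows the typical failure modes: misses on one side, false positives on the other, and no way to connect “sprinter” to running.

```python
from typing import List

# Hypothetical user statements; all but the last express an interest in running.
PHRASES = [
    "I like running",
    "I'm a runner",
    "I go for runs",
    "I was a sprinter on the track team",
    "I like sprinting",
    "I run a small bakery",
]


def exact_match(phrases: List[str], term: str) -> List[str]:
    """Keep phrases containing `term` as a whole word."""
    return [p for p in phrases if term in p.lower().split()]


def prefix_match(phrases: List[str], prefix: str) -> List[str]:
    """Crude stand-in for stemming: keep phrases with any word starting with `prefix`."""
    return [p for p in phrases if any(w.startswith(prefix) for w in p.lower().split())]


print(exact_match(PHRASES, "running"))  # ['I like running']
print(prefix_match(PHRASES, "run"))     # catches runner/runs, plus the bakery; misses both sprinters
```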

Embeddings: A Different Approach

A newer way to look at this problem is to leverage ML. LLMs are great at exactly this kind of problem. For an application, we may not want the overhead of a full prompt and LLM API call, but luckily embeddings and vectorDBs can help. Let’s quickly look at the word embeddings for “walk”, “run”, and “hike” and compare their cosine distances.

Interesting: this model finds walk and run to be the most similar, walk and hike next, and hike and run the least similar.
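If you want to reproduce this kind of comparison, here is a minimal sketch of cosine similarity and cosine distance (distance = 1 - similarity). The three vectors are invented toy values, not output from any real model, so the numbers it prints mean nothing beyond illustrating the mechanics. With a real word model you would load actual vectors instead, for example via gensim’s downloader.

```python
import math
from itertools import combinations
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


# Toy 4-d "word vectors", invented for illustration only.
TOY_VECTORS: Dict[str, List[float]] = {
    "walk": [0.9, 0.1, 0.3, 0.0],
    "run": [0.8, 0.4, 0.1, 0.1],
    "hike": [0.6, 0.0, 0.8, 0.1],
}


def rank_pairs(vectors: Dict[str, List[float]]) -> List[Tuple[Tuple[str, str], float]]:
    """Score every pair of words and sort from most to least similar."""
    scored = [((a, b), cosine_similarity(vectors[a], vectors[b])) for a, b in combinations(vectors, 2)]
    return sorted(scored, key=lambda item: item[1], reverse=True)


for (a, b), sim in rank_pairs(TOY_VECTORS):
    print(f"{a}/{b}: similarity {sim:.3f}, distance {1 - sim:.3f}")
```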

Today, we have embeddings, which give us a convenient measure of “conceptual distance”. This means we can get these “relationship” numbers “for free”, without necessarily building out the graph. Plus, vectorDBs are amazing at quickly returning the top-K most similar items. Embedding models have already done the work of learning, across huge corpora of text, what these words and concepts mean, especially in relation to each other. And that’s good enough for a lot of use cases.
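Conceptually, a top-K query just means “score everything against the query and keep the best K”. Here is a brute-force sketch of that idea with toy, hand-made vectors and hypothetical event names. Real vectorDBs typically answer the same question with approximate nearest-neighbor indexes such as HNSW, so they don’t have to scan every item.

```python
import heapq
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def top_k(query: Sequence[float], items: Dict[str, Sequence[float]], k: int = 3) -> List[Tuple[float, str]]:
    """Exact top-k by cosine similarity, as (score, name) pairs, best first."""
    return heapq.nlargest(k, ((cosine_similarity(query, vec), name) for name, vec in items.items()))


# Toy event embeddings, invented for illustration.
EVENTS = {
    "sunrise hike": [0.7, 0.1, 0.7],
    "5k fun run": [0.8, 0.5, 0.1],
    "watercolor class": [0.0, 0.1, 0.9],
}
query = [0.9, 0.2, 0.4]  # pretend embedding of "I like to walk"

for score, name in top_k(query, EVENTS, k=2):
    print(f"{score:.3f}  {name}")
```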

Challenges

The caveat is that words without context can be dangerous. I worked in web search, and we used to say: “Fencing can be a sport, the stuff that borders your house, or what you do with stolen goods.” So, depending on your embedding model, you may want to compute embeddings from full sentences such as “I like the activity walking” and “I like the activity running” rather than from the bare words.

Switching to the more popular sentence model “all-MiniLM-L6-v2” (with the sentence context above) changes the picture.

Interesting. This model has a different perspective, which could be due to the model itself, the added context of interests and preferences, or both.
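Here is a sketch of the sentence-context idea, assuming you bring your own encoder. The hypothetical helper `sentence_similarities` wraps each activity in a template sentence, embeds the batch with whatever `encode` function you pass in, and compares every pair. Keeping the encoder injectable means the same code works with a word model, a sentence model, or a test stub. The commented-out lines show one way to wire it to the sentence-transformers library with all-MiniLM-L6-v2; that part needs the package installed and a model download.

```python
import math
from itertools import combinations
from typing import Callable, Dict, List, Sequence, Tuple

Vector = Sequence[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def sentence_similarities(
    activities: List[str],
    encode: Callable[[List[str]], Sequence[Vector]],
    template: str = "I like the activity {}",
) -> Dict[Tuple[str, str], float]:
    """Embed each activity inside a template sentence for context, then compare every pair."""
    sentences = [template.format(activity) for activity in activities]
    vectors = encode(sentences)  # one vector per sentence, all from the same model
    return {
        (activities[i], activities[j]): cosine_similarity(vectors[i], vectors[j])
        for i, j in combinations(range(len(activities)), 2)
    }


# Possible wiring to a real model (requires `pip install sentence-transformers`):
# from sentence_transformers import SentenceTransformer
# model = SentenceTransformer("all-MiniLM-L6-v2")
# print(sentence_similarities(["walking", "running", "hiking"], model.encode))
```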

The most powerful and robust feature here is that we can handle almost any input without pre-calculating our graph and weights. If we have an enumeration of categories of people’s favorite activities and see an event category that’s new to us, we can make an educated guess about how to map it, or use the embedding as an input to our ranking. For example, if we put event descriptions into our vectorDB and then search for “I like to walk”, a hiking event should score well.
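The “educated guess” for an unfamiliar event category could look something like this minimal sketch. It picks the known category whose embedding is most similar, but refuses to guess below a similarity threshold. The function name, the 0.5 default threshold, and the toy vectors are all hypothetical; a real threshold would need tuning against labeled data.

```python
import math
from typing import Dict, Optional, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def map_new_category(
    new_vec: Sequence[float],
    known: Dict[str, Sequence[float]],
    min_similarity: float = 0.5,
) -> Tuple[Optional[str], float]:
    """Map an unseen category to its closest known one, or (None, score) if nothing is close enough."""
    best_name: Optional[str] = None
    best_sim = -1.0
    for name, vec in known.items():
        sim = cosine_similarity(new_vec, vec)
        if sim > best_sim:
            best_name, best_sim = name, sim
    if best_sim < min_similarity:
        return None, best_sim
    return best_name, best_sim


# Toy embeddings, invented for illustration.
KNOWN = {"hiking": [0.9, 0.1, 0.2], "running": [0.7, 0.6, 0.1], "painting": [0.0, 0.1, 0.95]}
print(map_new_category([0.85, 0.2, 0.25], KNOWN))  # pretend vector for "trail walking"
```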

Closing Thoughts

Of course, a real system would likely need a combination of the two approaches! GraphDBs are great for fixed taxonomies and clear relationships, whereas vectorDBs are a lifesaver for problems that are fuzzy, expressed in natural language, or inherently semantic.
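Here is one possible shape for that combination, offered as a hypothetical sketch rather than a recommended design. It trusts a curated graph edge when one exists. Otherwise it falls back to embedding similarity, discounted because that measures semantic closeness rather than a curated likelihood. The `fallback_discount` of 0.8 and the toy vectors are assumptions for illustration.

```python
import math
from typing import Callable, Dict, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def hybrid_score(
    src: str,
    dst: str,
    graph: Dict[str, Dict[str, float]],
    embed: Callable[[str], Sequence[float]],
    fallback_discount: float = 0.8,
) -> Tuple[float, str]:
    """Return (score, source): the curated edge if present, else a discounted embedding similarity."""
    weight = graph.get(src, {}).get(dst)
    if weight is not None:
        return weight, "graph"
    similarity = cosine_similarity(embed(src), embed(dst))
    return max(0.0, similarity) * fallback_discount, "embedding"


# Toy data, invented for illustration.
GRAPH = {"running": {"walking": 0.8}}
TOY_VECTORS = {"running": [0.8, 0.5], "walking": [0.9, 0.2], "jogging": [0.75, 0.55]}


def toy_embed(term: str) -> Sequence[float]:
    return TOY_VECTORS[term]


print(hybrid_score("running", "walking", GRAPH, toy_embed))  # (0.8, 'graph')
print(hybrid_score("jogging", "running", GRAPH, toy_embed))  # embedding fallback
```

The “jogging” query from the opening now gets a usable score even though nobody added it to the graph.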

For the example discussed, matching “interests” isn’t the same as matching “semantic meaning”. Here we controlled the sentences, but if you put in “I hate to walk”, it scores 0.7697 similarity with “I like to walk”: the two sentences share almost all their content, so they sit close together in latent space even though they express opposite preferences. If you can afford the latency of calling a foundation LLM with a prompt, it would handle such a case nicely.

Matching, recommendation systems, and ranking are always interesting to play with, and there’s always more to explore, but never trust code blindly without measuring.
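In that spirit, even a tiny hand-labeled set makes measurement possible. Here is a minimal sketch of precision@k (the fraction of the top k results a person judged relevant), averaged over queries. The queries, rankings, and judgments are hypothetical placeholders, not results from this article.

```python
from typing import Dict, List, Set


def precision_at_k(ranked: List[str], relevant: Set[str], k: int) -> float:
    """Fraction of the top-k ranked items that appear in the relevant set."""
    if k <= 0:
        raise ValueError("k must be positive")
    return sum(1 for item in ranked[:k] if item in relevant) / k


def mean_precision_at_k(
    rankings: Dict[str, List[str]], judgments: Dict[str, Set[str]], k: int
) -> float:
    """Average precision@k over every query that has human judgments."""
    scores = [precision_at_k(rankings[q], judgments[q], k) for q in judgments]
    if not scores:
        raise ValueError("no judged queries")
    return sum(scores) / len(scores)


# Hypothetical labeled data: what a system returned vs. what a person marked relevant.
rankings = {"I like to walk": ["city walking tour", "5k fun run", "sunrise hike"]}
judgments = {"I like to walk": {"city walking tour", "sunrise hike"}}
print(mean_precision_at_k(rankings, judgments, k=2))  # 0.5
```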

Where have you seen GraphDB ontologies break down? How did you resolve the problems?

End Notes

- Production quality is all about the details, so always test and benchmark. A vectorDB search is fast, but if you need accuracy and control, the right approach may be to build a graph and calculate these weights yourself (even if that takes several queries to get answers).

- At the time of writing, Weaviate was the only vectorDB I found that directly supports graphDB features.

- We used a couple of models, and their embedding vectors are not compatible: e.g., never compare vectors from a word model against vectors from a sentence model.
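One cheap safeguard is to carry the model name alongside every vector and refuse to compare across models. This is sketched here as an assumption, not as any library’s API. A dimension check alone isn’t enough, since two unrelated models can produce vectors of the same length.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Embedding:
    model: str                  # name of the model that produced the vector
    vector: Tuple[float, ...]


def safe_cosine(a: Embedding, b: Embedding) -> float:
    """Cosine similarity that refuses to mix vectors from different models."""
    if a.model != b.model:
        raise ValueError(f"cannot compare {a.model!r} vectors with {b.model!r} vectors")
    if len(a.vector) != len(b.vector):
        raise ValueError("same model name but different dimensions; check your pipeline")
    dot = sum(x * y for x, y in zip(a.vector, b.vector))
    return dot / (math.sqrt(sum(x * x for x in a.vector)) * math.sqrt(sum(y * y for y in b.vector)))


walk_word = Embedding("hypothetical-word-model", (0.9, 0.1, 0.3))
walk_sentence = Embedding("all-MiniLM-L6-v2", (0.2, 0.7, 0.1))  # toy values, not real output
try:
    safe_cosine(walk_word, walk_sentence)
except ValueError as err:
    print(err)
```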

Thanks to Richard King for the fencing examples 😉

When GraphDB Ontologies Break: Exploring Embeddings was originally published in Towards AI on Medium.