The Admin Work Killing Your Practice Has a Simple Fix You’re Probably Ignoring

By Bobby Tredinnick, LMSW-CASAC, CEO and Lead Clinician at Interactive Health Companies, including Coast Health Consulting and Interactive International Solutions

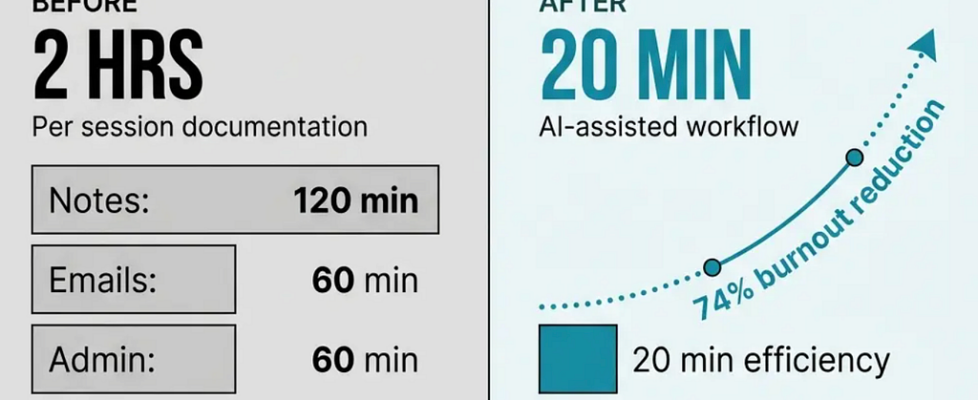

Clinicians across the field are exhausted. Not the kind of tired that a weekend off fixes. The kind that comes from spending two hours after every session writing notes, another hour answering emails, and realizing they have spent more time staring at screens than looking at the people they trained to help. When 93% of behavioral health workers report experiencing burnout, and a third of the workforce spends most of its time on administrative tasks instead of direct client support, the field is not dealing with individual resilience problems. It’s dealing with a structural failure in how behavioral health work gets done.

The answer sitting in front of most clinicians right now is so obvious that it gets dismissed. Simple AI tools, many of them free or nearly free, can handle the administrative work that steals hours from their day. This is not about complex enterprise systems that require IT departments and six-month implementations. This is about accessible tools clinicians can start using this afternoon. The gap between knowing these tools exist and actually using them is what separates clinicians who reclaim their time from those who keep grinding until they leave the field entirely.

What the Data Actually Shows About AI and Clinical Burnout

The numbers on this are not speculative. Physicians using ambient AI scribes saw burnout rates drop from 51.9% to 38.8% after just 30 days. The study reported this as a 74% reduction in the odds of experiencing burnout (an odds ratio of 0.26), a result measured in a clinical study, not a marketing claim. The same research found significant improvements in cognitive task load, time spent documenting after hours, and focused attention on patients. One family physician reported that AI assistance reduced documentation time from two hours to twenty minutes per case. That’s a 6x efficiency improvement that translates directly into either seeing more clients or going home at a reasonable hour.

Most physicians at The Permanente Medical Group using ambient AI scribes save an average of about an hour a day at the keyboard. An hour. Every single day. Over a five-day week, that’s five hours per week, roughly 20 hours per month, and about 240 hours per year. For context, that’s six full 40-hour work weeks of time returned to clinical judgment, patient interaction, or basic human rest. The return on investment for AI in behavioral health extends far beyond productivity metrics. Early adopters report improvements in staff retention, clinician wellbeing, and client engagement.
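The arithmetic behind those figures can be checked with a short sketch. The constants below are assumptions that reproduce the article's rounding (a five-day week, four weeks per month, a 40-hour work week); they are not taken from the underlying study.

```python
# Assumptions (not from the study): 5 workdays per week, 4 weeks per month,
# 12 months per year, and a 40-hour full-time work week.
WORKDAYS_PER_WEEK = 5
WEEKS_PER_MONTH = 4
MONTHS_PER_YEAR = 12
HOURS_PER_WORK_WEEK = 40


def time_savings(hours_saved_per_day: float) -> dict:
    """Scale a daily time saving up to weekly, monthly, and yearly totals."""
    per_week = hours_saved_per_day * WORKDAYS_PER_WEEK
    per_month = per_week * WEEKS_PER_MONTH
    per_year = per_month * MONTHS_PER_YEAR
    return {
        "per_week": per_week,
        "per_month": per_month,
        "per_year": per_year,
        "work_weeks": per_year / HOURS_PER_WORK_WEEK,
    }


savings = time_savings(1)  # about one hour saved per day
print(savings)
```

Under these assumptions the totals come out to 5, 20, and 240 hours, or six 40-hour work weeks. Counting 52 weeks instead of 48 would raise the yearly total to 260 hours, so the rounded figure is on the conservative side.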

The adoption curve is accelerating because the results are undeniable. The number of behavioral health professionals using AI in their organization’s daily activities nearly doubled from 15% in 2024 to 29% in 2025. Among physicians surveyed, 75% believe AI could help with work efficiency, and 54% believe it could help with stress and burnout. The gap between belief and action is closing rapidly.

The Specific Tools You Can Use Today

Here’s what matters: you don’t need permission from an IT department or a budget approval process to start using AI for administrative work. The tools are accessible right now, and most of them require nothing more than creating an account and learning basic prompts.

ChatGPT and similar large language models represent the most immediate opportunity. The free version handles most documentation tasks. The Plus subscription costs $20 per month and provides faster responses and access to more advanced models. You can use these tools to draft progress notes, summarize session content, organize treatment plans, and generate client communication templates, as long as you keep protected health information out of any tool that is not HIPAA-compliant. The key is learning how to structure your prompts to get useful output.

Ambient AI scribes like Otter.ai, Fireflies.ai, or specialized clinical documentation tools listen to your sessions and generate draft notes automatically. You review and edit for accuracy, but the heavy lifting of transcription and initial organization is handled. HIPAA-compliant versions exist specifically for healthcare settings. The time savings here are immediate and measurable.

Task automation tools like Zapier or Make connect different applications and automate repetitive workflows. You can set up automatic appointment reminders, intake form processing, or data entry between systems without writing code. These tools operate on simple if-then logic that anyone can configure.
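To make the if-then idea concrete, here is a minimal sketch in plain Python. It is not how Zapier or Make are configured (those tools use visual editors, not code), and the event names, fields, and rule wording are hypothetical. It only illustrates the trigger-and-action pattern these tools are built around.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    name: str
    condition: Callable[[dict], bool]  # the "if": does this event match?
    action: Callable[[dict], str]      # the "then": what to do about it


def run_rules(event: dict, rules: list[Rule]) -> list[str]:
    """Run the action of every rule whose condition matches the event."""
    return [rule.action(event) for rule in rules if rule.condition(event)]


# Hypothetical rules for two of the workflows mentioned above.
rules = [
    Rule(
        name="appointment reminder",
        condition=lambda e: e["type"] == "appointment_booked",
        action=lambda e: f"Schedule reminder for appointment {e['appointment_id']}",
    ),
    Rule(
        name="intake form filing",
        condition=lambda e: e["type"] == "intake_form_submitted",
        action=lambda e: f"Copy intake form {e['form_id']} into the records system",
    ),
]

event = {"type": "appointment_booked", "appointment_id": "A-102"}
results = run_rules(event, rules)
for line in results:
    print(line)
```

Each rule is one "if this happens, then do that" pair, which is the same mental model you use when setting up a workflow in a no-code automation tool.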

Email and communication assistants can draft responses to common inquiries, organize your inbox by priority, and flag urgent messages. Tools like Superhuman or even Gmail’s built-in AI features handle this with minimal setup.

The pattern across all these tools is the same. You provide context and parameters. The AI handles the repetitive structure and formatting. You apply clinical judgment and make final decisions. The division of labor is clear and appropriate.

How to Actually Implement This Without Losing Your Mind

The biggest mistake clinicians make with AI tools is trying to overhaul their entire workflow at once. That approach leads to frustration and abandonment. The correct method is to identify one specific administrative task that consumes disproportionate time, apply AI to that single task, measure the result, and then expand from there.

Start with documentation. If you spend two hours after each session writing notes, that’s your first target. Use ChatGPT or a specialized clinical documentation tool to draft initial notes based on session summaries you provide. You’ll need to develop a prompt template that works for your documentation style. This takes about a week of iteration. Once you have a working template, the time savings compound immediately.

Build your prompt library. Every time you create a prompt that generates useful output, save it. Create a document with templates for progress notes, treatment plans, client communications, and administrative responses. Refine these templates based on what works. Your prompt library becomes a personal productivity system that improves with use.
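A prompt library can be as simple as a document, but if you prefer something reusable, a minimal sketch might look like the following. The template wording, the field names, and the choice of a SOAP-format note are illustrative assumptions, not a recommended standard. The sample summary is invented and contains no client information.

```python
from string import Template

# Hypothetical saved templates; $name marks a blank to fill in each time.
PROMPT_LIBRARY = {
    "progress_note": Template(
        "Draft a progress note in $note_format format.\n"
        "Use only the de-identified session summary below; do not add details.\n"
        "Session summary: $summary"
    ),
    "client_email": Template(
        "Write a brief, friendly email to a client about: $topic.\n"
        "Keep it under $max_words words and include no clinical details."
    ),
}


def build_prompt(name: str, **fields: str) -> str:
    """Fill in a saved template. Raises KeyError if a template or field is missing."""
    return PROMPT_LIBRARY[name].substitute(**fields)


prompt = build_prompt(
    "progress_note",
    note_format="SOAP",
    summary="Client practiced grounding skills and reported improved sleep.",
)
print(prompt)
```

Because `substitute` raises an error when a field is left out, a half-filled prompt never gets sent by accident. Refining a template then means editing one entry in the library instead of retyping the prompt from memory.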

Establish review protocols. AI output requires human oversight. Always review generated content for accuracy, clinical appropriateness, and compliance with documentation standards. This review process is still faster than creating content from scratch, but it’s not optional. Your clinical judgment remains the final authority on everything that goes into a client record.

Verify HIPAA compliance carefully. Not all AI tools are appropriate for protected health information (PHI). When working with client data, use HIPAA-compliant versions of tools, which in practice means the vendor will sign a business associate agreement (BAA) covering your use. For general administrative tasks that don’t involve PHI, standard tools work fine. Know the difference and apply appropriate safeguards.

Measure actual time savings. Track how long administrative tasks take before and after implementing AI tools. The data will either validate your approach or show you where adjustments are needed. Anecdotal improvements feel good, but measured improvements drive sustained behavior change.
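Tracking can be as light as jotting down minutes per session for a week before and a week after the change. The sketch below shows one way to compare the two. The numbers are made-up sample data for illustration only, and the function name is hypothetical.

```python
from statistics import mean


def summarize(before_minutes: list[float], after_minutes: list[float]) -> dict:
    """Compare average documentation time before and after a workflow change."""
    avg_before = mean(before_minutes)
    avg_after = mean(after_minutes)
    saved = avg_before - avg_after
    return {
        "avg_before": avg_before,
        "avg_after": avg_after,
        "minutes_saved": saved,
        "percent_saved": round(100 * saved / avg_before, 1),
    }


# Made-up sample data: minutes spent on notes per session.
before = [110, 125, 120, 130, 115]  # one week without AI drafting
after = [40, 35, 45, 30, 50]        # one week with AI drafting plus review

report = summarize(before, after)
print(report)
```

Remember to count your review time in the "after" numbers. The comparison is only honest if it includes the minutes spent checking and correcting the AI draft.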

The Self-Care Dimension Nobody Talks About

The conversation about AI in healthcare focuses almost entirely on efficiency and productivity. That framing misses the more important outcome. When you reclaim hours of administrative time, you’re not just becoming more efficient. You’re creating space for the parts of clinical work that actually require trained human judgment and the parts of life that keep you functional as a human being.

The therapeutic relationship remains the most powerful predictor of positive outcomes in behavioral health. Technology doesn’t replace that relationship. It removes the barriers that prevent you from being fully present in it. When you spend less time on screens and more time present with clients, both care quality and workforce sustainability improve. This is not a theoretical benefit. It’s the direct result of shifting cognitive load from documentation to clinical attention.

The burnout crisis in behavioral health is not primarily about resilience training or self-care workshops. It’s about structural problems in how work is organized. When 68% of behavioral health workers say administrative tasks take away from direct client support time, and 57% of physicians identify addressing administrative burdens through automation as the biggest opportunity for AI, the solution becomes clear. Remove the administrative burden and you address the root cause of burnout.

Self-care in this context means protecting your capacity to do the work that matters. It means going home at a reasonable hour. It means not spending your evenings finishing documentation. It means having enough cognitive energy left for the complex clinical decisions that require your full attention. AI tools make this possible not by replacing clinical work but by eliminating the busy work that prevents you from doing clinical work effectively.

What Actually Prevents Clinicians From Using These Tools

The barriers to AI adoption in behavioral health are not primarily technical. Most clinicians can learn to use ChatGPT or set up an ambient scribe in less than an hour. The real barriers are psychological and institutional.

Fear of technology replacing clinical judgment. This fear is understandable but misplaced. AI tools handle administrative tasks and information organization. They don’t make clinical decisions, develop treatment plans, or build therapeutic relationships. The technology augments human capability rather than replacing it. Understanding this distinction removes the primary psychological barrier.

Concern about accuracy and liability. AI-generated content requires review. This is not a limitation. It’s the appropriate use case. You maintain full control and responsibility for all clinical documentation and decisions. The AI provides a draft. You provide the judgment. Liability concerns are addressed through proper review protocols, not by avoiding useful tools.

Institutional resistance and compliance uncertainty. Some organizations have policies that restrict AI use without clear guidance on what’s permitted. This creates a chilling effect where clinicians avoid tools that could help them. The solution is to engage with compliance and IT departments proactively, demonstrate HIPAA-compliant implementations, and show measured outcomes. Resistance typically dissolves when results are documented.

Lack of training and support. Most clinicians receive no formal training on AI tools despite their growing importance in healthcare delivery. Self-directed learning works but takes longer and involves more trial and error. Organizations that provide structured training and peer support see much higher adoption rates and better outcomes.

The gap between knowing these tools exist and using them effectively is real but surmountable. The clinicians who cross that gap first gain a significant advantage in both productivity and wellbeing.

The Practical Reality of Implementation

Clinicians have transformed their practices by implementing simple AI tools over the course of a few weeks. The pattern is consistent. Initial skepticism gives way to cautious experimentation. Early wins with documentation or email management build confidence. Expanded use across multiple administrative tasks creates compounding time savings. Within a month, the new workflow becomes standard practice and the old way of doing things seems unnecessarily difficult.

The most successful implementations share common characteristics. They start small with one specific use case. They establish clear review protocols from the beginning. They measure time savings and quality outcomes. They expand gradually based on proven results. They maintain appropriate boundaries around clinical judgment and human oversight.

The least successful implementations try to do everything at once, skip the review process, or expect AI to handle tasks that require human judgment. These approaches lead to poor outcomes and reinforce skepticism about AI tools. The technology works when used appropriately. Appropriate use requires understanding both capabilities and limitations.

What This Means for the Future of Behavioral Health Practice

The trajectory is clear. Behavioral health will become increasingly AI-enabled while simultaneously becoming more human than it has been in decades. This is not a contradiction. When clinicians spend less time on administrative tasks and more time on clinical relationships, the quality of care improves. When burnout rates decline because structural barriers are removed, workforce retention improves. When documentation takes minutes instead of hours, clinicians can see more clients or maintain better work-life boundaries.

The clinicians who learn to use these tools effectively will have a significant competitive advantage. They’ll be able to serve more clients without sacrificing quality. They’ll experience less burnout and stay in the field longer. They’ll deliver better outcomes because they have more cognitive capacity for the work that matters. The gap between early adopters and those who resist will widen rapidly.

The tools are available now. The evidence supporting their effectiveness is substantial and growing. The barriers to adoption are primarily psychological and institutional rather than technical. The time to start is not when your organization mandates it or when burnout forces you out of the field. The time to start is today, with one simple tool applied to one specific administrative task that’s stealing your time and attention from the work you trained to do.

Clinicians don’t need permission. They don’t need a budget. They don’t need technical expertise. They need to recognize that the administrative burden crushing behavioral health practitioners has a solution that’s sitting in front of them right now, waiting to be used.

Originally published in Towards AI on Medium.