Stop Wasting PDFs — Build a RAG That Actually Understands Them

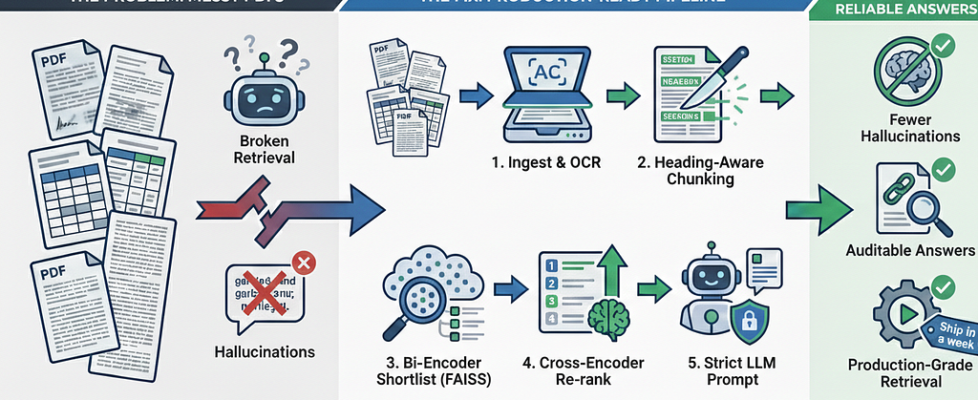

Author(s): Robi Kumar Tomar

Originally published on Towards AI.

Turn messy PDFs into reliable, auditable answers: a production-ready RAG pipeline with OCR, heading-aware chunking, FAISS, cross-encoder reranking, and strict LLM prompts.

Image source: Google Gemini

TL;DR (for skimmers)

- Problem: PDFs are messy. Scans, tables, and long paragraphs break retrieval.
- Fix: Ingest → smart chunk → bi-encoder shortlist → cross-encoder re-rank → grounded LLM prompt.
- Result: Fewer hallucinations, auditable answers, production-grade retrieval.
- Ship in a week: Use the included end-to-end script (OCR fallback + FAISS + CrossEncoder + LLM) and run the 20-question evaluation recipe.

You've spent months producing product specs, compliance docs, and slide decks, yet search still returns noise. The frustration is universal: employees copy-paste from PDFs, support teams lose hours, and trust in the knowledge base quietly erodes. This article delivers a concise, battle-tested pipeline that turns messy PDFs into accurate, auditable answers.

Quick vignette: A support team at a mid-size SaaS company spent three days debating whether an old contract clause applied to a renewal. After shipping the pipeline below, they surfaced the exact paragraph, with a cited quote, in 30 seconds. The legal lead stopped asking, "Do we trust the answer?" and started asking, "How do we make more documents searchable?" Small change. Huge trust.

Why regular RAG fails on real PDFs

- Scanned pages: no extractable text without OCR.
- Bad chunking: arbitrary windows split meaning, tables, and code blocks.
- Fuzzy retrieval: bi-encoders return passages that are topically related, not necessarily the exact passage that answers the question.
- Redundancy: near-duplicate chunks bloat the shortlist and confuse rerankers.

Bold truth: Upgrading your LLM rarely fixes these problems. Fix ingestion and retrieval first.
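To make the redundancy problem concrete, here is a minimal sketch of chunk deduplication that combines an exact hash check with a cosine-similarity check on embeddings. The function names (`cosine`, `dedupe_chunks`), the 0.95 threshold, and the toy two-dimensional vectors are illustrative assumptions, not values from the article; in a real pipeline the vectors would come from the bi-encoder.

```python
import hashlib
import math
from typing import List, Sequence


def sha1_text(text: str) -> str:
    """Exact-duplicate fingerprint: identical text yields an identical hash."""
    return hashlib.sha1(text.encode("utf-8")).hexdigest()


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two vectors; 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def dedupe_chunks(
    chunks: Sequence[str],
    vectors: Sequence[Sequence[float]],
    threshold: float = 0.95,  # assumed cut-off; tune per corpus
) -> List[int]:
    """Return the indices of chunks to keep, in their original order."""
    seen_hashes = set()
    kept: List[int] = []
    for i, (text, vec) in enumerate(zip(chunks, vectors)):
        digest = sha1_text(text)
        if digest in seen_hashes:
            continue  # exact duplicate of a kept chunk
        if any(cosine(vec, vectors[j]) >= threshold for j in kept):
            continue  # near-duplicate of a kept chunk
        seen_hashes.add(digest)
        kept.append(i)
    return kept


if __name__ == "__main__":
    chunks = [
        "Refunds are issued within 30 days.",
        "Refunds are issued within 30 days.",      # exact duplicate
        "Refunds are issued within thirty days.",  # near-duplicate
        "Invoices are sent monthly.",
    ]
    # Toy vectors standing in for bi-encoder embeddings.
    vectors = [[1.0, 0.0], [1.0, 0.0], [0.99, 0.05], [0.0, 1.0]]
    print(dedupe_chunks(chunks, vectors))
```

The pairwise comparison is quadratic in the number of kept chunks, which is usually acceptable for a few thousand chunks; a larger corpus would likely need an approximate nearest-neighbour lookup instead.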
The disciplined, production-ready pipeline (one line)

Extract reliably → Chunk by meaning → Embed & shortlist (bi-encoder) → Re-rank with a cross-encoder → Prompt the LLM with citations.

Step by step

1. Extract + OCR fallback: attempt text extraction first; OCR empty pages with pdf2image + pytesseract.
2. Heading- & table-aware chunking: split on semantic boundaries; avoid slicing tables mid-cell.
3. Deduplicate + attach metadata: hash chunks to dedupe; retain source, page, heading, timestamps.
4. Embed & store: use a fast bi-encoder (e.g., all-MiniLM-L6-v2) and store in FAISS/Chroma/Weaviate.
5. Shortlist (top 30–100): fast, scalable candidate retrieval.
6. Cross-encoder re-rank: score query–doc pairs and select the top 3–10.
7. Grounded LLM prompt: require chunk IDs, include exact quotes, return NOT FOUND when absent.
8. Measure: track precision@k, hallucination rate, and p95 latency.

Micro-edits for skimmers: what to tune first

- Add OCR fallback for scanned PDFs.
- Tune chunk size and overlap per doc type (specs vs. slides).
- Deduplicate chunks using SHA-1 plus an embedding-similarity threshold.
- Retrieve 30–100 candidates, then re-rank the top ~10.
- Force the LLM to return chunk IDs and exact quotes.
- Monitor precision@k and hallucination rate weekly.

Full working code (end-to-end)

This is a single-file, runnable script that implements the full pipeline: OCR fallback, heading-aware chunking, FAISS vector store, bi-encoder retrieval, CrossEncoder re-ranking, and a strict LLM answer step. Save as rag_pdf_pipeline.py.
```python
"""
rag_pdf_pipeline.py

End-to-end RAG for unstructured PDFs:
- PDF text extraction with OCR fallback
- Heading-aware chunking with overlap
- Deduplication and metadata preservation
- Embeddings (HuggingFace) + FAISS vector store
- Bi-encoder retrieval -> Cross-encoder re-rank
- LLM answer with strict grounding and citations

Dependencies:
pip install langchain sentence-transformers faiss-cpu openai PyPDF2 pdf2image pytesseract python-magic
# Optional: pip install unstructured[local] pymupdf
"""
import os
import logging
import hashlib
import re
from typing import List, Tuple, Dict, Any, Optional

# Note: these import paths match older LangChain releases; newer releases
# move several of them into langchain_community / langchain_openai.
from langchain.docstore.document import Document
from langchain.embeddings import HuggingFaceEmbeddings
from langchain.vectorstores import FAISS
from langchain.chat_models import ChatOpenAI
from langchain.schema import SystemMessage, HumanMessage
from sentence_transformers import CrossEncoder
from PyPDF2 import PdfReader
from pdf2image import convert_from_path
import pytesseract

# ---------------- CONFIG ----------------
OPENAI_API_KEY = os.environ.get("OPENAI_API_KEY")
EMBEDDING_MODEL_NAME = "sentence-transformers/all-MiniLM-L6-v2"
RERANKER_MODEL_NAME = "cross-encoder/ms-marco-MiniLM-L-6-v2"
CHUNK_SIZE = 1000
CHUNK_OVERLAP = 200
RETRIEVE_K = 50
RERANK_TOP_N = 10
# ----------------------------------------

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)

# Matches Markdown-style "#" headings and setext-style headings
# (a title line followed by a line of "---" or "===").
_heading_re = re.compile(
    r'^\s*(?:#{1,6}\s*|[A-Z][\w -]{2,}\n[-=]{2,})',
    re.MULTILINE,
)


def sha1_text(text: str) -> str:
    return hashlib.sha1(text.encode("utf-8")).hexdigest()


def load_pdf_text(path: str, ocr_if_needed: bool = True) -> List[Tuple[int, Optional[str]]]:
    """
    Returns [(page_number, text)].
    Uses PyPDF2 first; falls back to OCR per-page if text is missing.
    """
    pages: List[Tuple[int, Optional[str]]] = []
    try:
        reader = PdfReader(path)
        for i, page in enumerate(reader.pages):
            text = page.extract_text() or ""
            # None marks a page with no extractable text (OCR candidate).
            pages.append((i + 1, text.strip() or None))
    except Exception as e:
        logger.warning("PDF parse failed (%s). Falling back to OCR.", e)
        pages = []

    if ocr_if_needed and (not pages or any(t is None for _, t in pages)):
        images = convert_from_path(path, dpi=200)
        for i, img in enumerate(images):
            if i >= len(pages) or pages[i][1] is None:
                ocr_text = pytesseract.image_to_string(img)
                if i < len(pages):
                    pages[i] = (i + 1, ocr_text)
                else:
                    pages.append((i + 1, ocr_text))
    return pages


def simple_chunk(text: str, page: int) -> List[Document]:
    """Fixed-size character windows with overlap."""
    chunks = []
    start = 0
    while start < len(text):
        end = min(len(text), start + CHUNK_SIZE)
        chunk = text[start:end].strip()
        if chunk:
            chunks.append(Document(page_content=chunk, metadata={"page": page}))
        start += CHUNK_SIZE - CHUNK_OVERLAP
    return chunks


def smart_chunk_pages(pages: List[Tuple[int, Optional[str]]]) -> List[Document]:
    """
    Heading-aware chunking with deduplication.
    """
    all_docs: Dict[str, Document] = {}
    for page_num, text in pages:
        if not text:
            continue
        headings = list(_heading_re.finditer(text))
        if len(headings) >= 2:
            starts = [m.start() for m in headings]
            # Keep any text that precedes the first heading.
            if starts[0] > 0:
                starts.insert(0, 0)
            spans = starts + [len(text)]
        else:
            spans = [0, len(text)]
        for i in range(len(spans) - 1):
            segment = text[spans[i]:spans[i + 1]].strip()
            for doc in simple_chunk(segment, page_num):
                # Exact-duplicate removal keyed on the chunk's SHA-1.
                h = sha1_text(doc.page_content)
                if h not in all_docs:
                    all_docs[h] = doc
    return list(all_docs.values())


def build_vectorstore(docs: List[Document], save_path: Optional[str] = None) -> FAISS:
    embeddings = HuggingFaceEmbeddings(model_name=EMBEDDING_MODEL_NAME)
    vectorstore = FAISS.from_documents(docs, embeddings)
    if save_path:
        vectorstore.save_local(save_path)
    return vectorstore


def retrieve_and_rerank(
    vectorstore: FAISS,
    query: str,
    reranker: CrossEncoder,
) -> List[Tuple[Document, float]]:
    # Stage 1: fast bi-encoder shortlist.
    candidates = vectorstore.similarity_search(query, k=RETRIEVE_K)
    if not candidates:
        return []
    # Stage 2: cross-encoder scores each (query, chunk) pair.
    pairs = [(query, d.page_content) for d in candidates]
    scores = reranker.predict(pairs, batch_size=16)
    ranked = sorted(zip(candidates, scores), key=lambda x: x[1], reverse=True)
    return ranked[:RERANK_TOP_N]


def format_context(ranked: List[Tuple[Document, float]]) -> Tuple[str, List[str]]:
    blocks, citations = [], []
    for i, (doc, score) in enumerate(ranked):
        cid = f"doc-{i+1}-p{doc.metadata.get('page')}"
        excerpt = doc.page_content[:400].replace("\n", " ").strip()
        blocks.append(f"--- {cid} ---\n{excerpt}")
        citations.append(f"{cid} (score={score:.4f})")
    return "\n\n".join(blocks), citations


def answer_with_llm(query: str, ranked: List[Tuple[Document, float]]) -> Dict[str, Any]:
    system = (
        "You are a strict summarizer. Use ONLY the provided context blocks. "
        "If the answer is not present, reply NOT FOUND. "
        "Include citation IDs and quote exact text using triple backticks."
    )
    context, citations = format_context(ranked)
    user = f"Question:\n{query}\n\nContext:\n{context}"
    llm = ChatOpenAI(model="gpt-4o-mini", temperature=0.0)
    resp = […]
```
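As a companion to the script, the metrics named in the Measure step (precision@k, hallucination rate, p95 latency) can be sketched in a few lines. This is a hedged illustration, not the article's evaluation recipe: the function names are hypothetical, p95 uses the nearest-rank definition, and the hallucination measure is only an assumed proxy that checks whether the quoted text appears verbatim (ignoring case and whitespace) in the retrieved context.

```python
from typing import Sequence, Set, Tuple


def precision_at_k(retrieved_ids: Sequence[str], relevant_ids: Set[str], k: int) -> float:
    """Fraction of the top-k retrieved chunk IDs that are labeled relevant."""
    if k <= 0:
        raise ValueError("k must be positive")
    hits = sum(1 for cid in retrieved_ids[:k] if cid in relevant_ids)
    return hits / k


def p95(values: Sequence[float]) -> float:
    """95th percentile by the nearest-rank method."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = (95 * len(ordered) + 99) // 100  # integer ceil(0.95 * n)
    return ordered[rank - 1]


def _normalize(text: str) -> str:
    """Lowercase and collapse runs of whitespace."""
    return " ".join(text.split()).lower()


def quote_is_grounded(quote: str, context: str) -> bool:
    """True if the non-empty quote occurs in the context after normalization."""
    return bool(quote.strip()) and _normalize(quote) in _normalize(context)


def ungrounded_rate(pairs: Sequence[Tuple[str, str]]) -> float:
    """Share of (quote, context) pairs whose quote is not found in its context.

    Assumed proxy for hallucination rate; it does not judge paraphrased answers.
    """
    if not pairs:
        return 0.0
    misses = sum(1 for quote, ctx in pairs if not quote_is_grounded(quote, ctx))
    return misses / len(pairs)


if __name__ == "__main__":
    print(precision_at_k(["c1", "c7", "c3", "c9"], {"c1", "c3"}, k=3))
    print(p95([float(ms) for ms in range(1, 21)]))
    print(ungrounded_rate([
        ("within 30 days", "Refunds are issued within 30 days."),
        ("within 60 days", "Refunds are issued within 30 days."),
    ]))
```

Running these over a small, hand-labeled question set after each change to chunking or retrieval settings gives a like-for-like comparison; the labels (which chunk IDs count as relevant) have to be supplied by a person.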