Stop Using Self-Joins: How Using GroupBy and Filters Instead Can Save Massive Time and Cost in…

Stop Using Self-Joins: How Using GroupBy and Filters Instead Can Save Massive Time and Cost in PySpark

When working with large datasets in PySpark, it’s rather common in a notebook to see a table joined to itself using an inner join. While this approach is straightforward and intuitive, it often comes with a steep price: long runtimes, excessive shuffles, and inflated compute costs.

In many real-world scenarios, you can replace a self–inner join with a groupBy + aggregation + filter pattern that is dramatically faster and cheaper — especially at scale.

Let’s break down why self-joins are expensive and how the alternative works.

Why Self Inner Joins Are So Expensive

Before I got involved with Big Data on Apache Spark, I used to use self-joins all the time. In itself, a self-join looks easy-peasy and quite harmless. Let’s take this simple example of looking in a table of customer transactions to see the transactions side by side a customer may have made on the same day. Let’s assume that the transaction_id field is a whole number, so using an inequality with ensure a pair of transactions don’t show up in the resulting output more than once as well as eliminating self pairs in the output.

same_day_transactions = (

transactions_df.alias("a")

.join(transactions_df.alias("b"),

on=["first_name", "last_name", "transaction_date"],

how="inner"

)

.filter("a.transaction_id" < "b.transaction_id")

.select("first_name",

"last_name",

"transaction_date",

"a.transaction_id",

"a.transaction_description",

"b.transaction_id",

"b.transaction_description"

)

)

Looks simple, right? But under the hood, Spark has to:

First, Materialize two logical copies of the same dataset then…

Shuffle both sides across the cluster on the join keys then…

Build the join state (hash tables or sort-merge structures) then finally….

Explode the row counts when keys are non-unique.

And that’s not cheap. On large tables of billions of rows, this quickly becomes one of the most expensive operations in Spark. This will result in long shuffle stages, high spill to disk, executors running out of memory and a large cloud bills with little business value.

The Key Insight: Do You Really Need Two Copies?

Most self-joins are written to answer questions like:

“Which keys appear more than once?”

“Which rows share the same A and B?”

“Are there duplicates or collisions?”

If you’re not actually comparing row-to-row attributes, then you usually don’t need a join at all.

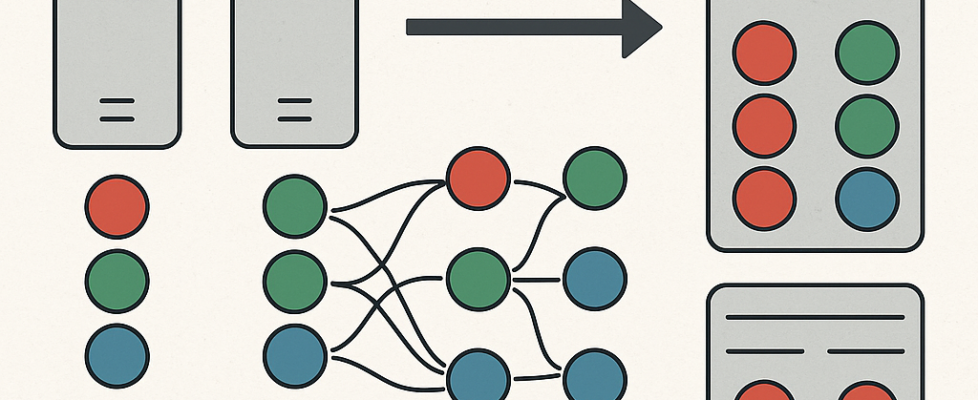

The Faster Pattern: GroupBy → Aggregate → Filter

Instead of joining the table to itself, let Spark scan the data once, aggregate, and filter. Let’s change the code above to this, which results in the same output.

from pyspark.sql import functions as F

same_day_transactions = (

transactions_df

.groupBy("first_name","last_name", "transaction_date")

.agg(F.collect_list(F.struct("*")).alias("rows"))

.withColumn("l", F.explode("rows"))

.withColumn("r", F.explode("rows"))

.filter(F.col("l.transaction_id") < F.col("r.transaction_id"))

.select("first_name",

"last_name",

"transaction_date",

"l.transaction_id",

"l.transaction_description",

"r.transaction_id",

"r.transaction_description"

)

)

This avoids:

- global shuffle

- cross-key comparisons

- skew amplification

In addition you have no row explosion risk and you perform only one data scan of the table versus two with the inner join.

Real Cost Savings at Scale

On large production datasets of hundreds of millions to billions of rows, teams using this method routinely see:

- 3–10× runtime reductions

- 50–80% less shuffle I/O

- Significant cloud cost savings (fewer DBUs, fewer executor hours)

This is especially impactful in environments like Databricks or EMR, where shuffle-heavy workloads directly translate to higher spend.

By replacing self inner joins with groupBy + aggregation + filters, you allow Spark to do less work, move less data, finish faster and cost less.

So before writing your next self-join, ask yourself: “Do I really need two copies of this data?”

Chances are, you don’t.

Stop Using Self-Joins: How Using GroupBy and Filters Instead Can Save Massive Time and Cost in… was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.