Shipping Real Sales Forecasts: How Model Context Protocol Enables AI Agents to Use Your Data in Production

If you have worked on sales forecasting as a product manager, data analyst, or data scientist, this situation will sound familiar.

Most sales forecasts work well in notebooks but fall apart the moment they’re connected to real systems. Pipelines break when schemas change. Adding new inputs requires custom ETL. No one can fully explain why the forecast changed. Over time, people stop trusting the numbers and return to manual judgment.

So the forecast exists. But it never truly ships.

In retrospectives, the same issues surface again and again: the forecast lives in a notebook instead of the product, and adding new context such as promotions or weather means yet more custom ETL work.

This article is for product managers, data scientists, and ML engineers who have built forecasting models that work in isolation but fail to become reliable product features. By the end, you will understand how Model Context Protocol enables production-ready forecasting by turning models into system components with stable inputs, clear ownership, and human feedback loops. This is a conceptual and architectural deep dive.

Why Forecasts Fail to Reach Production

Most forecasting failures are not caused by weak models.

They happen because forecasting systems rely on brittle, point-to-point integrations that cannot evolve as the business changes. Each new data source introduces more custom logic. Each schema change risks breaking the pipeline. Over time, the forecast becomes fragile, opaque, and difficult to trust.

When forecasting depends on fragile data plumbing, teams stop iterating. Eventually, the forecast becomes an experiment rather than infrastructure.

How MCP Solves This in Forecasting Workflows

Model Context Protocol (MCP) addresses this exact gap.

Instead of ad-hoc, human-driven data assembly, MCP gives an agent a structured way to gather the same context automatically, consistently, and in one place.

The result is far less time spent coordinating systems and far more time spent using forecasts that actually stay up to date and usable.

What is MCP?

Model Context Protocol (MCP) is a standard way for AI agents to discover and call internal tools and data sources with structured context.

Instead of building one-off integrations between models and every database or API, MCP lets teams expose systems as tools with explicit contracts. Agents can then assemble the context they need without custom glue code for each workflow.

For forecasting, this matters because the hardest part usually isn’t the model; it’s gathering consistent inputs.
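To make "tools with explicit contracts" concrete, here is a minimal sketch in plain Python. It is not the MCP SDK or wire protocol; `ToolSpec`, `ToolRegistry`, and the stub handler are hypothetical names used only to illustrate the idea of listing tools and calling them by name against a declared input schema.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass(frozen=True)
class ToolSpec:
    """A named capability with an explicit input contract (hypothetical shape)."""
    name: str
    description: str
    input_schema: Dict[str, type]  # parameter name -> expected Python type
    handler: Callable[..., Any]


class ToolRegistry:
    """Stand-in for a tool server: agents list tools, then call them by name."""

    def __init__(self) -> None:
        self._tools: Dict[str, ToolSpec] = {}

    def register(self, spec: ToolSpec) -> None:
        self._tools[spec.name] = spec

    def list_tools(self) -> List[str]:
        return sorted(self._tools)

    def call(self, name: str, **kwargs: Any) -> Any:
        spec = self._tools[name]
        # Enforce the contract before touching the underlying system.
        for param, expected in spec.input_schema.items():
            if param not in kwargs:
                raise ValueError(f"{name}: missing argument '{param}'")
            if not isinstance(kwargs[param], expected):
                raise TypeError(f"{name}: '{param}' must be {expected.__name__}")
        return spec.handler(**kwargs)


def _fake_open_orders(product_line: str) -> List[dict]:
    # Placeholder data; a real handler would query the order system.
    return [{"product_line": product_line, "units": 120}]


registry = ToolRegistry()
registry.register(ToolSpec(
    name="get_open_orders",
    description="Open orders for one product line",
    input_schema={"product_line": str},
    handler=_fake_open_orders,
))
```

An agent would first call `registry.list_tools()` to see what is available, then `registry.call("get_open_orders", product_line="sparkling")`. A badly typed argument fails at the contract boundary rather than deep inside a pipeline.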

An End-to-End Forecasting Agent Architecture

Consider a deliberately narrow scope.

A short-term sales forecast for one product line, continuously updated, and visible to a planning team.

Trigger and Cadence (Product Ownership)

Every hour, or when new orders arrive, a forecasting agent runs. This cadence reflects how fresh the forecast needs to be in order to support decisions.
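The trigger rule can be written down as a small function. This is a sketch under assumptions: the one-hour interval comes from the text, while `should_run`, `RUN_INTERVAL`, and the `new_orders` counter are illustrative names, and a real deployment would likely delegate this to a scheduler or event queue.

```python
from datetime import datetime, timedelta
from typing import Optional

RUN_INTERVAL = timedelta(hours=1)  # assumed cadence, taken from "every hour"


def should_run(now: datetime, last_run: Optional[datetime], new_orders: int) -> bool:
    """Run on first start, when new orders arrived, or when the interval elapsed."""
    if last_run is None:
        return True
    if new_orders > 0:
        return True
    return now - last_run >= RUN_INTERVAL
```

Keeping the rule this explicit makes the cadence a product decision that can be changed in one place, without touching the model or the tools.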

Context Assembly via MCP

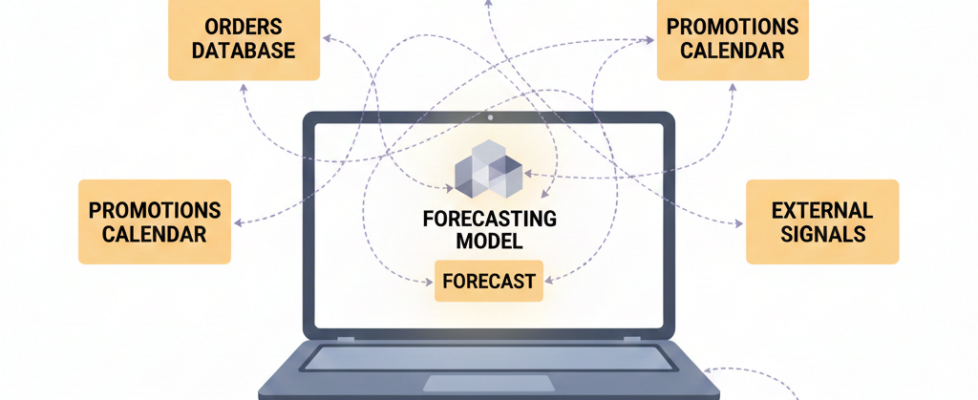

When the agent runs, it discovers and calls MCP-exposed tools such as:

• get_last_90_days_sales

• get_open_orders

• get_upcoming_promotions

• get_weather_signal

In this context, tools are named, callable capabilities with clear schemas and contracts, not raw data access.

The tool outputs are assembled into a structured context object, similar to a feature snapshot in offline ML.

Nothing magical yet. The agent is just doing what a human analyst would do, but automatically and consistently.
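A rough sketch of that assembly step follows. The four tool names come from the list above; the stub implementations, their return values, and the shape of the context dictionary are assumptions made for illustration, not part of MCP.

```python
from datetime import datetime, timezone
from typing import Any, Callable, Dict

# Stub tools standing in for MCP-exposed ones; the return values are made up.
TOOLS: Dict[str, Callable[[], Any]] = {
    "get_last_90_days_sales": lambda: [100.0] * 90,
    "get_open_orders": lambda: [{"units": 120}],
    "get_upcoming_promotions": lambda: [],
    "get_weather_signal": lambda: {"avg_temp_c": 21.5},
}


def assemble_context(tools: Dict[str, Callable[[], Any]], as_of: datetime) -> Dict[str, Any]:
    """Call every tool once and collect the outputs into one snapshot."""
    signals = {name: tool() for name, tool in sorted(tools.items())}
    return {"as_of": as_of.isoformat(), "signals": signals}


context = assemble_context(TOOLS, datetime(2024, 1, 1, tzinfo=timezone.utc))
```

The snapshot carries a timestamp and one entry per tool, so the same structure can be stored, compared across runs, and handed to the model unchanged.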

Forecast Generation

The agent passes the structured context object to a deployed forecasting model. This could be a classical time series model or a machine-learning-based approach.

The output is a forecast artifact. This might be a point forecast, a distribution with uncertainty bounds, or multiple scenario projections. Importantly, this forecast artifact is versioned alongside the context that produced it, enabling traceability and reproducibility.

MCP does not change the model itself. It changes how consistently and transparently the model receives its inputs.
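One way to version a forecast alongside its context is to fingerprint the context and store that ID on the artifact. The sketch below assumes a JSON-serializable context; `context_fingerprint`, `ForecastArtifact`, and the deliberately naive placeholder model are hypothetical and stand in for whatever model is actually deployed.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Any, Dict, List


def context_fingerprint(context: Dict[str, Any]) -> str:
    """Stable hash of the context: identical inputs always give the same ID."""
    canonical = json.dumps(context, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


@dataclass(frozen=True)
class ForecastArtifact:
    model_version: str
    context_id: str
    point_forecast: List[float]


def naive_forecast(context: Dict[str, Any], horizon: int = 7) -> ForecastArtifact:
    """Placeholder model: repeats the mean of the last 7 observed days."""
    sales = context["signals"]["get_last_90_days_sales"]
    recent = sales[-7:]
    level = sum(recent) / len(recent)
    return ForecastArtifact(
        model_version="naive-mean-v0",  # hypothetical version label
        context_id=context_fingerprint(context),
        point_forecast=[level] * horizon,
    )
```

With the context ID stored on every artifact, the question "why did the forecast change?" can start from a diff of two context snapshots rather than from guesswork.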

Surfacing Forecasts in the Product

The agent writes the forecast artifact into a surface people already use, such as a planning UI, alerting system, or internal API. In my case, this was Google Sheets.

Forecasts often go unused because they live in dashboards that no one checks. Writing forecasts into existing workflows makes them far more likely to be adopted and trusted.

Closing the Loop with Human Actions

The agent highlights meaningful changes.

“Forecast for next week is down 8 percent.”

It suggests possible drivers.

“A promotion was delayed. Consider adjusting inventory targets.”
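The "meaningful change" check can be as simple as a percentage threshold. In this sketch the 5 percent default and the function name `describe_change` are assumptions; the message wording mirrors the example above.

```python
from typing import Optional


def describe_change(previous: float, current: float, threshold_pct: float = 5.0) -> Optional[str]:
    """Return a short message when the forecast moved by at least threshold_pct."""
    if previous == 0:
        return None  # percentage change is undefined; handle separately in practice
    change_pct = (current - previous) / previous * 100
    if abs(change_pct) < threshold_pct:
        return None
    direction = "up" if change_pct > 0 else "down"
    return f"Forecast for next week is {direction} {abs(change_pct):.0f} percent."
```

For example, `describe_change(1000.0, 920.0)` produces the "down 8 percent" message, while a move from 1000 to 1010 stays below the threshold and returns `None`, so planners are not alerted to noise.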

When humans override or adjust forecasts, those actions are logged. Over time, these override logs become evaluation data. They reveal where the model consistently disagrees with operators and where additional context or features are needed.
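As a sketch of how override logs might become evaluation data, the snippet below records each override next to the model's value and computes the average signed adjustment. The `Override` record and `mean_override_pct` are hypothetical names, and the function assumes a non-empty log with non-zero model forecasts.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass(frozen=True)
class Override:
    week: str
    model_forecast: float
    human_forecast: float


def mean_override_pct(log: List[Override]) -> float:
    """Average signed adjustment, as a percentage of the model forecast."""
    return mean(
        (o.human_forecast - o.model_forecast) / o.model_forecast * 100
        for o in log
    )
```

A value that stays consistently positive or negative suggests a systematic bias, which is a hint that the model is missing context the operators have.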

At this point, forecasting becomes a living system rather than a static output.

A Short Practical Example

Consider a beverage company forecasting weekly demand for a new product line.

Before MCP

- Sales data lived in one warehouse

- Promotions lived in another system

- Weather came from an API someone wired manually

- Whenever schemas changed, the model broke

- Planners stopped trusting the dashboard and used gut judgment

After MCP

- Each signal became a named MCP tool with a clear contract

- The agent rebuilt the full context snapshot every day

- Forecasts were written directly into the planning sheet

- Overrides were logged and reviewed weekly
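What "a clear contract" buys in this example is that a schema change shows up as an explicit violation instead of a silently broken model. A minimal sketch, assuming a made-up contract for the sales tool (`SALES_CONTRACT` and `validate_rows` are illustrative names):

```python
from typing import Any, Dict, List

# Assumed contract for a sales tool: field name -> required type.
SALES_CONTRACT: Dict[str, type] = {"date": str, "units": int}


def validate_rows(rows: List[Dict[str, Any]], contract: Dict[str, type]) -> List[str]:
    """Return readable contract violations; an empty list means the rows conform."""
    problems = []
    for i, row in enumerate(rows):
        for field, expected in contract.items():
            if field not in row:
                problems.append(f"row {i}: missing '{field}'")
            elif not isinstance(row[field], expected):
                problems.append(f"row {i}: '{field}' is not {expected.__name__}")
    return problems
```

If the warehouse renames `units` to `qty`, the check reports `row 0: missing 'units'` at the tool boundary, and the owning team can fix the tool without the forecast consumers noticing.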

The result wasn’t just better accuracy; it was stability, transparency, and trust.

Defining Ownership Boundaries with MCP

Forecasting systems fail when ownership is unclear. Product, data, and ML teams make changes in isolation, breaking trust and alignment. MCP prevents this by enforcing a shared contract layer that separates responsibilities while keeping systems interoperable.

Product ownership includes

• When the agent runs

• Which forecast horizon matters

• Where results appear

• How users act on them

Data and ML ownership includes

• Which signals feed the model

• Feature engineering

• Model choice and evaluation

MCP sits between these layers, enforcing structure without collapsing responsibilities into a single team.
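One way to keep these boundaries visible is to give each side its own configuration object. This is only a sketch: the field names and values below are assumptions chosen to mirror the two lists above, not a prescribed MCP structure.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class ProductConfig:
    """Owned by product: when to run, which horizon, where results go."""
    run_every_minutes: int
    horizon_days: int
    output_surface: str


@dataclass(frozen=True)
class ModelConfig:
    """Owned by data and ML: which signals feed which model."""
    signal_tools: Tuple[str, ...]
    model_version: str


product = ProductConfig(run_every_minutes=60, horizon_days=7, output_surface="planning_sheet")
model = ModelConfig(
    signal_tools=("get_last_90_days_sales", "get_open_orders"),
    model_version="naive-mean-v0",  # hypothetical version label
)
```

Because the two objects are separate and immutable, a change to the forecast horizon and a change to the signal list go through different owners and different reviews.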

Takeaway: MCP Turns Forecasts into Product Infrastructure

When teams adopt MCP, forecasting stops being a fragile experiment.

Inputs become stable and discoverable. Context evolves without breaking pipelines. Forecasts appear where decisions are made. Human overrides become learning signals.

Most importantly, forecasting shifts from notebook-based analysis to reliable product infrastructure.

If you already have forecasting models but struggle to operationalize them, treating MCP as a first-class architectural layer may be the missing step between insight and impact.

AI assistance disclosure

Parts of this article, including some diagrams, were created with the assistance of AI tools based on user prompts. These were reviewed, edited, and validated by the author.

Shipping Real Sales Forecasts: How Model Context Protocol Enables AI Agents to Use Your Data in… was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.