Serve 10M+ agentic AI requests a day: Python-Rust API + WebAssembly + vLLM Backend

When RAG systems move from prototype to production, the architecture must solve four interrelated problems simultaneously.

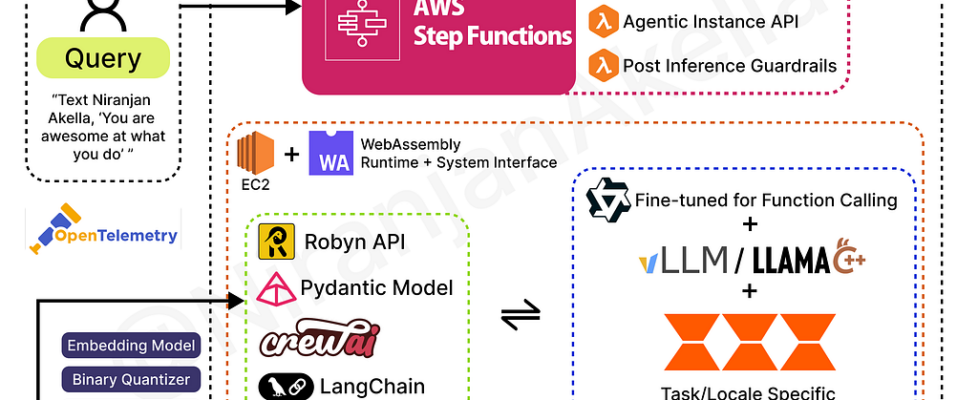

Those problems are orchestration of complex workflows, cost-efficient knowledge retrieval, adaptive model serving, and enterprise-scale search performance. The architecture illustrated above is one approach to solving each of them. In this article I show in detail how AWS Step Functions, EC2-hosted vLLM inference, Qdrant’s vector database, binary quantization, and LoRA adapters combine into a system capable of serving millions of queries while maintaining sub-second latencies and permitting the rapid, task-specific customization that production scale demands.

The Nervous System, Folks: AWS Step Functions + EC2

The foundation of any production RAG system is orchestration, the ability to coordinate multiple specialized services reliably, transparently, and at scale. AWS Step Functions provides this orchestration layer by managing the state and sequencing of distributed tasks without requiring custom queueing or state management infrastructure.

When a user submits a query to this system, that request doesn’t immediately flow to the LLM. Instead, Step Functions acts as a traffic controller, coordinating a multi-step workflow that includes guardrail evaluation, instance API invocations, and post-inference validation. Each step maintains explicit state: if a network failure occurs mid-pipeline, Step Functions records exactly where the process stopped and can resume from that point, a critical capability for long-running inference requests in production environments.

The architecture pairs Step Functions with EC2 instances running vLLM via a WebAssembly runtime and system interface integration. This pairing is a pragmatic choice. Outside its built-in AWS service integrations, Step Functions cannot directly invoke arbitrary services; it requires them to expose HTTPS endpoints or register as Activity Tasks. EC2 instances running containerized inference services satisfy this requirement while offering complete control over the compute environment. Unlike Lambda functions, which face cold-start penalties and memory constraints for large language models, EC2 instances persist across requests, preload models, and can be vertically scaled to accommodate models ranging from 7B to 70B+ parameters.

The workflow orchestrates three categories of tasks:

Pre-Inference Guardrails apply prompt injection detection and content policy filtering before inference, ensuring that the LLM never processes potentially adversarial input. This stage is computationally lightweight but security-critical.

Agentic Instance API calls invoke the actual inference engine. Step Functions handles request batching, timeout management, and retry logic, which are infrastructure concerns that are otherwise easy to mishandle and can cause cascading failures.

Post-Inference Guardrails validate the generated response against safety and consistency thresholds, flagging potentially problematic outputs before they reach end users.

This three-gate pattern transforms a single inference call into a fortified pipeline. Because Step Functions is a state machine, each gate’s decisions are logged, audited, and reversible, which is a significant operational advantage in regulated industries.
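To make the three gates concrete, here is a minimal sketch of how such a workflow might be written in Amazon States Language, built as a Python dict. The Lambda function names, the inference URL, and the connection ARN are placeholders I made up for illustration, and I am assuming the guardrails run as Lambda functions that raise an error on a violation (which fails the execution). Treat it as a starting point rather than the definition behind the diagram.

```python
import json

# Placeholder names and ARNs (assumptions, not from the article).
PRE_GUARDRAIL_FN = "pre-inference-guardrails"
POST_GUARDRAIL_FN = "post-inference-guardrails"
INFERENCE_URL = "https://inference.example.internal/v1/completions"
CONNECTION_ARN = "arn:aws:events:REGION:ACCOUNT:connection/EXAMPLE"

# Declarative retry policy shared by every gate.
RETRY = [{
    "ErrorEquals": ["States.Timeout", "States.TaskFailed"],
    "IntervalSeconds": 2,
    "MaxAttempts": 3,
    "BackoffRate": 2.0,
}]


def lambda_task(function_name, next_state=None):
    """Build a Task state that invokes a Lambda function."""
    state = {
        "Type": "Task",
        "Resource": "arn:aws:states:::lambda:invoke",
        "Parameters": {"FunctionName": function_name, "Payload.$": "$"},
        "OutputPath": "$.Payload",
        "TimeoutSeconds": 10,
        "Retry": RETRY,
    }
    state.update({"Next": next_state} if next_state else {"End": True})
    return state


definition = {
    "Comment": "Three-gate inference pipeline (illustrative sketch)",
    "StartAt": "PreInferenceGuardrails",
    "States": {
        "PreInferenceGuardrails": lambda_task(PRE_GUARDRAIL_FN, "InvokeInference"),
        # HTTP Task calling the HTTPS endpoint exposed by the EC2-hosted service.
        "InvokeInference": {
            "Type": "Task",
            "Resource": "arn:aws:states:::http:invoke",
            "Parameters": {
                "ApiEndpoint": INFERENCE_URL,
                "Method": "POST",
                "Authentication": {"ConnectionArn": CONNECTION_ARN},
                "RequestBody.$": "$",
            },
            "TimeoutSeconds": 60,
            "Retry": RETRY,
            "Next": "PostInferenceGuardrails",
        },
        "PostInferenceGuardrails": lambda_task(POST_GUARDRAIL_FN),
    },
}


def linear_path(defn):
    """Follow Next pointers from StartAt to list the gates in order."""
    path, name = [], defn["StartAt"]
    while name:
        path.append(name)
        name = defn["States"][name].get("Next")
    return path


if __name__ == "__main__":
    print(" -> ".join(linear_path(definition)))
    print(json.dumps(definition, indent=2)[:200], "...")
```

Keeping the retry policy in the definition, rather than in application code, is what lets the state machine record where a failed execution stopped.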

The Knowledge Retrieval Stack

Binary Quantization Meets Sparse-Dense Hybrid Search

Within the guardrailed workflow, the RAG retrieval component must answer a deceptively simple question:

Which documents from the knowledge base are most relevant to this query?

The naive approach is to embed the query as a single dense vector and retrieve its nearest neighbors, which sacrifices precision for speed. Production systems cannot afford this tradeoff.

The architecture addresses this through a three-phase retrieval pipeline: initial dense embedding with COIL-style token-level representations, binary quantization for ultra-fast candidate generation, and reranking for precision recovery.

COIL and Token-Level Embeddings

Information Density at the Token Level

Traditional embedding models pool token-level representations into a single dense vector, a process that discards fine-grained semantic information. Late-interaction models like ColBERT preserve token embeddings, enabling more precise query-document matching. The COIL model extends this principle by encoding queries and documents at the token level, supporting token-level matching where specific query terms align with specific document passages.

In Qdrant, this capability is implemented through multivector representations. Rather than storing a single 384-dimensional vector per document, the system stores the output token embeddings as multiple vectors, one per token, each capturing the semantic contribution of that individual token to the overall meaning. When a query arrives, it too is tokenized and embedded at the token level. Retrieval then applies MaxSim matching: for each query token, find the highest-scoring match among document tokens, then aggregate these matches to compute an overall relevance score.
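The MaxSim rule is easy to state in code. Below is a minimal pure-Python sketch that uses the dot product as the per-token similarity and a sum as the aggregation; the tiny two-dimensional vectors are made-up toy data, and a real system would run this over batched tensors inside the database rather than in Python loops.

```python
def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def maxsim(query_tokens, doc_tokens):
    """Late-interaction score: for each query token take its best-matching
    document token, then sum those best matches."""
    return sum(max(dot(q, d) for d in doc_tokens) for q in query_tokens)


# Toy token embeddings (hypothetical values).
query = [[1.0, 0.0], [0.0, 1.0]]
doc_a = [[1.0, 0.0], [0.5, 0.5]]
doc_b = [[0.0, 1.0], [0.0, 1.0]]

if __name__ == "__main__":
    print(maxsim(query, doc_a))  # 1.0 + 0.5 = 1.5
    print(maxsim(query, doc_b))  # 0.0 + 1.0 = 1.0
```

Here doc_a wins because both query tokens find a reasonable match in it, whereas doc_b matches only one of them.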

This approach delivers two compounded advantages. First, it enables more precise matching: a query asking about “bank lending rates” won’t inadvertently retrieve passages about “river banks” because the model can discriminate at the term level. Second, token-level embeddings can be quantized independently, allowing aggressive compression without proportional loss of semantic precision.

Binary Quantization and the 96% Quality Puzzle

Here is where engineering rigor meets surprising returns. Converting float32 embeddings to binary values, by thresholding normalized embeddings at zero to produce single-bit representations, reduces memory consumption by a factor of 32 and turns distance computation into a Hamming distance, which costs only a couple of CPU instructions per machine word (an XOR followed by a population count). This is something you can dig into further on your own.

The catch? Binary quantization alone degrades retrieval quality by approximately 4–10%, which can be significant for high-stakes applications. This is where the re-scoring step becomes essential.

The re-scoring pattern works as follows: First, execute an over-fetch strategy, retrieving perhaps the top-50 candidates using binary embeddings and Hamming distance, an operation so computationally cheap it essentially costs nothing. Then, for only these 50 candidates, recompute similarity using full-precision float32 query embeddings and quantized document vectors, effectively a second-stage ranking.
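The over-fetch and re-score pattern can be sketched in a few lines of pure Python. This is an illustration under assumptions, not Qdrant's internals: I pack sign bits into Python integers, use XOR plus a bit count for Hamming distance, and re-score by taking the float query against the binary document mapped back to -1/+1. The function names and the toy vectors are mine.

```python
def binarize(vec):
    """Threshold at zero and pack the sign bits into an int (bit i = vec[i] > 0)."""
    bits = 0
    for i, x in enumerate(vec):
        if x > 0:
            bits |= 1 << i
    return bits


def hamming(a, b):
    """Number of differing bits: XOR, then count the ones."""
    return bin(a ^ b).count("1")


def rescore(query, doc_bits):
    """Float query against the binary document, with bits mapped to -1/+1."""
    return sum(q * (1.0 if (doc_bits >> i) & 1 else -1.0)
               for i, q in enumerate(query))


def search(query, index, overfetch=50, top_k=10):
    """Stage 1: cheap Hamming over-fetch. Stage 2: re-score only the candidates."""
    q_bits = binarize(query)
    candidates = sorted(index, key=lambda doc_id: hamming(q_bits, index[doc_id]))
    candidates = candidates[:overfetch]
    ranked = sorted(candidates, key=lambda d: rescore(query, index[d]), reverse=True)
    return ranked[:top_k]


# Toy corpus (hypothetical values).
docs = {
    "a": [0.9, 0.8, -0.1, 0.7],
    "b": [-0.9, -0.8, 0.1, -0.7],
    "c": [0.9, -0.8, -0.1, 0.7],
}
index = {doc_id: binarize(vec) for doc_id, vec in docs.items()}
query = [1.0, 0.2, -0.3, 0.5]

if __name__ == "__main__":
    print(search(query, index, overfetch=2, top_k=1))  # ['a']
```

The second stage touches only the over-fetched candidates, which is why its cost stays flat as the collection grows.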

The result is remarkable: rescoring recovers approximately 96% of baseline retrieval quality while preserving the memory and speed advantages of quantization. For an enterprise managing hundreds of millions of documents, this can mean holding the index in roughly 6GB of memory rather than 200GB (the 32x reduction described above), with retrieval latencies in the 10–20ms range instead of 100–200ms.

Qdrant’s Production-Ready Integration

Qdrant, the open-source vector database underpinning this retrieval layer, is purpose-built in Rust for the exact problem this architecture solves: billion-scale vector search without sacrificing relevance or performance. The database supports binary quantization natively, allowing operators to define collections with binary indexes while maintaining full-precision vectors for rescoring.

Qdrant’s clustering infrastructure handles horizontal scaling through intelligent data partitioning: similar vectors are physically co-located on disk and in memory, dramatically reducing the search space for each query. For the vLLM+LangChain pipeline to execute efficiently, Qdrant exposes both simple search APIs and complex Query APIs supporting multi-stage retrieval: initial search over binary vectors, conditional filtering, and token-level reranking, all within a single API call.
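As a rough illustration of that single-call, multi-stage retrieval, the sketch below builds a request body for Qdrant's Query API (`POST /collections/{collection_name}/points/query`) as a plain Python dict. The named vectors `dense` and `tokens`, the `customer_id` payload key, and the oversampling value are assumptions of mine, and the field layout reflects my understanding of the API, so please check it against the current Qdrant reference before relying on it.

```python
import json


def build_query_body(query_dense, query_tokens, tenant_id, overfetch=50, top_k=10):
    """Prefetch candidates over the (binary-quantized) dense vector, filtered
    by tenant, then rerank them with the token-level multivector."""
    return {
        "prefetch": {
            "query": query_dense,
            "using": "dense",  # assumed name of the dense named vector
            "filter": {
                "must": [{"key": "customer_id", "match": {"value": tenant_id}}]
            },
            # Ask the engine to rescore quantized hits with original vectors.
            "params": {"quantization": {"rescore": True, "oversampling": 2.0}},
            "limit": overfetch,
        },
        "query": query_tokens,  # one vector per query token
        "using": "tokens",      # assumed name of the multivector field
        "limit": top_k,
        "with_payload": True,
    }


if __name__ == "__main__":
    body = build_query_body([0.1, 0.2], [[0.1, 0.2], [0.3, 0.4]], "acme")
    print(json.dumps(body, indent=2))
```

The body can be sent with any HTTP client; the official Python client offers an equivalent typed interface.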

Quantized Token Embeddings: The Efficiency Frontier

Qdrant’s support for token-level embeddings extends to quantization. A recent benchmark found that uint8 quantization of token embeddings, reducing each token vector from 4 bytes per dimension to 1 byte, achieved 25% memory savings compared to ColBERT while preserving near-baseline retrieval quality. For systems managing millions of documents, each with dozens of tokens, this compression is the difference between economically viable and prohibitively expensive.

Adaptive Model Serving: vLLM and Hot-Swap LoRA Adapters

Once the retrieval pipeline surfaces the top-10 most relevant documents, the system must generate a contextual response. This is where the vLLM inference engine, paired with hot-swappable LoRA adapters, becomes the innovation multiplier.

vLLM’s Continuous Batching and Throughput at Scale

vLLM is a production inference engine that solves a critical bottleneck in LLM serving: token-level scheduling. Traditional batch inference processes entire requests as atomic units, meaning a batch containing one short request (5 tokens) and one long request (100 tokens) must wait for both to complete before returning results. This batching mismatch causes severe tail-latency problems under high load.

vLLM’s continuous batching approach dynamically manages requests at the token level. As the model generates output, it produces one token per request per iteration. Requests that finish earlier are immediately evicted from the batch, and new incoming requests are immediately added, a process that eliminates idle GPU time and dramatically improves system utilization.
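A toy simulation makes the scheduling difference visible. The sketch below counts decoding iterations for a list of requests, where each number is how many output tokens a request needs. It models only slot occupancy (no prefill, no memory limits), so it is an illustration of the idea and not a model of vLLM's actual scheduler.

```python
def static_batching_steps(lengths, batch_size):
    """Requests are grouped into fixed batches; each batch runs until its
    longest request finishes."""
    return sum(max(lengths[i:i + batch_size])
               for i in range(0, len(lengths), batch_size))


def continuous_batching_steps(lengths, batch_size):
    """A finished request frees its slot immediately and a waiting request
    takes it on the next iteration."""
    waiting = list(lengths)
    running = []
    steps = 0
    while waiting or running:
        while waiting and len(running) < batch_size:
            running.append(waiting.pop(0))
        steps += 1  # one token generated per running request
        running = [remaining - 1 for remaining in running if remaining > 1]
    return steps


# Tokens still to generate per request (hypothetical workload).
workload = [5, 100, 5, 5]

if __name__ == "__main__":
    print(static_batching_steps(workload, batch_size=2))      # 105
    print(continuous_batching_steps(workload, batch_size=2))  # 100
```

Total steps shrink only modestly here, but the last two short requests finish at steps 10 and 15 under continuous batching, instead of waiting for the 100-token request to complete first.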

The performance improvements are substantial. In vendor benchmarks, continuous batching achieved up to 23x higher throughput than traditional static batching while reducing p50 latency by up to 80%. In the best case, this means the same GPU can serve up to 23x more requests, a transformative economic advantage for inference-heavy systems.

The engine achieves this through PagedAttention, a memory management technique that treats the KV cache (the stored key and value vectors used in attention computation) as paged memory, similar to OS-level virtual memory. By splitting the KV cache into fixed-size blocks that need not be contiguous in GPU memory, the system avoids fragmentation and over-reservation, so it can handle longer sequences and larger batches before running out of GPU memory.
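The paging idea, as described in the vLLM paper, is that each sequence keeps a block table mapping its logical KV positions to fixed-size physical blocks that are allocated on demand. The sketch below shows only that bookkeeping; the class name and the block size are my own choices, and real engines also handle block sharing and preemption.

```python
class PagedKVCache:
    """Toy block-table allocator for KV-cache pages (illustrative only)."""

    def __init__(self, num_blocks, block_size):
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))
        self.block_tables = {}  # seq_id -> list of physical block ids
        self.lengths = {}       # seq_id -> number of tokens stored

    def append_token(self, seq_id):
        """Reserve room for one more token, allocating a block only when
        the sequence's last block is full."""
        length = self.lengths.get(seq_id, 0)
        table = self.block_tables.setdefault(seq_id, [])
        if length == len(table) * self.block_size:
            if not self.free_blocks:
                raise MemoryError("no free KV blocks; an engine would preempt here")
            table.append(self.free_blocks.pop())
        self.lengths[seq_id] = length + 1

    def free(self, seq_id):
        """Return all blocks of a finished sequence to the free pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)


if __name__ == "__main__":
    cache = PagedKVCache(num_blocks=4, block_size=16)
    for _ in range(17):
        cache.append_token("a")   # 17 tokens need 2 blocks
    cache.append_token("b")       # 1 token needs 1 block
    print(len(cache.block_tables["a"]), len(cache.free_blocks))  # 2 1
    cache.free("a")
    print(len(cache.free_blocks))  # 3
```

Because blocks are claimed one at a time, a sequence never reserves memory for tokens it has not generated yet.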

AsyncLLM: Non-Blocking Concurrent Request Handling

The vLLM architecture includes AsyncLLMEngine, an asynchronous wrapper that permits concurrent request handling without blocking. When a request arrives, AsyncLLMEngine adds it to a request queue and returns an async generator. A background event loop, kicked when requests are available, continuously calls engine_step() to process requests and yield outputs.
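The queue-plus-generator pattern described here can be imitated with plain asyncio. The class below is a toy stand-in that I wrote to show the control flow (per-request queues, a background loop, and a step function that emits one token per active request); it is not vLLM's code, and the fake tokens are just labeled strings.

```python
import asyncio


class ToyAsyncEngine:
    """Toy imitation of an async engine: one background loop feeds
    per-request queues, and callers consume them as async generators."""

    def __init__(self):
        self._active = {}       # request_id -> [queue, remaining, emitted]
        self._loop_task = None

    async def generate(self, request_id, num_tokens):
        queue = asyncio.Queue()
        self._active[request_id] = [queue, num_tokens, 0]
        # Start the background loop if it is not already running.
        if self._loop_task is None or self._loop_task.done():
            self._loop_task = asyncio.create_task(self._run_loop())
        while (token := await queue.get()) is not None:
            yield token

    async def _run_loop(self):
        while self._active:
            self._engine_step()
            await asyncio.sleep(0)  # let consumers run between steps

    def _engine_step(self):
        """Emit one token for every active request; None marks completion."""
        for request_id, state in list(self._active.items()):
            queue, remaining, emitted = state
            if remaining == 0:
                queue.put_nowait(None)
                del self._active[request_id]
            else:
                queue.put_nowait(f"{request_id}-tok{emitted}")
                state[1], state[2] = remaining - 1, emitted + 1


async def collect(engine, request_id, num_tokens):
    return [tok async for tok in engine.generate(request_id, num_tokens)]


async def main():
    engine = ToyAsyncEngine()
    return await asyncio.gather(collect(engine, "a", 2), collect(engine, "b", 3))


if __name__ == "__main__":
    print(asyncio.run(main()))
```

Both requests advance in the same step, so neither caller blocks the other while waiting for its next token.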

Critically for the agentic AI high-level architecture (HLA), AsyncLLMEngine supports request-level LoRA loading. This means different requests can use different task-specific adapters without reloading the base model. A request marked as “customer support” loads the customer-support LoRA; the next request marked as “technical documentation” loads the documentation-writing LoRA. The base model remains in GPU memory while adapters, typically 10–100MB each, are loaded into the remaining capacity.

This capability is what enables the “Task/Locale Specific LoRA Adapter Swapping” box in the architecture diagram. Rather than maintaining separate GPU instances per task, a single 7B-parameter base model can host dozens of task-specific adapters, each fine-tuned for optimal performance on its specialty, yet sharing the same underlying weights.
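One way to picture adapter swapping is as a small cache sitting next to the base model: adapters stay resident while they are in use, and the least recently used one is evicted when space runs out. The sketch below shows that policy in pure Python. The eviction strategy, the class name, and the `load_fn` loader are assumptions for illustration and do not describe how vLLM manages adapters internally.

```python
from collections import OrderedDict


class AdapterCache:
    """Keep at most `capacity` adapters resident, evicting the least
    recently used one when a new adapter must be loaded."""

    def __init__(self, capacity, load_fn):
        self.capacity = capacity
        self.load_fn = load_fn          # hypothetical loader: name -> weights
        self._resident = OrderedDict()  # adapter name -> weights, in LRU order

    def get(self, name):
        if name in self._resident:
            self._resident.move_to_end(name)        # mark as recently used
        else:
            if len(self._resident) >= self.capacity:
                self._resident.popitem(last=False)  # evict least recently used
            self._resident[name] = self.load_fn(name)
        return self._resident[name]

    def resident(self):
        return list(self._resident)


loads = []  # records every (simulated) load from storage


def fake_load(name):
    loads.append(name)
    return f"weights:{name}"


cache = AdapterCache(capacity=2, load_fn=fake_load)
for task in ["support", "docs", "support", "billing"]:
    cache.get(task)

if __name__ == "__main__":
    print(loads)             # ['support', 'docs', 'billing']
    print(cache.resident())  # ['support', 'billing']
```

The repeated "support" request hits the cache, so only three loads happen for four requests.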

LoRA: Efficient Task Specialization

The figures in this section are sourced from other papers and blog posts.

Low-Rank Adaptation (LoRA) is a parameter-efficient fine-tuning technique that reduces trainable parameters to roughly 1–2% of the full model. Instead of updating all 7B parameters during fine-tuning, only two small low-rank matrices (typically rank 8–64) are trained for each adapted weight matrix. During inference, the contribution of these adapter matrices is added to the base model’s output, an operation that costs ~5% additional latency.

The memory and cost implications are revolutionary. Fully fine-tuning a 7B model requires storing gradients and optimizer states for all 7B parameters, often totaling 40–60GB of GPU memory. LoRA shrinks the trainable state (adapter weights, their gradients, and optimizer states) to perhaps 100–200MB on top of the frozen base weights, enabling fine-tuning on consumer GPUs.
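The parameter savings follow from simple arithmetic. For a weight matrix of shape d_out x d_in, LoRA trains a rank-r pair B (d_out x r) and A (r x d_in), so the trainable count is r * (d_in + d_out) instead of d_in * d_out. The sketch below works this out for a single square matrix, assuming a hidden size of 4096 (typical of 7B-class models, but my assumption) and rank 32.

```python
def full_params(d_out, d_in):
    """Parameters updated when fine-tuning the whole weight matrix."""
    return d_out * d_in


def lora_trainable_params(d_out, d_in, rank):
    """Parameters in the low-rank pair: B is d_out x rank, A is rank x d_in."""
    return rank * (d_in + d_out)


HIDDEN = 4096  # assumed hidden size
RANK = 32      # within the 8-64 range mentioned above

full = full_params(HIDDEN, HIDDEN)
lora = lora_trainable_params(HIDDEN, HIDDEN, RANK)
fraction = lora / full

if __name__ == "__main__":
    print(full)               # 16777216
    print(lora)               # 262144
    print(f"{fraction:.2%}")  # 1.56%
```

At rank 8 the same matrix needs only 65,536 trainable parameters, about 0.4% of the full count.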

For the agentic system, this unlocks a new operational model: organizations can maintain a shared base model and rapidly develop specialized adapters for specific domains, tasks, or customer segments. A financial services organization might maintain separate adapters for equity research, credit analysis, and regulatory compliance, each optimized for its domain, all running on the same inference cluster.

Moreover, operators can A/B test adapters at inference time. Requests routed to variant A use adapter A; variant B uses adapter B. After gathering user feedback or monitoring metrics, the superior adapter is promoted and others are decommissioned. This rapid experimentation cycle accelerates model improvement.
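A common way to split traffic between adapters is deterministic hashing, so that a given user always lands on the same variant. The sketch below is one such scheme under my own assumptions (the experiment name, adapter names, and 50/50 weights are hypothetical); it is not a feature of vLLM itself, just routing logic that would sit in front of it.

```python
import hashlib


def assign_adapter(user_id, variants, experiment="adapter-ab"):
    """Map a user to an adapter deterministically.

    variants: list of (adapter_name, weight) pairs whose weights sum to 1.0.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # roughly uniform in [0, 1)
    cumulative = 0.0
    for name, weight in variants:
        cumulative += weight
        if bucket < cumulative:
            return name
    return variants[-1][0]  # guard against floating-point rounding


variants = [("adapter-a", 0.5), ("adapter-b", 0.5)]

if __name__ == "__main__":
    share_a = sum(assign_adapter(f"user-{i}", variants) == "adapter-a"
                  for i in range(1000))
    print(f"adapter-a received {share_a} of 1000 users")
```

Including the experiment name in the hash means a new experiment reshuffles users instead of reusing the previous split.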

Knowledge Base Architecture: Qdrant at the Enterprise Scale

Behind the guardrails and inference engine lies the knowledge retrieval system, which must support the multitenancy and scale dynamics characteristic of production enterprise AI.

Tiered Multitenancy: Solving the Noisy Neighbor Problem

Consider a SaaS platform where 1,000 customers access a shared Qdrant cluster. In the traditional multitenancy model, each customer’s data resides in the same collection, differentiated only by a payload field (e.g., customer_id). Queries filter by customer ID to isolate relevant documents.

This works for small or uniformly sized customers. When a single customer represents 30% of the data and receives 60% of query traffic, the cluster’s performance degrades for all others. This is the classic “noisy neighbor” problem.

Qdrant 1.16 introduced tiered multitenancy, a feature that elegantly solves this. Collections now support user-defined sharding, allowing operators to create named shards within a collection. Large tenants are isolated into dedicated shards with their own HNSW indexes, while small tenants share a “fallback” shard.

Crucially, requests remain unified. A query specifies the customer ID, and Qdrant automatically routes it to either the dedicated shard (if one exists) or the fallback shard (if not). As a customer grows, an operator can promote them to a dedicated shard via a single API call, which streams their data into the new shard while maintaining read/write consistency.
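The routing and promotion logic can be modeled with a small in-memory toy. This is not the Qdrant API: the class, the shard naming (a dedicated shard named after its tenant), and the list-based storage are all assumptions made to show the behavior that callers never need to know which shard a tenant lives in.

```python
FALLBACK_SHARD = "fallback"


class TenantRouter:
    """Toy model of tiered multitenancy: dedicated shards for promoted
    tenants, one shared fallback shard for everyone else."""

    def __init__(self):
        self.dedicated = set()
        self.shards = {FALLBACK_SHARD: {}}  # shard -> {tenant_id: [points]}

    def shard_for(self, tenant_id):
        return tenant_id if tenant_id in self.dedicated else FALLBACK_SHARD

    def upsert(self, tenant_id, point):
        shard = self.shards[self.shard_for(tenant_id)]
        shard.setdefault(tenant_id, []).append(point)

    def search(self, tenant_id):
        """Same call for every tenant; routing happens behind the scenes."""
        return self.shards[self.shard_for(tenant_id)].get(tenant_id, [])

    def promote(self, tenant_id):
        """Move a tenant's points from the fallback shard to a dedicated one."""
        points = self.shards[FALLBACK_SHARD].pop(tenant_id, [])
        self.shards[tenant_id] = {tenant_id: points}
        self.dedicated.add(tenant_id)


if __name__ == "__main__":
    router = TenantRouter()
    router.upsert("acme", "p1")
    router.upsert("small-co", "p2")
    print(router.shard_for("acme"))  # fallback
    router.promote("acme")
    print(router.shard_for("acme"))  # acme
    print(router.search("acme"), router.search("small-co"))  # ['p1'] ['p2']
```

The caller's `search` call is identical before and after promotion, which is the property that keeps requests unified.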

For global companies and startups alike, this architecture delivers:

- Performance isolation: Large customers don’t degrade service for others

- Cost control: Small customers avoid paying for dedicated infrastructure

- Operational simplicity: Promotion/demotion happens transparently.

ACORN: Filtered Search for Complex Constraints

Production systems frequently implement complex filtering:

Query: “Show me technical whitepapers from the last 90 days authored by top-cited researchers in machine learning.”

Without indexing support, such queries require scanning most of the collection to apply filters, a latency killer.

Qdrant 1.16 introduced ACORN (ANN Constraint-Optimized Retrieval Network), a search algorithm that improves the quality of filtered vector search when multiple filters with low selectivity apply. The algorithm observes that when most direct neighbors in the HNSW graph are filtered out (because they don’t match constraints), relevance suffers dramatically, because the search must settle for suboptimal candidates.

ACORN’s solution: when direct neighbors are filtered out, traverse to neighbors of neighbors, examining second-hop candidates. This expands the search space and often recovers high-quality results, though at a computational cost.
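The second-hop idea can be shown on a tiny adjacency list. The function below is a simplified sketch of just that expansion step, with a made-up graph and filter; the real algorithm in Qdrant works inside HNSW traversal and has more machinery than this.

```python
def direct_neighbors(graph, node, passes_filter):
    """Baseline: keep only the direct neighbors that satisfy the filter."""
    return [nb for nb in graph[node] if passes_filter(nb)]


def two_hop_neighbors(graph, node, passes_filter):
    """When a direct neighbor is filtered out, look through it to its own
    neighbors and keep those that satisfy the filter."""
    seen = {node}
    result = []
    for nb in graph[node]:
        if nb in seen:
            continue
        seen.add(nb)
        if passes_filter(nb):
            result.append(nb)
            continue
        for nb2 in graph[nb]:  # second hop through the filtered-out neighbor
            if nb2 not in seen and passes_filter(nb2):
                seen.add(nb2)
                result.append(nb2)
    return result


# Hypothetical graph: "q" is the current node during traversal.
graph = {
    "q": ["a", "b"],
    "a": ["c", "d"],
    "b": ["e"],
    "c": [], "d": [], "e": [],
}
allowed = {"b", "d"}  # points that match the query's filter

if __name__ == "__main__":
    print(direct_neighbors(graph, "q", allowed.__contains__))   # ['b']
    print(two_hop_neighbors(graph, "q", allowed.__contains__))  # ['d', 'b']
```

The extra candidate "d" is reachable only through the filtered-out node "a", which is exactly the case where plain filtered traversal loses recall.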

The trade-off is explicit: ACORN is 2–10x slower than standard search but improves recall by 10–40% for highly constrained queries. Operators enable ACORN on a per-query basis, deciding case-by-case whether the accuracy gain justifies the latency cost. For a legal discovery search with strict filtering, ACORN’s accuracy gain is worth the latency; for a user-facing autocomplete, it likely isn’t.

Deployment Flexibility: Cloud, Hybrid, On-Premise

The Qdrant ecosystem acknowledges that enterprise data governance and compliance requirements vary wildly. Three deployment models address this:

Qdrant Cloud is a fully managed service where Qdrant operates the infrastructure and handles scaling, backups, and upgrades. This carries the lowest operational burden but sacrifices data residency control.

Qdrant Hybrid Cloud deploys Qdrant as a managed service on customer-controlled Kubernetes infrastructure. The actual database runs in the customer’s cloud account or on-premise data center, while Qdrant Cloud provides the management plane. This model is increasingly preferred by enterprises with strict data residency or sovereignty requirements.

Open-Source Qdrant runs on customer infrastructure with no managed service. This option provides maximum control and transparency, ideal for organizations with mature DevOps practices.

All three models support the same API, feature set, and performance characteristics, allowing organizations to migrate between deployment options as their requirements evolve.

Ranking as the Decision Layer: Why Reranking Matters

The retrieval pipeline produces 10–50 candidate documents ranked by relevance. The re-ranking stage is often overlooked, but it is where precision engineering separates production systems from prototypes.

A re-ranker, typically a fine-tuned cross-encoder such as a BERT-based model, receives the query and each candidate document and independently scores the relevance of that specific pair. Unlike the retriever (which approximates and prioritizes speed), the re-ranker can invest 100–200ms per query to evaluate each candidate carefully.

In practice, the two-stage pipeline works as follows:

1. Retrieve the top-50 candidates using Qdrant with binary quantization (extremely fast).

2. Re-rank these 50 using a cross-encoder (moderately fast).

3. Pass the top-10 to the LLM for generation.
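The re-ranking step in this list reduces to scoring each (query, document) pair and keeping the best few. In the sketch below the pair scorer is a deliberately crude word-overlap function standing in for a cross-encoder, purely so the example runs without a model; the function names and sample sentences are mine.

```python
def rerank(query, candidates, score_pair, top_k=10):
    """Score every (query, candidate) pair and keep the top_k candidates."""
    scored = [(score_pair(query, doc), doc) for doc in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def overlap_score(query, doc):
    """Toy stand-in for a cross-encoder: the fraction of query words that
    appear in the document."""
    query_words = set(query.lower().split())
    return len(query_words & set(doc.lower().split())) / len(query_words)


# Pretend these came back from the fast first-stage retriever.
candidates = [
    "river banks erode in spring",
    "bank lending rates rose this quarter",
    "central bank policy",
]

if __name__ == "__main__":
    for doc in rerank("bank lending rates", candidates, overlap_score, top_k=2):
        print(doc)
```

Swapping `overlap_score` for a real cross-encoder call changes only the scoring function; the surrounding pipeline stays the same.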

The performance gains are substantial. In a benchmark by Google, adding a re-ranking layer improved answer accuracy by 30%, especially for ambiguous queries where semantic understanding matters more than keyword matching.

Re-rankers also enable multi-source fusion: if the RAG system queries both a semantic store (Qdrant) and a keyword store (Elasticsearch), re-ranking harmonizes their scores into a unified ranking, ensuring that the best results, regardless of source, surface first.

Critically for this architecture, re-ranking can be parallelized. While a single request is re-ranking its top-50 candidates, other requests can retrieve or generate in parallel. vLLM’s continuous batching and Qdrant’s horizontal scaling mean that re-ranking contention rarely becomes a bottleneck.

The Complete Production Loop

Now, let’s trace a query through the entire system:

- User submits query to the API. Step Functions immediately accepts it and triggers the pre-inference guardrails state.

- Pre-inference guardrails check for injection attacks, profanity, or policy violations. If checks pass, the request proceeds to retrieval.

- Query embedding: The query is embedded using a retrieval model. If token-level embeddings are used, the output token embeddings are captured.

- Candidate retrieval: Qdrant retrieves the top-50 documents using binary quantization and Hamming distance. If ACORN is enabled for highly constrained queries, it traverses to second-hop neighbors when needed.

- Re-ranking: The top-50 candidates are re-scored using full-precision embeddings and optionally re-ranked using a cross-encoder model.

- LLM inference: The top-10 documents and the user query are passed to vLLM. The appropriate LoRA adapter (if any) is loaded into the remaining GPU capacity. vLLM batches this request with other concurrent requests and generates a response via continuous batching.

- Post-inference guardrails check the response for safety violations, hallucinations, or inconsistency with the retrieved context.

- Response returned to the user via Step Functions.

This entire loop, from submission to response, executes in 300–500ms for typical queries on production hardware, with 99th percentile latencies under 1.5 seconds even during traffic spikes.

Economic Implications and the Scale Story

A frequently overlooked aspect of production RAG is cost dynamics. Naive implementations scale linearly or worse with query volume: more queries mean more inference, and more inference means more GPU instances. Binary quantization, continuous batching, and tiered multitenancy compress this relationship dramatically.

Consider a hypothetical case: an organization manages 100 million documents and serves 100,000 queries per day. Using standard float32 embeddings with traditional batching, this requires approximately 200GB of memory for the vector index alone, necessitating multiple instances totaling ~$50,000+ per month.

With binary quantization, the index shrinks to 6.5GB, fitting on a single high-memory instance. Continuous batching lets that instance handle 5x the throughput, and tiered multitenancy allows profitable service to diverse customers.
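The index sizes in this example can be sanity-checked with back-of-the-envelope arithmetic. The article does not state the embedding dimensionality, so the sketch below assumes 512 dimensions, which happens to reproduce figures close to the ones quoted; it counts raw vector storage only and ignores graph and payload overhead.

```python
def index_size_gb(num_vectors, dims, bits_per_dim):
    """Raw vector storage in gigabytes (10^9 bytes), ignoring index overhead."""
    return num_vectors * dims * bits_per_dim / 8 / 1e9


NUM_DOCS = 100_000_000  # from the hypothetical case above
DIMS = 512              # assumed dimensionality, not stated in the article

float32_gb = index_size_gb(NUM_DOCS, DIMS, bits_per_dim=32)
binary_gb = index_size_gb(NUM_DOCS, DIMS, bits_per_dim=1)

if __name__ == "__main__":
    print(f"float32: {float32_gb:.1f} GB")  # 204.8 GB
    print(f"binary:  {binary_gb:.1f} GB")   # 6.4 GB
    print(f"ratio:   {float32_gb / binary_gb:.0f}x")  # 32x
```

Keeping full-precision vectors on disk for rescoring adds storage cost but not RAM, which is where the savings matter.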

The economic result: an 80% cost reduction while improving latency.

That’s a wrap, folks!

Do reach out to me for possible collaborations.

- Github

Serve 10M+ agentic AI requests a day: Python-Rust API + WebAssembly + vLLM Backend was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.