RAG Doesn’t Neutralize Prompt Injection. It Multiplies It.

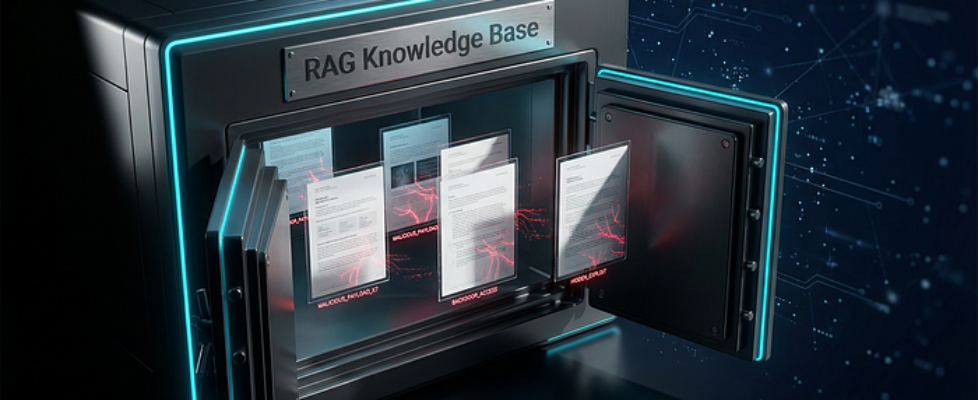

Author(s): AhmedAbdelmenem Originally published on Towards AI. Every retrieved document, web page, and ‘trusted’ data source becomes a new attack vector, and most security teams don’t know it yet. The sales pitch was simple. Connect your LLM to trusted internal documents, and you’d get accurate, grounded responses without the hallucination problem. Retrieval-Augmented Generation would make AI safer by limiting what the model could access. The vault looks secure. The documents inside tell a different story.The article discusses the significant security risks posed by Retrieval-Augmented Generation (RAG) systems, highlighting that these systems, instead of reducing the attack surface, actually expand it by allowing multiple entry points through trusted data sources and documents. Researchers discovered that many RAG implementations are vulnerable to indirect prompt injections, where hidden instructions in retrieved content can compromise outputs without traditional malware or phishing attacks. The security community must recognize that the very features marketed as protections can also introduce vulnerabilities, and that understanding these risks is crucial as organizations increasingly integrate RAG systems into their workflows. Read the full blog for free on Medium. Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor. Published via Towards AI