Model Context Protocol (MCP) — Why Your AI Agents Keep Colliding

Why your agents duplicate work, miss updates, and force humans to step in — and how a shared, versioned context fixes it

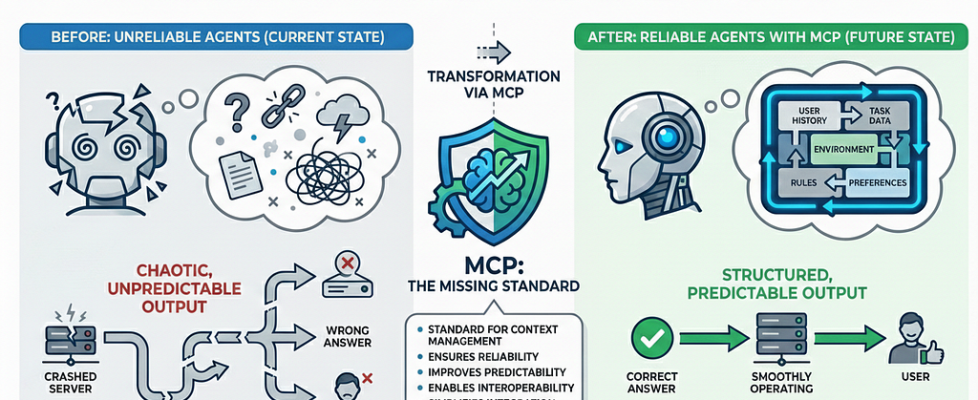

TL;DR: Multi-agent systems don’t fail because models are dumb. They fail because agents don’t share a single, versioned understanding of reality. Model Context Protocol (MCP) is a lightweight, implementation-agnostic contract for sharing structured context, tracking state history, and coordinating actions. Use MCP to reduce duplicate actions, improve auditability, and make multi-agent workflows production-safe.

A Failure You Feel in Your Gut

Two agents charged the same customer for the same order — and your ops team only found out after a complaint landed in the inbox.

That single incident captures what really breaks when agents don’t share a reliable, versioned understanding of reality.

1. Introduction

Imagine a logistics fleet with three agents:

- Agent A (Tracker) updates package location and ETA.

- Agent B (Inventory) updates stock and dispatch schedules.

- Agent C (Notifier) sends SMS/email to drivers and customers.

Without a reliable shared context, Agent A changes an ETA, Agent B never sees it and dispatches the truck, and Agent C notifies the customer with the old ETA. The package is delayed, inventory is wrong, customers are angry, and humans must intervene.

That’s not a model error. It’s a coordination error. Every agent acted rationally from its own memory — and the system as a whole failed.

This article introduces Model Context Protocol (MCP): a practical specification for representing, sharing, versioning, and reconciling context between agents so workflows behave like a single, auditable system instead of a collection of isolated actors.

2. The concrete problems MCP solves

Short, real-world failure modes:

- Duplicated work: Two agents perform the same irreversible action (charge, ship, provision).

- Stale decisions: Agents act on outdated facts (revoked approvals, changed budgets).

- Untraceable state: No clear history of why a decision occurred.

- Human fallback: Reconciliation by humans becomes the default safety valve.

If any of these occur frequently in your stack, MCP is worth adding.

3. What MCP actually is (and what it isn’t)

MCP is:

- A structured context schema (how agents represent facts).

- A versioned commit model (how changes are recorded, with history).

- A set of coordination rules (who can write, merge, or reject).

- Lightweight APIs for publish/read/subscribe/merge.

MCP is not:

- A replacement for LLMs, orchestration engines, or business logic.

- A single implementation: it’s an interoperability protocol you can implement with DB-backed services, message buses, or on-device stores.

4. The MCP data model

This is intentionally small so teams can iterate:

    {
      "context_id": "string",            // e.g., "order-6942"
      "commit_id": "string",             // hash or uuid
      "parents": ["string"],             // parent commit_ids for DAG history
      "timestamp": "2026-01-21T09:12:00+05:30",
      "author_agent": "string",          // agent id
      "intent": "string",                // short intent: "capture_payment"
      "payload": { "type": "object" },   // domain-specific data
      "metadata": {
        "source": "string",
        "priority": "low|medium|high"
      },
      "flags": { "conflict": false },
      "signature": "string"              // optional Ed25519 signature
    }

Key design choices:

- parents lets commits form a DAG, so concurrent edits can be merged explicitly.

- signature supports non-repudiation & auditing.

- Keep payload schema pluggable per context type (order, shipment, approval).
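To make the schema concrete, here is a minimal Python sketch of a commit record. It is an illustration under assumptions, not a reference implementation: the `Commit` class name, the choice of SHA-256 over canonical JSON for `commit_id`, and the example agents and payloads are all hypothetical. The schema itself only says the id is a "hash or uuid".

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass(frozen=True)
class Commit:
    """One immutable entry in a context's history (field names follow the schema)."""
    context_id: str
    author_agent: str
    intent: str
    payload: dict
    timestamp: str                      # ISO 8601 string, e.g. "2026-01-21T09:12:00+05:30"
    parents: tuple = ()                 # parent commit_ids; empty for the first commit
    metadata: dict = field(default_factory=dict)

    @property
    def commit_id(self) -> str:
        # Assumption: the id is a SHA-256 over canonical JSON, so equal content
        # always produces the same id. A uuid would work as well.
        canonical = json.dumps(asdict(self), sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical example: the tracker records an ETA, then inventory builds on it.
root = Commit("order-6942", "tracker", "update_eta",
              {"eta": "2026-01-21T12:00:00+05:30"}, "2026-01-21T09:12:00+05:30")
child = Commit("order-6942", "inventory", "schedule_dispatch",
               {"truck": "T-17"}, "2026-01-21T09:15:00+05:30",
               parents=(root.commit_id,))
```

Deriving the id from content means the parent link in `child` also pins the exact content of `root`, which is what makes the history tamper-evident.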

5. Minimal API surface

Design the API to be small and cache-friendly.

    POST /contexts                        // Create new commit (body: context JSON) -> returns commit_id
    GET  /contexts/{context_id}           // Get latest commit + summary
    GET  /contexts/{context_id}/history   // Returns DAG of commits
    POST /contexts/{context_id}/merge     // Request an automated merge
    WS   /subscribe?context={id}          // Push real-time commit notifications

Usage patterns:

- Agents read latest commit before acting.

- Agents post commits for state changes.

- Subscribers receive notifications and reconcile as needed.
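The three usage patterns can be tried out against an in-memory stand-in before any service exists. The sketch below is a toy under assumptions: the `InMemoryContextStore` class and its method names are hypothetical, commits are plain dicts, and subscriptions are synchronous callbacks rather than WebSocket pushes.

```python
from collections import defaultdict
from typing import Callable, Optional


class InMemoryContextStore:
    """Toy stand-in for the service behind the endpoints; names are illustrative."""

    def __init__(self):
        self._commits = {}                      # commit_id -> commit dict
        self._heads = {}                        # context_id -> latest commit_id
        self._subscribers = defaultdict(list)   # context_id -> callbacks

    def publish(self, commit: dict) -> str:
        """Mirrors POST /contexts: store, advance the head, notify subscribers."""
        self._commits[commit["commit_id"]] = commit
        self._heads[commit["context_id"]] = commit["commit_id"]
        for callback in self._subscribers[commit["context_id"]]:
            callback(commit)
        return commit["commit_id"]

    def latest(self, context_id: str) -> Optional[dict]:
        """Mirrors GET /contexts/{context_id}."""
        head = self._heads.get(context_id)
        return self._commits[head] if head is not None else None

    def history(self, context_id: str) -> list:
        """Mirrors GET /contexts/{context_id}/history (insertion order)."""
        return [c for c in self._commits.values() if c["context_id"] == context_id]

    def subscribe(self, context_id: str, callback: Callable[[dict], None]) -> None:
        """Mirrors WS /subscribe?context={id} with a plain callback."""
        self._subscribers[context_id].append(callback)


store = InMemoryContextStore()
notifications = []
store.subscribe("order-6942", notifications.append)      # the notifier listens
store.publish({"context_id": "order-6942", "commit_id": "c1",
               "parents": [], "payload": {"eta_minutes": 30}})
head = store.latest("order-6942")                         # read before acting
store.publish({"context_id": "order-6942", "commit_id": "c2",
               "parents": [head["commit_id"]], "payload": {"eta_minutes": 45}})
```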

6. Merge rules — simple example

Merging concurrent commits is where many systems hide subtle bugs. Keep it explicit.

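    import uuid

    # domain_specific_merge, store_commit and notify_human_review are supplied
    # by the application; commits are dicts shaped like the data model above.
    def merge_commits(commit_a, commit_b):
        # Linear history: one commit is the direct child of the other, so keep
        # the child. (Timestamps from different agents are not a reliable order.)
        if commit_b["commit_id"] in commit_a["parents"]:
            return commit_a
        if commit_a["commit_id"] in commit_b["parents"]:
            return commit_b

        # Concurrent edits: defer to the domain-specific merge.
        merged_payload, conflict_flag = domain_specific_merge(
            commit_a["payload"], commit_b["payload"]
        )
        new_commit = {
            "context_id": commit_a["context_id"],
            "commit_id": str(uuid.uuid4()),
            "parents": [commit_a["commit_id"], commit_b["commit_id"]],
            "payload": merged_payload,
            "flags": {"conflict": conflict_flag},
        }
        store_commit(new_commit)
        if conflict_flag:
            notify_human_review(new_commit)
        return new_commit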
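The direct-parent comparison in the snippet above only recognises a one-step history. A more general check walks the DAG to see whether one commit is reachable from the other. The following is a sketch under assumptions: commits live in a dict keyed by `commit_id`, and the helper names `is_ancestor` and `are_concurrent` are hypothetical.

```python
def is_ancestor(commits: dict, ancestor_id: str, descendant_id: str) -> bool:
    """True if ancestor_id is reachable from descendant_id by following parents.

    A commit counts as its own ancestor, which keeps the concurrency test simple.
    """
    stack = [descendant_id]
    seen = set()
    while stack:
        current = stack.pop()
        if current == ancestor_id:
            return True
        if current in seen:
            continue
        seen.add(current)
        stack.extend(commits[current]["parents"])
    return False


def are_concurrent(commits: dict, a_id: str, b_id: str) -> bool:
    """Two commits are concurrent when neither descends from the other."""
    return not is_ancestor(commits, a_id, b_id) and not is_ancestor(commits, b_id, a_id)


# Hypothetical history: root -> a -> c, and root -> b on a parallel branch.
example_dag = {
    "root": {"parents": []},
    "a": {"parents": ["root"]},
    "b": {"parents": ["root"]},
    "c": {"parents": ["a"]},
}
```

Only pairs for which `are_concurrent` returns True need the domain merge; everything else already has a well-defined order.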

Rules to implement in domain_specific_merge:

- Numeric fields: sums or max/min depending on semantics.

- Idempotent operations: attach an operation id to each action and deduplicate on it, so replaying the same operation has no further effect.

- Non-mergeable fields (billing confirmation): force human review.
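One way these rules might look in code is sketched below. The field names (`eta_minutes`, `price_cap`, `applied_operation_ids`, `billing_confirmation`) and the rule tables are assumptions made for illustration; a real context type would define its own. Sums are left out because they are only safe when both sides hold independent increments rather than absolute values.

```python
# Hypothetical per-field rules for an "order" context.
NUMERIC_RULES = {"eta_minutes": max, "price_cap": min}
UNION_FIELDS = {"applied_operation_ids"}   # operation ids merge as a set union


def domain_specific_merge(payload_a: dict, payload_b: dict):
    """Return (merged_payload, conflict_flag) for two concurrent payloads."""
    merged = {}
    conflict = False
    for key in sorted(set(payload_a) | set(payload_b)):
        in_a, in_b = key in payload_a, key in payload_b
        if in_a and not in_b:
            merged[key] = payload_a[key]
        elif in_b and not in_a:
            merged[key] = payload_b[key]
        elif payload_a[key] == payload_b[key]:
            merged[key] = payload_a[key]
        elif key in NUMERIC_RULES:
            merged[key] = NUMERIC_RULES[key](payload_a[key], payload_b[key])
        elif key in UNION_FIELDS:
            merged[key] = sorted(set(payload_a[key]) | set(payload_b[key]))
        else:
            # No rule for this field (e.g. billing_confirmation): keep both
            # candidates and flag the commit for human review.
            merged[key] = {"candidates": [payload_a[key], payload_b[key]]}
            conflict = True
    return merged, conflict
```

Defaulting unknown fields to "conflict" is a deliberately conservative choice: a field only merges automatically once someone has written down how.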

7. Consistency guarantees & tradeoffs

Make consistency pluggable per context type:

- Eventual consistency (default): low latency, merge-on-conflict. Good for notifications, status updates.

- Optimistic locking: use version checks for single-writer flows (e.g., before writing, verify that the latest commit_id still matches the one the agent read).

- Strong linearizability: distributed locks or single-orchestrator for financial/legal operations (higher latency, simpler correctness).

Design principle: pick the weakest consistency model that keeps your system correct, not the most expensive one.
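The optimistic-locking option is essentially a compare-and-set on the latest commit id. A minimal sketch, assuming a single process and hypothetical names (`VersionedContext`, `StaleWriteError`); a real deployment would run the same check inside the store or database:

```python
import threading


class StaleWriteError(Exception):
    """Raised when the head moved after the writer last read it."""


class VersionedContext:
    def __init__(self):
        self._lock = threading.Lock()
        self.head_commit_id = None
        self.payload = {}

    def write(self, expected_head, new_commit_id, payload):
        # Compare-and-set: succeed only if nobody committed since the read.
        with self._lock:
            if self.head_commit_id != expected_head:
                raise StaleWriteError(
                    f"expected head {expected_head!r}, found {self.head_commit_id!r}"
                )
            self.head_commit_id = new_commit_id
            self.payload = payload


ctx = VersionedContext()
ctx.write(None, "c1", {"status": "approved"})

seen_by_a = ctx.head_commit_id          # both agents read head "c1"
seen_by_b = ctx.head_commit_id
ctx.write(seen_by_a, "c2", {"status": "charged"})        # agent A wins
try:
    ctx.write(seen_by_b, "c3", {"status": "charged"})    # agent B is stale
    b_rejected = False
except StaleWriteError:
    b_rejected = True                   # B must re-read before retrying
```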

8. Security, privacy & governance

- Authenticate agents (mTLS, OAuth client credentials).

- Sign commits (Ed25519) to verify author and integrity.

- Encrypt sensitive payloads at rest and in transit.

- Role-based ACLs on context keys (who can read/write).

- Immutable audit trail via the DAG for compliance and forensics.
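To show where signing and verification sit in the write path, here is a sketch that uses HMAC-SHA256 from the Python standard library. This is a stand-in, not Ed25519: HMAC relies on a shared secret, so it demonstrates integrity checking but not the per-agent non-repudiation an asymmetric signature gives, and Ed25519 itself needs a third-party library. The function names and the canonical-JSON convention are assumptions.

```python
import hashlib
import hmac
import json


def canonical_bytes(commit: dict) -> bytes:
    """Serialize everything except the signature in a stable key order."""
    unsigned = {k: v for k, v in commit.items() if k != "signature"}
    return json.dumps(unsigned, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_commit(commit: dict, key: bytes) -> dict:
    """Return a copy of the commit with an HMAC tag in the signature field."""
    tag = hmac.new(key, canonical_bytes(commit), hashlib.sha256).hexdigest()
    return {**commit, "signature": tag}


def verify_commit(commit: dict, key: bytes) -> bool:
    """Recompute the tag and compare in constant time."""
    expected = hmac.new(key, canonical_bytes(commit), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, str(commit.get("signature", "")))


agent_key = b"demo-key-for-illustration-only"
signed = sign_commit(
    {"context_id": "order-6942", "commit_id": "c1", "author_agent": "billing",
     "intent": "capture_payment", "payload": {"amount": 100}},
    agent_key,
)
tampered = {**signed, "payload": {"amount": 1}}
```

With these values, `verify_commit(signed, agent_key)` succeeds and `verify_commit(tampered, agent_key)` fails, because the payload is part of the signed bytes.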

9. Testing, metrics & canary rollout

Testing is where MCP proves itself.

Tests

- Unit: domain_specific_merge with many conflict cases.

- Integration: simulate network partitions and concurrent writes.

- Chaos: kill and restart agents; ensure recovery converges.
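The concurrent-writes case can be exercised with a small threaded harness. The sketch below replays the double-charge scenario from the opening: several agents attempt the same charge, and an operation id ensures it runs once. `IdempotentExecutor` and `simulate_concurrent_charges` are hypothetical names, and the in-process lock stands in for whatever deduplication the real store provides.

```python
import threading


class IdempotentExecutor:
    """Runs each operation_id at most once, even under concurrent callers."""

    def __init__(self):
        self._lock = threading.Lock()
        self._results = {}

    def run(self, operation_id, action):
        # Holding the lock while the action runs keeps the sketch simple; it
        # also serialises unrelated operations, which a real service would avoid.
        with self._lock:
            if operation_id in self._results:
                return self._results[operation_id], False   # already executed
            result = action()
            self._results[operation_id] = result
            return result, True


def simulate_concurrent_charges(agent_count=8):
    """Have several agents attempt the same charge; return the charges made."""
    charges = []
    executor = IdempotentExecutor()

    def charge():
        charges.append("charged order-6942")
        return "receipt-1"

    threads = [
        threading.Thread(target=executor.run, args=("charge:order-6942", charge))
        for _ in range(agent_count)
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return charges
```

A test then asserts that `simulate_concurrent_charges()` contains exactly one entry, regardless of how many agents raced.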

Metrics to track

- Duplicate-action rate (per 1000 workflows) — primary KPI.

- Manual reconciliation rate.

- Average time-to-converge after conflict.

- Commit verification failures (signature, ACL violations).

Canary strategy

- Start with low-risk contexts (read-only notifications).

- Canary on 5–10% of workflows.

- Monitor duplicate-action rate & manual reconciliations.

- Expand when metrics improve.

Example target: reduce duplicate actions by ≥60% in canary before broad rollout.
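The primary KPI and the rollout gate are simple arithmetic, sketched here so the definitions are unambiguous. The function names are hypothetical, the 0.60 default mirrors the example target above, and the numbers in the usage lines are made up for illustration rather than measured.

```python
def duplicate_action_rate(duplicate_actions: int, workflows: int) -> float:
    """Duplicate actions per 1000 workflows."""
    if workflows <= 0:
        raise ValueError("workflows must be positive")
    return 1000 * duplicate_actions / workflows


def relative_reduction(baseline_rate: float, canary_rate: float) -> float:
    """Fraction by which the canary rate is lower than the baseline rate."""
    if baseline_rate <= 0:
        raise ValueError("baseline_rate must be positive")
    return (baseline_rate - canary_rate) / baseline_rate


def canary_passes(baseline_rate: float, canary_rate: float, target: float = 0.60) -> bool:
    """True when the canary meets the reduction target."""
    return relative_reduction(baseline_rate, canary_rate) >= target


# Illustrative inputs only: 42 duplicates in 12,000 baseline workflows,
# 1 duplicate in 1,000 canary workflows.
baseline = duplicate_action_rate(42, 12_000)    # 3.5 per 1000
canary = duplicate_action_rate(1, 1_000)        # 1.0 per 1000
ready_to_expand = canary_passes(baseline, canary)
```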

10. Implementation patterns (pick one)

- Central MCP service: REST + WebSocket backed by append-only store (easy audit).

- Distributed DAG replication: for edge/offline agents (use CRDTs for mergeability).

- Message bus adapter: publish commits to Kafka/SQS and maintain local context caches for latency-sensitive reads.

Important: keep the protocol consistent across patterns — the representation and semantics are the contract.
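For the distributed replication pattern, a CRDT is a data type whose merge is commutative, associative, and idempotent, so replicas converge no matter the order in which they exchange state. A grow-only counter is the smallest example. The sketch below is illustrative: the `GCounter` class and the agent names are assumptions, not part of the protocol.

```python
class GCounter:
    """Grow-only counter CRDT: one slot per agent, merged by element-wise max."""

    def __init__(self, counts=None):
        self.counts = dict(counts or {})

    def increment(self, agent_id: str, amount: int = 1) -> None:
        if amount < 0:
            raise ValueError("a grow-only counter cannot decrease")
        self.counts[agent_id] = self.counts.get(agent_id, 0) + amount

    def merge(self, other: "GCounter") -> "GCounter":
        # Taking the max per agent makes merge order-independent and repeatable.
        keys = self.counts.keys() | other.counts.keys()
        return GCounter(
            {k: max(self.counts.get(k, 0), other.counts.get(k, 0)) for k in keys}
        )

    @property
    def value(self) -> int:
        return sum(self.counts.values())


# Two offline replicas count scanned packages independently, then sync.
edge_a = GCounter()
edge_a.increment("agent-a", 2)
edge_b = GCounter()
edge_b.increment("agent-b", 3)
synced = edge_a.merge(edge_b)
```

Fields with this shape can merge without human review; fields that cannot be expressed this way still need the explicit merge rules from section 6.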

11. Migration playbook

- Inventory context types where agents coordinate.

- Select one non-critical context to adopt MCP (e.g., delivery ETA).

- Implement a minimal MCP adapter in each agent (read latest, write commits, subscribe).

- Canary and measure the metrics above.

- Iterate: tighten merge rules for troublesome fields; introduce signatures and ACLs.

- Roll out to more contexts.

Aim for incremental adoption — MCP is additive and doesn’t require a big-bang refactor.

13. Common Questions

Q: Isn’t this just another message bus?

A: No. A message bus moves events; MCP manages shared, versioned context. It defines how agents represent state, track history, detect conflicts, and merge changes. Message buses deliver messages — MCP preserves truth.

Q: Won’t this add latency to agent workflows?

A: Only where you explicitly choose stronger consistency. MCP allows per-context consistency levels, so latency-sensitive paths stay fast while critical operations trade speed for correctness.

Q: Who governs MCP?

A: Start small. Define MCP as an internal RFC, evolve it through real usage, and standardize later if it proves valuable. Governance should follow adoption — not block it.

14. Conclusion

MCP isn’t a silver bullet — but it is a practical, low-friction layer that turns brittle multi-agent meshes into auditable, reliable systems. Define a small context schema, enforce versioned commits, and introduce explicit merge rules. Start with a canary, measure duplicate actions and manual reconciliation, and iterate.

Do this consistently, and something important happens: agents stop colliding and start cooperating. Decisions become traceable. Failures become explainable. And multi-agent systems finally behave like systems — not guesswork stitched together with retries.

Your turn

Comment one sentence: what’s the worst multi-agent coordination bug you’ve seen? I’ll reply to the first 10 and sketch how MCP would prevent it.

Model Context Protocol (MCP) — Why Your AI Agents Keep Colliding was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.