LLM API Token Caching: The 90% Cost Reduction Feature when building AI Applications

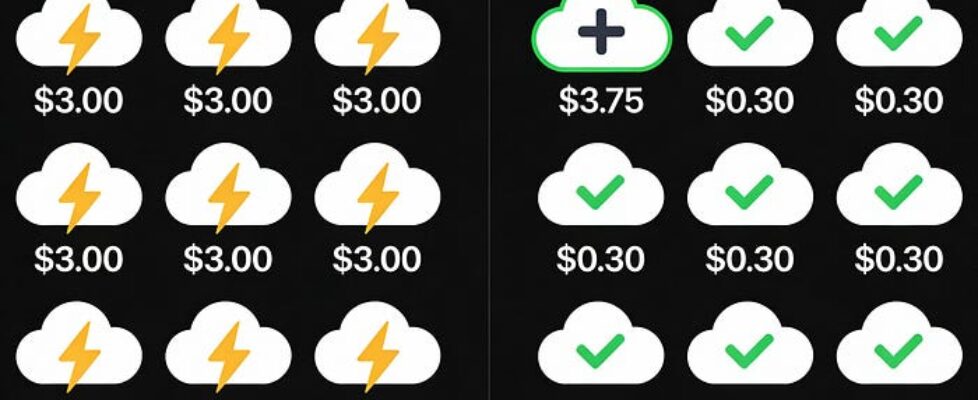

Author(s): Nikhil

Originally published on Towards AI.

If you use Claude, GPT-4, or any other modern LLM API and do not cache the system prompt, or any other prompt content that stays static across API calls, you are spending far more than necessary on token processing.

Cost comparison: 10x savings on cached token reads

Token caching lets an application reuse cached prompts across LLM API calls, so the same static content is not processed from scratch on every request. This reduces both the cost and the latency of repeated API requests, which changes the economics of LLM applications. Providers implement caching differently: Anthropic gives developers more explicit control and predictability, while OpenAI caches automatically and requires no additional configuration. For organizations looking to cut their LLM API costs, caching is an essential optimization.
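To make the "10x savings on cached token reads" figure concrete, here is a minimal sketch of the arithmetic. It assumes cached reads are billed at 10% of the normal input price (taken from the 10x figure above), that the cache stays warm across all calls, and that the first call pays to write the cache. The function name, the parameters, and the example price are hypothetical and chosen for illustration only; check your provider's pricing page for real rates, including any premium on cache writes.

```python
def estimate_input_cost(
    static_tokens: int,
    dynamic_tokens: int,
    calls: int,
    price_per_mtok: float,
    use_cache: bool = True,
    cached_read_multiplier: float = 0.1,  # assumption: cached reads cost 10% of base price
    cache_write_multiplier: float = 1.0,  # assumption: raise this if writes carry a premium
) -> float:
    """Estimate total input-token cost (in dollars) over `calls` requests.

    `static_tokens` is the part of the prompt that never changes (for example,
    the system prompt); `dynamic_tokens` is the per-request part.
    """
    if calls <= 0:
        return 0.0

    per_token = price_per_mtok / 1_000_000
    dynamic_cost = calls * dynamic_tokens * per_token

    if not use_cache:
        # Every call pays full price for the static prompt.
        return calls * static_tokens * per_token + dynamic_cost

    # First call writes the cache; the remaining calls read from it.
    write_cost = static_tokens * per_token * cache_write_multiplier
    read_cost = (calls - 1) * static_tokens * per_token * cached_read_multiplier
    return write_cost + read_cost + dynamic_cost


if __name__ == "__main__":
    # Hypothetical workload: 10,000-token system prompt, 200-token user
    # message, 1,000 calls, at an illustrative $3 per million input tokens.
    without = estimate_input_cost(10_000, 200, 1_000, 3.0, use_cache=False)
    with_cache = estimate_input_cost(10_000, 200, 1_000, 3.0, use_cache=True)
    print(f"without caching: ${without:.2f}")
    print(f"with caching:    ${with_cache:.2f}")
    print(f"savings:         {100 * (1 - with_cache / without):.1f}%")
```

The savings approach 90% only when the static portion dominates the prompt and the cache is reused many times; the dynamic tokens are always billed at the full rate, and a cache that expires between calls has to be rewritten.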