‘Learning’ the Stochastic Gradient Descent Algorithm

Let’s compare this situation to a more human-related real life scenario. Suppose you are studying for an exam. You have a set study plan, and followed the points in your plan to prepare. Unfortunately, you weren’t too satisfied with your result, since your exam result was way off the targeted score you wanted to achieve. So, what do you do? Well, you would want to make some adjustments to your study plan in order to prepare for your next exam effectively and efficiently.

This is exactly what optimization in machine learning is all about. It’s about making these small adjustments to see if an algorithm’s accuracy is improving. The algorithm wants to get as close to the target value, and optimization techniques can allow us to choose and make adjustments to our paramaters of our machine learning model (also known as the weights), so the algorithm performs better next time.

One of the most well known optimization methods used today is called the Stochastic Gradient Descent, (or SGD in short). How does this method work, and what certain adjustments does it make to our algorithm?

Before we add the word “Stochastic“

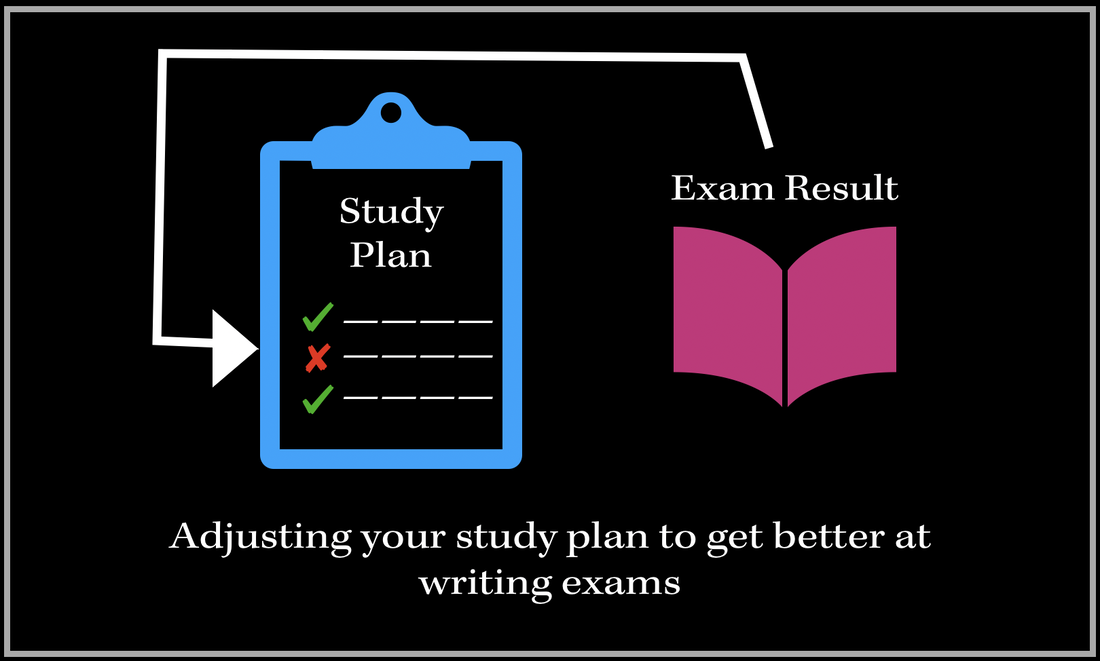

When we want to increase the accuracy of a machine learning algorithm, we are looking for our error difference. What is the difference betweeen the target value and the predicted value from our model?

Cost Function

Now we might be thinking–why can’t we just work with our error difference and minimize our error difference itself? Why do we have to work with a cost function? Our error function can also be negative–in this case it is ‘minimized’ in terms of the value, BUT, there still exists a huge difference between our predicted and actual values. So this is why we are going to instead focus on some function of our error, rather than just the error itself.

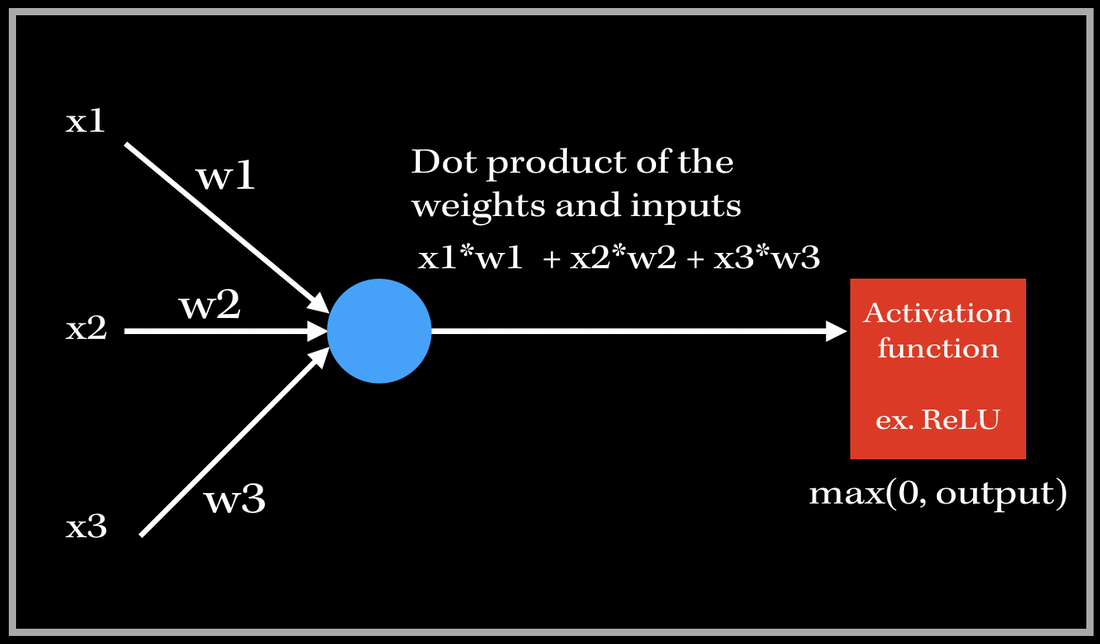

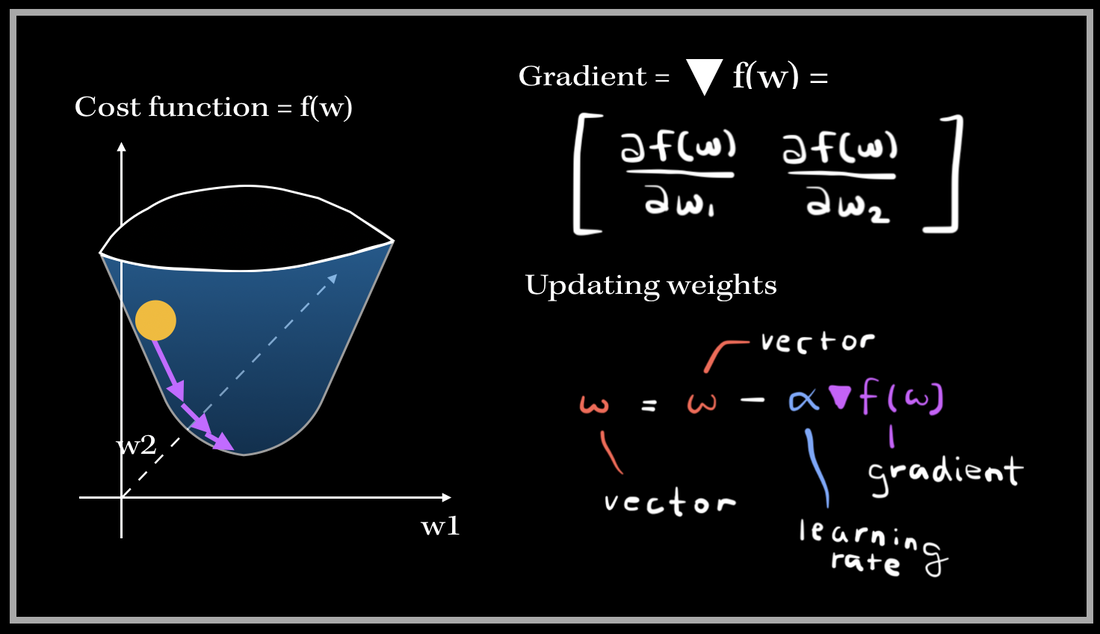

For instance, if we are focusing on improving a single linear neuron’s output prediction, (in a neural network), we will want to obtain a set of weights which will minimize the cost function. This means we will have to find the minima of the function–where is the lowest point of this particular function?

This neuron has three inputs and 3 associated weights for each feature. The output is then the dot product of the weights and inputs, which is then passed into an activation function, such as ReLU. Now, how would we essentially train our model to adjust our weight parameters to improve the accuracy? Since we use functions to get to the output of this neuron, we need to do just the opposite to backpropagate, i.e. go back change the weight parameters to perform better. This means we are gonna get into derivatives.

The Gradient and the Descent

*Note: We are assumming this cost function is a strictly convex function, i.e. a function which has no more than 1 minimum point.*

The step size basically tells us how fast is the iterative process towards convergence. For instance, a small step size will slow down the convergence process, and a large step size might lead to an infinite amount of iterations..,divergence, which is not good! That is why it is so important to find the right step size amount.

Back to the ‘Stochastic‘

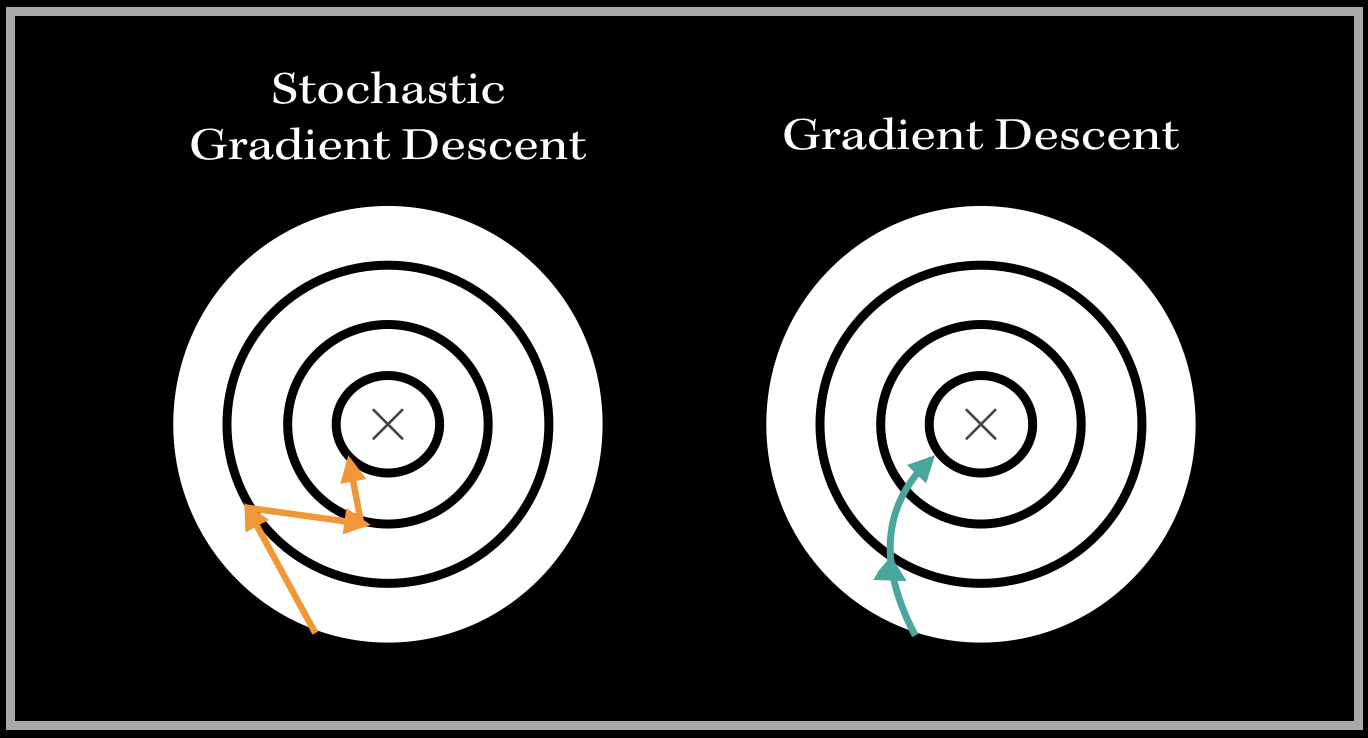

Though the Gradient Descent algorithm is very effective, it isn’t too efficient for huge amounts of data and parameters. The Gradient Descent algorithm updates weights only after one epoch (complete pass of the training data). It would have to compute the gradient after every iteration (after one pass of all the training samples). With a large amount of features and weights, this can be computationally exhaustive, and time consuming.

The Stochastic Gradient Descent, or SGD introduces a sense of randomness into this algorithm, which can make the process faster and more efficient. The dataset is shuffled (to randomize the process) and SGD essentially chooses one random data point at every iteration to compute the gradient. So instead of going through millions of examples, SGD using one random data point to update the parameters. This computationally makes the algorithm a bit more efficient. Consequently, it can lead to excessive ‘noise’ on the pathway to finding the minimum. For example:

Applications

The Gradient Descent Algorithm has applications in Adaptive Filtering (learning based systems), and is used to optimize the filter’s weights to minimize a cost value. An example of a particular Adaptive Filter is Noise Cancellation algorithm in headsets!!! 🙂