How to Think Like a Prompt Engineer (Not Just Write Better Prompts) | M007

📍 Abstract

Most prompt engineering content teaches you tactics. “Be specific.” “Add examples.” “Use chain of thought.” These work for demos. They fail in production…

I built an OCR extraction system where one mistake meant students losing marks. Where 95% accuracy was not good enough because that 5% had real consequences. That system taught me something most guides never mention. Prompt engineering is not about writing better instructions. It is about designing systems that survive reality.

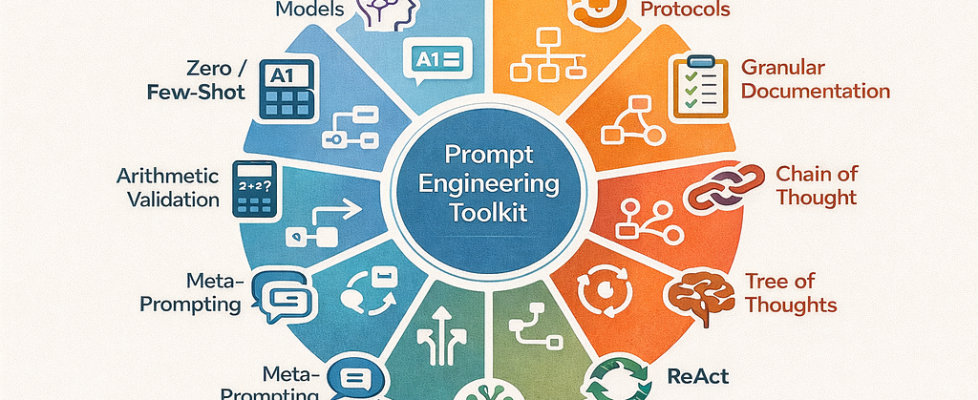

This article is different. No long stories. No fluff. Just ten techniques that actually work. Three from building production systems. Seven from the research literature. Each with concrete examples. Each immediately useful.

By the end, you will have a toolkit that works across domains. Code generation. Data extraction. Content moderation. Reasoning tasks. Everything.

If you want techniques that actually matter, keep reading.

📘 Contents

- Why Most Prompt Engineering Fails in Production

- Technique 1: Arithmetic Validation (When Math Becomes Truth)

- Technique 2: Algorithmic Protocols (Replace Judgment With Steps)

- Technique 3: Granular Documentation (Force Proof of Execution)

- Technique 4: Chain of Thought (Make Reasoning Visible)

- Technique 5: Tree of Thoughts (Explore Before Committing)

- Technique 6: ReAct (Reasoning Plus Action Loops)

- Technique 7: Self-Consistency (Generate Multiple Paths, Pick Best)

- Technique 8: Meta-Prompting (Let AI Design Its Own Instructions)

- Technique 9: Zero-Shot vs Few-Shot (When Examples Help, When They Hurt)

- Technique 10: Prompting Reasoning Models (o1, o3, and Why They Are Different)

- When to Use What: The Decision Framework

- Universal Applications Across Domains

- Final Thoughts

- What Comes Next

🔴 Why Most Prompt Engineering Fails in Production

Here is what breaks most prompts. They work on clean test cases. Then production hits. Messy inputs. Edge cases. Ambiguous requirements. And everything fails.

I learned this building an OCR system. Initial version worked beautifully on simple papers. Then real messy examination papers arrived. Multi-page answers. Nested structures. Ambiguous layouts. Accuracy dropped from 95% to 60%. Students lost marks because extraction failed.

The problem was not the model. The problem was my prompts made assumptions that broke under pressure. They relied on the model’s judgment. They trusted visual cues. They allowed vague completion claims. And all of that failed when complexity increased.

Fixing it required ten techniques. Three from debugging production failures. Seven from staying current with research. Let me show you all ten.

🔴 Technique 1: Arithmetic Validation (When Math Becomes Truth)

The principle is simple. Wherever numbers should add up, force the model to validate them before finalizing output.

I discovered this while parsing question papers. A question worth 4 marks had subsections worth 1, 1, 2, and 2 marks. The visual layout suggested one structure. But the four values sum to 6, not 4, so they cannot all be required. Only one reading makes the arithmetic work: the two 1-mark subsections are mandatory (2 marks), and the student picks one of the two 2-mark subsections (2 marks). The total is 4. Math validated what the visual cues missed.

Here is how you use it. Add a validation step to your prompt. “Before finalizing, verify arithmetic. Do extracted values sum to expected total? If not, flag for review.” Make math the final authority.

This works everywhere. Extracting time allocations? Check if they sum to 24 hours. Parsing budget breakdowns? Verify they match total budget. Structuring hierarchical data? Ensure child values sum to parent. Numbers do not lie. Use them.

Example for code:

“After generating resource allocation, validate that CPU percentages sum to 100%. If not, revise allocation.”
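The same check can also run in code after the model responds, as a second line of defense. Here is a minimal sketch in Python. The function name `validate_sum` and the tolerance value are assumptions for illustration, not part of my original system.

```python
import math

def validate_sum(parts, expected_total, tolerance=1e-9):
    """Return (ok, actual_total) so the caller can flag mismatches for review."""
    actual = math.fsum(parts)
    ok = math.isclose(actual, expected_total, rel_tol=0.0, abs_tol=tolerance)
    return ok, actual

# The question-paper case: two mandatory 1-mark parts plus one 2-mark option.
ok, total = validate_sum([1, 1, 2], expected_total=4)

# Treating all four subsections as required fails the check (sum is 6, not 4).
bad_ok, bad_total = validate_sum([1, 1, 2, 2], expected_total=4)
```

If `ok` is false, send the output back for revision or flag it for review instead of accepting it.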

🔴 Technique 2: Algorithmic Protocols (Replace Judgment With Steps)

Most prompts say things like “extract relevant content” or “check if there is more.” These rely on judgment. Judgment fails on edge cases.

The fix is algorithmic thinking. Replace vague instructions with numbered steps that cannot be misinterpreted.

When my OCR system failed on multi-page extraction, I replaced “check next page for continuation” with a 6-step protocol.

Step 1: Identify start page.

Step 2: Extract all content from current page.

Step 3: Check next page using three specific criteria.

Step 4: If continuation detected, extract and repeat.

Step 5: Stop at defined boundary types.

Step 6: Document every page extracted.

Multi-page accuracy jumped from 60% to 98%.

Make your prompts mechanical. Not “do this if needed” but “Step 1: do X. Step 2: check Y. Step 3: if condition Z, repeat, else stop.”

Example for data processing:

“Step 1: Load file. Step 2: For each row, validate format using regex. Step 3: If valid, append to output. If invalid, log error with row number. Step 4: After all rows, report total valid and invalid counts.”
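To show how mechanical the steps are, the data processing example above can be sketched directly in Python. The row format (`name,integer`) and the names `ROW_PATTERN` and `process_rows` are hypothetical, chosen only for illustration. Step 1 (loading the file) is assumed to have already produced a list of rows.

```python
import re

# Hypothetical row format for illustration: "<name>,<integer>"
ROW_PATTERN = re.compile(r"[A-Za-z]+,\d+")

def process_rows(rows):
    """Apply the steps mechanically; no step depends on judgment."""
    valid, errors = [], []
    for row_number, row in enumerate(rows, start=1):  # Step 2: check every row
        cleaned = row.strip()
        if ROW_PATTERN.fullmatch(cleaned):
            valid.append(cleaned)                      # Step 3: keep valid rows
        else:
            # Step 3 (else branch): log the error with its row number
            errors.append(f"row {row_number}: invalid format {row!r}")
    # Step 4: report totals after all rows are processed
    return {
        "valid": valid,
        "errors": errors,
        "valid_count": len(valid),
        "invalid_count": len(errors),
    }
```

If a prompt can be translated into a loop like this without guessing, it is probably specific enough.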

🔴 Technique 3: Granular Documentation (Force Proof of Execution)

Models claim completion without executing. “I extracted pages 2–5.” Sounds confident. But when you check output, page 5 is missing. This is process hallucination.

The fix is forced specificity. Make the model document what it actually saw, not what it planned to do.

Instead of “extracted all pages,” require “Page 2: extracted intro and six bullet points. Page 3: extracted diagram with three labels. Page 4: extracted explanation paragraphs. Page 5: extracted eight lines covering encryption and maintenance.” You cannot fake specifics. To describe it, you must execute it.

This works for any multi-step task. Data processing? Document each file processed with entry counts. Multi-step analysis? Log findings at each stage. Sequential operations? Confirm each step with observable evidence.

Example for API calls:

“For each API request, document: endpoint called, parameters sent, status code received, data extracted. Do not summarize as ‘made all requests.’ List each one.”
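Granular documentation also makes gaps checkable by code. A minimal sketch, assuming the model's per-page notes have been parsed into a dictionary. The names `missing_pages` and `page_log` are hypothetical.

```python
def missing_pages(claimed_pages, page_log):
    """Return claimed pages that have no specific, non-empty log entry."""
    return [page for page in claimed_pages if not page_log.get(page, "").strip()]

# The model claimed pages 2-5, but only documented three of them.
page_log = {
    2: "intro and six bullet points",
    3: "diagram with three labels",
    4: "explanation paragraphs",
}
gaps = missing_pages(range(2, 6), page_log)
```

Here `gaps` contains page 5, the exact page a summary like “extracted pages 2–5” would have hidden.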

🔴 Technique 4: Chain of Thought (Make Reasoning Visible)

Chain of Thought prompting asks the model to show its reasoning before answering. This improves accuracy on complex tasks by forcing step-by-step thinking.

The technique is simple. Add “Let’s think step by step” or “Show your reasoning before the final answer” to your prompt. The model breaks down the problem, considers sub-steps, then produces output.

This works because each step the model writes becomes context for the next one. The answer is conditioned on intermediate results instead of being produced in one jump. When models articulate their thinking, they often catch errors they would otherwise miss.

When to use: Complex reasoning tasks. Math problems. Multi-step logic. Ambiguous questions.

Example:

“Before answering, think through: What is the core question? What information is relevant? What steps lead to the answer? Then provide your final response.”
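In a pipeline, visible reasoning needs to be separated from the answer you act on. One way to do that, sketched below, is to ask for a fixed final line and parse it. The “Final answer:” marker and both function names are assumptions for illustration.

```python
import re

FINAL_RE = re.compile(r"final answer:\s*(.*)", re.IGNORECASE)

def build_cot_prompt(question):
    """Wrap a question with reasoning instructions and a parseable final line."""
    return (
        f"{question}\n\n"
        "Before answering, think through: What is the core question? "
        "What information is relevant? What steps lead to the answer?\n"
        "End with one line in the form 'Final answer: <answer>'."
    )

def extract_final_answer(response):
    """Return the text after the last 'Final answer:' marker, or None."""
    matches = FINAL_RE.findall(response)
    if not matches or not matches[-1].strip():
        return None
    return matches[-1].strip()
```

A `None` result means the model skipped the required format, which is worth logging as a failure in its own right.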

🔴 Technique 5: Tree of Thoughts (Explore Before Committing)

Tree of Thoughts extends Chain of Thought by exploring multiple reasoning paths before choosing the best one.

Instead of following one reasoning chain, the model generates multiple possible approaches, evaluates each, and selects the most promising path. This is powerful for problems with multiple valid strategies.

The implementation involves asking the model to generate three to five different approaches, evaluate pros and cons of each, then proceed with the best option.

When to use: Open-ended problems. Strategic planning. Problems where the approach matters as much as the answer.

Example:

“Generate three different approaches to solve this problem. For each approach, list steps and potential issues. Then select the best approach and execute it.”
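The search idea behind Tree of Thoughts can be sketched as a small beam search. This is a toy, not a full implementation: `propose` and `score` are stubs standing in for model calls, and the number puzzle (reach 14 from 1 using +3 or ×2) is invented purely for illustration.

```python
TARGET = 14  # hypothetical goal for the toy puzzle

def propose(path):
    """Stub for 'generate candidate next thoughts': add 3 or double."""
    last = path[-1]
    return [path + (last + 3,), path + (last * 2,)]

def score(path):
    """Stub for 'evaluate a thought': closer to the target is better."""
    return -abs(TARGET - path[-1])

def tree_of_thoughts(root, propose, score, beam_width=2, depth=3):
    """Expand every kept path, score the children, keep the best few, repeat."""
    frontier = [root]
    for _ in range(depth):
        candidates = [child for path in frontier for child in propose(path)]
        if not candidates:
            break
        frontier = sorted(candidates, key=score, reverse=True)[:beam_width]
    return max(frontier, key=score)

best = tree_of_thoughts((1,), propose, score, beam_width=2, depth=3)
greedy = tree_of_thoughts((1,), propose, score, beam_width=1, depth=3)
```

With two paths kept alive, the search reaches 14 exactly. With `beam_width=1` it commits early to the path that looks best at each step and overshoots to 16. That is the case for exploring before committing.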

🔴 Technique 6: ReAct (Reasoning Plus Action Loops)

ReAct combines reasoning with actions in a loop. The model thinks about what to do, takes an action (like calling an API or searching), observes the result, then reasons about next steps.

This is powerful for tasks requiring external information or iterative refinement. The model does not guess. It acts, observes, and adjusts.

The pattern is: Thought (what should I do?), Action (do it), Observation (what happened?), repeat until complete.

When to use: Tasks requiring external data. Iterative debugging. Multi-step workflows with feedback.

Example:

“Thought: I need current stock price. Action: Search for ticker symbol. Observation: Price is $150. Thought: Now calculate percentage change from yesterday. Action: Search yesterday’s price. Observation: Yesterday was $145. Thought: Calculate change. Final answer: 3.4% increase.”
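The loop itself is simple. Below is a minimal sketch in which the model is replaced by a scripted stub so the example runs on its own. `react_loop`, `scripted_policy`, the `lookup_price` tool, and the fixed prices are all hypothetical stand-ins, not a real search API.

```python
def react_loop(policy, tools, max_steps=5):
    """Alternate Thought -> Action -> Observation until the policy answers."""
    trace = []
    for _ in range(max_steps):
        step = policy(trace)
        if "answer" in step:
            return step["answer"], trace
        observation = tools[step["action"]](step["input"])
        trace.append((step["thought"], step["action"], observation))
    raise RuntimeError("no answer within max_steps")

# Hypothetical tool: fixed prices instead of a real search.
PRICES = {"today": 150.0, "yesterday": 145.0}
tools = {"lookup_price": lambda day: PRICES[day]}

def scripted_policy(trace):
    """Stub for the model: picks the next step from the observations so far."""
    if len(trace) == 0:
        return {"thought": "I need the current price.",
                "action": "lookup_price", "input": "today"}
    if len(trace) == 1:
        return {"thought": "Now I need yesterday's price.",
                "action": "lookup_price", "input": "yesterday"}
    today, yesterday = trace[0][2], trace[1][2]
    change = (today - yesterday) / yesterday * 100
    return {"answer": f"{change:.1f}% increase"}

answer, trace = react_loop(scripted_policy, tools)
```

The `max_steps` cap matters in production. Without it, a confused model can loop forever.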

🔴 Technique 7: Self-Consistency (Generate Multiple Paths, Pick Best)

Self-Consistency improves accuracy by generating multiple independent solutions and selecting the most common answer.

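Instead of relying on one output, you have the model solve the same problem multiple times with different reasoning paths. In practice this usually means separate generations sampled at a nonzero temperature, so the attempts are actually independent. Then you pick the answer that appears most frequently across attempts.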

This works because errors are usually inconsistent while correct reasoning converges to the same answer.

When to use: High-stakes decisions. Math problems. Situations where you can afford multiple generations.

Example:

“Solve this problem five times using different approaches. For each attempt, show full reasoning. Then identify the most common answer across all attempts.”
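The voting step is a few lines of code. A minimal sketch, assuming the final answers from each attempt have already been collected as strings. The function name `majority_answer` is hypothetical.

```python
from collections import Counter

def majority_answer(answers):
    """Return (most common answer, share of attempts that agree with it)."""
    if not answers:
        raise ValueError("need at least one answer")
    normalized = [answer.strip().lower() for answer in answers]
    winner, votes = Counter(normalized).most_common(1)[0]
    return winner, votes / len(normalized)

winner, agreement = majority_answer(["42", "42 ", "41", "42", "40"])
```

The agreement share is useful on its own. A low share is a signal to flag the result for review instead of trusting the vote.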

🔴 Technique 8: Meta-Prompting (Let AI Design Its Own Instructions)

Meta-Prompting uses the model to write or improve prompts. You give the model a task description and ask it to design the optimal prompt for that task.

This is useful because the model can quickly draft structured prompts, validation steps, and edge case handling that would otherwise take several rounds of manual trial and error. Treat the result as a draft. Test it on real inputs before trusting it.

The pattern is: describe your goal, ask the model to design a prompt that achieves it, then use that generated prompt.

When to use: Complex tasks where optimal prompting is unclear. Iterative improvement of existing prompts.

Example:

“I need to extract product names and prices from receipts. Design a detailed prompt that handles edge cases like missing prices, multiple currencies, and unclear formatting.”
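If you write meta-prompts often, a small template keeps them consistent. A minimal sketch, where `build_meta_prompt` and its wording are assumptions for illustration:

```python
def build_meta_prompt(goal, edge_cases):
    """Assemble a request asking the model to design a prompt for a task."""
    lines = [
        "You are designing a prompt for another model run.",
        f"Goal: {goal}",
        "The prompt you write must handle these edge cases:",
    ]
    lines += [f"- {case}" for case in edge_cases]
    lines.append(
        "Return only the prompt text, with numbered steps and a validation step."
    )
    return "\n".join(lines)

meta_prompt = build_meta_prompt(
    "Extract product names and prices from receipts",
    ["missing prices", "multiple currencies", "unclear formatting"],
)
```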

🔴 Technique 9: Zero-Shot vs Few-Shot (When Examples Help, When They Hurt)

Zero-shot prompting gives the model a task with no examples. Few-shot prompting provides examples before asking for output.

Few-shot works better when the task format is unusual or specific. Examples teach the model exactly what output structure you want. But examples can also anchor the model to patterns that do not generalize.

Zero-shot works better when the task is clear and you want maximum flexibility. The model does not get constrained by example patterns.

When to use zero-shot: Clear, well-defined tasks. Tasks where examples might bias output.

When to use few-shot: Unusual formats. Specific output structures. Tasks the model struggles with initially.

Example of few-shot:

“Extract name and email from text. Example 1: ‘Contact John at john@email.com’ → Name: John, Email: john@email.com. Example 2: ‘Reach out to Sarah (sarah@work.com)’ → Name: Sarah, Email: sarah@work.com. Now extract from: ‘Message Mike via mike@company.org’”
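Building the prompt from a list of examples makes it easy to test zero-shot against few-shot on the same task. A minimal sketch, with `build_few_shot_prompt` as a hypothetical helper:

```python
def build_few_shot_prompt(instruction, examples, query):
    """Format (input, output) pairs as numbered examples before the query."""
    parts = [instruction, ""]
    for number, (text, output) in enumerate(examples, start=1):
        parts.append(f"Example {number}: {text!r} -> {output}")
    parts += ["", f"Now extract from: {query!r}"]
    return "\n".join(parts)

examples = [
    ("Contact John at john@email.com", "Name: John, Email: john@email.com"),
    ("Reach out to Sarah (sarah@work.com)", "Name: Sarah, Email: sarah@work.com"),
]
few_shot = build_few_shot_prompt(
    "Extract name and email from text.", examples,
    "Message Mike via mike@company.org",
)
# Passing an empty list gives the zero-shot version of the same prompt.
zero_shot = build_few_shot_prompt(
    "Extract name and email from text.", [],
    "Message Mike via mike@company.org",
)
```

Run both versions on the same inputs and compare. That tells you whether the examples are helping or anchoring.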

🔴 Technique 10: Prompting Reasoning Models (o1, o3, and Why They Are Different)

Reasoning models like OpenAI’s o1 and o3 are different. They run an extended internal reasoning phase before responding. They spend more time thinking through problems.

The key insight is that these models do not need explicit Chain of Thought prompting. They already do that internally. Adding “think step by step” often does not help, and it can hurt performance by making them explain reasoning they already completed.

Instead, prompting for reasoning models focuses on clarity of task and constraints. Tell them what you want, define success criteria, specify edge cases, then let them reason internally.

When to use: Complex reasoning tasks. Math. Logic problems. Strategic planning.

How to prompt: Be clear about goals. Define constraints. Avoid telling them how to think. Let them reason their way.

Example:

“Solve this optimization problem. Constraints: budget under $10K, timeline under 3 months, must use existing infrastructure. Find the best approach that meets all constraints.”
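If you reuse prompts across model types, a simple check can catch leftover Chain of Thought phrasing before a prompt goes to a reasoning model. A minimal sketch. The phrase list and the name `find_cot_phrases` are assumptions, and the list is far from exhaustive.

```python
# Hypothetical, non-exhaustive list of phrases that instruct explicit reasoning.
COT_PHRASES = ("think step by step", "show your reasoning", "let's think")

def find_cot_phrases(prompt):
    """Return the listed phrases that appear in the prompt (case-insensitive)."""
    lowered = prompt.lower().replace("’", "'")  # normalize curly apostrophes
    return [phrase for phrase in COT_PHRASES if phrase in lowered]

clean = find_cot_phrases(
    "Solve this optimization problem. Constraints: budget under $10K."
)
flagged = find_cot_phrases("Let’s think step by step.")
```

An empty result does not prove the prompt is good. It only shows the most common reasoning instructions are gone.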

🔴 When to Use What: The Decision Framework

Here is how you choose techniques based on your task.

For tasks requiring external data or iteration, use ReAct.

For tasks with multiple valid approaches, use Tree of Thoughts.

For complex reasoning where the path matters, use Chain of Thought.

For high-stakes accuracy, use Self-Consistency.

For tasks with specific output formats, use Few-Shot.

For tasks where flexibility matters, use Zero-Shot.

For tasks where you need optimal prompting, use Meta-Prompting.

For any task involving numbers that should validate, add Arithmetic Validation.

For any multi-step task, use Algorithmic Protocols.

For any task where execution might be skipped, force Granular Documentation.

And if you are using reasoning models like o1 or o3, skip explicit reasoning prompts and focus on clear task definition.

Mix techniques when needed. Combine ReAct with Granular Documentation. Use Chain of Thought with Arithmetic Validation. Techniques stack.
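The framework is just a lookup from task traits to techniques, and it can be written down that way. A minimal sketch covering most of the rules above. The trait names and `pick_techniques` are hypothetical labels, not a standard taxonomy.

```python
# Each rule pairs a hypothetical task trait with the technique it calls for.
RULES = [
    ("needs_external_data", "ReAct"),
    ("multiple_valid_approaches", "Tree of Thoughts"),
    ("complex_reasoning", "Chain of Thought"),
    ("high_stakes", "Self-Consistency"),
    ("specific_output_format", "Few-Shot"),
    ("numbers_must_add_up", "Arithmetic Validation"),
    ("multi_step", "Algorithmic Protocols"),
    ("execution_may_be_skipped", "Granular Documentation"),
]

def pick_techniques(traits, reasoning_model=False):
    """Return every technique whose trait applies; techniques stack."""
    chosen = [name for trait, name in RULES if trait in traits]
    if reasoning_model:
        # Reasoning models already reason internally, so drop explicit CoT.
        chosen = [name for name in chosen if name != "Chain of Thought"]
    return chosen

stack = pick_techniques({"needs_external_data", "execution_may_be_skipped"})
```

Here `stack` pairs ReAct with Granular Documentation, the first combination mentioned above.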

🔴 Universal Applications Across Domains

These techniques work everywhere.

Building code generation systems? Use Chain of Thought for logic, Algorithmic Protocols for multi-file generation, Granular Documentation to verify each function, Arithmetic Validation to check resource allocations.

Building content moderation? Use Tree of Thoughts to explore different interpretations, Self-Consistency for high-stakes decisions, ReAct to gather context, Few-Shot to teach edge cases.

Building data extraction? Use Algorithmic Protocols for multi-step parsing, Granular Documentation to verify completeness, Arithmetic Validation for numerical fields, Meta-Prompting to optimize extraction rules.

Building recommendation systems? Use ReAct to gather user data, Tree of Thoughts to explore strategies, Self-Consistency for final ranking, Arithmetic Validation to ensure constraints are met.

The techniques are universal. The applications are endless.

🔴 Final Thoughts

Prompt engineering is not about tricks. It is about systematic thinking. About knowing what will break before it breaks. About designing prompts that survive production.

These ten techniques transformed how I build systems. They took my multi-page OCR accuracy from 60% to 98%. They made my prompts handle edge cases I never tested. They turned fragile instructions into reliable protocols.

Use them. Mix them. Test them on your hardest problems. And watch what you can build.

🔴 What Comes Next

In the next article, I will break down real production prompts. How they are structured. How validation layers work. How to debug when techniques fail. Fully practical, fully technical, zero fluff.

As always, I write from experience. From systems that matter. From techniques that work.

📍 Let’s connect:

X (Twitter): www.x.com/MehulLigade

LinkedIn: www.linkedin.com/in/mehulcode12

Let’s keep building. One technique at a time.

How to Think Like a Prompt Engineer (Not Just Write Better Prompts) | M007 was originally published in Towards AI on Medium.