From Automation to Intelligence: Applying Generative AI to Enterprise Decision Systems

Enterprise leaders are investing heavily in generative AI. Yet once these systems go live, many teams encounter a familiar problem. Tasks move faster, dashboards look more polished, and AI-generated responses sound confident, but decision quality often remains unchanged.

In some cases, confidence declines. Leaders approve outcomes they struggle to fully explain or defend. This gap reflects a persistent misconception that automation improves judgment. In practice, it does not.

Across regulated, high-volume enterprise environments, a consistent pattern emerges. When AI accelerates execution without redesigning how decisions are made and owned, organizations move faster toward misaligned outcomes. Systems optimize throughput, while judgment remains constrained by unclear ownership and limited visibility into reasoning.

The real opportunity lies elsewhere. Generative AI delivers value when it strengthens decision intelligence rather than replacing human judgment. This article examines how enterprise decision systems can apply generative AI responsibly, transparently, and at scale. The focus is on how decisions function inside complex enterprise systems.

The Automation Plateau in Enterprise AI

Automation improves efficiency, not decision quality. Many enterprise AI initiatives stall because they optimize surface tasks while leaving the underlying decision system unchanged. Polished dashboards and automated alerts improve visibility but rarely clarify accountability or reduce ambiguity.

Research on AI-assisted decision-making shows that outcomes improve when AI supports evaluation rather than replacing it. When people treat AI outputs as authoritative, performance often declines. When they treat outputs as inputs for reasoning, results improve.

This distinction matters at enterprise scale, where decisions carry financial, legal, and reputational consequences. AI adds value by enhancing human reasoning, not overriding it.

The most persistent constraint is not data or models, but fragmented context. Policies live in one system. Historical decisions live in another. Explanations remain implicit. Decision intelligence addresses this gap by making context explicit.

Decision Intelligence as a System, Not a Model

In enterprise environments, decisions require data, context, constraints, explanation, and accountability. Treating AI as a standalone engine overlooks the fact that decisions emerge from systems, not just models.

A decision system assembles inputs, applies rules, surfaces trade-offs, and routes authority to the appropriate person at the right moment. Generative AI fits within this system as a synthesis layer that connects information across components. It does not replace the system itself.

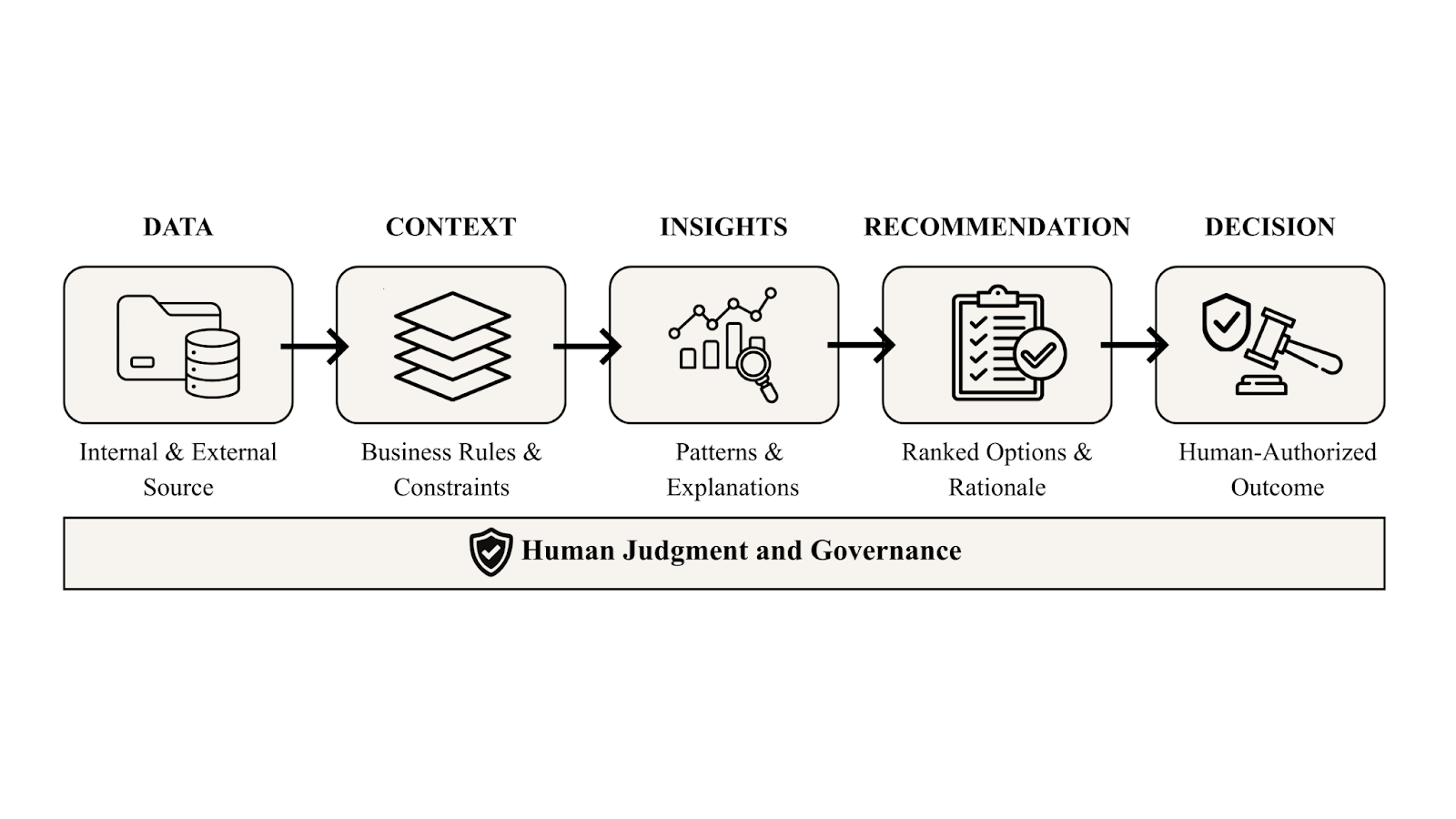

The figure above shows how data flows into context, context into insights, and insights into human-authorized decisions, with governance applied throughout.

This framing reflects how high-impact decisions function in practice. In enterprise product and analytics environments, measurable gains appear only when teams clarify where insights end and decisions begin. Clear boundaries preserve accountability.

Distinguishing Insights, Recommendations, and Decisions

In enterprise decision systems, confusion often arises because teams treat insights, recommendations, and decisions as interchangeable. Separating these layers is essential to preserve accountability, explainability, and human judgment, especially when generative AI is used.

Insights

Insights explain what is happening and why. They surface patterns, anomalies, and relationships across data sources. Insights reduce uncertainty, but they do not prescribe action or assume ownership.

Recommendations

Recommendations translate insights into ranked options under explicit constraints. They present rationale, assumptions, and trade-offs. Recommendations guide action, but they stop short of commitment.

Decisions

Decisions represent authoritative commitments with financial, legal, or operational consequences. They carry clear ownership and require human authorization, particularly when financial exposure, compliance obligations, or customer impact is involved.

This separation aligns with research on explainable, human-grounded decision support, which shows that decision systems perform best when humans can evaluate not just outputs but the reasoning and constraints behind them.
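
One way to keep the three layers from blurring is to give each its own type. The sketch below is a minimal illustration, not a prescribed schema: the class names, fields, and the `authorize` helper are all assumptions introduced here. It shows the one property the section insists on, that a decision cannot exist without a named human approver.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Insight:
    """Explains what is happening and why; prescribes nothing."""
    summary: str
    sources: tuple[str, ...]


@dataclass(frozen=True)
class Recommendation:
    """A ranked option with rationale and trade-offs; not a commitment."""
    option: str
    rank: int
    rationale: str
    trade_offs: tuple[str, ...]
    based_on: tuple[Insight, ...]


@dataclass(frozen=True)
class Decision:
    """An authoritative commitment that always names a human owner."""
    recommendation: Recommendation
    approved_by: str
    decided_at: datetime


def authorize(recommendation: Recommendation, approver: str) -> Decision:
    """Turn a recommendation into a decision only with a named approver."""
    if not approver.strip():
        raise ValueError("a decision requires a named human approver")
    return Decision(recommendation, approver, datetime.now(timezone.utc))
```

Because `Decision` is only built through `authorize`, a generative component can produce `Insight` and `Recommendation` objects freely while commitment stays with a person.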

Positioning Generative AI Within Enterprise Decision Systems

Generative AI excels at synthesis. It summarizes complex histories. It translates structured and unstructured data into explanations. It supports scenario exploration through natural language. These strengths reduce cognitive load.

In enterprise deployments, generative systems add the most value when they operate behind clear guardrails. They ingest historical decisions, policies, and escalation records. They surface explanations and comparable cases. They never finalize outcomes.

Research on retrieval-augmented generation provides a practical foundation for this trust boundary. By constraining generative outputs to retrieved, verifiable sources, RAG architectures reduce hallucination and make reasoning traceable. These properties are essential for building reliable and trustworthy enterprise decision systems.

Retrieval-Augmented Generation as a Trust Boundary

Trust determines whether enterprise systems are adopted. Unconstrained generation erodes trust and does not belong in enterprise systems that require explainability and auditability. Traceability matters more than fluency alone. RAG introduces a boundary. It forces generative outputs to reference approved knowledge, past decisions, and policy artifacts.

In large-scale decision platforms, RAG enables explainability without slowing workflows. It allows teams to audit why a recommendation surfaced and which sources informed it. That capability becomes essential once AI systems operate across billing, pricing, and risk-sensitive domains.
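
The boundary can be sketched in a few lines. The example below is a toy under stated assumptions: the source ids and texts are invented, retrieval is plain word overlap rather than a real vector search, and the generative step is left out entirely. What it illustrates is the check itself: a draft is accepted only if every source it cites was actually retrieved from the approved set.

```python
import re
from dataclasses import dataclass

# Hypothetical approved knowledge base: source id -> text.
APPROVED_SOURCES = {
    "policy-refunds-v3": "Refunds above 500 USD require manager approval.",
    "decision-2024-117": "Refund of 620 USD approved after manager review.",
    "policy-pricing-v2": "Discounts above 20 percent require finance sign-off.",
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, k: int = 2) -> list[str]:
    """Return ids of up to k approved sources sharing words with the query."""
    q = tokenize(query)
    scored = [(len(q & tokenize(text)), sid) for sid, text in APPROVED_SOURCES.items()]
    # Drop sources with no overlap; rank by overlap, then id for stable ties.
    ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: (-s[0], s[1]))
    return [sid for _, sid in ranked[:k]]


@dataclass(frozen=True)
class Draft:
    """A generated explanation plus the source ids it claims to rely on."""
    text: str
    cited: tuple[str, ...]


def within_boundary(draft: Draft, retrieved: list[str]) -> bool:
    """Accept a draft only if it cites something and every citation was retrieved."""
    return bool(draft.cited) and set(draft.cited) <= set(retrieved)
```

A draft that cites nothing, or cites a source outside the retrieved set, is rejected before it reaches a reviewer, and the retrieved ids double as the audit trail for why a recommendation surfaced.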

Human-in-the-Loop as Intentional Architecture

Human oversight must be intentional, not an afterthought. High-performing systems trigger review based on uncertainty and impact, not volume. Human-in-the-loop works best when:

- Escalation triggers reflect uncertainty and risk, not activity counts.

- Review roles are explicit, including approvers and override authorities.

- Recommendations include rationale, sources, and constraints.

- Override actions are logged with context and justification to support traceability and audit readiness.

- Outcomes feed back into policy thresholds and decision support logic.

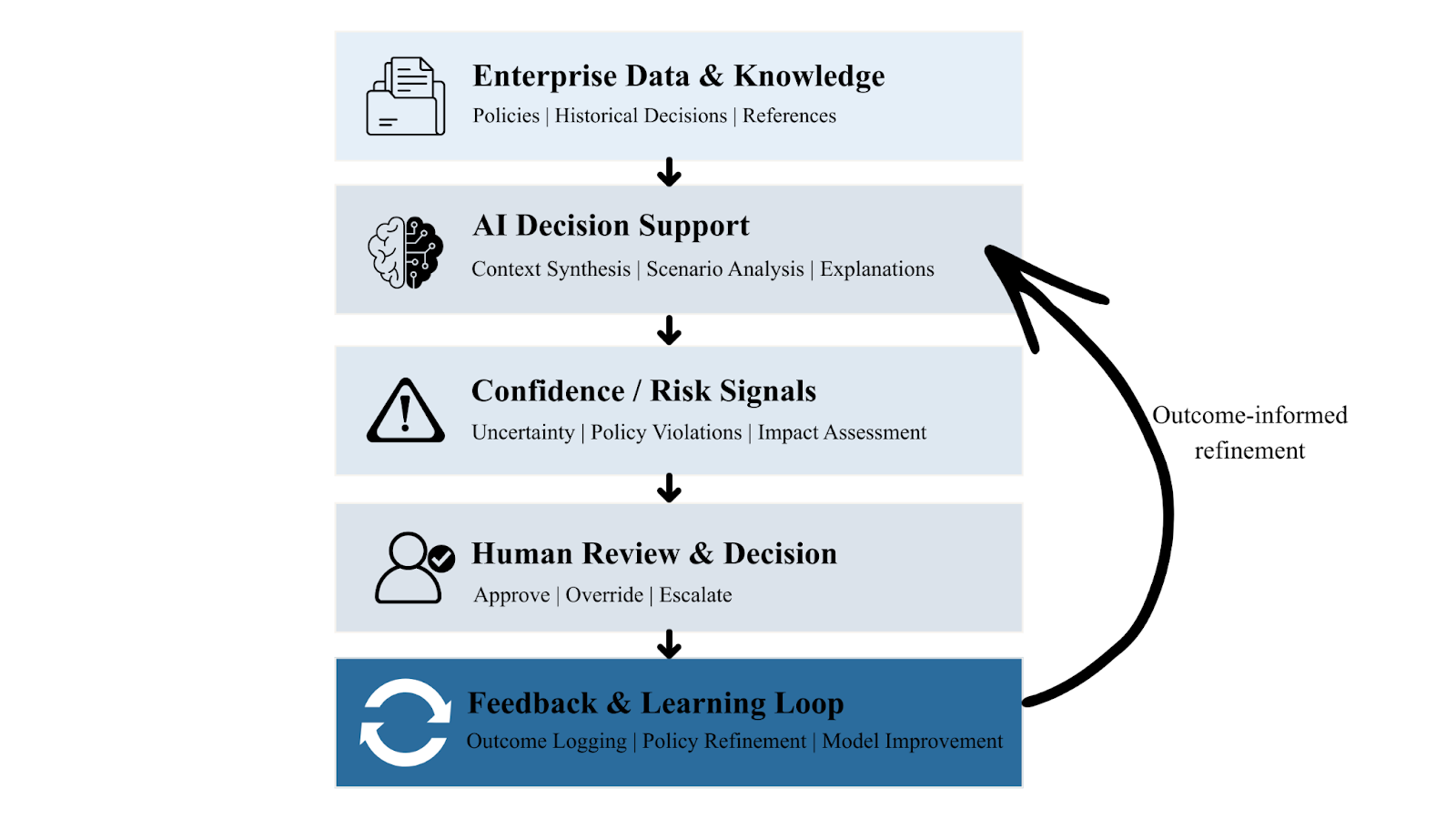

This diagram illustrates a downward flow of decision-making and an upward feedback loop for learning and system refinement.

This structure mirrors how enterprise leaders already operate. AI succeeds when it adapts to that reality rather than attempting to replace it.
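
The escalation and logging points above can be sketched as follows. This is a minimal illustration under assumptions: the `Case` fields, the threshold values, and the log format are hypothetical, and a real system would source its thresholds from governance policy rather than defaults in code.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class Case:
    case_id: str
    confidence: float    # system confidence in its recommendation, 0.0 to 1.0
    exposure_usd: float  # estimated financial exposure if the recommendation is wrong


@dataclass(frozen=True)
class ReviewPolicy:
    # Illustrative thresholds; real values would come from governance policy.
    min_confidence: float = 0.8
    max_exposure_usd: float = 10_000.0

    def needs_review(self, case: Case) -> bool:
        """Escalate on uncertainty or impact, never on activity counts."""
        return (case.confidence < self.min_confidence
                or case.exposure_usd > self.max_exposure_usd)


@dataclass
class OverrideLog:
    entries: list[dict] = field(default_factory=list)

    def record(self, case: Case, reviewer: str, justification: str) -> None:
        """Log an override with who made it, why, and when."""
        if not justification.strip():
            raise ValueError("an override requires a justification")
        self.entries.append({
            "case_id": case.case_id,
            "reviewer": reviewer,
            "justification": justification,
            "logged_at": datetime.now(timezone.utc).isoformat(),
        })
```

Keeping the thresholds in one `ReviewPolicy` object gives the feedback loop a single place to adjust when logged outcomes show that reviews are triggering too often or too rarely.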

Scaling Governance and Auditability Across Decision Platforms

Governance enables scale by making risk explicit. In enterprise environments, explainability and auditability are operational requirements.

Established AI risk management frameworks emphasize transparency, accountability, and continuous monitoring across the AI lifecycle, principles that align directly with decision intelligence systems that log outcomes and refine thresholds over time. Unchecked generative systems introduce hidden risk. Governed systems surface visible trade-offs.

Measuring the Impact of AI-Augmented Decisions

Success depends more on decision quality than on model accuracy. Practical metrics include:

- Decision latency: time between input and outcome

- Override rate: how often recommendations are bypassed

- Escalation-to-resolution ratio

- Downstream impact on revenue, compliance, or customer satisfaction

- Consistency across similar decision contexts
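
Three of these metrics can be computed directly from a log of decisions. The sketch below assumes a hypothetical `DecisionRecord` shape and one possible definition of consistency (the share of decisions that match the most common outcome in their context); the escalation ratio and downstream impact are left out because they depend on definitions and data specific to each organization.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class DecisionRecord:
    context: str            # label grouping similar decision contexts
    recommended: str        # what the system recommended
    decided: str            # what the human owner decided
    latency_minutes: float  # time between input and outcome


def override_rate(records: list[DecisionRecord]) -> float:
    """Share of decisions where the human chose something other than the recommendation."""
    if not records:
        raise ValueError("no records to measure")
    return sum(r.decided != r.recommended for r in records) / len(records)


def median_latency(records: list[DecisionRecord]) -> float:
    """Median time between input and outcome, in minutes."""
    if not records:
        raise ValueError("no records to measure")
    return median(r.latency_minutes for r in records)


def consistency(records: list[DecisionRecord]) -> float:
    """Share of decisions matching the most common outcome in their context."""
    if not records:
        raise ValueError("no records to measure")
    by_context: dict[str, Counter] = defaultdict(Counter)
    for r in records:
        by_context[r.context][r.decided] += 1
    # For each context, count how many decisions agree with its modal outcome.
    agreeing = sum(max(counts.values()) for counts in by_context.values())
    return agreeing / len(records)
```

These functions measure the decision, not the model: they need only what was recommended, what was decided, and how long it took.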

The Stanford AI Index highlights a growing gap between AI capability and organizational readiness. Adoption depends on trust and measurable value, not experimentation alone. Organizations that instrument decisions, not just models, learn faster and compound gains.

Preparing Organizations for Decision Intelligence at Scale

AI maturity does not come from adding more models. It comes from redesigning how decisions are owned, governed, and executed. Organizations that succeed with generative AI do so deliberately by clarifying decision ownership, preserving context through strong data foundations, and ensuring leaders can critically interpret AI outputs.

This redesign is not optional. McKinsey’s analysis shows that AI improves outcomes only when organizations rethink how decisions are made and who is held accountable, rather than treating AI as a standalone productivity layer.

Many enterprises feel stalled because AI capability exists, but decision architecture does not. Generative AI becomes transformative when it strengthens judgment, preserves accountability, and earns trust.

The next step is not increased automation. It is intentional decision system design.

For leaders responsible for AI adoption, the work begins by mapping end-to-end decision systems. Identify where context fragments across tools and teams. Define clear zones of human authority. Use generative AI to assemble context, surface trade-offs, and explain reasoning without making decisions.

Organizations that take this approach move beyond experimentation. They build decision intelligence as a durable capability. The results follow.

References:

- Dorsch, J. and Moll, M. (2024 September 23). Explainable and human-grounded AI for decision support systems: The theory of epistemic quasi-partnerships. Philosophy of Artificial Intelligence: The State of the Art. https://doi.org/10.48550/arXiv.2409.14839

- McKinsey & Company. (2025 June 04). When can AI make good decisions? The rise of AI corporate citizens. https://www.mckinsey.com/capabilities/operations/our-insights/when-can-ai-make-good-decisions-the-rise-of-ai-corporate-citizens

- National Institute of Standards and Technology (NIST). (2023 January). Artificial Intelligence Risk Management Framework (AI RMF 1.0). U.S. Department of Commerce. https://doi.org/10.6028/NIST.AI.100-1

- Potapoff, J. (2025 April 14). AI in the driver’s seat? Research examines human-AI decision-making dynamics. University of Washington Foster School of Business. https://magazine.foster.uw.edu/insights/ai-decision-making-leonard-boussioux/

- Stanford Institute for Human-Centered Artificial Intelligence (HAI). (2025 April 07). AI Index Report 2025. Stanford University. https://hai.stanford.edu/ai-index/2025-ai-index-report

- Wampler, D., Nielson, D. and Seddighi, A. (2025 November 07). Engineering the RAG stack: A comprehensive review of the architecture and trust frameworks for retrieval-augmented generation systems. arXiv. https://arxiv.org/abs/2601.05264

:::info

This article is published under HackerNoon’s Business Blogging program.

:::