Epoch in Machine Learning Explained with Examples | Epoch vs Iteration & Batch Size

Epoch in Machine Learning: When students begin their journey into Machine Learning or Deep Learning, one of the terms they meet most often in tutorials, courses, and practical projects is “epoch”. The word sounds advanced, but the idea behind it is simple and logical.

Many learners often pause and ask:

“What exactly happens in one epoch?”

“Why do people talk about epochs again and again during training?”

“Is epoch the same as iteration or batch?”

In this blog, you will get a clear understanding of what an epoch is, along with explanations of epoch vs iteration in machine learning, epoch in deep learning, epoch meaning in MATLAB, and the link between batch size and epoch, all explained in simple terms.

What Is Epoch in Machine Learning?

One epoch means that the model has gone through the entire training dataset exactly once. This means that if your dataset contains 1,000 training records, then after one epoch, the machine learning model has processed all 1,000 records once, learned from them, and adjusted its internal parameters accordingly to reduce mistakes.

If the same dataset is passed through the model again, that becomes the second epoch, and if it is passed again, that becomes the third epoch, and so on.

So when someone says:

- “The model was trained for 50 epochs,” it simply means the model learned from the same dataset 50 times.
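To make the counting concrete, here is a minimal Python sketch. It does not train a real model: the hypothetical `train` function only counts how many records it visits, so you can see that 50 epochs over 1,000 records means each record is seen 50 times (50,000 visits in total).

```python
def train(dataset, num_epochs):
    """Count record visits; a stand-in for real training (no model is updated)."""
    samples_seen = 0
    for epoch in range(num_epochs):
        # One epoch = one pass over every record in the dataset.
        for record in dataset:
            # A real model would make a prediction and adjust its
            # parameters here; this sketch only counts the visit.
            samples_seen += 1
    return samples_seen


records = list(range(1000))  # hypothetical dataset of 1,000 records
print(train(records, num_epochs=50))  # 50 epochs x 1,000 records = 50000
```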

Each epoch is one round of this repeated learning process, and training real-world Machine Learning and Artificial Intelligence models typically involves many such rounds.

Now think about this: if a student improves by revising again and again, why wouldn't a machine improve by seeing the same data again and again?

That exact logic is the foundation of epoch.

Why Do We Need Multiple Epochs During Training?

Many beginners assume that one round of training should be enough for a model to learn, but in practice a single pass is rarely enough, especially when the patterns in the data are complex.

During the first epoch:

- The model mostly makes rough guesses

- The error rate is high

- The understanding is very weak

During later epochs:

- The predictions become better

- The error slowly reduces

- The learning becomes more stable
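The sketch below illustrates this pattern in plain Python. It assumes a toy dataset generated from y = 2x + 1 and a one-feature linear model trained with full-batch gradient descent, so in this simplified setup each epoch is also exactly one parameter update. The function and variable names are illustrative, not from any library.

```python
def mse(w, b, xs, ys):
    """Mean squared error of the line y = w*x + b on the data."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train_linear(xs, ys, epochs, lr=0.05):
    """Full-batch gradient descent: one pass over all data (one epoch) per update."""
    w, b = 0.0, 0.0  # start from a rough guess
    n = len(xs)
    history = []
    for epoch in range(epochs):
        # Gradients of the mean squared error, computed over the whole dataset.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Adjust the parameters a little to reduce the error.
        w -= lr * grad_w
        b -= lr * grad_b
        history.append(mse(w, b, xs, ys))  # error after this epoch
    return w, b, history


xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2 * x + 1 for x in xs]  # toy data from y = 2x + 1
w, b, history = train_linear(xs, ys, epochs=200)
for epoch in (1, 10, 50, 200):
    print(f"epoch {epoch:3d}: error = {history[epoch - 1]:.4f}")
```

The printed errors should shrink from one epoch to the next, which is the "later epochs" behaviour described above. With real data and mini-batches the decrease is usually noisier.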

This is why training is not limited to a single epoch; models are usually trained for multiple epochs to improve accuracy.

However, every learner must remember one warning: more epochs do not always mean better learning. Beyond a certain point, extra epochs can make the model memorise the training data instead of learning patterns that generalise. This is why the number of epochs, together with the batch size, has to be chosen carefully in real projects.

Epoch vs Iteration in Machine Learning

One of the most confusing topics for beginners is epoch vs iteration in machine learning, because both words are used together very often, but they do not mean the same thing.

Let us break this in a very simple way.

- An epoch means the model has completed one full round of learning from the entire dataset.

- An iteration means one single update of the model based on one batch of data.

For example:

- If your dataset has 1,000 records

- And your batch size is 100

Then:

- One epoch will complete in 10 iterations

This means epoch vs iteration in machine learning is basically the difference between:

- One full training cycle

- And one small training step inside that cycle
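The arithmetic behind this example can be written as a small helper. This is an illustrative Python sketch with a hypothetical function name; it assumes the last, smaller batch is kept when the dataset size is not a multiple of the batch size. Some frameworks can instead be configured to drop that incomplete batch, in which case you would round down.

```python
def iterations_per_epoch(num_samples: int, batch_size: int) -> int:
    """Number of batch updates needed to cover the whole dataset once."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    # Round up: if the data does not divide evenly, the last batch is smaller.
    return (num_samples + batch_size - 1) // batch_size


print(iterations_per_epoch(1000, 100))  # 10, matching the example above
print(iterations_per_epoch(1050, 100))  # 11: ten full batches plus one batch of 50
```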

Understanding this concept is extremely important because many interview questions and practical training problems are directly based on epoch vs iteration in machine learning.

Batch Size and Epoch: How Both Work Together in Training

Batch size is almost always discussed together with epoch, because these two values together decide how the model actually learns in practical training.

- Batch size decides how many samples are passed into the model in one go.

- Epoch decides how many times the full dataset is reused by the model.

For example:

- Dataset size = 1,000 samples

- Batch size = 100

- Epochs = 10

Then:

- Each epoch will have 10 batches

- Total training steps will be 100 updates
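The nested loops below sketch how batch size and epochs fit together in a typical mini-batch training loop: the outer loop counts epochs and the inner loop performs one iteration per batch. It is an illustrative Python skeleton (no real model is trained, and the function name is hypothetical); reshuffling the data at the start of each epoch is a common practice but an assumption here, not a requirement.

```python
import random


def run_training(num_samples, batch_size, epochs, seed=0):
    """Mini-batch training skeleton: outer loop = epochs, inner loop = iterations."""
    rng = random.Random(seed)
    indices = list(range(num_samples))
    total_updates = 0
    for epoch in range(epochs):
        rng.shuffle(indices)  # new sample order each epoch (assumed, optional)
        seen = 0
        for start in range(0, num_samples, batch_size):
            batch = indices[start:start + batch_size]
            # A real model would compute the loss on `batch` and update its parameters here.
            seen += len(batch)
            total_updates += 1
        assert seen == num_samples  # every sample was used exactly once in this epoch
    return total_updates


print(run_training(num_samples=1000, batch_size=100, epochs=10))  # 100 updates
```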

The proper selection of batch size and epoch decides:

- Training speed

- Accuracy level

- Memory usage

- Stability of the learning process

This is why batch size and epoch are always adjusted together when training Machine Learning and Deep Learning models in industry projects.


Epoch in Deep Learning and Its Strong Impact on Accuracy

Epoch in deep learning matters even more, because Deep Learning models are built using large neural networks that can contain millions or even billions of parameters.

Because these networks are very complex:

- Learning becomes slow

- Errors reduce gradually

- Small changes in the number of epochs can make a big difference

This is why epoch in deep learning plays a very strong role in deciding whether the final model will perform well or not.

If the model is trained for very few epochs:

- It may fail to understand the data

- Accuracy remains low

If it is trained for too many epochs:

- The model may start memorising the training data

- It may fail when new data is given

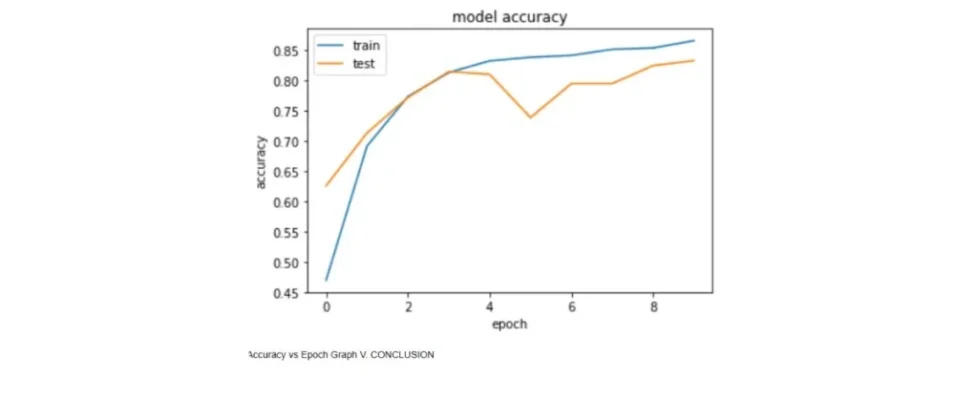

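This problem is called overfitting. In deep learning it typically appears when a network keeps training long after it has learned the useful patterns in the data, which is why the number of epochs has to be balanced carefully with the batch size and the size of the dataset.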
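In practice, a common way to avoid training for too many epochs is early stopping: keep a separate validation set, measure the error on it after every epoch, and stop once it no longer improves. The Python sketch below is a simplified, framework-independent version of that idea; the function name, the `patience` value, and the validation losses in the example are hypothetical, chosen only to illustrate the pattern.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return the 1-based epoch with the lowest validation loss, stopping
    once the loss has not improved for `patience` consecutive epochs."""
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # validation loss stopped improving: likely overfitting
    return best_epoch


# Hypothetical validation losses: they fall at first, then rise as the model starts memorising.
losses = [0.90, 0.60, 0.45, 0.40, 0.42, 0.47, 0.55, 0.60]
print(early_stopping_epoch(losses))  # 4
```

Here training would stop after epoch 7, and the model saved at epoch 4 (lowest validation loss) would be kept.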

Epoch Meaning in MATLAB for Engineering Students

Many engineering students who work with MATLAB often search for epoch meaning in MATLAB, especially when they are building neural network models for college projects or research work.

In MATLAB:

- One epoch means one complete training cycle of the neural network over all the training samples

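- MATLAB also plots the training performance (error) after every epoch, so you can see how the error changes as training progresses

- Training stops when the maximum number of epochs is reached, or earlier if another stopping condition is met (for example, the performance goal is reached or the validation error stops improving)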

So, the epoch meaning in MATLAB is exactly the same as in Python, TensorFlow, or any other Machine Learning framework: one full pass over the training data. This makes it an important exam concept for students from engineering and data science backgrounds.

Example of Epoch in Machine Learning

To understand this process in the simplest way, imagine a student preparing for an important competitive exam.

- First reading of the full book = 1 epoch

- First revision = 2 epochs

- Second revision = 3 epochs

With every revision:

- Understanding becomes better

- Mistakes reduce

- Confidence increases

In the same way, the model also improves step by step as it sees the same data again and again.

Common Doubts About Epoch

Many learners have the following doubts, so let us clear them up:

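- Does increasing the number of epochs always improve performance?

No. After a point, more epochs can reduce accuracy on new, unseen data because the model starts overfitting.

- Is epoch related to dataset size?

Yes. Dataset size, together with batch size, decides how many iterations one epoch contains. A larger dataset gives the model more updates in each epoch, so it often needs fewer epochs, but the right number still depends on the data and the model.

- Is epoch more important than batch size?

No, both batch size and epoch work together and must be chosen properly.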

These doubts connect directly with epoch vs iteration in machine learning and with actual training behaviour. In short:

- Epoch – One full dataset learning cycle

- Iteration – One batch update

- Batch Size – Number of samples per step

- Epoch in Deep Learning – Controls how long the network trains; too few epochs leave it undertrained, too many can cause overfitting

- Epoch Meaning in MATLAB – One training round of the network

On A Final Note…

By now, you should clearly understand:

- the real difference in epoch vs iteration in machine learning

- the strong impact of epoch in deep learning

- the correct epoch meaning in MATLAB

- and how batch size and epoch work together during model training

If you plan to build a serious career in Data Science, Machine Learning, or Artificial Intelligence, then learning the correct role of epoch is not optional; it is a basic necessity.

FAQs

What is epoch in machine learning with example?

Epoch in machine learning means one complete pass of the training data through the model. If your dataset has 1,000 records and the model sees all 1,000 once, that is one epoch.

What is epoch vs iteration in machine learning?

Epoch means one full dataset learning cycle, while iteration means one batch update inside that cycle.

Why is epoch in deep learning important?

Epoch in deep learning controls how well the neural network learns from data. Too few epochs lead to poor learning, and too many can lead to overfitting.

What is the epoch meaning in MATLAB?

Epoch meaning in MATLAB refers to one full training cycle where the entire training dataset is passed through the neural network once.