Connecting the Dots with Graphs

Your database knows what exists. A knowledge graph knows how everything relates.

Traditional databases store records in isolation. Row after row, table after table. But the real world doesn’t work that way. Products connect to suppliers. Customers connect to purchases. Purchases connect to recommendations. These relationships carry meaning that gets lost when you flatten data into tables and force-fit connections through foreign keys and JOIN statements.

Knowledge graphs flip this model. They treat relationships as first-class citizens, storing not just entities but the connections between them. The result? A data structure that mirrors how humans naturally think about information.

What is a knowledge graph?

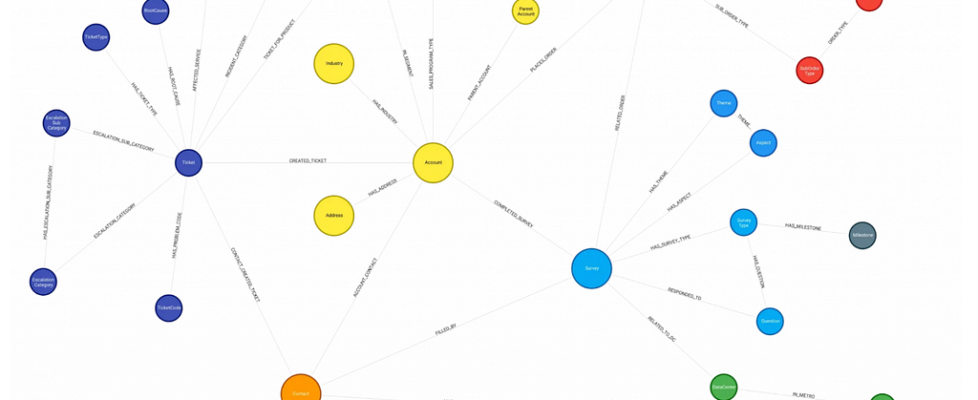

A knowledge graph is a database that represents information as a network of entities (nodes) and their relationships (edges). Each node represents a real-world object, concept, or event. Each edge represents how two nodes relate to each other. Both nodes and edges can carry properties that add context and detail.

The fundamental building block is the triple: (Subject) -[Predicate]-> (Object).

For example:

- (Alice) -[WORKS_AT]-> (Anthropic)

- (Anthropic) -[LOCATED_IN]-> (San Francisco)

- (Alice) -[KNOWS]-> (Bob)

String these triples together and patterns emerge. You can traverse from Alice to her company to her company’s location to other employees at that location to the projects those employees work on. Six degrees of separation become queryable data.

The triple structure

Every fact in a knowledge graph reduces to three components:

{

"subject": ["Alice", "Anthropic", "iPhone 17"],

"predicate": ["WORKS_AT", "MANUFACTURES", "PURCHASED"],

"object": ["Anthropic", "Apple", "2026-01-15"]

}

This structure gives knowledge graphs their flexibility. You don’t need to predefine a rigid schema. New entity types and relationship types can be added without migrations or downtime. The graph grows organically as your understanding of the domain deepens.
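
To see how triples chain into traversable paths, here is a minimal in-memory sketch. The `TripleStore` class and its method names are hypothetical, and the extra `(Bob) -[WORKS_AT]-> (Anthropic)` triple is an assumption added so the walk has a colleague to find. Real graph databases index and persist data very differently.

```python
from collections import defaultdict


class TripleStore:
    """Toy triple store for illustration; not how a graph database stores data."""

    def __init__(self):
        self.outgoing = defaultdict(list)  # subject -> [(predicate, object)]
        self.incoming = defaultdict(list)  # object -> [(predicate, subject)]

    def add(self, subject, predicate, obj):
        self.outgoing[subject].append((predicate, obj))
        self.incoming[obj].append((predicate, subject))

    def objects(self, subject, predicate):
        """Follow edges forward: (subject) -[predicate]-> (?)"""
        return [o for p, o in self.outgoing[subject] if p == predicate]

    def subjects(self, predicate, obj):
        """Follow edges backward: (?) -[predicate]-> (object)"""
        return [s for p, s in self.incoming[obj] if p == predicate]


store = TripleStore()
store.add("Alice", "WORKS_AT", "Anthropic")
store.add("Anthropic", "LOCATED_IN", "San Francisco")
store.add("Alice", "KNOWS", "Bob")
store.add("Bob", "WORKS_AT", "Anthropic")  # assumed extra fact, not from the article

# Alice -> her company -> its location, then back to other employees
employer = store.objects("Alice", "WORKS_AT")[0]
city = store.objects(employer, "LOCATED_IN")[0]
colleagues = [p for p in store.subjects("WORKS_AT", employer) if p != "Alice"]
print(employer, city, colleagues)
```

Each hop is a dictionary lookup on the node you are standing on, which is the intuition behind treating relationships as stored data rather than computed joins.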

How knowledge graphs differ from traditional databases

Relational databases optimize for structured queries on well-defined schemas. They answer questions like “Give me all orders placed in Q4 2025” efficiently. But they struggle with relationship-heavy queries. Finding “all products purchased by customers who also purchased products from the same category as items in my cart” requires multiple JOINs, subqueries, and careful index tuning.

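Knowledge graphs optimize for these traversal queries. Relationships aren’t computed at query time through JOINs; they’re pre-stored as explicit connections. With native graph storage, following a single relationship costs roughly the same whether your graph has 1,000 nodes or 100 million, because the lookup depends on the node’s own connections rather than on the total size of the graph.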

Document databases like MongoDB offer schema flexibility, but relationships are not their primary storage model. Graph databases like Neo4j store relationships as directly addressable elements, which can make deep multi-hop traversals far faster than the equivalent chain of JOINs.

Benefits of knowledge graphs

1. Relationship-first data modeling

Real-world problems center on connections. Fraud detection needs to find suspicious transaction patterns. Recommendation engines need to understand user-product-category relationships. Drug discovery needs to map protein interactions. Supply chain optimization needs to trace dependencies.

Knowledge graphs make these relationships explicit and queryable. Instead of reverse-engineering connections from foreign keys, you model them directly. The data structure matches the problem domain.

2. Flexible and evolving schemas

Business requirements change. New entity types emerge. Existing relationships need additional properties. Traditional databases handle this through ALTER TABLE statements, migration scripts, and careful backward compatibility planning.

Knowledge graphs handle schema evolution gracefully. Adding a new node type or relationship type doesn’t affect existing data. You can store heterogeneous data in the same graph; a Person node can connect to a Company node, a Product node, or a Concept node without schema conflicts.

3. Powerful traversal queries

“Find all people within 3 degrees of connection from this user who have expertise in machine learning and work at companies in the healthcare sector.”

In SQL, this query requires recursive CTEs, multiple JOINs across several tables, and careful optimization. In a graph database, it’s a straightforward traversal: start at the user node, expand outward through KNOWS relationships for 3 hops, filter by expertise and company sector.

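The performance gap widens with depth. In a relational database, each extra hop typically adds another self-join, so cost can grow steeply as traversal depth increases. In a graph database, cost depends mainly on how many nodes and relationships the traversal actually touches, not on the total size of the data.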
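
The traversal described above (expand through KNOWS for up to 3 hops, then filter) can be sketched as a depth-limited breadth-first search. This is a plain-Python illustration, not what a graph engine runs internally; the `within_hops` function and all sample names and profiles are hypothetical.

```python
from collections import deque


def within_hops(knows, start, max_hops):
    """Breadth-first search: map each reachable person to their hop distance."""
    distance = {start: 0}
    queue = deque([start])
    while queue:
        person = queue.popleft()
        if distance[person] == max_hops:
            continue  # do not expand beyond the hop limit
        for friend in knows.get(person, []):
            if friend not in distance:
                distance[friend] = distance[person] + 1
                queue.append(friend)
    del distance[start]  # the starting user is not a result
    return distance


# Hypothetical sample data
knows = {"user": ["ana"], "ana": ["ben"], "ben": ["cy"], "cy": ["dee"]}
profile = {
    "ana": {"expertise": "design", "sector": "healthcare"},
    "ben": {"expertise": "machine learning", "sector": "healthcare"},
    "cy": {"expertise": "machine learning", "sector": "finance"},
    "dee": {"expertise": "machine learning", "sector": "healthcare"},
}

reachable = within_hops(knows, "user", max_hops=3)
matches = sorted(
    name
    for name in reachable
    if profile[name]["expertise"] == "machine learning"
    and profile[name]["sector"] == "healthcare"
)
print(matches)
```

Note that `dee` matches both filters but sits four hops away, so the hop limit excludes her before the filters ever run.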

4. Semantic richness and inference

Knowledge graphs can encode ontologies: formal definitions of concepts and their hierarchical relationships. An ontology might define that “Engineer” is a subtype of “Employee” and “Software Engineer” is a subtype of “Engineer.”

With this structure, a query for “all employees” automatically includes engineers and software engineers without explicit enumeration. Reasoning engines can infer new facts from existing ones. If Alice works at Anthropic and Anthropic is located in San Francisco, the system can infer that Alice works in San Francisco.
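
Both kinds of inference fit in a few lines of Python. This is a toy sketch, assuming a single-parent subtype hierarchy and one hand-written rule; the people other than Alice, the `Contractor` type, and the `WORKS_IN` predicate name are made up for illustration. Production reasoners (for example OWL or rule engines) are far more general.

```python
# Subtype hierarchy from the example above: child type -> parent type
SUBTYPE_OF = {"Software Engineer": "Engineer", "Engineer": "Employee"}


def is_a(entity_type, target):
    """Walk up the subtype chain to check whether entity_type is a kind of target."""
    while entity_type is not None:
        if entity_type == target:
            return True
        entity_type = SUBTYPE_OF.get(entity_type)
    return False


# Hypothetical people and their most specific type
people = {"Alice": "Software Engineer", "Bob": "Engineer", "Carol": "Contractor"}
employees = sorted(name for name, t in people.items() if is_a(t, "Employee"))

# Rule: X WORKS_AT C and C LOCATED_IN P  =>  X WORKS_IN P
facts = {
    ("Alice", "WORKS_AT", "Anthropic"),
    ("Anthropic", "LOCATED_IN", "San Francisco"),
}
inferred = {
    (person, "WORKS_IN", place)
    for person, p1, company in facts
    if p1 == "WORKS_AT"
    for c2, p2, place in facts
    if p2 == "LOCATED_IN" and c2 == company
}
print(employees, inferred)
```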

5. Integration across data silos

Large organizations store data in multiple systems: CRMs, ERPs, HR platforms, product databases, customer support tools. Knowledge graphs serve as an integration layer, mapping entities from different sources into a unified representation.

A customer record from Salesforce connects to support tickets from Zendesk, which in turn connect to product usage from your application database. Analysts can explore cross-system relationships without understanding the underlying source systems.

Challenges of knowledge graphs

1. Data quality and entity resolution

Knowledge graphs amplify data quality issues. A single misspelled entity name creates a disconnected node that breaks relationship chains. Duplicate entities fragment information that should be unified.

Entity resolution (determining when two records refer to the same real-world entity) is particularly difficult. Is “J. Smith” the same person as “John Smith” and “Jonathan Smith”? Different data sources use different identifiers, abbreviations, and formats.

Solutions include fuzzy matching algorithms, machine learning-based entity linking, and manual curation workflows. But these add complexity and ongoing maintenance burden.
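
As a starting point for the fuzzy-matching approach, here is a minimal sketch using Python’s standard-library `difflib`. The `normalize`, `similarity`, and `likely_same` helpers are hypothetical, and the 0.85 threshold is an arbitrary assumption you would tune against labeled pairs.

```python
from difflib import SequenceMatcher


def normalize(name):
    """Lowercase, drop periods, and collapse whitespace."""
    return " ".join(name.lower().replace(".", " ").split())


def similarity(a, b):
    """Character-level similarity in [0, 1] between two normalized names."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def likely_same(a, b, threshold=0.85):
    """Threshold is an arbitrary starting point, not a recommended value."""
    return similarity(a, b) >= threshold


print(likely_same("John Smith", "john  smith."))  # formatting differences only
print(likely_same("John Smith", "Jon Smith"))     # one-letter variation
print(likely_same("John Smith", "Alice Jones"))   # different person
```

String similarity alone cannot settle a case like “J. Smith” versus “John Smith”; that usually takes extra signals such as email, employer, or address, which is where ML-based entity linking and manual curation come in.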

2. Scale and performance optimization

Graph databases use different optimization strategies than relational databases. Index design, query planning, and memory management all require specialized knowledge.

Super-nodes (entities with millions of connections, like popular products or celebrity users) create performance bottlenecks. Queries that traverse through super-nodes can explode in complexity. Mitigation strategies include relationship partitioning, label-based filtering, and query timeout limits.

Horizontal scaling presents additional challenges. Sharding strategies for relational databases are well understood, but finding an optimal balanced partition of a graph is NP-hard. Cutting a graph into balanced partitions while minimizing cross-partition edges relies on heuristics and careful design.

3. Query complexity and learning curve

Graph query languages like Cypher or SPARQL have different mental models than SQL. Developers need to think in patterns and traversals rather than sets and JOINs. The learning curve is real, and debugging graph queries requires understanding how the query planner expands paths.

Complex traversals can also return unexpectedly large result sets. A 4-hop traversal through densely connected nodes might touch millions of paths. Query profiling and result limiting become essential practices.
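
The arithmetic behind that blow-up is simple exponentiation. A quick sketch, assuming every node has the same number of neighbors (real graphs are skewed, so treat this as a rough upper bound; `max_paths` is a hypothetical helper):

```python
def max_paths(avg_degree: int, hops: int) -> int:
    """Upper bound on distinct paths when every node has avg_degree neighbors."""
    return avg_degree ** hops


# With 50 connections per node, each extra hop multiplies the work by 50
for hops in range(1, 5):
    print(f"{hops} hop(s): up to {max_paths(50, hops):,} paths")
```

At 50 connections per node, four hops already means up to 6,250,000 paths, which is why a LIMIT clause and a profiling pass belong in every exploratory query.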

4. Maintenance and evolution

Graphs grow. Without careful governance, they become tangled webs of inconsistent relationship types, redundant paths, and orphaned nodes. Establishing naming conventions, relationship type taxonomies, and data stewardship processes takes effort.

Version control for graph schemas is less mature than for relational databases. Tracking changes, rolling back modifications, and coordinating schema changes across teams requires custom tooling or careful process discipline.

5. Tooling and ecosystem maturity

The knowledge graph ecosystem is younger than the relational database ecosystem. Visualization tools, migration utilities, backup solutions, and monitoring dashboards exist but often lack the polish of their SQL counterparts.

Integration with existing data pipelines requires custom connectors. ETL tools have strong support for loading data into Postgres or Snowflake; support for Neo4j or Amazon Neptune is less comprehensive.

Cypher query language

Cypher is the declarative query language for Neo4j, the most widely adopted property graph database. It uses ASCII-art syntax to visually represent graph patterns, making queries intuitive once you grasp the basics.

Pattern matching fundamentals

The core syntax uses parentheses for nodes and arrows for relationships:

// Find all people

MATCH (p:Person)

RETURN p

// Find Alice specifically

MATCH (p:Person {name: 'Alice'})

RETURN p

// Find who Alice knows

MATCH (alice:Person {name: 'Alice'})-[:KNOWS]->(friend)

RETURN friend

// Find friends of friends

MATCH (alice:Person {name: 'Alice'})-[:KNOWS]->()-[:KNOWS]->(foaf)

RETURN DISTINCT foaf

The arrow direction matters. -[:KNOWS]-> finds outgoing relationships, <-[:KNOWS]- finds incoming ones, and -[:KNOWS]- ignores direction.

Variable-length paths

Cypher handles multi-hop traversals with path length specifiers:

// Find connections within 1 to 3 hops

MATCH (alice:Person {name: 'Alice'})-[:KNOWS*1..3]->(connection)

RETURN connection

// Find shortest path between two people

MATCH p = shortestPath(

(alice:Person {name: 'Alice'})-[:KNOWS*]-(bob:Person {name: 'Bob'})

)

RETURN p

Filtering and aggregation

Standard filtering and aggregation operations work similarly to SQL:

// Filter by property

MATCH (p:Person)-[:WORKS_AT]->(c:Company)

WHERE c.industry = 'Technology' AND p.experience_years > 5

RETURN p.name, c.name

// Aggregate relationship counts

MATCH (p:Person)-[:PURCHASED]->(product)

RETURN p.name, COUNT(product) AS purchase_count

ORDER BY purchase_count DESC

LIMIT 10

Creating and modifying data

Data manipulation follows a similar pattern syntax:

// Create nodes

CREATE (alice:Person {name: 'Alice', role: 'Engineer'})

CREATE (anthropic:Company {name: 'Anthropic', industry: 'AI'})

// Create relationships

MATCH (alice:Person {name: 'Alice'})

MATCH (anthropic:Company {name: 'Anthropic'})

CREATE (alice)-[:WORKS_AT {since: 2023}]->(anthropic)

// Update properties

MATCH (alice:Person {name: 'Alice'})

SET alice.role = 'Senior Engineer'

// Delete a node together with all of its relationships

MATCH (p:Person {name: 'TestUser'})

DETACH DELETE p

Advanced pattern queries

Cypher excels at complex pattern matching:

// Find triangles (mutual connections)

MATCH (a:Person)-[:KNOWS]->(b:Person)-[:KNOWS]->(c:Person)-[:KNOWS]->(a)

WHERE a.name < b.name AND a.name < c.name // Keep one rotation of each directed cycle

RETURN a.name, b.name, c.name

// Find paths through specific node types

MATCH path = (user:User)-[:PURCHASED]->(p:Product)<-[:MANUFACTURES]-(company:Company)

WHERE company.country = 'Japan'

RETURN user.name, p.name, company.name

// Collect related entities

MATCH (author:Author)-[:WROTE]->(book:Book)

RETURN author.name, COLLECT(book.title) AS books

Where knowledge graphs fit in the data architecture

Knowledge graphs don’t replace your existing databases. They complement them by serving specific use cases where relationship traversal dominates.

Integration layer for enterprise data

Large organizations run dozens of operational systems that each own a slice of business data. Customer data lives in Salesforce, product data in a PIM system, order data in an ERP, support interactions in Zendesk.

A knowledge graph sits above these systems as a semantic integration layer. Entity resolution maps records across systems to unified concepts. The graph stores relationships that span system boundaries. Analysts query the graph to answer cross-functional questions without learning five different query languages.

This pattern works well when you need to answer questions like “What’s the total business impact of this customer across all touchpoints?” or “Which products are frequently mentioned in support tickets by customers in specific industries?”

Real-time recommendation engines

Recommendation systems fundamentally operate on relationships: users connect to items through purchases, views, ratings, and similar behaviors. Graph-based recommendations traverse these connections to find items with high relevance signals.

Collaborative filtering becomes a graph traversal: find users similar to this user, find items those users liked, rank by connection strength. Content-based filtering works similarly: traverse from the current item through category, attribute, and similarity relationships to find related items.
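
Here is the collaborative-filtering traversal as a small Python sketch: user, to their items, to other users who share those items, to those users’ other items. The `recommend` function, the overlap-count scoring, and the sample purchases are all assumptions for illustration; production systems use richer signals and weighting.

```python
from collections import Counter


def recommend(purchases, user, top_n=3):
    """Rank items bought by users who share at least one purchase with `user`."""
    own = purchases[user]
    scores = Counter()
    for other, items in purchases.items():
        if other == user:
            continue
        overlap = len(own & items)  # shared purchases act as connection strength
        if overlap == 0:
            continue  # no path from `user` to this user
        for item in items - own:  # only suggest items the user lacks
            scores[item] += overlap
    # Sort by score (descending), then name, for a deterministic order
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [item for item, _ in ranked][:top_n]


# Hypothetical purchase data: user -> set of items
purchases = {
    "u1": {"laptop", "mouse"},
    "u2": {"laptop", "mouse", "dock"},
    "u3": {"laptop", "bag"},
    "u4": {"phone", "case"},
}
print(recommend(purchases, "u1"))
```

The dock outranks the bag because `u2` shares two purchases with `u1` while `u3` shares only one; `u4` has no connecting path and contributes nothing.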

Graph databases handle the real-time traversal requirements that make recommendations feel instantaneous. Pre-computing all recommendations isn’t feasible when you have millions of users and items; you need to compute personalized results on-demand.

Fraud detection and compliance

Fraudulent activity often involves hidden relationships: shell companies connected through shared directors, transaction patterns that form suspicious cycles, accounts linked through device fingerprints or IP addresses.

Knowledge graphs make these patterns queryable. Investigators can explore connection networks interactively. Automated detection systems run pattern queries to flag suspicious structures. The graph accumulates investigation history, so insights from past cases inform future detection.
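
One of those patterns, money moving in a loop, reduces to cycle detection on a directed graph. Below is a depth-first-search sketch; the `find_cycle` function and the account names are hypothetical, and a graph database would typically express this as a pattern query rather than application code.

```python
def find_cycle(transfers):
    """Return one directed cycle as a list of accounts, or None if there is none."""
    WHITE, GRAY, BLACK = 0, 1, 2  # unvisited, on the current path, finished
    color = {}
    stack = []  # accounts on the current DFS path

    def visit(node):
        color[node] = GRAY
        stack.append(node)
        for nxt in transfers.get(node, []):
            state = color.get(nxt, WHITE)
            if state == GRAY:
                # nxt is already on the current path: the path loops back to it
                return stack[stack.index(nxt):] + [nxt]
            if state == WHITE:
                found = visit(nxt)
                if found:
                    return found
        stack.pop()
        color[node] = BLACK
        return None

    for account in list(transfers):
        if color.get(account, WHITE) == WHITE:
            cycle = visit(account)
            if cycle:
                return cycle
    return None


# Hypothetical transfers: sender -> list of recipients
transfers = {
    "acct_a": ["acct_b"],
    "acct_b": ["acct_c"],
    "acct_c": ["acct_a"],
    "acct_d": ["acct_a"],
}
print(find_cycle(transfers))
```

The recursion keeps the sketch short; for large graphs you would want an iterative version or a database-side query.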

Similar patterns apply to anti-money-laundering compliance, sanctions screening, and beneficial ownership tracking.

Master data management

Master data (the core business entities like customers, products, suppliers, and locations) often exists in fragmented, inconsistent states across systems.

Knowledge graphs provide a canonical representation layer. Each entity gets a persistent identifier. Relationships to source system records track data lineage. Versioning captures how entity attributes change over time. Governance workflows route data quality issues for resolution.

The graph serves as the source of truth for entity resolution. When a new record arrives, it gets matched against existing graph entities before propagating to downstream systems.

Building an LLM-powered knowledge graph application with Neo4j

Here’s a practical implementation: a question-answering system that combines Neo4j’s graph capabilities with LLM reasoning. Users ask natural language questions, the system converts them to Cypher queries, executes against the graph, and generates human-readable responses.

Architecture overview

The system has four components:

- Neo4j database: Stores the knowledge graph with entities and relationships

- Query translation layer: Converts natural language to Cypher using an LLM

- Query execution: Runs the generated Cypher against Neo4j

- Response generation: Synthesizes query results into natural language answers

Setting up the graph

First, populate Neo4j with a domain-specific knowledge graph. For this example, we’ll use a technology company dataset:

// Create companies

CREATE (anthropic:Company {name: 'Anthropic', founded: 2021, industry: 'AI Safety', employees: 500})

CREATE (openai:Company {name: 'OpenAI', founded: 2015, industry: 'AI Research', employees: 1500})

CREATE (google:Company {name: 'Google DeepMind', founded: 2010, industry: 'AI Research', employees: 2000})

// Create people

CREATE (dario:Person {name: 'Dario Amodei', role: 'CEO', expertise: ['AI Safety', 'Machine Learning']})

CREATE (daniela:Person {name: 'Daniela Amodei', role: 'President', expertise: ['Operations', 'Policy']})

CREATE (sam:Person {name: 'Sam Altman', role: 'CEO', expertise: ['Startups', 'AI']})

// Create products

CREATE (claude:Product {name: 'Claude', type: 'LLM', launched: 2023})

CREATE (gpt4:Product {name: 'GPT-4', type: 'LLM', launched: 2023});

// Create relationships (each statement ends with a semicolon so the script runs as separate statements)

MATCH (dario:Person {name: 'Dario Amodei'}), (anthropic:Company {name: 'Anthropic'})

CREATE (dario)-[:WORKS_AT {since: 2021, position: 'CEO'}]->(anthropic)

CREATE (dario)-[:FOUNDED]->(anthropic);

MATCH (daniela:Person {name: 'Daniela Amodei'}), (anthropic:Company {name: 'Anthropic'})

CREATE (daniela)-[:WORKS_AT {since: 2021, position: 'President'}]->(anthropic)

CREATE (daniela)-[:FOUNDED]->(anthropic);

MATCH (claude:Product {name: 'Claude'}), (anthropic:Company {name: 'Anthropic'})

CREATE (anthropic)-[:DEVELOPS]->(claude);

MATCH (dario:Person {name: 'Dario Amodei'}), (openai:Company {name: 'OpenAI'})

CREATE (dario)-[:PREVIOUSLY_WORKED_AT {from: 2016, to: 2020}]->(openai);

The query translation prompt

The LLM needs context about the graph schema to generate valid Cypher:

SCHEMA_CONTEXT = """

You are a Cypher query generator for a Neo4j knowledge graph about technology companies.

## Node Types

- Company: name, founded (year), industry, employees (count)

- Person: name, role, expertise (list of strings)

- Product: name, type, launched (year)

## Relationship Types

- (Person)-[:WORKS_AT {since, position}]->(Company)

- (Person)-[:FOUNDED]->(Company)

- (Person)-[:PREVIOUSLY_WORKED_AT {from, to}]->(Company)

- (Company)-[:DEVELOPS]->(Product)

- (Company)-[:COMPETES_WITH]->(Company)

- (Person)-[:KNOWS]->(Person)

## Query Generation Rules

1. Use parameterized queries with $param syntax for user-provided values

2. Always use OPTIONAL MATCH for relationships that might not exist

3. Return specific properties, not entire nodes

4. Use DISTINCT to avoid duplicate results

5. Limit results to 25 unless the user specifies otherwise

Generate ONLY the Cypher query. No explanations.

"""

Implementation with Python

from typing import Optional

from anthropic import Anthropic
from neo4j import GraphDatabase


class GraphQASystem:
    def __init__(self, neo4j_uri: str, neo4j_user: str, neo4j_password: str):
        self.driver = GraphDatabase.driver(neo4j_uri, auth=(neo4j_user, neo4j_password))
        self.client = Anthropic()  # reads ANTHROPIC_API_KEY from the environment

    def generate_cypher(self, question: str) -> str:
        """Convert natural language question to Cypher query."""
        response = self.client.messages.create(
            model="claude-sonnet-4-20250514",
            max_tokens=1024,
            system=SCHEMA_CONTEXT,
            messages=[
                {"role": "user", "content": f"Generate a Cypher query to answer: {question}"}
            ],
        )
        cypher = response.content[0].text.strip()
        # Remove markdown code blocks if present
        if cypher.startswith("```"):
            cypher = cypher.split("```")[1]
            if cypher.startswith("cypher"):
                cypher = cypher[6:]
        return cypher.strip()

    def execute_query(self, cypher: str, params: Optional[dict] = None) -> list:
        """Execute Cypher query and return results."""
        with self.driver.session() as session:
            result = session.run(cypher, params or {})
            # Materialize records before the session closes
            return [dict(record) for record in result]

    def generate_response(self, question: str, query_results: list) -> str:
        """Generate natural language response from query results."""
        response = self.client.messages.create(
            model="claude-sonnet-4-20250514",
            max_tokens=1024,
            messages=[
                {
                    "role": "user",
                    "content": f"""Based on these query results, answer the user's question in natural language.

Question: {question}

Query Results: {query_results}

Provide a clear, conversational answer. If the results are empty, say you couldn't find that information.""",
                }
            ],
        )
        return response.content[0].text

    def ask(self, question: str) -> dict:
        """End-to-end question answering."""
        # Generate Cypher
        cypher = self.generate_cypher(question)

        # Execute query
        try:
            results = self.execute_query(cypher)
        except Exception as e:
            return {
                "question": question,
                "cypher": cypher,
                "error": str(e),
                "answer": "I encountered an error querying the database. The generated query might have syntax issues.",
            }

        # Generate response
        answer = self.generate_response(question, results)
        return {
            "question": question,
            "cypher": cypher,
            "results": results,
            "answer": answer,
        }

    def close(self):
        self.driver.close()


# Usage example
if __name__ == "__main__":
    qa = GraphQASystem(
        neo4j_uri="bolt://localhost:7687",
        neo4j_user="neo4j",
        neo4j_password="password",
    )

    questions = [
        "Who founded Anthropic?",
        "What products does Anthropic develop?",
        "Which people have worked at both OpenAI and Anthropic?",
        "List all AI companies and their CEOs",
    ]

    for q in questions:
        result = qa.ask(q)
        print(f"\nQ: {result['question']}")
        print(f"Cypher: {result['cypher']}")
        print(f"A: {result['answer']}")

    qa.close()

Extending with graph-aware RAG

The basic QA system handles direct queries. For more complex reasoning, combine graph traversal with document retrieval:

# Method to add to GraphQASystem
def graph_rag_query(self, question: str) -> str:
    """
    Enhanced RAG that uses graph structure to find relevant context.

    1. Extract entities from the question
    2. Find related entities via graph traversal
    3. Retrieve documents associated with those entities
    4. Generate answer with full context
    """
    # Step 1: Entity extraction
    # (extract_entities is not defined in this article; it is assumed
    # to return a list of entity names found in the text)
    entities = self.extract_entities(question)

    # Step 2: Graph expansion - find related context
    expansion_cypher = """
    MATCH (e)
    WHERE e.name IN $entities
    OPTIONAL MATCH (e)-[r*1..2]-(related)
    RETURN e.name AS entity,
           labels(e) AS entity_type,
           collect(DISTINCT {
               name: related.name,
               type: labels(related),
               relationship: type(r[0])
           }) AS related_entities
    """
    graph_context = self.execute_query(expansion_cypher, {"entities": entities})

    # Step 3: Retrieve associated documents
    doc_cypher = """
    MATCH (e)-[:HAS_DOCUMENT]->(d:Document)
    WHERE e.name IN $entities
    RETURN d.content AS content, d.source AS source, e.name AS entity
    LIMIT 10
    """
    documents = self.execute_query(doc_cypher, {"entities": entities})

    # Step 4: Generate response with full context
    context = f"""
    Graph Context: {graph_context}
    Related Documents: {documents}
    """
    return self.generate_response(question, context)
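
The code above calls `extract_entities`, which this article never defines. One simple way to fill that gap is a dictionary lookup against names already in the graph. The sketch below is an assumption, not the original implementation: the function signature and the `known_names` list are hypothetical, and an LLM or NER model could be swapped in for fuzzier matching.

```python
import re


def extract_entities(question: str, known_names: list[str]) -> list[str]:
    """Return known entity names that appear in the question (case-insensitive)."""
    found = []
    for name in known_names:
        # Match the whole name only, so "AI" does not match inside "OpenAI"
        pattern = r"(?<!\w)" + re.escape(name) + r"(?!\w)"
        if re.search(pattern, question, flags=re.IGNORECASE):
            found.append(name)
    return found


# Hypothetical list; in practice it could be loaded once from the graph
known = ["Anthropic", "OpenAI", "Claude", "Dario Amodei"]
print(extract_entities("Did Dario Amodei work at openai before Anthropic?", known))
```

Returning the canonical spelling from `known_names` (rather than the user’s spelling) matters here, because the follow-up Cypher filters on `e.name IN $entities` with exact matching.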

Adding conversational memory

For multi-turn conversations, maintain a session graph that tracks what’s been discussed:

class ConversationalGraphQA(GraphQASystem):
    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)
        self.conversation_history = []
        self.mentioned_entities = set()

    def ask_with_context(self, question: str) -> dict:
        # Format recent conversation history
        # (built here for prompt use; this sketch does not yet pass it to the LLM)
        history_context = "\n".join([
            f"User: {turn['question']}\nAssistant: {turn['answer']}"
            for turn in self.conversation_history[-5:]  # Last 5 turns
        ])

        # Track mentioned entities for coreference resolution
        enhanced_question = self.resolve_coreferences(question)
        result = self.ask(enhanced_question)

        # Update conversation state
        self.conversation_history.append({
            "question": question,
            "answer": result["answer"],
        })
        # extract_entities is not defined in this article; it is assumed
        # to return a list of entity names found in the text
        self.mentioned_entities.update(
            self.extract_entities(result["answer"])
        )
        return result

    def resolve_coreferences(self, question: str) -> str:
        """Replace pronouns with explicit entity references."""
        if not self.mentioned_entities:
            return question

        # Use LLM to resolve coreferences given conversation context
        response = self.client.messages.create(
            model="claude-sonnet-4-20250514",
            max_tokens=256,
            messages=[
                {
                    "role": "user",
                    "content": f"""Given these recently mentioned entities: {list(self.mentioned_entities)}

And this conversation history:
{self.conversation_history[-3:] if self.conversation_history else 'None'}

Rewrite this question to replace pronouns with explicit entity names:
"{question}"

Return only the rewritten question.""",
                }
            ],
        )
        return response.content[0].text.strip()

Production considerations

For production deployments, add these layers:

Query validation: Before executing generated Cypher, validate it against an allowlist of patterns. Block queries that could modify data or run expensive traversals.

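import re

SAFE_PATTERNS = [
    r"^MATCH.*RETURN.*$",
    r"^MATCH.*WHERE.*RETURN.*$",
    r"^MATCH.*OPTIONAL MATCH.*RETURN.*$",
]

# The allowlist alone also matches "MATCH (n) DETACH DELETE n RETURN 1",
# so write clauses are rejected explicitly. This check is deliberately
# conservative: it may reject reads whose string literals contain these words.
WRITE_CLAUSES = re.compile(r"\b(CREATE|MERGE|DELETE|SET|REMOVE|DROP|FOREACH|CALL|LOAD CSV)\b")

def validate_cypher(cypher: str) -> bool:
    cypher_normalized = " ".join(cypher.upper().split())
    if WRITE_CLAUSES.search(cypher_normalized):
        return False
    return any(re.match(pattern, cypher_normalized) for pattern in SAFE_PATTERNS)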

Query caching: Cache generated Cypher for common questions. LLM calls are expensive; don’t regenerate the same query repeatedly.
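
A minimal sketch of such a cache, assuming an in-process dictionary is enough. The `CypherCache` class and its normalization rule are hypothetical; `fake_generate` stands in for the LLM-backed `generate_cypher` so the example runs without an API key. A shared deployment would more likely use an external cache with expiry.

```python
class CypherCache:
    """Memoize question -> Cypher so repeated questions skip the LLM call."""

    def __init__(self, generate):
        self._generate = generate  # any callable: question -> Cypher string
        self._cache = {}

    @staticmethod
    def _key(question: str) -> str:
        # Ignore case, extra whitespace, and trailing punctuation
        return " ".join(question.lower().split()).rstrip("?!. ")

    def get(self, question: str) -> str:
        key = self._key(question)
        if key not in self._cache:
            self._cache[key] = self._generate(question)
        return self._cache[key]


calls = []


def fake_generate(question):
    """Stand-in for the LLM call; records how often it is invoked."""
    calls.append(question)
    return "MATCH (p:Person)-[:FOUNDED]->(c:Company {name: 'Anthropic'}) RETURN p.name"


cache = CypherCache(fake_generate)
first = cache.get("Who founded Anthropic?")
second = cache.get("  who founded   anthropic ")
print(len(calls))
```

Remember to clear the cache whenever the schema prompt changes, since cached queries were generated against the old schema.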

Result limiting: Always enforce LIMIT clauses to prevent runaway queries from overwhelming the database or the response generation.
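
One way to enforce this in code is to post-process the generated query. The sketch below is a simple string-level guard and the `enforce_limit` helper is hypothetical: it only understands a literal numeric LIMIT at the very end of the query, so parameterized limits, UNION queries, and subqueries would need real parsing.

```python
import re

# A literal numeric LIMIT at the end of the query, optionally followed by ';'
LIMIT_RE = re.compile(r"\bLIMIT\s+(\d+)\s*;?\s*$", re.IGNORECASE)


def enforce_limit(cypher: str, max_rows: int = 25) -> str:
    """Append a LIMIT, or lower an existing trailing LIMIT that exceeds max_rows."""
    query = cypher.strip().rstrip(";").strip()
    match = LIMIT_RE.search(query)
    if match is None:
        return f"{query} LIMIT {max_rows}"
    if int(match.group(1)) > max_rows:
        return f"{query[:match.start()]}LIMIT {max_rows}"
    return query


print(enforce_limit("MATCH (p:Person) RETURN p.name"))
print(enforce_limit("MATCH (p:Person) RETURN p.name LIMIT 1000;"))
print(enforce_limit("MATCH (p:Person) RETURN p.name LIMIT 5"))
```

A database-side transaction timeout is a useful second line of defense, because LIMIT caps the rows returned, not the work done to find them.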

Observability: Log generated queries, execution times, and result counts. Monitor for query failures and patterns that indicate schema gaps.
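
A lightweight way to start is a wrapper around query execution that records timing and row counts with the standard `logging` module. The `run_logged` function and the `graph_qa` logger name are assumptions; the lambda stands in for `execute_query` so the example runs without a database.

```python
import logging
import time

logger = logging.getLogger("graph_qa")  # hypothetical logger name


def run_logged(execute, cypher: str):
    """Run execute(cypher), logging duration and row count; re-raise on failure."""
    started = time.perf_counter()
    try:
        rows = execute(cypher)
    except Exception:
        # logger.exception includes the traceback in the log record
        logger.exception("query failed: %s", cypher)
        raise
    elapsed_ms = (time.perf_counter() - started) * 1000
    logger.info("query ok rows=%d ms=%.1f cypher=%s", len(rows), elapsed_ms, cypher)
    return rows


# Stand-in executor so the sketch runs without Neo4j
rows = run_logged(
    lambda q: [{"name": "Claude"}],
    "MATCH (p:Product) RETURN p.name AS name",
)
print(rows)
```

Queries that repeatedly return zero rows are worth reviewing: they often point to a relationship type the schema prompt describes but the graph does not contain.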

Conclusion

Knowledge graphs transform how you model and query connected data. They make relationships explicit, enable powerful traversal queries, and provide a flexible foundation for integrating data across systems.

The learning curve is real. Graph thinking differs from relational thinking. Query optimization requires new mental models. Data quality challenges get amplified when everything connects.

But for the right use cases (recommendations, fraud detection, knowledge management, semantic search), the investment pays off. Neo4j’s Cypher language makes graph queries approachable. LLM integration opens natural language interfaces that lower the barrier to graph exploration.

Start with a focused use case where relationships matter more than individual records. Build fluency with graph patterns before attempting enterprise-scale integration. The dots connect faster than you’d expect.

Connecting the Dots with Graphs was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.