Building Self-Correcting RAG Systems

Standard RAG pipelines have a fatal flaw: they retrieve once and hope for the best. When the retrieved documents don’t match the user’s intent, the system generates confident nonsense. No feedback loop. No self-correction. No second chances.

Agentic RAG changes this. Instead of blindly generating answers from whatever documents come back, an agent evaluates relevance first. If the retrieved content doesn’t cut it, the system rewrites the query and tries again. This creates a self-healing retrieval pipeline that handles edge cases gracefully.

This article walks through building a production-grade Agentic RAG system using LangGraph for orchestration and Redis as the vector store. We’ll cover the architecture, the decision logic, and the state machine wiring that makes it all work.

The problem with “retrieve and pray”

Picture this: your knowledge base contains detailed documentation titled “Parameter-Efficient Training Methods for Large Language Models.” A user asks, “What’s the best way to fine-tune LLMs?”

The semantic similarity is there, but it’s not strong enough. Your retriever pulls back tangentially related chunks about model architecture instead. The LLM, having no way to know the context is wrong, generates a plausible-sounding but incorrect answer.

The user loses trust. Your RAG system looks broken.

Traditional RAG has no mechanism to detect this failure mode. It treats retrieval as a one-shot operation: query in, documents out, answer generated. Done.

Agentic RAG introduces checkpoints. An agent decides whether to retrieve at all. A grading step evaluates whether retrieved documents are relevant. A rewrite step reformulates failed queries. The system loops until it gets relevant context or exhausts its retry budget.
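The retrieve, grade, rewrite loop described above can be sketched in plain Python. This is a minimal illustration rather than the article's implementation: `retrieve`, `grade`, and `rewrite` are hypothetical callables standing in for the vector search and the two LLM calls, and `max_retries` is an assumed name for the retry budget.

```python
from typing import Callable, List, Optional, Tuple


def retrieve_with_retries(
    question: str,
    retrieve: Callable[[str], List[str]],
    grade: Callable[[str, List[str]], bool],
    rewrite: Callable[[str], str],
    max_retries: int = 2,
) -> Tuple[Optional[List[str]], str, int]:
    """Retrieve, grade, and rewrite until the context is relevant or the budget runs out.

    Returns (documents or None, final query, number of rewrites performed).
    """
    query = question
    for rewrites in range(max_retries + 1):
        docs = retrieve(query)
        # Grade against the original question, not the rewritten query.
        if grade(question, docs):
            return docs, query, rewrites
        if rewrites < max_retries:
            query = rewrite(query)
    # Budget exhausted: signal failure instead of generating from bad context.
    return None, query, max_retries


# Toy stand-ins (hypothetical) so the sketch runs without any external service.
CORPUS = {"parameter-efficient": ["Chunk about parameter-efficient training."]}


def toy_retrieve(query: str) -> List[str]:
    return [doc for key, docs in CORPUS.items() if key in query.lower() for doc in docs]


def toy_grade(question: str, docs: List[str]) -> bool:
    return len(docs) > 0


def toy_rewrite(query: str) -> str:
    return query + " parameter-efficient training methods"


docs, final_query, rewrites = retrieve_with_retries(
    "What's the best way to fine-tune LLMs?", toy_retrieve, toy_grade, toy_rewrite
)
```

With these toy functions the first retrieval comes back empty, one rewrite adds the missing terminology, and the second attempt succeeds. When the budget runs out, the function returns `None` for the documents so the caller can decline to answer.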

Architectural flow

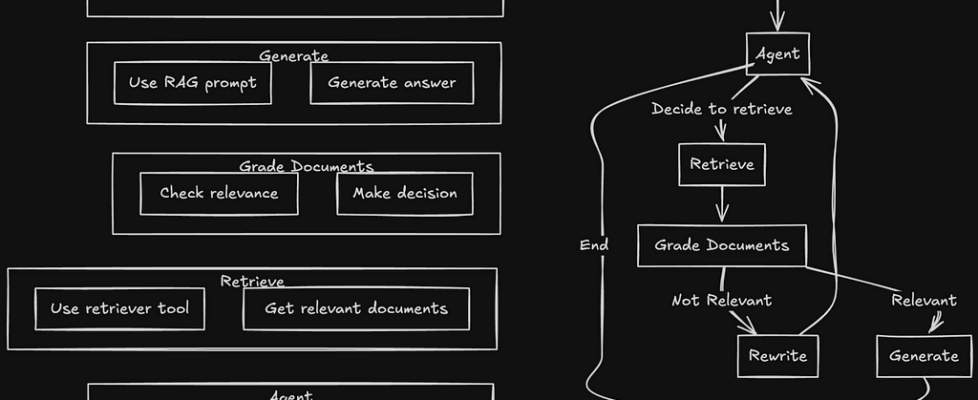

The system breaks down into six distinct components, each with a single responsibility:

Configuration layer handles environment variables and API client setup. Redis connection strings, OpenAI keys, and model names are all centralized in one place.

Retriever setup downloads source documents (in this case, Lilian Weng’s blog posts on agents), splits them into chunks, embeds them with OpenAI’s embedding model, and stores everything in Redis via RedisVectorStore. The retriever then gets wrapped as a tool the agent can call.

Agent node receives the user’s question and makes the first decision: should I call the retriever tool, or can I answer this directly? If the question requires external knowledge, the agent invokes retrieval.

Grade edge evaluates whether retrieved documents are relevant to the original question. This is the critical checkpoint. Relevant documents flow to generation. Irrelevant documents trigger a rewrite.

Rewrite node transforms the original question into a better search query. The user’s phrasing was too colloquial. Key terms were missing. The rewriter reformulates and sends the new query back to the agent for another retrieval attempt.

Generate node takes relevant documents and produces the final answer. This only runs after the grading step confirms the context is appropriate.

The decision flow

Here’s how a query moves through the system:

            User Question
                  ↓
                Agent ─────────────────────────────────┐
                  ↓                                    │
       [Calls retriever tool]                          │
                  ↓                                    │
         Retrieve documents                            │
                  ↓                                    │
           Grade documents                             │
                  ↓                                    │
         ┌─────────────────┐                           │
         │    Relevant?    │                           │
         └────────┬────────┘                           │
                  │                                    │
             Yes  │  No                                │
              │      │                                 │
              ↓      └────→ Rewrite query ─────────────┘
          Generate
              ↓
           Answer

The feedback loop from “Rewrite” back to “Agent” is what makes this agentic. The system doesn’t fail silently; it adapts and retries.

Project structure

The codebase follows a clean separation of concerns:

src/
├── config/
│   ├── settings.py      # Environment variables
│   └── openai.py        # Model names and API clients
├── retriever.py         # Document ingestion and Redis vector store
├── agents/
│   ├── nodes.py         # Agent, rewrite, and generate functions
│   ├── edges.py         # Document grading logic
│   └── graph.py         # LangGraph state machine
└── main.py              # Entry point

Each file does one thing. Configuration stays in config/. Agent logic stays in agents/. The retriever handles all vector store operations. This makes testing and debugging straightforward.

Configuration: centralizing secrets and clients

The configuration layer serves two purposes: loading environment variables and providing consistent API clients across the codebase.

settings.py loads Redis connection strings, OpenAI API keys, and the index name from environment variables. All configuration lives here, not scattered across files.
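A settings module along these lines might look like the sketch below. The environment variable names (`REDIS_URL`, `OPENAI_API_KEY`, `INDEX_NAME`), the defaults, and the `Settings` class are assumptions made for illustration; the actual repository may name them differently.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    redis_url: str
    openai_api_key: str
    index_name: str


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Read all configuration from the environment in one place."""
    # Fail fast on a missing secret rather than at the first API call.
    api_key = env.get("OPENAI_API_KEY")
    if not api_key:
        raise RuntimeError("OPENAI_API_KEY is not set")
    return Settings(
        redis_url=env.get("REDIS_URL", "redis://localhost:6379"),
        openai_api_key=api_key,
        index_name=env.get("INDEX_NAME", "agentic_rag"),
    )
```

Passing the environment in as a mapping keeps the loader easy to test: a unit test can hand it a plain dictionary instead of patching `os.environ`.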

openai.py creates the embedding model and LLM client instances. When you need to switch from gpt-4o-mini to a different model, you change one file. When you need to adjust embedding dimensions, you change one file. No hunting through the codebase.

This pattern matters more than it seems. Production systems evolve. Models get deprecated. API keys rotate. Centralizing configuration means these changes happen in one place.

Retriever: building the knowledge base with Redis

The retriever handles the ingestion pipeline: fetching documents, splitting them into chunks, generating embeddings, and storing everything in Redis for fast similarity search.

The source documents are Lilian Weng’s blog posts on agents and prompt engineering. These get loaded via WebBaseLoader, split into manageable chunks using RecursiveCharacterTextSplitter, and embedded with OpenAI’s embedding model.
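To make the chunking step concrete, here is a simplified stand-in: fixed-size character windows with overlap. It is not what RecursiveCharacterTextSplitter does internally (that splitter prefers to break on separators such as paragraph and line boundaries before falling back to smaller units); the function name and default sizes are assumptions chosen for the example.

```python
from typing import List


def split_with_overlap(text: str, chunk_size: int = 200, overlap: int = 50) -> List[str]:
    """Split text into fixed-size character windows that overlap by `overlap` characters."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reaches the end of the text
    return chunks


chunks = split_with_overlap("abcdefghij", chunk_size=4, overlap=1)
```

The overlap means a sentence that straddles a chunk boundary still appears whole in at least one chunk, at the cost of storing some text twice.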

Redis stores the vectors via RedisVectorStore. The retriever gets wrapped as a LangChain tool using create_retriever_tool. This wrapping is important: it lets the agent call retrieval as a tool, which means the agent can decide whether to retrieve at all.

Why Redis? Speed and simplicity. Redis handles vector similarity search without the operational overhead of a dedicated vector database, provided the deployment includes its search and query capability (for example, Redis Stack). For systems that already run Redis, this adds RAG capabilities without new infrastructure.
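Conceptually, a similarity search ranks stored vectors by how close they are to the query vector. The sketch below does this with a brute-force cosine similarity scan over a toy in-memory index. It only illustrates the idea: a real vector store uses an index structure instead of scanning every vector, and the two-dimensional vectors and document ids here are invented.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(
    query_vec: Sequence[float], index: Dict[str, Sequence[float]], k: int = 2
) -> List[Tuple[str, float]]:
    """Return the k document ids most similar to the query, best first."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in index.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Toy embeddings (invented): first axis ~ "fine-tuning", second ~ "architecture".
index = {
    "finetuning": [1.0, 0.0],
    "architecture": [0.0, 1.0],
    "mixed": [1.0, 1.0],
}
results = top_k([1.0, 0.2], index, k=2)
```

The ranking is only as good as the embeddings. If the query and the right document sit far apart in vector space, the top results will be the wrong chunks, which is the failure the grading step exists to catch.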

Agent nodes: the decision makers

Three functions in nodes.py handle the core logic:

The agent function receives the current state (including the user’s question and any message history) and decides what to do next. It has access to tools, including the retriever. If the question requires external knowledge, the agent calls the retriever tool. If not, it answers directly.

The rewrite function takes a question that failed retrieval grading and reformulates it. The rewriter prompts the LLM to generate a better search query, one that's more likely to pull back relevant documents. This reformulated question gets passed back to the agent for another attempt.

The generate function produces the final answer. It receives the original question and the relevant documents (now confirmed relevant by the grading step) and generates a response grounded in that context.

Each function is stateless. State flows through the graph, not through function internals. This makes the system easier to test and debug.
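One way to picture a stateless node is as a function that reads the state and returns only an update, leaving the merge to the caller. The sketch below shows a rewrite node in that style. The state shape, the prompt wording, the injected `llm` callable, and `apply_update` are all assumptions for illustration; in LangGraph the merge is performed by the graph itself, not by a hand-written helper.

```python
from typing import Callable, Dict, List

State = Dict[str, List[dict]]  # assumed shape: {"messages": [{"role": ..., "content": ...}]}


def make_rewrite_node(llm: Callable[[str], str]) -> Callable[[State], State]:
    """Build a rewrite node around an injected LLM callable (hypothetical interface)."""

    def rewrite(state: State) -> State:
        question = state["messages"][0]["content"]  # the original user question
        prompt = (
            "Rewrite this question as a search query that is more likely to "
            f"match relevant documentation:\n{question}"
        )
        # Return only the update; the node never mutates the incoming state.
        return {"messages": [{"role": "user", "content": llm(prompt)}]}

    return rewrite


def apply_update(state: State, update: State) -> State:
    """Merge an update by appending messages, producing a new state object."""
    return {"messages": state["messages"] + update["messages"]}


# A fake LLM keeps the example deterministic and offline.
rewrite = make_rewrite_node(lambda prompt: "parameter-efficient fine-tuning methods for LLMs")
state = {"messages": [{"role": "user", "content": "What's the best way to fine-tune LLMs?"}]}
new_state = apply_update(state, rewrite(state))
```

Because the node holds no state of its own, a test can call it with a hand-built state and a fake LLM and assert on the returned update.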

Edge logic: grading document relevance

The grade_documents function in edges.py is the checkpoint that makes this system agentic.

After retrieval, this function evaluates each document against the original question. Is this document relevant? Does it contain information that would help answer the query?

The grading logic uses an LLM call with a structured prompt. The prompt asks the model to evaluate relevance and return a binary decision: relevant or not relevant.

If documents pass the grade, the function returns “generate”, routing the flow to answer generation. If documents fail, it returns “rewrite”, triggering query reformulation.
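The routing decision can be sketched as follows. The function name `grade_and_route`, its signature, the prompt text, and the policy of passing as soon as any one document is graded relevant are assumptions for this example; the article's `grade_documents` may differ on each point. The `llm` argument is a hypothetical callable that takes a prompt and returns the model's text reply.

```python
from typing import Callable, List

GRADER_PROMPT = (
    "You are grading whether a retrieved document is relevant to a question.\n"
    "Question: {question}\n"
    "Document: {document}\n"
    "Answer with a single word: yes or no."
)


def grade_and_route(question: str, documents: List[str], llm: Callable[[str], str]) -> str:
    """Return "generate" if any document is graded relevant, otherwise "rewrite"."""
    for document in documents:
        verdict = llm(GRADER_PROMPT.format(question=question, document=document))
        # Normalize the reply; anything other than a clear "yes" counts as not relevant.
        if verdict.strip().lower().startswith("yes"):
            return "generate"
    return "rewrite"


def fake_llm(prompt: str) -> str:
    # Deterministic stand-in: "relevant" means the document text mentions fine-tuning.
    document_part = prompt.split("Document: ")[1]
    return "Yes" if "fine-tuning" in document_part else "no"


route_bad = grade_and_route("How do I fine-tune LLMs?", ["Transformer architecture overview"], fake_llm)
route_good = grade_and_route("How do I fine-tune LLMs?", ["A guide to fine-tuning"], fake_llm)
```

Treating every unclear reply as "not relevant" errs toward an extra rewrite rather than toward generating from doubtful context.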

This evaluation step catches the failure mode that kills standard RAG systems. Instead of generating from irrelevant context, the system gets another chance to find better documents.

Graph wiring: the LangGraph state machine

graph.py ties everything together using LangGraph’s state machine primitives.

The graph defines nodes (agent, retrieve, generate, rewrite) and edges (the connections between them, including conditional routing based on grading results).

The wiring looks like this:

- Start → Agent: every query starts at the agent node

- Agent → Retrieve: if the agent calls the retriever tool, flow moves to retrieval

- Retrieve → Grade: after retrieval, documents get graded

- Grade → Generate (if relevant): relevant documents flow to generation

- Grade → Rewrite (if not relevant): irrelevant documents trigger rewriting

- Rewrite → Agent: the reformulated query goes back to the agent

- Generate → End: the answer gets returned

LangGraph handles the state management. Each node receives the current state and returns updates. The graph engine routes messages based on conditional edge logic.
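To show what this wiring amounts to, here is a tiny state machine in plain Python. It deliberately avoids the library: LangGraph exposes the same ideas through `StateGraph` with `add_node`, `add_edge`, and `add_conditional_edges`, while `run_graph`, the `END` marker, and the toy nodes below are hypothetical stand-ins. The toy agent always retrieves, which simplifies the real agent's decision.

```python
from typing import Callable, Dict, Iterator, Tuple

State = Dict[str, object]
END = "end"


def run_graph(
    nodes: Dict[str, Callable[[State], State]],
    edges: Dict[str, Callable[[State], str]],
    state: State,
    start: str = "agent",
    max_steps: int = 20,
) -> Iterator[Tuple[str, State]]:
    """Run nodes in sequence, yielding (node name, update) after each step."""
    current, steps = start, 0
    while current != END:
        if steps >= max_steps:
            raise RuntimeError("max_steps exceeded; the graph may be looping")
        update = nodes[current](state)
        state.update(update)  # simplest possible merge: last write wins
        yield current, update
        current = edges[current](state)  # each edge picks the next node from the state
        steps += 1


nodes = {
    "agent": lambda s: {"query": s.get("rewritten", s["question"])},
    "retrieve": lambda s: {"docs": ["chunk"] if "parameter-efficient" in s["query"] else []},
    "rewrite": lambda s: {"rewritten": s["question"] + " parameter-efficient training"},
    "generate": lambda s: {"answer": f"Answer grounded in {len(s['docs'])} chunk(s)"},
}
edges = {
    "agent": lambda s: "retrieve",
    "retrieve": lambda s: "generate" if s["docs"] else "rewrite",  # the grading decision
    "rewrite": lambda s: "agent",
    "generate": lambda s: END,
}

state = {"question": "How do I fine-tune LLMs?"}
trace = [name for name, _ in run_graph(nodes, edges, state)]
```

Yielding after every node gives the caller a step-by-step trace of the run, and `max_steps` is a blunt guard against a rewrite loop that never converges.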

Runtime: what happens when you run main.py

The entry point builds the graph, sends a user question, and streams results.

build_graph() constructs the LangGraph state machine and initializes the retriever tool. This happens once at startup.

When a question comes in, the flow proceeds:

- The agent receives the question and decides to call retrieval

- Documents come back from Redis

- The grading step evaluates relevance

- If relevant, generation produces an answer

- If not relevant, rewriting reformulates the query and the loop continues

The main.py script streams node outputs to the console, so you can watch the decision-making in real time. You see when retrieval happens, when grading passes or fails, and when rewriting kicks in.

Why this architecture matters

Three properties make Agentic RAG superior to standard RAG:

Self-correction: the system detects poor retrieval and fixes it. No silent failures. No confident wrong answers from irrelevant context.

Transparency: the state machine makes decision points explicit. You can log every routing decision. You can audit why the system chose to rewrite. Debugging becomes tractable.

Modularity: each component has a single responsibility. Swap Redis for Pinecone by changing the retriever. Swap OpenAI for Anthropic by changing the config. The architecture doesn’t care.
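The modularity claim rests on the rest of the system depending on a narrow retriever interface, not on a specific store. A sketch of that idea follows, using a structural `Protocol`. The `Retriever` protocol, its `search` method, and the keyword-overlap backend are invented for this example and are not part of LangChain or the article's code.

```python
from typing import List, Protocol


class Retriever(Protocol):
    def search(self, query: str, k: int) -> List[str]: ...


class InMemoryRetriever:
    """Toy keyword-overlap backend; a class backed by a real vector store
    would expose the same method."""

    def __init__(self, docs: List[str]) -> None:
        self._docs = docs

    def search(self, query: str, k: int) -> List[str]:
        terms = set(query.lower().split())
        ranked = sorted(
            self._docs,
            key=lambda doc: len(terms & set(doc.lower().split())),
            reverse=True,
        )
        return ranked[:k]


def build_context(retriever: Retriever, question: str, k: int = 2) -> str:
    # Depends only on the Retriever interface, not on a concrete vector store.
    return "\n".join(retriever.search(question, k))


retriever = InMemoryRetriever(
    ["redis vector search", "agent tools", "fine-tune llms with adapters"]
)
context = build_context(retriever, "fine-tune llms", k=1)
```

Swapping backends then means writing one new class with a `search` method; `build_context` and everything downstream stay unchanged.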

When to use this pattern

Agentic RAG makes sense when:

- Your queries vary in phrasing and your users don’t write like your documentation

- You need to explain why the system retrieved what it retrieved

- You’re willing to trade latency for accuracy (rewriting adds LLM calls)

- A wrong answer costs you more than a slow one

It’s overkill when:

- Your queries are predictable and uniform

- Latency requirements are strict and can’t tolerate retries

- Your retrieval quality is already high enough
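The latency trade-off in the lists above can be put into rough numbers by counting LLM calls per query. The count below assumes one agent call, one grading call per retrieval attempt, one rewrite call per failed attempt, and one final generation call. Those per-step counts are assumptions about the pipeline, not measurements, and embedding calls are left out.

```python
def llm_calls(rewrites: int) -> int:
    """Count LLM calls for a query that is answered after `rewrites` reformulations."""
    if rewrites < 0:
        raise ValueError("rewrites must be non-negative")
    attempts = rewrites + 1
    agent_calls = attempts    # one tool-calling decision per attempt
    grading_calls = attempts  # assumed: one grading call per retrieval
    rewrite_calls = rewrites  # one per failed attempt
    generate_calls = 1        # the final answer
    return agent_calls + grading_calls + rewrite_calls + generate_calls


calls_by_rewrites = {r: llm_calls(r) for r in range(3)}
```

Under these assumptions a query that passes grading on the first try costs three LLM calls, against a single generation call in a retrieve-once pipeline, and each rewrite adds three more.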

Wrapping up

Standard RAG treats retrieval as a black box: query goes in, documents come out, hope they’re relevant. Agentic RAG opens that box and adds checkpoints.

The combination of LangGraph and Redis gives you a production-ready foundation. LangGraph handles the state machine complexity. Redis handles fast vector search. The grading and rewriting logic handles the edge cases that break simpler systems.

The code for this implementation is available in the GitHub repo. Clone it, run it, and adapt it to your use case.

Your RAG system doesn’t have to fail silently. Give it the ability to try again.

Building Self-Correcting RAG Systems was originally published in Towards AI on Medium.