Baby Shazam: Reverse Audio Search Engine with Qdrant + Discover Similar

There’s a very specific kind of nerd joy in hearing a random track in a cafe, pulling out your phone, and having an app instantly tell you not only what it is, but also recommend five more tracks in the same vibe. That magic is no longer reserved for Shazam or Google. With modern audio embeddings and a good vector database you can ship something dangerously close from your laptop.

Shazam’s classic architecture is built on audio fingerprinting, not neural embeddings. The rough idea is quite simple:

- Compute a spectrogram of the audio.

- Pick the most prominent peaks to form a constellation map.

- Convert these peak patterns into compact hashes (audio fingerprints).

- Perform fast hash lookups against a giant fingerprint index to find a match.
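To make the last two steps concrete, here is a minimal pure‑Python sketch of peak‑pair hashing and offset voting. It assumes the spectrogram peaks have already been extracted as `(time_frame, frequency_bin)` tuples. The function names, the `fan_out` value, and the tuple‑based hash are illustrative assumptions, not Shazam’s actual implementation.

```python
from collections import Counter, defaultdict


def fingerprint(peaks, fan_out=3):
    """Pair each peak with the next `fan_out` peaks in time.

    Returns a list of (hash, anchor_time), where hash = (f1, f2, dt).
    """
    peaks = sorted(peaks)
    hashes = []
    for i, (t1, f1) in enumerate(peaks):
        for t2, f2 in peaks[i + 1 : i + 1 + fan_out]:
            hashes.append(((f1, f2, t2 - t1), t1))
    return hashes


def build_index(tracks):
    """Map each hash to the (track_id, anchor_time) pairs where it occurs."""
    index = defaultdict(list)
    for track_id, peaks in tracks.items():
        for h, t in fingerprint(peaks):
            index[h].append((track_id, t))
    return index


def match(index, query_peaks):
    """Vote for (track_id, time_offset); a true match piles votes on one offset."""
    votes = Counter()
    for h, t_query in fingerprint(query_peaks):
        for track_id, t_track in index.get(h, []):
            votes[(track_id, t_track - t_query)] += 1
    if not votes:
        return None
    (track_id, offset), count = votes.most_common(1)[0]
    return track_id, offset, count


# Toy data: peaks are (time_frame, frequency_bin) tuples.
tracks = {
    "song_a": [(0, 10), (1, 40), (2, 25), (3, 60), (4, 15), (5, 33)],
    "song_b": [(0, 12), (1, 48), (2, 20), (3, 55), (4, 70), (5, 30)],
}
index = build_index(tracks)

# A clip cut from song_a starting at frame 2, so clip times restart at 0.
clip = [(t - 2, f) for t, f in tracks["song_a"][2:]]
result = match(index, clip)
print(result)  # ('song_a', 2, 6)
```

Because the hash stores only frequency pairs and a time delta, the clip matches no matter where in the song it was recorded. The winning offset also tells you where in the track the clip starts.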

This is insanely fast and robust to noise. But it’s also extremely literal. It’s built to answer:

“Is this exact recording in the database?”

It’s not built to answer:

- “Give me tracks that sound like this.”

- “Find covers / remixes / live versions of this.”

- “Find songs in the same mood / timbre / instrumentation.”

Those are semantic questions. They need something richer than discrete hashes: dense vector embeddings.

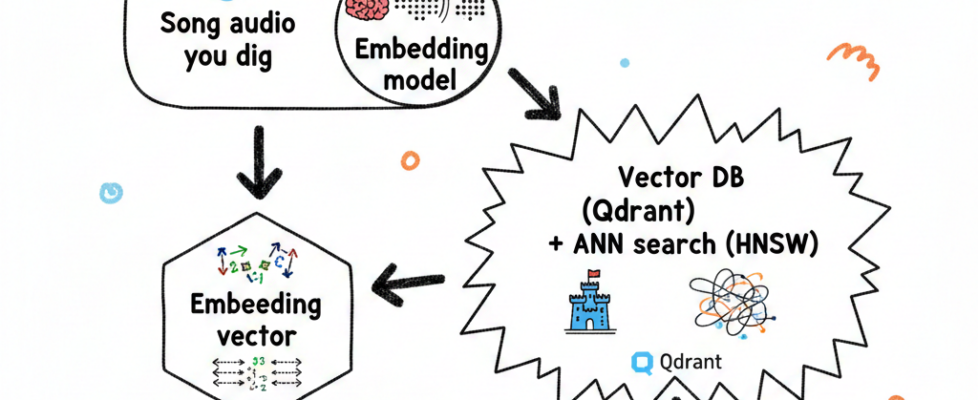

The modern stack for “Shazam‑but‑smarter” looks something like this:

Song audio you dig

↓

Embedding model

↓

Embedding vector

↓

Vector DB (Qdrant) + ANN search (HNSW)

↓

Top‑K similar songs + metadata

Audio embeddings in practice

Instead of fingerprints, we feed audio into a pre‑trained model and get back a dense vector that encodes timbre, phonetics, texture, rhythm, and sometimes even higher‑order semantics.

One very practical model here: Wav2Vec2‑large‑XLSR‑53. Architecturally, it’s a BERT‑like transformer encoder with a 1024‑dim hidden state (768 is the size of the base Wav2Vec2 variant), pretrained on multilingual speech.

The trick is:

- You feed in audio at 16 kHz.

- The model outputs a sequence of hidden states over time.

- You pool over time to get a single 1024‑dim embedding for the clip.

Two clips that sound similar → vectors close together (high cosine similarity).
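The pooling and similarity steps are simple enough to sketch in plain Python. The toy 4‑dim “hidden states” below are made up for illustration. A real Wav2Vec2 model emits roughly 50 frames per second, each with the model’s full hidden size.

```python
import math


def mean_pool(frames):
    """Average a sequence of per-frame vectors into one clip-level vector."""
    n = len(frames)
    dim = len(frames[0])
    return [sum(frame[d] for frame in frames) / n for d in range(dim)]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy hidden states: 3 frames x 4 dims per clip.
clip_a = [[1.0, 0.0, 2.0, 0.0], [1.0, 0.2, 1.8, 0.0], [0.9, 0.1, 2.1, 0.1]]
clip_b = [[1.1, 0.1, 1.9, 0.0], [0.9, 0.0, 2.0, 0.1], [1.0, 0.1, 2.2, 0.0]]
clip_c = [[0.0, 2.0, 0.0, 1.0], [0.1, 1.9, 0.0, 1.2], [0.0, 2.1, 0.1, 0.9]]

emb_a, emb_b, emb_c = mean_pool(clip_a), mean_pool(clip_b), mean_pool(clip_c)
sim_ab = cosine_similarity(emb_a, emb_b)  # similar clips: close to 1
sim_ac = cosine_similarity(emb_a, emb_c)  # dissimilar clips: close to 0
print(f"a vs b: {sim_ab:.3f}, a vs c: {sim_ac:.3f}")
```

Mean pooling throws away temporal order, which is a reasonable trade for a fixed‑size vector but worth keeping in mind when comparing a 5‑second clip to a full track.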

Why Qdrant Is a Good Fit for This

At this point, you have vectors. You could technically dump them into any vector DB or even FAISS. But Qdrant brings several very specific properties that matter a lot once you go beyond proof‑of‑concept.

1. Rust + HNSW = Fast, Predictable Latency

Qdrant is an open‑source vector DB written in Rust, using HNSW (Hierarchical Navigable Small World graphs) under the hood for approximate nearest neighbor search.

Key points that matter for audio:

- Cosine distance is fully supported and idiomatic for embeddings.

- HNSW gives near‑logarithmic search time instead of scanning everything.

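- With tuned parameters (m and ef_construct at build time, hnsw_ef at search time) you can get millisecond‑level search latency at tens of millions of vectors.

The result:

A 5‑second user recording can be embedded and searched across tens of millions of tracks with a target of sub‑100 ms end‑to‑end, which is the UX bar set by Shazam. In practice the embedding model, not the vector search, tends to dominate that budget.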

2. Filtering Is First‑Class, Not an Afterthought

Many vector search setups do approximate search first, then filter on metadata. That means you:

- Fetch a big candidate set

- Throw half of it away because it doesn’t match filters like genre, region, or user scope

Qdrant supports filter‑aware search: it applies payload filters during HNSW traversal, not just afterwards, so the traversal itself respects constraints on fields like genre, user_id, release_date, etc.

This matters when you want stuff like:

- Songs similar to this clip, but only from the user’s library

- Similar songs, but only indie releases from, say, 2018‑2024

- Same vibe, but restrict to this label / region
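As a rough sketch, a filtered query like the second one can be expressed as a request body in the shape Qdrant’s REST search endpoint accepts (`vector`, `limit`, `filter.must`, `match`, `range`). The helper name `build_filtered_search` and the integer `release_year` payload field are assumptions for illustration. The ingestion script later in this post stores `release_date` as a string, so you would need to add a numeric year field yourself.

```python
import json


def build_filtered_search(vector, genre=None, year_from=None, year_to=None, limit=5):
    """Build a Qdrant-style search request body with optional payload filters."""
    must = []
    if genre is not None:
        # Exact match on a keyword payload field.
        must.append({"key": "genre", "match": {"value": genre}})
    if year_from is not None or year_to is not None:
        year_range = {}
        if year_from is not None:
            year_range["gte"] = year_from
        if year_to is not None:
            year_range["lte"] = year_to
        # `release_year` is an assumed integer payload field.
        must.append({"key": "release_year", "range": year_range})

    body = {"vector": list(vector), "limit": limit, "with_payload": True}
    if must:
        # All conditions under `must` have to hold for a point to be returned.
        body["filter"] = {"must": must}
    return body


body = build_filtered_search(
    [0.1, 0.2, 0.3], genre="indie", year_from=2018, year_to=2024
)
print(json.dumps(body, indent=2))
```

The same structure maps onto the Python client’s filter models, so the dictionary form is mostly useful for seeing exactly what goes over the wire.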

3. Retrieval Quality Knobs

Because Qdrant exposes HNSW parameters and even an exact search mode, you can actually measure and tune the trade‑off between speed and recall. That’s huge, and it’s not just academic: if you’re doing music discovery, you want to know when your ANN search drifts too far from exact kNN.
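One simple way to quantify that drift is recall@k: the fraction of the true top‑k neighbours that the approximate search also returned. The sketch below uses a brute‑force scan as the ground truth and a hand‑made stand‑in for the ANN result. With Qdrant you would get the ground truth from a query run in exact mode. All names here are illustrative.

```python
import math
import random


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def exact_top_k(query, vectors, k):
    """Brute-force kNN: score every vector, keep the k best ids."""
    ranked = sorted(vectors, key=lambda vid: cosine(query, vectors[vid]), reverse=True)
    return ranked[:k]


def recall_at_k(approx_ids, exact_ids):
    """Fraction of the true top-k that the approximate search also returned."""
    return len(set(approx_ids) & set(exact_ids)) / len(exact_ids)


random.seed(0)
vectors = {i: [random.gauss(0, 1) for _ in range(8)] for i in range(200)}
query = [random.gauss(0, 1) for _ in range(8)]

truth = exact_top_k(query, vectors, k=10)
# Stand-in for an ANN result: the true top-10 with two hits swapped for misses.
approx = truth[:8] + [vid for vid in vectors if vid not in truth][:2]
print(recall_at_k(approx, truth))  # 0.8
```

Averaging this over a few hundred held‑out queries gives a single number you can track while changing m, ef_construct, or the search‑time ef.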

4. Open Source, Cloud, and Benchmarks

- Open source

- Available as a self‑hosted binary, a Docker image, or a hybrid deployment

- Offered as a managed Qdrant Cloud with distributed deployments

Benchmarks from Qdrant’s own comparisons show competitive or superior latency against other vector DBs at large scale while maintaining strong recall.

For a startup, that translates to:

- No vendor lock‑in.

- On‑prem or VPC‑ready.

- Managed option later when you don’t want to be on call.

5. Ingestion — 10 Songs into Qdrant with Wav2Vec2

We’ll start with a script that:

- Loads (up to) 10 songs from a songs/ directory.

- Generates a 1024‑dim Wav2Vec2 embedding per track.

- Creates a Qdrant collection with cosine distance.

- Upserts all 10 songs into the songs collection.

```python
import os
from typing import List, Tuple

import numpy as np
import librosa
import torch
from transformers import Wav2Vec2FeatureExtractor, Wav2Vec2Model
from qdrant_client import QdrantClient
from qdrant_client.models import Distance, VectorParams, PointStruct
import torchaudio.transforms as T


class AudioEmbeddingGenerator:
    """Turns an audio file into a single fixed-size Wav2Vec2 embedding."""

    def __init__(self, device: str = "cuda" if torch.cuda.is_available() else "cpu"):
        self.device = device
        # The XLSR-53 checkpoint is pretraining-only and ships no tokenizer,
        # so load the feature extractor rather than Wav2Vec2Processor.
        self.processor = Wav2Vec2FeatureExtractor.from_pretrained(
            "facebook/wav2vec2-large-xlsr-53"
        )
        self.model = Wav2Vec2Model.from_pretrained(
            "facebook/wav2vec2-large-xlsr-53"
        ).to(device)
        self.model.eval()
        print(f"✓ Wav2Vec2 model loaded on device: {device}")
        # 1024 for the large variant.
        print(f"  Model dimensions: {self.model.config.hidden_size}")

    def load_audio(self, audio_path: str, sr: int = 16000) -> np.ndarray:
        # librosa returns a mono float waveform plus the file's native rate.
        waveform, original_sr = librosa.load(audio_path, sr=None)
        if original_sr != sr:
            resampler = T.Resample(orig_freq=original_sr, new_freq=sr)
            waveform = resampler(torch.FloatTensor(waveform)).numpy()
        waveform = waveform.astype(np.float32)
        # Peak-normalize so loud and quiet recordings are comparable.
        peak = np.max(np.abs(waveform))
        if peak > 0:
            waveform = waveform / peak
        return waveform

    def generate_embedding(self, audio_path: str) -> np.ndarray:
        waveform = self.load_audio(audio_path, sr=16000)
        inputs = self.processor(
            waveform,
            sampling_rate=16000,
            return_tensors="pt",
        )
        with torch.no_grad():
            outputs = self.model(inputs.input_values.to(self.device))
        # (batch, frames, hidden) -> mean over frames -> (hidden,)
        hidden_states = outputs.last_hidden_state
        embedding = hidden_states.mean(dim=1).squeeze().cpu().numpy()
        return embedding
```

```python
class SongMetadata:
    @staticmethod
    def get_sample_songs() -> List[Tuple[str, dict]]:
        return [
            ("songs/taylor_swift_midnight_rain.mp3", {
                "title": "Midnight Rain",
                "artist": "Taylor Swift",
                "album": "Midnights",
                "release_date": "2022-10-21",
                "genre": "pop",
                "duration": 183,
                "bpm": 120,
                "energy": 0.82,
                "acousticness": 0.12,
                "danceability": 0.75,
            }),
            ("songs/the_weeknd_blinding_lights.mp3", {
                "title": "Blinding Lights",
                "artist": "The Weeknd",
                "album": "After Hours",
                "release_date": "2019-11-29",
                "genre": "synthwave",
                "duration": 200,
                "bpm": 103,
                "energy": 0.73,
                "acousticness": 0.08,
                "danceability": 0.80,
            }),
            # ... 8 more entries, same structure ...
        ]
```

```python
class QdrantAudioDB:
    def __init__(self, host: str = "localhost", port: int = 6333):
        self.client = QdrantClient(host=host, port=port)
        self.collection_name = "songs"

    def create_collection(self, vector_size: int = 1024):
        # Start from a clean slate; ignore the error if the collection is absent.
        try:
            self.client.delete_collection(self.collection_name)
        except Exception:
            pass

        self.client.create_collection(
            collection_name=self.collection_name,
            vectors_config=VectorParams(
                size=vector_size,
                distance=Distance.COSINE,
            ),
            hnsw_config={
                "m": 16,
                "ef_construct": 200,
            },
        )
        print(f"✓ Collection '{self.collection_name}' created")
        print(f"  - Vector size: {vector_size}")
        print("  - Distance: COSINE")
        print("  - Index: HNSW")

    def upsert_songs(self, songs_data: List[Tuple[np.ndarray, dict, int]]):
        points = []
        for embedding, metadata, song_id in songs_data:
            embedding = embedding.astype(np.float32)
            points.append(
                PointStruct(
                    id=song_id,
                    vector=embedding.tolist(),
                    payload=metadata,
                )
            )
        self.client.upsert(
            collection_name=self.collection_name,
            points=points,
        )
        print(f"✓ Upserted {len(points)} songs")

    def search(self, query_embedding: np.ndarray, limit: int = 5):
        query_embedding = query_embedding.astype(np.float32)
        # Newer qdrant-client releases deprecate `search` in favor of
        # `query_points`; adjust if your client version warns about it.
        results = self.client.search(
            collection_name=self.collection_name,
            query_vector=query_embedding.tolist(),
            limit=limit,
            with_payload=True,
            with_vectors=False,
        )
        return [
            {
                "title": r.payload["title"],
                "artist": r.payload["artist"],
                "album": r.payload["album"],
                # Cosine score in [-1, 1], shown as a percentage.
                "similarity_score": round(r.score * 100, 2),
                "genre": r.payload.get("genre", "Unknown"),
                "release_date": r.payload.get("release_date", "Unknown"),
                "metadata": r.payload,
            }
            for r in results
        ]
```

```python
def main():
    print("=" * 60)
    print("AUDIO SEARCH - SONG INGESTION PIPELINE")
    print("=" * 60)

    print("\n[1/4] Initializing Wav2Vec2...")
    embedding_gen = AudioEmbeddingGenerator()

    print("\n[2/4] Creating Qdrant collection...")
    db = QdrantAudioDB()
    # wav2vec2-large-xlsr-53 has a 1024-dim hidden state.
    db.create_collection(vector_size=1024)

    songs_data = SongMetadata.get_sample_songs()
    total = len(songs_data)
    print(f"\n[3/4] Generating embeddings for {total} songs...")
    embeddings_generated = []
    for idx, (audio_path, metadata) in enumerate(songs_data, 1):
        try:
            if not os.path.exists(audio_path):
                # Placeholder so the demo runs without the audio files.
                print(f"  ({idx}/{total}) ⚠ {metadata['title']} - file not found, using random vector")
                embedding = np.random.randn(1024).astype(np.float32)
                embedding = embedding / np.linalg.norm(embedding)
            else:
                print(f"  ({idx}/{total}) Generating embedding: {metadata['title']}")
                embedding = embedding_gen.generate_embedding(audio_path)
            embeddings_generated.append((embedding, metadata, idx))
        except Exception as e:
            print(f"  ({idx}/{total}) ✗ Error {metadata['title']}: {e}")

    print("\n[4/4] Upserting to Qdrant...")
    db.upsert_songs(embeddings_generated)

    collection_info = db.client.get_collection(db.collection_name)
    print("\n✓ Ingestion complete!")
    print(f"  - Total songs indexed: {collection_info.points_count}")
    print("  - Qdrant dashboard: http://localhost:6333/dashboard")

    print("\n" + "=" * 60)
    print("DEMO: Searching for similar songs")
    print("=" * 60)

    if embeddings_generated:
        # Query with the first song; it should come back as its own top hit.
        query_embedding = embeddings_generated[0][0]
        query_title = embeddings_generated[0][1]["title"]
        results = db.search(query_embedding, limit=5)
        print(f"\nQuery: '{query_title}'")
        for idx, result in enumerate(results, 1):
            print(f"\n{idx}. {result['title']} - {result['artist']}")
            print(f"   Album: {result['album']}")
            print(f"   Similarity: {result['similarity_score']}%")
            print(f"   Genre: {result['genre']}")


if __name__ == "__main__":
    main()
```

```bash
docker run -p 6333:6333 qdrant/qdrant:latest

python ingest_songs.py
```

6. A Sample App: 5 Seconds from Mic to Match

Next, the UI: a Gradio app that lets you either:

- Record from mic for ~5 seconds, or

- Upload an audio file

Then it:

- Converts that audio into a Wav2Vec2 embedding (same pipeline as ingestion). You are free to swap in another model, such as a single multimodal embedding model, but I’m using this one so everyone can follow along.

- Queries Qdrant’s songs collection

- Renders a table of top‑K similar tracks with similarity scores.

```python
import gradio as gr
import numpy as np
import torch
import librosa
from transformers import Wav2Vec2FeatureExtractor, Wav2Vec2Model
from qdrant_client import QdrantClient
from typing import List, Tuple


class AudioSearchApp:
    def __init__(self, qdrant_host: str = "localhost", qdrant_port: int = 6333):
        print("Initializing Audio Search Application...")
        self.qdrant_client = QdrantClient(host=qdrant_host, port=qdrant_port)
        self.collection_name = "songs"
        try:
            self.qdrant_client.get_collection(self.collection_name)
            print(f"✓ Connected to Qdrant collection: {self.collection_name}")
        except Exception as e:
            print(f"✗ Error connecting to Qdrant: {e}")
            print("  Make sure Qdrant is running: docker run -p 6333:6333 qdrant/qdrant")
            raise

        self.device = "cuda" if torch.cuda.is_available() else "cpu"
        print(f"✓ Using device: {self.device}")
        # The XLSR-53 checkpoint ships no tokenizer, so load the feature
        # extractor rather than Wav2Vec2Processor.
        self.processor = Wav2Vec2FeatureExtractor.from_pretrained(
            "facebook/wav2vec2-large-xlsr-53"
        )
        self.model = Wav2Vec2Model.from_pretrained(
            "facebook/wav2vec2-large-xlsr-53"
        ).to(self.device)
        self.model.eval()
        print("✓ Wav2Vec2 model loaded")

    def process_audio(self, audio_input: Tuple) -> np.ndarray:
        # Gradio's type="numpy" audio arrives as (sample_rate, samples).
        sample_rate, audio_data = audio_input
        # Downmix stereo (samples, channels) to mono.
        if len(audio_data.shape) > 1:
            audio_data = np.mean(audio_data, axis=1)
        if sample_rate != 16000:
            audio_data = librosa.resample(
                audio_data.astype(np.float32),
                orig_sr=sample_rate,
                target_sr=16000,
            )
        audio_data = audio_data.astype(np.float32)
        # Peak-normalize, matching the ingestion pipeline.
        peak = np.max(np.abs(audio_data))
        if peak > 0:
            audio_data = audio_data / peak

        inputs = self.processor(
            audio_data,
            sampling_rate=16000,
            return_tensors="pt",
        )
        with torch.no_grad():
            outputs = self.model(inputs.input_values.to(self.device))
        # Mean-pool the frame-level hidden states into one clip vector.
        hidden_states = outputs.last_hidden_state
        embedding = hidden_states.mean(dim=1).squeeze().cpu().numpy()
        embedding = embedding.astype(np.float32)
        return embedding

    def search_songs(self, audio_input: Tuple, num_results: int = 5) -> List[list]:
        try:
            if audio_input is None:
                # Seven cells per row, matching the results table headers.
                return [["Error", "No audio input provided", "", "", "", "", ""]]

            print("Processing audio...")
            embedding = self.process_audio(audio_input)

            print("Searching Qdrant...")
            # Newer qdrant-client releases deprecate `search` in favor of
            # `query_points`; adjust if your client version warns about it.
            results = self.qdrant_client.search(
                collection_name=self.collection_name,
                query_vector=embedding.tolist(),
                limit=int(num_results),
                with_payload=True,
            )

            output = []
            for i, result in enumerate(results, 1):
                payload = result.payload
                output.append([
                    i,
                    payload.get("title", "Unknown"),
                    payload.get("artist", "Unknown"),
                    payload.get("album", "Unknown"),
                    f"{round(result.score * 100, 2)}%",
                    payload.get("genre", "Unknown"),
                    payload.get("release_date", "Unknown"),
                ])
            return output
        except Exception as e:
            print(f"Error during search: {e}")
            return [["Error", str(e), "", "", "", "", ""]]
```

```python
def create_gradio_interface():
    app = AudioSearchApp()

    with gr.Blocks(
        title="Reverse Audio Search - Qdrant",
        theme=gr.themes.Soft(),
    ) as demo:
        gr.HTML(
            """
            <div style="text-align: center; margin-bottom: 2em;">
                <h1>🎵 Reverse Audio Search</h1>
                <h3>Powered by Qdrant Vector Database</h3>
            </div>
            """
        )
        gr.Markdown(
            """
            ## How it works
            1. Record or upload a 5+ second clip.
            2. The model converts it into a 1024‑dim embedding.
            3. Qdrant searches for the nearest vectors.
            4. You get back the closest songs with similarity scores.
            """
        )

        # A Markdown heading labels each section (gr.Group has no `label`
        # argument in Gradio 4+).
        with gr.Group():
            gr.Markdown("### 📥 Input Audio")
            gr.Markdown("Choose one method:")
            with gr.Tabs():
                with gr.TabItem("🎤 Record from Microphone"):
                    gr.Markdown("**Record at least 5 seconds of audio.**")
                    audio_mic = gr.Audio(
                        label="Microphone Input",
                        sources=["microphone"],
                        type="numpy",
                    )
                with gr.TabItem("📤 Upload File"):
                    gr.Markdown("Upload MP3, WAV, or other audio formats.")
                    audio_file = gr.Audio(
                        label="Upload Audio File",
                        sources=["upload"],
                        type="numpy",
                    )

        with gr.Group():
            gr.Markdown("### ⚙ Settings")
            num_results = gr.Slider(
                minimum=1,
                maximum=10,
                value=5,
                step=1,
                label="Number of Results",
            )

        search_btn = gr.Button(
            "🔍 Search for Similar Songs",
            variant="primary",
            size="lg",
        )

        with gr.Group():
            gr.Markdown("### 📊 Results")
            results_table = gr.Dataframe(
                headers=[
                    "#",
                    "Song Title",
                    "Artist",
                    "Album",
                    "Similarity",
                    "Genre",
                    "Release Date",
                ],
                label="Similar Songs",
                interactive=False,
            )
            status_text = gr.Textbox(
                label="Status",
                value="Ready. Record or upload audio and click 'Search'.",
                interactive=False,
            )

        def run_search(mic_audio, file_audio, num_res):
            # One handler for both inputs: two click handlers writing to the
            # same outputs would race and overwrite each other's results.
            audio = mic_audio if mic_audio is not None else file_audio
            if audio is None:
                return [], "Error: No audio recorded or uploaded."
            results = app.search_songs(audio, int(num_res))
            return results, f"✓ Search complete! Found {len(results)} songs."

        search_btn.click(
            fn=run_search,
            inputs=[audio_mic, audio_file, num_results],
            outputs=[results_table, status_text],
        )

        gr.Markdown(
            """
            ---
            ### Tips
            - Try humming or singing a recognizable part.
            - Or just record 5 seconds of a song playing on your speakers.
            - First request may be slower (warm‑up), then it’s fast.
            """
        )

    return demo
```

```python
if __name__ == "__main__":
    print("\n" + "=" * 60)
    print("LAUNCHING AUDIO SEARCH APPLICATION")
    print("=" * 60)

    interface = create_gradio_interface()
    interface.launch(
        server_name="0.0.0.0",
        server_port=7860,
        share=False,
        show_error=True,
    )
```

You now have a local, Shazam‑style reverse audio search tool built on Qdrant.

Scaling This Beyond a Toy: Why Qdrant Is the Vector DB Startups Should Bet On

Everything above runs on your laptop. But the reasons to care about Qdrant go way beyond hobby projects.

1. From 10 Songs to 100M

With 1024‑dim float32 vectors:

- 1M songs ≈ 1024 * 4 bytes * 1M ≈ ~4 GB raw vectors

- 100M songs ≈ ~400 GB raw
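The arithmetic is easy to parameterize if you want to plug in your own model or catalog size. This sketch counts raw vector storage only (no HNSW graph, payload, or replication overhead) and shows int8 values as an example of what one byte per dimension would cost. The function names are illustrative.

```python
def raw_vector_bytes(num_vectors, dim, bytes_per_value=4):
    """Raw storage for dense vectors, ignoring index and payload overhead."""
    return num_vectors * dim * bytes_per_value


def to_gb(num_bytes):
    """Convert bytes to decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_bytes / 1e9


for dim in (768, 1024):
    for n in (1_000_000, 100_000_000):
        f32 = to_gb(raw_vector_bytes(n, dim))
        int8 = to_gb(raw_vector_bytes(n, dim, bytes_per_value=1))
        print(f"dim={dim} n={n:>11,} float32={f32:7.1f} GB int8={int8:7.1f} GB")
```

Going from four bytes to one byte per dimension cuts the raw footprint by 4x, which is why quantization matters once the catalog no longer fits comfortably in RAM.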

Qdrant gives you:

- HNSW indexing with tunable m and hnsw_ef (the search‑time ef) for predictable latency at large n.

- Optional quantization to shrink vector footprint while keeping recall high.

- Distributed deployments and sharding in Qdrant Cloud, so you can grow to tens or hundreds of millions of vectors without rewriting your app.

2. Hybrid Search and Metadata‑Aware Retrieval

Because Qdrant treats payloads as first‑class, you can do things like:

- “Similar to this song, but only from this user’s liked catalog.”

- “Similar vibe, but restrict to Japanese city‑pop from after 2015.”

All within one vector search call that respects filters during HNSW traversal, instead of hacks with post‑filtering or separate stores.

In practice, that’s the difference between:

- A “cute demo” recommender, and

- A multi‑tenant, preference‑aware, policy‑aware system ready for production.

3. Retrieval Quality as a First‑Class Concern

Qdrant exposes:

- Exact search mode to benchmark ANN vs kNN precision.

- HNSW tuning knobs that let you trade off latency vs recall.

If you’re building music discovery, voice search, or any retrieval product where quality is your moat, having a DB that respects retrieval quality as a metric — not just throughput — is a huge deal.

4. The Startup Lens

As a senior ML engineer at a startup, the decision matrix is brutally simple:

- Do you want to own your embeddings & search stack or rent a black box?

- Do you need to go from laptop → on‑prem → managed cloud without rewriting everything?

- Do you care about latency, recall, and cost at the same time?

Qdrant hits a very sweet spot:

- Open‑source core you can run anywhere.

- Rust performance with real HNSW tuning, not just wrappers.

- Managed Qdrant Cloud when you don’t want to be the one paged at 3 AM.

- An ecosystem that’s already thinking in terms of hybrid search, dense + sparse, filters, and retrieval quality instead of just “throw vectors in a box”.

For anything in the space of:

- Music search / recommendation

- Voice search

- Multimodal retrieval (audio + text)

- Agentic systems that need fast semantic lookup

Qdrant is not just “A” vector DB. It’s the default you should be reaching for.

That’s a Wrap Folks!

With a few hundred lines of Python, a Wav2Vec2 model, and Qdrant, you now have:

- A reverse audio search pipeline that feels like a baby Shazam.

- A local Gradio app that records 5 seconds and returns near‑instant matches.

- A vector database setup that can realistically scale from 10 songs to tens of millions without changing the API surface.

The interesting part is not just that this works; it’s how clean the architecture is when you anchor it on a vector DB designed for this era.

If you’re at a startup and building anything that smells like search, recommendation, or retrieval, especially multimodal, there’s a strong argument that adopting Qdrant early gives you an infra moat. You get low latency, good recall, hybrid search, and flexible deployment options without locking yourself into a proprietary, opaque service.

The reverse audio search use‑case is just one very fun, very visual example of what you can build once “search by meaning” becomes cheap, fast, and open.

Baby Shazam: Reverse Audio Search Engine with Qdrant + Discover Similar was originally published in Towards AI on Medium.