Authentication Customer Segmentation — BEACON: K-Means Clustering

What is BEACON?

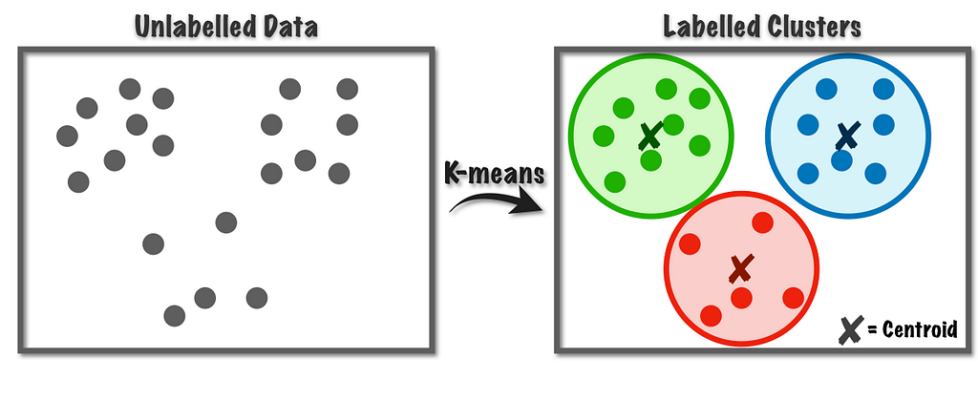

BEACON (Behavioral Evaluation for Authentication Cohorts & Outcomes Network) is a data-driven framework that segments customers based on their authentication behavior using machine learning (k-means clustering). It groups users along interpretable dimensions of engagement and risk to better understand login behavior, fraud patterns, and retention outcomes.

How do customers engage with the product/system?

Customers engage with BEACON indirectly through their authentication behaviors — such as login frequency, success rates, friction encountered (e.g., challenge verification), and security-related interactions. Their behavioral patterns determine which cohort they are assigned to, which then informs how Amazon manages security friction, fraud prevention, and user experience.

What are pitfalls for segmentation in BEACON?

- One segmentation trying to serve all teams — different orgs (security, product, CX, fraud) may need different lenses on user behavior.

- Feature selection driven only by business intuition: intuition is a good starting point, but the data should confirm which features truly matter.

- Lack of clearly tied business metrics — segments must be directly linked to outcomes like successful logins, fraud reduction, or retention to be useful.

What was the business problem you were solving in the project?

The core problem was to create a global, consistent way to understand authentication behavior across customers, rather than having each team define “risky,” “friction-prone,” or “high-engagement” users differently.

Specifically, the project aimed to:

- Identify distinct authentication behavior cohorts using clustering.

- Tie these cohorts to measurable outcomes such as successful logins, fraud prevention, and retention.

- Enable teams to act on these segments via a shared dashboard, informing challenge strategies, friction reduction, and targeted security interventions.

What was the goal for segmentation?

- Value that the customer gets from the product (better UX, low friction)

- Value that the customer brings to the product (revenue, retention)

The goal of BEACON segmentation was to move as many customers as possible into the “Low Friction, Frequent Users, Low Risk” segment by using behavioral signals from authentication data to balance security, experience, and outcomes.

Why go with an ML-based clustering approach over 2D heuristic segmentation?

We used an ML-based clustering approach so that cluster boundaries are learned from the data rather than drawn by hand. With two features, we can plot the segments in a 2D plane, put one dimension on each axis, and separate groups with simple thresholds. As the number of features increases, the boundaries between groups can no longer be expressed as simple per-axis cut-offs, so we use ML-based clustering instead of heuristic segmentation.

What are the modeling tenets?

- Actionability: the insights generated must ultimately be actionable.

- Precision and stability: results and algorithms are stable and reproducible.

- Interpretability: cluster on fewer dimensions so that segments stay easy to explain.

How did you do feature selection for your problem statement?

We followed a business-first, data-validated approach to feature selection for BEACON, starting from the core question of how to represent authentication behavior in a simple, interpretable way. We examined a broad set of raw login signals and distilled them into three non-redundant, outcome-relevant dimensions that stakeholders could easily reason about:

- Average Signin Attempts: an engagement dimension capturing how frequently and consistently users authenticate.

- Signin Success Rate: a friction dimension reflecting how smoothly users are able to log in.

- Number of Risky Logins: a risk dimension capturing security signals such as step-ups or anomalous behavior.

These three dimensions were chosen because they were distinct from each other, directly tied to key outcomes like successful logins, fraud prevention, and retention, and actionable for product and security teams. They became the input features for our k-means clustering in BEACON.
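To make the three dimensions concrete, here is a minimal sketch of how they could be derived from raw sign-in events. The event schema (`user_id`, `day`, `success`, `risky`) and the choice to average attempts per active day are assumptions for illustration only; the article does not describe the actual BEACON data model or the exact averaging rule.

```python
from collections import defaultdict


def build_features(events):
    """Aggregate raw sign-in events into three per-user features.

    `events` is an iterable of dicts with hypothetical keys:
    user_id, day, success (bool), risky (bool).
    """
    per_user = defaultdict(
        lambda: {"attempts": 0, "successes": 0, "risky": 0, "days": set()}
    )
    for e in events:
        u = per_user[e["user_id"]]
        u["attempts"] += 1
        u["successes"] += int(e["success"])
        u["risky"] += int(e["risky"])
        u["days"].add(e["day"])

    features = {}
    for user_id, u in per_user.items():
        features[user_id] = {
            # Engagement: attempts per active day (assumed definition).
            "avg_signin_attempts": u["attempts"] / len(u["days"]),
            # Friction: share of attempts that succeeded.
            "signin_success_rate": u["successes"] / u["attempts"],
            # Risk: count of attempts flagged as risky.
            "risky_logins": u["risky"],
        }
    return features


# Tiny made-up sample, not real data.
events = [
    {"user_id": "a", "day": 1, "success": True, "risky": False},
    {"user_id": "a", "day": 1, "success": False, "risky": False},
    {"user_id": "a", "day": 2, "success": True, "risky": True},
    {"user_id": "b", "day": 1, "success": True, "risky": False},
]
features = build_features(events)
```

Each user ends up as one row of three numbers, which is the shape k-means expects as input.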

How did you decide upon the observation period for the cluster?

- We picked a time period that was long enough to see real behavior, not just one-off activity — for example, 30 days instead of 3 days so we capture regular login patterns.

- We made sure the window was recent enough to reflect current behavior, not outdated habits — e.g., last month rather than last year.

- We aligned it with business rhythms like monthly reviews (MBRs) or experiments, so teams could actually use the segments in decision-making.

- We tested different time windows (like 7, 30, 60 days) to see if the clusters stayed similar or changed a lot.

- We ensured most users had enough activity in that period — for example, at least a few login attempts in 30 days so their segment is reliable.

- Finally, we checked that behavior in this window actually related to outcomes like successful logins, fraud, and retention — if not, we adjusted the period.
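One of the checks above, whether most users have enough activity in a candidate window, can be sketched as follows. The event schema, the integer `day` field, and the threshold of three attempts are hypothetical choices for illustration, not values taken from the project.

```python
from collections import Counter


def coverage_by_window(events, as_of_day, windows=(7, 30, 60), min_attempts=3):
    """Share of users with at least `min_attempts` sign-ins in each trailing window.

    `events` is an iterable of dicts with hypothetical keys user_id and day,
    where day is an integer day index.
    """
    all_users = {e["user_id"] for e in events}
    coverage = {}
    for w in windows:
        # Count attempts per user that fall inside the trailing w-day window.
        counts = Counter(
            e["user_id"] for e in events if as_of_day - w < e["day"] <= as_of_day
        )
        eligible = sum(1 for u in all_users if counts[u] >= min_attempts)
        coverage[w] = eligible / len(all_users)
    return coverage


# Made-up activity: "a" signs in daily, "b" every 10 days, "c" only twice.
events = (
    [{"user_id": "a", "day": d} for d in range(1, 61)]
    + [{"user_id": "b", "day": d} for d in range(10, 61, 10)]
    + [{"user_id": "c", "day": d} for d in (5, 58)]
)
coverage = coverage_by_window(events, as_of_day=60)
```

In this toy sample the 7-day window only covers the daily user, while the 30-day window also covers the user who signs in every 10 days, which is the kind of trade-off the window comparison is meant to expose.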

How did you select the value of K for K-means clustering?

We determined the number of clusters by evaluating both inertia and the Bayesian Information Criterion (BIC) across different values of k. Lower is better for both metrics, but they measure different things. Inertia is the total within-cluster sum of squared distances (WCSS): it captures how tightly grouped the data points are within each cluster, so lower inertia implies more compact and cohesive clusters. However, inertia keeps decreasing as we add clusters, so it cannot determine the optimal k on its own.

To balance model fit with complexity, we also used BIC, which penalizes additional clusters to avoid overfitting and helps ensure that improvements in fit are meaningful rather than a side effect of adding more clusters. By analyzing the trend of both inertia and BIC and identifying the point where improvements began to level off, we determined that 10 clusters provided the best balance between cluster compactness, interpretability, and model simplicity.
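A scan over k along these lines might look like the sketch below. It uses scikit-learn and synthetic data, both of which are assumptions. scikit-learn's `KMeans` exposes `inertia_` but no BIC, so this sketch takes BIC from a spherical `GaussianMixture` fitted with the same number of components as a stand-in; how BIC was actually computed for BEACON is not stated in the article.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.mixture import GaussianMixture

# Synthetic 3-feature data with four well-separated groups (illustration only).
rng = np.random.default_rng(0)
centers = np.array([[0, 0, 0], [6, 6, 0], [0, 6, 6], [6, 0, 6]], dtype=float)
X = np.vstack([c + rng.normal(scale=0.5, size=(200, 3)) for c in centers])


def scan_k(X, k_values, seed=0):
    """Return inertia (from k-means) and BIC (from a spherical GMM) for each k."""
    rows = []
    for k in k_values:
        km = KMeans(n_clusters=k, n_init=10, random_state=seed).fit(X)
        gmm = GaussianMixture(
            n_components=k,
            covariance_type="spherical",
            n_init=3,
            random_state=seed,
        ).fit(X)
        rows.append({"k": k, "inertia": km.inertia_, "bic": gmm.bic(X)})
    return rows


results = scan_k(X, range(2, 9))
for row in results:
    print(f"k={row['k']}  inertia={row['inertia']:.1f}  bic={row['bic']:.1f}")
```

Reading the two columns side by side is the point: inertia keeps falling as k grows, while BIC stops improving once extra clusters no longer buy a meaningful gain in fit.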

How did you ensure cluster stability, that is, that you got the same clusters back?

- Ran k-means multiple times with different random seeds to check that clusters did not depend on initialization.

- Re-trained the model on different samples of data (e.g., 80% subsets) and compared results.

- Checked whether core cluster patterns stayed the same (e.g., high-engagement/low-risk users kept appearing).

- Tested stability across different time windows (e.g., 30 days vs 60 days).

- Locked the segmentation only when clusters were consistent in shape, size, and business interpretation across runs. For example, the “high-engagement, low-risk” cluster consistently appeared with similar size (e.g., ~30% of users) and similar characteristics, regardless of changes in seed or sample data.
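The seed and subsample checks above can be sketched with scikit-learn as follows. The use of the adjusted Rand index (ARI) as the agreement score, the synthetic data, and the function names are assumptions for illustration; the article does not say which agreement measure was used.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.metrics import adjusted_rand_score

# Synthetic 3-feature data with three well-separated groups (illustration only).
data_rng = np.random.default_rng(42)
centers = np.array([[0, 0, 0], [6, 6, 0], [0, 6, 6]], dtype=float)
X = np.vstack([c + data_rng.normal(scale=0.5, size=(150, 3)) for c in centers])


def seed_stability(X, k, seeds):
    """ARI between the first seed's labeling and each other seed's labeling."""
    labelings = [
        KMeans(n_clusters=k, n_init=10, random_state=s).fit_predict(X) for s in seeds
    ]
    return [adjusted_rand_score(labelings[0], other) for other in labelings[1:]]


def subsample_stability(X, k, n_rounds=5, frac=0.8, seed=0):
    """Refit on random subsets and compare full-data labels to a reference fit."""
    rng = np.random.default_rng(seed)
    ref_labels = KMeans(n_clusters=k, n_init=10, random_state=seed).fit(X).labels_
    scores = []
    for i in range(n_rounds):
        idx = rng.choice(len(X), size=int(frac * len(X)), replace=False)
        model = KMeans(n_clusters=k, n_init=10, random_state=seed + i + 1).fit(X[idx])
        # Label every point with the subset-trained model, then compare.
        scores.append(adjusted_rand_score(ref_labels, model.predict(X)))
    return scores


seed_scores = seed_stability(X, k=3, seeds=[0, 1, 2, 3])
subsample_scores = subsample_stability(X, k=3)
```

ARI ignores how cluster IDs happen to be numbered, so two runs that find the same groups under different labels still score close to 1. Scores that drop well below 1 across seeds or subsets would be a signal not to lock the segmentation yet.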

How generalizable was your model, that is, how well does it work on new or unseen data?

- Tested the model on out-of-time data — e.g., trained clusters on January data and applied them to February users to see if patterns still held.

- Checked that the same types of segments reappeared in new data. For example, “high-engagement low-risk” users were still visible in later months, not just in the training data.

- Validated that clusters still aligned with real outcomes on unseen users — e.g., users labeled “low-friction” continued to show higher successful login rates in future periods.

- Tracked state transitions for customers over time by building a transition matrix showing probabilities such as:

- % of users who stayed in the same state

- % who improved (moved to lower risk / higher engagement)

- % who degraded (became more risky or less engaged)
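A transition matrix and the stayed / improved / degraded shares can be computed with a few lines of plain Python. The state names, the ranking of states from worse to better, and the sample users below are hypothetical and only illustrate the calculation.

```python
from collections import Counter


def transition_matrix(prev_state, curr_state, states):
    """Row-normalized probabilities of moving from each state to each state."""
    counts = Counter(
        (prev_state[u], curr_state[u]) for u in prev_state if u in curr_state
    )
    matrix = {}
    for s in states:
        row_total = sum(counts[(s, t)] for t in states)
        matrix[s] = {
            t: (counts[(s, t)] / row_total if row_total else 0.0) for t in states
        }
    return matrix


def movement_summary(prev_state, curr_state, rank):
    """Share of users who stayed, improved, or degraded.

    `rank` maps each state to an integer, where higher means better
    (lower risk or higher engagement). This ordering is an assumption.
    """
    shared = [u for u in prev_state if u in curr_state]
    n = len(shared)
    if n == 0:
        return {"stayed": 0.0, "improved": 0.0, "degraded": 0.0}
    stayed = sum(1 for u in shared if prev_state[u] == curr_state[u])
    improved = sum(1 for u in shared if rank[curr_state[u]] > rank[prev_state[u]])
    degraded = n - stayed - improved
    return {"stayed": stayed / n, "improved": improved / n, "degraded": degraded / n}


# Made-up cohort labels for two consecutive months.
states = ["high_risk", "medium", "low_risk"]
rank = {"high_risk": 0, "medium": 1, "low_risk": 2}
january = {"u1": "low_risk", "u2": "low_risk", "u3": "medium", "u4": "high_risk", "u5": "medium"}
february = {"u1": "low_risk", "u2": "medium", "u3": "low_risk", "u4": "high_risk", "u5": "medium"}

matrix = transition_matrix(january, february, states)
summary = movement_summary(january, february, rank)
```

Each row of `matrix` sums to 1 for any state that had users in the earlier period, so a row reads directly as "of the users who started here, where did they end up".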

What were the final insights you were able to gather with this segmentation?

- Not all friction is bad, but poorly targeted friction is. We found that many low-risk users were experiencing unnecessary challenges, while some high-risk users were under-challenged.

- Risk and engagement are not perfectly correlated. Some highly engaged users still showed risky behavior, meaning engagement alone is a poor proxy for trust.

- A small set of users drove a disproportionate share of risk. A distinct “high-risk, high-friction” cluster accounted for a large portion of fraud attempts and authentication failures.

- Experience improvements could be safely focused on specific cohorts. Low-risk, high-engagement users could receive lower friction without increasing fraud.

- Behavior is dynamic, not static. Users moved across states over time, which meant segmentation needed periodic refresh and continuous monitoring.

How did stakeholders use this segmentation?

- We collaborated with UX research and market research teams to collect psychographic information that helped make these segments real.

- We held meet-and-greet sessions with the relevant teams, such as product, content, and UX.

- We created a self-serve tool that profiled customers and displayed their authentication activity in QuickSight.

How often will you revisit these clusters?

This is something we would think about while creating the clusters. As long as the business use case stays relevant, the model stays relevant; as soon as the business changes, we would need to incorporate that change into the model.

When working on feature selection using XGBoost, how much did you focus on the accuracy of the model, or did you just look at feature importance?

We focused more on feature importance. We had picked these features from our feature store, where they serve as baseline features in some of our existing models, so we looked at feature importance rather than model accuracy.

Authentication Customer Segmentation — BEACON: K-Means Clustering was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.