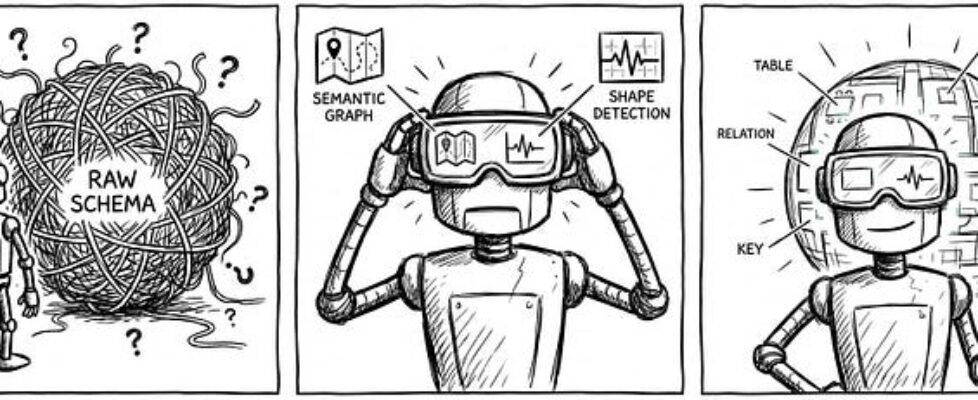

Engineering the Semantic Layer: Why LLMs Need “Data Shape,” Not Just “Data Schema

Author(s): Shreyash Shukla Originally published on Towards AI. Image Source: Google Gemini The “Context Window” Economy In the world of Large Language Models (LLMs), attention is a finite currency. While context windows are expanding, the “Lost in the Middle” phenomenon remains a persistent architectural challenge. Research from Stanford University demonstrates that as the amount of retrieved context grows, the model’s ability to accurately extract specific constraints significantly degrades [Lost in the Middle: How Language Models Use Long Contexts]. This creates a critical problem for enterprise analytics. The standard industry approach — Retrieval-Augmented Generation (RAG) — often involves stuffing the prompt with hundreds of CREATE TABLE statements (DDL) in hopes that the LLM figures it out. This leads to “Context Rot.” The model becomes overwhelmed by irrelevant columns and foreign keys, losing focus on the user’s actual question. Furthermore, relying on raw schema introduces the “Raw Schema Fallacy.” A DDL statement describes structure, not content. It tells the agent that a column named status exists, but not what values live inside it. Does it contain “Active/Inactive”? “Open/Closed”? “1/0”? Without this knowledge, the agent is forced to hallucinate filters, producing SQL that is syntactically perfect but semantically dead. DataCamp notes that this lack of semantic context is a primary driver of the 20-40% failure rate in text-to-SQL applications [State of Data & AI Literacy 2024]. To solve this, we must abandon “Passive RAG” in favor of a “Just-in-Time” architecture that provides rich, targeted context only for the specific tables involved. Image Source: Google Gemini Pillar 1 — The Enterprise Semantic Graph The first pillar of accuracy is the Enterprise Semantic Graph. We cannot rely on static documentation or manual wiki pages, which go stale the moment they are written. Instead, we must treat the SQL ETL scripts themselves as the source of truth. By parsing the scripts that create the tables, we generate a structured, JSON-based map of the data universe. This moves us away from a flat list of tables to a Semantic Layer — a concept that Databricks argues is essential for AI, as it “translates raw data into business concepts” [The Importance of a Semantic Layer for AI]. This structure allows the agent to navigate Data Lineage — understanding not just that a table exists, but identifying its upstream dependencies and downstream consumers. Crucially, it provides the agent with Verified Logic. Instead of guessing how to calculate “Churn,” the agent reads the simplified_sql_logic directly from the metadata, ensuring the math is identical to the official reporting. The Artifact: The Knowledge Object Instead of just indexing schema, we index the logic. { “table_name”: “revenue_daily_snapshot”, “lineage”: { “upstream_tables”: [ { “table_name”: “raw_bookings”}, { “table_name”: “currency_conversion_rates” } ] }, “metrics”: [ { “name”: “Net_Revenue_Retention”, “definition”: “Revenue from existing customers excluding new sales.”, “simplified_sql_logic”: “SUM(renewal_revenue) + SUM(upsell_revenue) – SUM(churn_revenue)”, “key_filters_and_conditions”: [“is_active_contract = TRUE”, “region != ‘TEST'”] } ]} Image Source: Google Gemini Pillar 2 — Statistical Shape Detection The second pillar of accuracy is Statistical Shape Detection. While the Semantic Graph provides the map of the data, Shape Detection provides the terrain. To write accurate SQL, an agent needs to know the statistical signature of the data columns before it attempts to query them. Without this, LLMs fall into the “Cardinality Trap.” For example, if a user asks to “Group customers by type,” and the customer_type column actually contains unique IDs (high cardinality) instead of categories (low cardinality), the resulting GROUP BY query could crash the database cluster. This is why Gartner predicts that organizations adopting “active metadata” analysis will decrease the time to delivery of new data assets by as much as 70% [Harnessing Active Metadata for Data Management]. By proactively scanning for data shape, the agent removes the friction of trial-and-error querying. To prevent this, our architecture pre-computes a “Shape Definition” for every critical column. The agent references these metrics “Just-in-Time” to validate its logic: The Artifact: The Shape Definition Before writing a single line of SQL, the agent consults these pre-computed signals: DISTINCT VALUE COUNT: The agent checks this to decide if a column is safe for a GROUP BY clause (Low Cardinality) or should be treated as an identifier (High Cardinality). FREQUENT VALUES OCCURRENCES: This prevents “Value Hallucination.” If the user asks for “United States” data, the agent checks this list to see if the actual value in the database is ‘USA’, ‘US’, or ‘United States’. QUANTILES & MIN/MAX VALUE: These allow the agent to detect outliers. If a revenue figure is outside the 99th percentile, the agent can flag it as an anomaly rather than reporting it as a trend. ROW COUNT: These serve as “Health Checks.” If the ROW_COUNT has dropped by 50% since yesterday, the agent knows the data pipeline is broken. This approach aligns with IDC’s findings that “mature data intelligence organizations” — those that actively manage metadata context — achieve 3x better business outcomes compared to their peers [IDC Snapshot: Data Intelligence Maturity Drives Three Times Better Business Outcomes]. Image Source: Google Gemini The Result — Deterministic SQL Generation When we combine these two pillars — the Enterprise Semantic Graph and Statistical Shape Detection — we achieve a fundamental shift in how the agent operates. We move from Probabilistic Text Generation to Deterministic SQL Assembly. In a standard LLM workflow, the model guesses the query based on patterns it learned during training. In our architecture, the agent acts more like a compiler. It does not guess; it assembles the query based on verified constraints: Selection (The Map): The Semantic Graph explicitly identifies my_company_data.revenue as the correct table for “Sales,” rejecting similar-sounding but irrelevant tables. Filtering (The Terrain): The Shape Detector confirms that the region column contains ‘NA’, not ‘North America’, ensuring the WHERE clause actually returns data. Logic (The Rule): The Knowledge Object provides the exact formula for “Net Revenue,” preventing the agent from inventing its own math. This “Constraint-Based” approach mirrors the evolution of Self-Driving Cars, which rely on high-definition maps (Semantic Layer) and real-time sensor data (Shape Detection) to navigate safely. […]