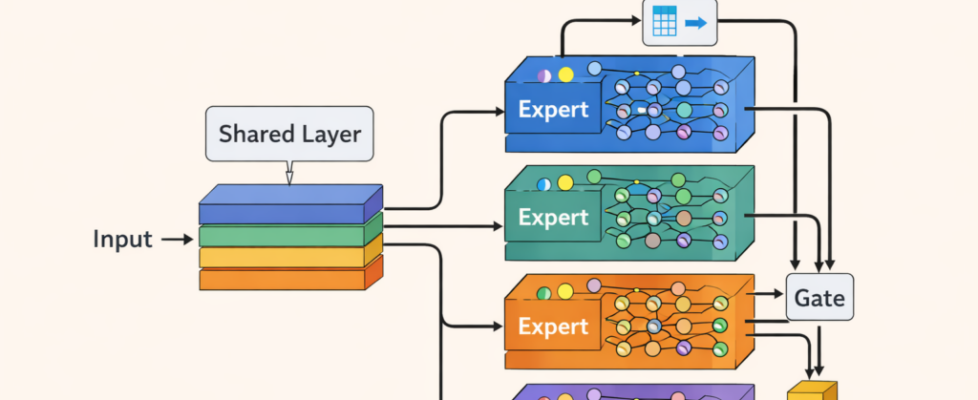

The 4 Mixture of Experts Architectures: How to Train 100B Models at 10B Cost

Understanding Sparse MoE, Dense-Sparse Hybrid, Expert Choice, and Soft MoE
