How Amazon uses Amazon Nova models to automate operational readiness testing for new fulfillment centers

Amazon is a global ecommerce and technology company that operates a vast network of fulfillment centers to store, process, and ship products to customers worldwide. The Amazon Global Engineering Services (GES) team is responsible for facilitating operational readiness across the company’s rapidly expanding network of fulfillment centers. When launching new fulfillment centers, Amazon must verify that each facility is properly equipped and ready for operations. This process is called operational readiness testing (ORT) and typically requires 2,000 hours of manual effort per facility to verify over 200,000 components across 10,500 workstations. Using Amazon Nova models, we’ve developed an automated solution that significantly reduces verification time while improving accuracy.

In this post, we discuss how Amazon Nova in Amazon Bedrock can be used to implement an AI-powered image recognition solution that automates the detection and validation of module components, significantly reducing manual verification efforts and improving accuracy.

Understanding the ORT Process

ORT is a comprehensive verification process that makes sure all components are properly installed before a fulfillment center is ready for launch. The bill of materials (BOM) serves as the master checklist, detailing every component that should be present in each module of the facility. Each component or item in the fulfillment center is assigned a unique identification number (UIN) that serves as its distinct identifier. These identifiers are essential for accurate tracking, verification, and inventory management throughout the ORT process and beyond. In this post, we use the terms UIN and component interchangeably.

The ORT workflow has five steps:

- Testing plan: Testers receive a testing plan, which includes a BOM that details the exact components and quantities required

- Walk through: Testers walk through the fulfillment center and stop at each module to review the setup against the BOM. A module is a physical workstation or operational area

- Verify: They verify proper installation and configuration of each UIN

- Test: They perform functional testing (for example, power and connectivity) on each component

- Document: They document results for each UIN and move to the next module
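To make the checklist concrete, the following minimal Python sketch models a module's BOM as a list of entries and records one documented result per UIN, the way a tester does before moving to the next module. The `BomEntry` and `ModuleResult` names, their fields, and the verified/missing statuses are hypothetical illustrations, not the schema the team uses, and functional testing is not modeled.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class BomEntry:
    """One BOM line for a module (hypothetical schema)."""
    uin: str
    description: str
    required_qty: int


@dataclass
class ModuleResult:
    """Documented outcome for every UIN in one module."""
    module_id: str
    status: Dict[str, str] = field(default_factory=dict)  # UIN -> "verified" or "missing"


def verify_module(module_id: str, bom: List[BomEntry], observed: Dict[str, int]) -> ModuleResult:
    """Compare the observed count of each UIN against the quantity the BOM requires."""
    result = ModuleResult(module_id)
    for entry in bom:
        found = observed.get(entry.uin, 0)
        result.status[entry.uin] = "verified" if found >= entry.required_qty else "missing"
    return result


# Example: the module is one scanner short of the required two.
bom = [BomEntry("UIN-001", "Label printer", 1), BomEntry("UIN-002", "Handheld scanner", 2)]
print(verify_module("M-01", bom, {"UIN-001": 1, "UIN-002": 1}).status)
# {'UIN-001': 'verified', 'UIN-002': 'missing'}
```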

Finding the Right Approach

We evaluated multiple approaches to address the ORT automation challenge, with a focus on using image recognition capabilities from foundation models (FMs). Key factors in the decision-making process included:

Image Detection Capability: We selected Amazon Nova Pro for image detection after testing multiple AI models, including Anthropic Claude Sonnet, Amazon Nova Pro, Amazon Nova Lite, and the Meta AI Segment Anything Model (SAM). Nova Pro was the model that met our criteria for production implementation.

Amazon Nova Pro Features:

Object Detection Capabilities

- Purpose-built for object detection

- Provides precise bounding box coordinates

- Consistent detection results with bounding boxes

Image Processing

- Built-in image resizing to a fixed aspect ratio

- No manual resizing needed

Performance

- Higher requests per minute (RPM) quota on Amazon Bedrock

- Higher tokens per minute (TPM) throughput

- Cost-effective for large-scale detection
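Even with a higher RPM quota, large-scale detection can still run into Amazon Bedrock throttling during bursts. The following Python sketch retries a call with exponential backoff and full jitter when it sees the `ThrottlingException` error code, which botocore exposes on a `ClientError` as `response["Error"]["Code"]`. The helper names and default delays are hypothetical choices, and botocore's built-in retry modes (for example, `botocore.config.Config(retries={"mode": "adaptive"})`) may be a simpler option in practice.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def is_throttling_error(exc: Exception) -> bool:
    """True if exc looks like a botocore ClientError with code ThrottlingException."""
    response = getattr(exc, "response", None)
    return isinstance(response, dict) and response.get("Error", {}).get("Code") == "ThrottlingException"


def call_with_backoff(call: Callable[[], T],
                      is_retryable: Callable[[Exception], bool] = is_throttling_error,
                      max_attempts: int = 5,
                      base_delay: float = 0.5,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry call() with exponential backoff and full jitter on retryable errors."""
    for attempt in range(max_attempts):
        try:
            return call()
        except Exception as exc:
            # Give up on non-retryable errors or after the final attempt.
            if not is_retryable(exc) or attempt == max_attempts - 1:
                raise
            # Full jitter: wait a random time between 0 and base_delay * 2**attempt.
            sleep(random.uniform(0, base_delay * 2 ** attempt))
    raise ValueError("max_attempts must be at least 1")
```

With boto3, `call` would typically be a closure such as `lambda: client.converse(...)`; the injectable `sleep` parameter exists only to make the helper easy to test.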

Serverless Architecture: We used AWS Lambda and Amazon Bedrock to maintain a cost-effective, scalable solution that didn’t require complex infrastructure management or model hosting.

Additional contextual understanding: To improve detection and reduce false positives, we used Anthropic Claude Sonnet 4.0 to generate text descriptions for each UIN and create detection parameters.

Solution Overview

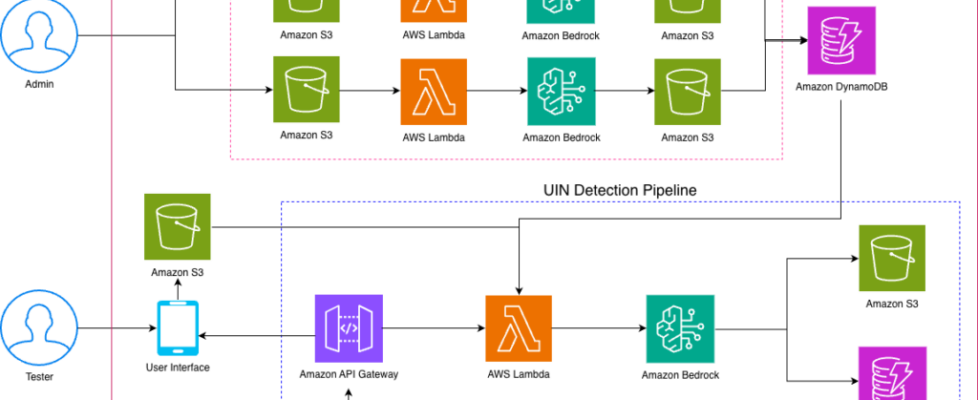

The Intelligent Operational Readiness (IORA) solution, shown in the preceding architecture diagram, includes the following key services:

- API Gateway: Amazon API Gateway handles user requests and routes them to the appropriate Lambda functions

- Synchronous Image Processing: Amazon Nova Pro in Amazon Bedrock analyzes images with response times of 2–5 seconds

- Progress Tracking: The system tracks UIN detection progress (% UINs detected per module)

- Data Storage: Amazon Simple Storage Service (Amazon S3) stores module images, UIN reference pictures, and results. Amazon DynamoDB stores structured verification data

- Compute: AWS Lambda is used for image analysis and data operations

- Model inference: Amazon Bedrock is used for real-time inference for object detection as well as batch inference for description generation
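Progress tracking reduces to simple set arithmetic. As a sketch, assuming each module's required UINs come from its BOM and the detected UINs come from the stored detection results, the percentage per module could be computed as follows. The function names and input shapes are hypothetical.

```python
from typing import Dict, Set


def module_progress(required: Set[str], detected: Set[str]) -> float:
    """Percentage of a module's required UINs that have been detected."""
    if not required:
        return 100.0  # nothing to verify
    # Detections of UINs outside the BOM do not count toward progress.
    return 100.0 * len(required & detected) / len(required)


def progress_by_module(bom: Dict[str, Set[str]], detections: Dict[str, Set[str]]) -> Dict[str, float]:
    """Progress for every module; a module with no detections yet reports 0%."""
    return {module: module_progress(required, detections.get(module, set()))
            for module, required in bom.items()}


print(progress_by_module({"M-01": {"A", "B", "C", "D"}, "M-02": {"E"}},
                         {"M-01": {"A", "B", "X"}}))
# {'M-01': 50.0, 'M-02': 0.0}
```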

Description Generation Pipeline

The description generation pipeline is the first of several key systems that work together to automate the ORT process. It creates a standardized knowledge base for component identification and runs as a batch process when new modules are introduced. Images taken at the fulfillment center vary in lighting conditions and camera angles, which can affect the model’s ability to consistently detect the right component. By using high-quality reference images, we can generate standardized descriptions for each UIN. We then generate detection rules using the BOM, which lists the required UINs in each module along with their quantities and specifications. This process makes sure that each UIN has a standardized description and appropriate detection rules, creating a robust foundation for the subsequent detection and evaluation processes.

The workflow is as follows:

- Admin uploads UIN images and BOM data

- Lambda function triggers two parallel processes:

  - Path A: UIN description generation
    - Process each UIN’s reference images through Claude Sonnet 4.0
    - Generate detailed UIN descriptions
    - Consolidate multiple descriptions into one description per UIN
    - Store consolidated descriptions in DynamoDB

  - Path B: Detection rule creation
    - Combine UIN descriptions with BOM data
    - Generate module-specific detection rules
    - Create false positive detection patterns
    - Store rules in DynamoDB
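Path A can be sketched with the Amazon Bedrock Converse API, which boto3 exposes as `converse` on a `bedrock-runtime` client: one call per reference image, then a text-only call that consolidates the per-image descriptions into one. This is a minimal sketch under several assumptions: the prompts, the `uin`/`description` item layout, and the function names are hypothetical; the model ID is passed in rather than hard-coded; and it uses synchronous calls for readability, whereas the post runs description generation as Amazon Bedrock batch inference.

```python
from typing import Any, List

# Hypothetical prompts; the production prompts are not published.
DESCRIBE_PROMPT = (
    "This reference photo shows fulfillment center component {uin}. "
    "Describe its shape, color, markings, and typical mounting position."
)
CONSOLIDATE_PROMPT = (
    "Merge these descriptions of component {uin} into one concise, "
    "standardized description:\n{descriptions}"
)
INFERENCE_CONFIG = {"maxTokens": 512, "temperature": 0.0}


def _reply_text(response: dict) -> str:
    """Extract the first text block from a Converse API response."""
    return response["output"]["message"]["content"][0]["text"]


def describe_uin(client: Any, model_id: str, uin: str, images: List[bytes],
                 image_format: str = "jpeg") -> str:
    """Describe each reference image, then consolidate into one description per UIN."""
    per_image = []
    for image in images:
        content = [
            {"image": {"format": image_format, "source": {"bytes": image}}},
            {"text": DESCRIBE_PROMPT.format(uin=uin)},
        ]
        response = client.converse(modelId=model_id,
                                   messages=[{"role": "user", "content": content}],
                                   inferenceConfig=INFERENCE_CONFIG)
        per_image.append(_reply_text(response))

    # Consolidation step: a text-only request over all per-image descriptions.
    joined = "\n".join(f"- {text}" for text in per_image)
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [
            {"text": CONSOLIDATE_PROMPT.format(uin=uin, descriptions=joined)}]}],
        inferenceConfig=INFERENCE_CONFIG,
    )
    return _reply_text(response)


def store_description(table: Any, uin: str, description: str) -> None:
    """Write the consolidated description to a DynamoDB table (boto3 Table resource)."""
    table.put_item(Item={"uin": uin, "description": description})
```

Against real resources, `client` would be `boto3.client("bedrock-runtime")` and `table` would be `boto3.resource("dynamodb").Table(...)` for a table whose partition key is `uin`, which is itself an assumption.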

False positive detection patterns

To improve output consistency, we optimized the prompt by adding rules for common false positives. These rules help filter out objects that are not relevant for detection. For instance, a rule can specify that triangle signs must show a gate number and an arrow, and that generic signs should not be detected.
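Path B and the false positive patterns both end up as prompt text. The following sketch shows one way to assemble a module-specific detection prompt from the BOM quantities, the consolidated UIN descriptions, and a list of false positive rules, and to request JSON output. The function name, the prompt wording, and the JSON schema are assumptions for illustration; the example rules paraphrase the triangle-sign example above.

```python
from typing import Dict, List

# Hypothetical output schema the detection prompt asks the model to follow.
OUTPUT_INSTRUCTIONS = (
    'Respond with JSON only: {"detections": [{"uin": "...", "bbox": [x1, y1, x2, y2], '
    '"installed": true, "defect": null, "confidence": 0.0}]}'
)


def build_detection_prompt(module_id: str, bom: Dict[str, int],
                           descriptions: Dict[str, str],
                           false_positive_rules: List[str]) -> str:
    """Assemble a module-specific detection prompt (illustrative wording)."""
    lines = [f"You are verifying module {module_id}. Detect only the components listed below.",
             "Required components:"]
    for uin, qty in sorted(bom.items()):
        description = descriptions.get(uin, "no description available")
        lines.append(f"- {uin} (expected quantity {qty}): {description}")
    if false_positive_rules:
        lines.append("Do not report these common false positives:")
        lines.extend(f"- {rule}" for rule in false_positive_rules)
    lines.append(OUTPUT_INSTRUCTIONS)
    return "\n".join(lines)


print(build_detection_prompt(
    "M-01",
    {"UIN-101": 2},
    {"UIN-101": "Yellow triangle sign showing a gate number and an arrow"},
    ["Triangle signs without a gate number and arrow", "Generic signs"],
))
```

Keeping the rules as data rather than hard-coded prompt text makes it straightforward to store them per module in DynamoDB, as the Path B workflow describes.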

UIN Detection Evaluation Pipeline

This pipeline handles real-time component verification. We send the tester’s images, the module-specific detection rules, and the UIN descriptions to Nova Pro in Amazon Bedrock. The outputs are the detected UINs with bounding boxes, along with installation status, defect identification, and confidence scores.

The Lambda function processes each module image using the selected configuration.
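A minimal sketch of the per-image detection call, assuming the Converse API on a boto3 `bedrock-runtime` client. The model ID `amazon.nova-pro-v1:0` is an assumption to check against the model or cross-Region inference profile IDs available in your account, and the inference settings and function name are illustrative. The image block is placed before the text prompt, and the call latency is measured alongside the reply.

```python
import time
from typing import Any, Tuple

NOVA_PRO_MODEL_ID = "amazon.nova-pro-v1:0"  # assumption: verify the ID for your Region


def detect_uins(client: Any, image_bytes: bytes, prompt: str,
                image_format: str = "jpeg",
                model_id: str = NOVA_PRO_MODEL_ID) -> Tuple[str, float]:
    """Send one module image plus the detection prompt to Nova Pro.

    Returns the raw model text and the call latency in seconds.
    """
    start = time.perf_counter()
    response = client.converse(
        modelId=model_id,
        messages=[{
            "role": "user",
            "content": [
                {"image": {"format": image_format, "source": {"bytes": image_bytes}}},
                {"text": prompt},
            ],
        }],
        inferenceConfig={"maxTokens": 2048, "temperature": 0.0},
    )
    latency = time.perf_counter() - start
    return response["output"]["message"]["content"][0]["text"], latency
```

In a Lambda function, `client` would typically be created once outside the handler with `boto3.client("bedrock-runtime")` so that it is reused across invocations. Logging the returned latency makes it easy to compare against the 2–5 second response times reported in this post.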

End-to-End Application Pipeline

The application brings everything together and provides testers in the fulfillment center with a production-ready user interface. It also provides comprehensive analysis including precise UIN identification, bounding box coordinates, installation status verification, and defect detection with confidence scoring.
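Turning the model’s reply into bounding boxes and scores takes some defensive parsing. The following sketch extracts the JSON object from the reply (models sometimes surround it with a Markdown fence or prose), drops detections below a confidence threshold, and converts box coordinates to pixels. It assumes a hypothetical `detections` schema with `uin`, `bbox`, `installed`, `defect`, and `confidence` fields, and that coordinates are normalized to a 0–1000 range; treat that scale as an assumption and confirm it in the Amazon Nova user guide. The 0.5 threshold is arbitrary.

```python
import json
import re
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Detection:
    uin: str
    bbox: Tuple[int, int, int, int]  # pixel coordinates: x1, y1, x2, y2
    installed: bool
    defect: Optional[str]
    confidence: float


def parse_detections(raw_text: str, image_width: int, image_height: int,
                     min_confidence: float = 0.5, scale: float = 1000.0) -> List[Detection]:
    """Parse the model reply into Detection objects (assumed schema and coordinate scale)."""
    # Take everything from the first "{" to the last "}" to skip any surrounding fence or prose.
    match = re.search(r"\{.*\}", raw_text, re.DOTALL)
    if match is None:
        return []
    payload = json.loads(match.group(0))

    detections = []
    for item in payload.get("detections", []):
        confidence = float(item.get("confidence", 0.0))
        if confidence < min_confidence:
            continue
        x1, y1, x2, y2 = item["bbox"]
        bbox = (round(x1 / scale * image_width), round(y1 / scale * image_height),
                round(x2 / scale * image_width), round(y2 / scale * image_height))
        detections.append(Detection(item["uin"], bbox, bool(item.get("installed", False)),
                                    item.get("defect"), confidence))
    return detections
```

`json.loads` raises `json.JSONDecodeError` on malformed replies; a production version would likely catch that and route the image to manual review, which fits the UI’s manual override step.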

The workflow, which is reflected in the UI, is as follows:

- A tester securely uploads the images to Amazon S3 from the frontend—either by taking a photo or uploading it manually. Images are automatically encrypted at rest in S3 using AWS Key Management Service (AWS KMS).

- The upload triggers verification through a call to the UIN verification API endpoint. API calls between services use AWS Identity and Access Management (IAM) role-based authentication.

- A Lambda function retrieves the images from S3.

- Amazon Nova Pro detects required UINs from each image.

- The results of the UIN detection are stored in DynamoDB with encryption enabled.
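The upload-to-storage flow can be sketched as a Lambda handler behind an API Gateway proxy integration. This is a minimal sketch under assumptions: the request body carries hypothetical `bucket`, `key`, and `moduleId` fields, detection is passed in as a function so the example stays independent of any particular model call, and the DynamoDB item layout is illustrative. One detail that is easy to miss: the boto3 DynamoDB resource API rejects Python `float` values, so confidence scores are converted to `Decimal` before `put_item`.

```python
import json
from decimal import Decimal
from typing import Any, Callable, Dict, List


def to_dynamodb(value: Any) -> Any:
    """Recursively convert floats to Decimal; the boto3 DynamoDB resource rejects floats."""
    if isinstance(value, float):
        return Decimal(str(value))
    if isinstance(value, dict):
        return {k: to_dynamodb(v) for k, v in value.items()}
    if isinstance(value, list):
        return [to_dynamodb(v) for v in value]
    return value


def handle_verification(event: Dict[str, Any], s3: Any, table: Any,
                        detect: Callable[[bytes, str], List[Dict[str, Any]]]) -> Dict[str, Any]:
    """API Gateway proxy handler body: load image, detect UINs, store results."""
    body = json.loads(event.get("body") or "{}")
    bucket, key, module_id = body["bucket"], body["key"], body["moduleId"]

    # Retrieve the uploaded image from S3.
    image_bytes = s3.get_object(Bucket=bucket, Key=key)["Body"].read()

    # Run UIN detection (injected so this sketch does not depend on a specific model call).
    detections = detect(image_bytes, module_id)

    # Persist the results; the table is assumed to be keyed on moduleId and imageKey.
    table.put_item(Item=to_dynamodb({"moduleId": module_id, "imageKey": key,
                                     "detections": detections}))

    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"moduleId": module_id, "detectedCount": len(detections)}),
    }
```

When deployed, `s3` would be `boto3.client("s3")`, `table` would be `boto3.resource("dynamodb").Table(...)`, and a `lambda_handler(event, context)` would call `handle_verification` with those objects. Input validation and error responses are omitted, and encryption at rest with AWS KMS is configured on the bucket and table rather than in this code.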

The following figure shows the UI after an image has been uploaded and processed. The information includes the UIN name, a description, when it was last updated, and so on.

The following image shows a dashboard in the UI where users can review the results and manually override any inputs if necessary.

Results & Learnings

After building the prototype, we tested the solution in multiple fulfillment centers using Amazon Kindle tablets. We achieved 92% precision on a representative set of test modules, with a latency of 2–5 seconds per image. Compared to manual operational readiness testing, IORA reduces the total testing time by 60%. Amazon Nova Pro was also able to identify labels missing from the ground truth data, which gave us an opportunity to improve the quality of the dataset.

“The precision results directly translate to time savings – 40% coverage equals 40% time reduction for our field teams. When the solution detects a UIN, our fulfillment center teams can confidently focus only on finding missing components.”

– Wayne Jones, Sr Program Manager, Amazon Global Engineering Services
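The quote draws on two different measures, and it helps to keep them apart. Precision is the share of reported UINs that are actually present; coverage (recall) is the share of required UINs that the model detected. Under the simplifying assumption that every UIN takes the same time to verify by hand, coverage is what maps to time saved, because testers only need to search for the undetected remainder. A minimal sketch with hypothetical set-based inputs:

```python
from typing import Set, Tuple


def precision_and_coverage(detected: Set[str], ground_truth: Set[str]) -> Tuple[float, float]:
    """Precision = TP / detected; coverage (recall) = TP / ground truth."""
    true_positives = len(detected & ground_truth)
    precision = true_positives / len(detected) if detected else 0.0
    coverage = true_positives / len(ground_truth) if ground_truth else 0.0
    return precision, coverage


# Example: 4 UINs reported, 3 of them correct, out of 6 required.
p, c = precision_and_coverage({"A", "B", "C", "X"}, {"A", "B", "C", "D", "E", "F"})
print(p, c)  # 0.75 0.5
```

Note that a "false positive" under this calculation can also be a label missing from the ground truth, which is how the team found gaps in its dataset.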

Key learnings:

- Amazon Nova Pro excels at visual recognition tasks when provided with rich contextual descriptions, and achieves higher accuracy this way than with standalone image comparison.

- Ground truth data quality significantly impacts model performance. The solution identified missing labels in the original dataset and helped improve the quality of the human-labeled data.

- Modules with fewer than 20 UINs performed best, and we saw performance degradation for modules with 40 or more UINs. Hierarchical processing is needed for modules with over 40 components.

- The serverless architecture using Lambda and Amazon Bedrock provides cost-effective scalability without infrastructure complexity.
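The post does not describe how hierarchical processing would work. One simple option, sketched here as an assumption rather than the team’s approach, is to split a large module’s UINs into groups of at most 20 (the size range that performed best) and issue one detection request per group, merging the results. Strictly speaking this is flat batching, not a hierarchy; `detect_subset` is a hypothetical stand-in for a detection call restricted to the listed UINs.

```python
from typing import Callable, Iterable, List, Set


def chunk_uins(uins: Iterable[str], max_per_request: int = 20) -> List[List[str]]:
    """Split a module's UINs into sorted groups of at most max_per_request."""
    ordered = sorted(set(uins))
    return [ordered[i:i + max_per_request] for i in range(0, len(ordered), max_per_request)]


def detect_in_groups(uins: Iterable[str],
                     detect_subset: Callable[[List[str]], Set[str]],
                     max_per_request: int = 20) -> Set[str]:
    """Run one detection request per group and merge the results."""
    found: Set[str] = set()
    for group in chunk_uins(uins, max_per_request):
        # Keep only UINs this request asked about, discarding off-list detections.
        found |= detect_subset(group) & set(group)
    return found


# Example: a 45-UIN module becomes three requests of 20, 20, and 5 UINs.
uins = [f"UIN-{i:02d}" for i in range(45)]
print([len(group) for group in chunk_uins(uins)])  # [20, 20, 5]
```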

Conclusion

This post demonstrates how to use Amazon Nova and Anthropic Claude Sonnet in Amazon Bedrock to build an automated image recognition solution for operational readiness testing. We showed you how to:

- Process and analyze images at scale using Amazon Nova models

- Generate and enrich component descriptions to improve detection accuracy

- Build a reliable pipeline for real-time component verification

- Store and manage results efficiently using managed storage services

This approach can be adapted for similar use cases that require automated visual inspection and verification across various industries including manufacturing, logistics, and quality assurance. Moving forward, we plan to enhance the system’s capabilities, conduct pilot implementations, and explore broader applications across Amazon operations.

For more information about Amazon Nova and other foundation models in Amazon Bedrock, visit the Amazon Bedrock documentation page.

About the Authors

Elad Dwek