The Gap Analysis Protocol: Engineering the “Consultant-in-the-Loop”

How to use Google’s Agent Development Kit (ADK) to replace probabilistic guessing with state-aware verification.

The Assumption Trap

In our previous articles, we equipped the agent with powerful tools to search and execute SQL. But a tool is only as good as the intent behind it. The single biggest barrier to enterprise AI adoption is not “hallucination” in the creative sense, but Confident Assumption.

Consider a common scenario: A user asks, “How was performance in the South?”

A standard LLM, trained to be helpful above all else, rushes to answer. It implicitly fills in the blanks to generate a valid SQL query. It assumes “Performance” means Revenue (ignoring Margin or Volume) and “South” means US_South (ignoring EMEA South or APAC South). It executes the query, gets a number, and presents it with 100% confidence.

The user sees the number and makes a business decision, never realizing that the agent answered a completely different question than the one asked. This is the Assumption Trap.

Harvard Business Review notes that this behavior is a primary reason why executives hesitate to deploy generative AI for decision-making. Their research suggests that trust is not built by an AI that always has an answer, but by an AI that knows when not to answer [AI Can’t Replace Human Judgment].

To fix this, we cannot rely on “better prompting.” We need an architectural intervention. We need a system that forces the agent to pause, pull out a “mental slate,” and audit the request for missing information before it ever touches the database.

The Architecture of Uncertainty (Gap Types)

We don’t just tell the LLM to “be careful.” We architecturally enforce caution using a Gap State Manager that persists across conversation turns. This concept is inspired by the “Human-in-the-Loop” design patterns advocated by Google PAIR (People + AI Research), which demonstrate that users are 3x more likely to trust a system that asks clarifying questions rather than one that guesses [People + AI Guidebook].

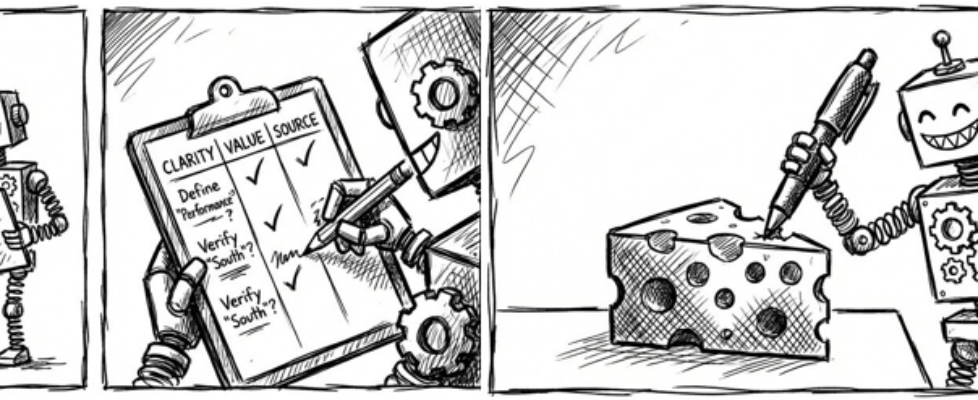

To operationalize this, we define a precise taxonomy of uncertainty. The agent does not simply report “I am confused”; it classifies the missing information into specific Gap Types on its slate:

CLARITY Gaps (Ambiguity):

- The Problem: The user’s intent is vague or has multiple interpretations.

- Example: “Show me the top products.” (Top by revenue? Volume? Growth?)

DEFINITION Gaps (Semantics):

- The Problem: The user uses a business term that is not mapped to a verified SQL formula in the Semantic Graph.

- Example: “What is the Attach Rate?” (The agent knows the tables, but lacks the specific calculation logic).

VALUE Gaps (Data Shape):

- The Problem: The user requests a filter value that might not exist in the database, risking a silent failure.

- Example: “Filter by Asia.” (Does the region column contain the literal value Asia, a code such as APAC, or only country codes? The agent must verify this against the Shape Detector before querying.)

SOURCE Gaps (Lineage):

- The Problem: The agent has identified multiple potential tables for a request and cannot deterministically select the “System of Record.”

- Example: “Revenue” appears in both billing_data and sales_forecast. Which one is the truth?
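To make the VALUE gap concrete, here is a minimal sketch of the check that could run before a filter is placed in a WHERE clause. It assumes the Shape Detector can be approximated by a dictionary of previously profiled distinct values per column. KNOWN_VALUES and check_filter_value are hypothetical names for illustration; they are not part of ADK or of the original system.

```python
# Hypothetical stand-in for the Shape Detector: distinct values per column,
# assumed to have been profiled from the database ahead of time.
KNOWN_VALUES: dict[str, set[str]] = {
    "region": {"US_South", "US_North", "EMEA_South", "APAC_South"},
}


def check_filter_value(column: str, requested: str) -> dict:
    """Return an empty dict if the value is verified, else a VALUE gap record."""
    values = KNOWN_VALUES.get(column)
    if values is None:
        # The column was never profiled, so the value cannot be verified at all.
        return {"type": "VALUE", "details": f"No profile for column '{column}'"}
    if requested in values:
        return {}  # verified: safe to use as a filter
    # Case-insensitive substring matches become candidate suggestions for the user.
    candidates = sorted(v for v in values if requested.lower() in v.lower())
    return {
        "type": "VALUE",
        "details": f"'{requested}' not found in {column}",
        "candidates": candidates,
    }
```

Asking for "South" yields the candidates APAC_South, EMEA_South, and US_South instead of a query that silently returns nothing, which is exactly the ambiguity from the opening scenario.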

By forcing the agent to categorize its uncertainty, we turn a “fuzzy” problem into a “structured” engineering problem. The agent cannot proceed until every checkbox on this slate is resolved.
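A minimal sketch of the slate itself might look like the following. The GapStateManager name comes from the article, but the fields and method names here are assumptions. The sketch keeps state in memory; a real deployment would need to persist it across turns, for example in the agent's session state.

```python
from dataclasses import dataclass, field
from enum import Enum


class GapType(Enum):
    CLARITY = "CLARITY"        # ambiguous intent
    DEFINITION = "DEFINITION"  # business term without a verified SQL formula
    VALUE = "VALUE"            # filter value not verified against the data
    SOURCE = "SOURCE"          # no single system-of-record table


@dataclass
class Gap:
    gap_type: GapType
    details: str
    resolved: bool = False


@dataclass
class GapStateManager:
    gaps: list[Gap] = field(default_factory=list)

    def add(self, gap_type: GapType, details: str) -> Gap:
        gap = Gap(gap_type, details)
        self.gaps.append(gap)
        return gap

    def resolve(self, details: str) -> bool:
        """Mark the first open gap with matching details as resolved."""
        for gap in self.gaps:
            if not gap.resolved and gap.details == details:
                gap.resolved = True
                return True
        return False

    def open_gaps(self) -> list[Gap]:
        return [g for g in self.gaps if not g.resolved]

    def can_proceed(self) -> bool:
        # Every item on the slate must be resolved before a query may run.
        return not self.open_gaps()
```

Modeling gap types as an Enum rather than free text is what makes the uncertainty "structured": downstream code can branch on the type instead of parsing the model's prose.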

The Control Loop (Using Google Agent Development Kit)

To implement this state machine, we cannot rely on the LLM to police itself. We must wrap the model in a deterministic “Control Loop.”

We use Google’s Agent Development Kit (ADK), an open-source framework that lets us run our own code before and after each model call, and before and after each tool call. This allows us to implement what NVIDIA refers to as “Active Guardrails”: a safety layer that validates output before it reaches the user or the database [NVIDIA NeMo Guardrails Architecture].

We implement two specific architectural hooks:

1. The Injection (before_model_call): Before the LLM even sees the user’s message, this hook injects the current “Gap Slate” into the system instructions.

- The Mechanism: The hook pulls the list of open gaps (e.g., CLARITY, VALUE) from the State Manager and appends them to the prompt.

- The Effect: This ensures the model is explicitly aware of its own ignorance. It transforms the prompt from a generic “Answer the user” into a constrained instruction: “You have 2 Open Gaps. You must resolve them before proceeding.”

- Industry Validation: This pattern mirrors the “Context Steering” techniques advocated by Microsoft Research, which show that dynamically updating the system prompt with state constraints reduces hallucination by over 40% [Grounding LLMs via Context Injection].
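The injection step can be sketched as a pure function that rebuilds the system instruction from the current slate. In ADK itself, the hook the article calls before_model_call corresponds to the LlmAgent parameter before_model_callback, which sees the request before it is sent to the model. This sketch deliberately avoids importing ADK and shows only the prompt-building logic; inject_gap_slate and the gap dictionaries are hypothetical.

```python
def inject_gap_slate(base_instruction: str, open_gaps: list[dict[str, str]]) -> str:
    """Append the current Gap Slate to the system instruction (hypothetical helper)."""
    if not open_gaps:
        return base_instruction + "\n\nGap Slate: no open gaps. You may proceed."
    # One line per open gap, tagged with its type so the model can address each one.
    lines = [f"- [{g['type']}] {g['details']}" for g in open_gaps]
    header = (
        f"Gap Slate: you have {len(open_gaps)} open gap(s). "
        "You must resolve them before proceeding. Do not call execute_query."
    )
    return base_instruction + "\n\n" + header + "\n" + "\n".join(lines)
```

Rebuilding the instruction from state on every call, rather than appending to it over time, means the prompt always reflects the latest slate and resolved gaps disappear automatically.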

2. The Gatekeeper (after_model_call): This is the firewall. In a standard agent, the LLM has the privilege to call tools whenever it wants. In our architecture, we revoke that privilege.

- The Mechanism: When the agent attempts to call the execute_query tool, this callback intercepts the request before it executes. It inspects the GapStateManager.

- The Logic: If the state shows any open CLARITY or VALUE gaps, the Gatekeeper rejects the tool call.

- The Pivot: Instead of running the SQL, the system overrides the response and forces the agent to ask the user a clarifying question.

This guarantees that no SQL is executed while a blocking gap remains open on the slate. Once a gap is recorded, the agent cannot “guess” its way past it, because the underlying code will not permit the function to run.
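The Gatekeeper's decision logic fits in a few lines. The article attaches it to the after-model hook; in ADK a comparable interception point is before_tool_callback, where returning a dictionary skips the real tool and uses that dictionary as the tool's result. The sketch below is framework-free and assumes gaps are plain dictionaries; gatekeeper and BLOCKING_TYPES are hypothetical names.

```python
from typing import Optional

# Per the article, open CLARITY or VALUE gaps block query execution.
BLOCKING_TYPES = {"CLARITY", "VALUE"}


def gatekeeper(tool_name: str, open_gaps: list[dict[str, str]]) -> Optional[dict[str, str]]:
    """Return None to let the tool call run, or a replacement result that blocks it."""
    if tool_name != "execute_query":
        return None  # only the SQL execution tool is gated
    blocking = [g for g in open_gaps if g["type"] in BLOCKING_TYPES]
    if not blocking:
        return None
    questions = "; ".join(g["details"] for g in blocking)
    return {
        "status": "blocked",
        "reason": f"{len(blocking)} open gap(s) must be resolved first",
        # Handed back to the model so it asks the user instead of running SQL.
        "ask_user": f"Before I run this query, could you clarify: {questions}?",
    }
```

Because the check lives in ordinary code rather than in the prompt, the model cannot talk its way past it: a blocked call never reaches the database.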

The Tools for Disambiguation

We established that the agent has a “Slate” (Gap State) and a “Gatekeeper” (Callbacks). But how does the agent actually write on that slate? It cannot simply “think” the gap into existence; it must perform a deterministic action.

To give the agent “write access” to its own memory, we define specialized tools in the Function Manifest. This aligns with the ReAct (Reason + Act) paradigm, where agents solve problems by interleaving thought traces with specific actions [ReAct: Synergizing Reasoning and Acting in Language Models].

1. The manage_gaps Tool: When the agent detects ambiguity during its reasoning phase, it is instructed to invoke this tool immediately rather than guess.

- The Syntax: manage_gaps(action='add', type='CLARITY', details='Ambiguous metric: Performance').

- The Effect: This creates a structured record in the State Manager: a persistent artifact of the agent’s own uncertainty that survives beyond the limits of the context window.

2. The update_user_confirmation Tool: When a user answers a clarifying question (e.g., “I meant Gross Revenue”), the agent must lock this in.

- The Syntax: update_user_confirmation(item='confirmed_table', value='revenue_daily').

- The Effect: This updates the persistent confirmation state, signaling to the Gatekeeper (described in the Control Loop section above) that the safety check has been passed.
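Both tools can be sketched as ordinary functions over a state dictionary, keeping the keyword names from the call syntax above. Passing state explicitly is an assumption made so the sketch runs standalone; inside a framework the state would come from the agent's session rather than a function argument. The validation rules and return shapes are likewise illustrative, not the article's implementation.

```python
from typing import Any

VALID_TYPES = {"CLARITY", "DEFINITION", "VALUE", "SOURCE"}


def _open_count(gaps: list[dict[str, Any]]) -> int:
    return sum(1 for gap in gaps if not gap["resolved"])


def manage_gaps(state: dict[str, Any], action: str, type: str, details: str) -> dict[str, Any]:
    """Add or resolve a gap on the slate. `type` mirrors the article's call syntax."""
    if type not in VALID_TYPES:
        return {"error": f"Unknown gap type: {type}"}
    gaps = state.setdefault("gaps", [])
    if action == "add":
        gaps.append({"type": type, "details": details, "resolved": False})
        return {"status": "added", "open": _open_count(gaps)}
    if action == "resolve":
        for gap in gaps:
            if not gap["resolved"] and gap["type"] == type and gap["details"] == details:
                gap["resolved"] = True
                return {"status": "resolved", "open": _open_count(gaps)}
        return {"error": "No matching open gap"}
    return {"error": f"Unknown action: {action}"}


def update_user_confirmation(state: dict[str, Any], item: str, value: str) -> dict[str, Any]:
    """Lock in a user's answer, e.g. item='confirmed_table', value='revenue_daily'."""
    state.setdefault("confirmations", {})[item] = value
    return {"status": "confirmed", "item": item, "value": value}
```

Returning structured error dictionaries, rather than raising, lets the model read the failure and correct its own tool call on the next turn.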

The Feedback Loop (after_tool_call): The architecture is closed by the after_tool_call hook. When these tools execute, the ADK intercepts the result and routes it to a specific Tool Handler.

- The Mechanism: The registry identifies the correct handler (e.g., GapManagerHandler), which updates the state object directly.

- The Result: The next time the before_model_call hook runs, it sees the updated state and modifies the system prompt accordingly.
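The routing step can be sketched as a registry that maps tool names to handler functions. ADK exposes a comparable hook through the after_tool_callback parameter; here it is simulated by a plain after_tool_hook function. The handlers are plain functions standing in for the article's GapManagerHandler, and all names are hypothetical.

```python
from typing import Any, Callable

State = dict[str, Any]
Handler = Callable[[State, dict[str, Any]], None]


def gap_manager_handler(state: State, result: dict[str, Any]) -> None:
    # Record the latest open-gap count reported by manage_gaps.
    if "open" in result:
        state["open_gap_count"] = result["open"]


def confirmation_handler(state: State, result: dict[str, Any]) -> None:
    # Persist the confirmed item so later turns (and the Gatekeeper) can see it.
    if result.get("status") == "confirmed":
        state.setdefault("confirmations", {})[result["item"]] = result["value"]


HANDLER_REGISTRY: dict[str, Handler] = {
    "manage_gaps": gap_manager_handler,
    "update_user_confirmation": confirmation_handler,
}


def after_tool_hook(tool_name: str, result: dict[str, Any], state: State) -> dict[str, Any]:
    """Route a tool result to its handler, then pass the result through unchanged."""
    handler = HANDLER_REGISTRY.get(tool_name)
    if handler is not None:
        handler(state, result)
    return result
```

Tools without a registered handler, such as execute_query, pass through untouched, so adding a new state-aware tool only requires one new registry entry.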

By implementing the Gap Analysis Protocol, we transform the agent from a “Black Box” into a “Glass Box.”

We stop forcing users to be prompt engineers who must write perfect queries. Instead, we engineer the agent to be a Consultant — one that uses a rigorous, state-aware protocol to identify gaps, ask for clarification, and remember the answers.

The result is an agent that prioritizes Accuracy over Speed. It may take one extra turn to ask a question, but it ensures that when the SQL eventually runs, it is running on verified intent, not probability.

Build the Complete System

This article is part of the Cognitive Agent Architecture series. We are walking through the engineering required to move from a basic chatbot to a secure, deterministic Enterprise Consultant.

To see the full roadmap — including Semantic Graphs (The Brain), Gap Analysis (The Conscience), and Sub-Agent Ecosystems (The Organization) — check out the Master Index below:

The Cognitive Agent Architecture: From Chatbot to Enterprise Consultant

The Gap Analysis Protocol: Engineering the “Consultant-in-the-Loop” was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.