LAI #113: The Engineering Work That Decides Whether AI Holds Up

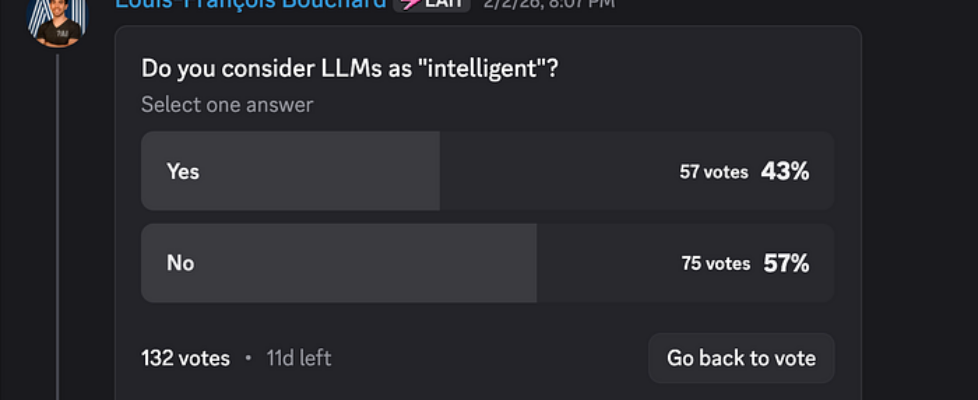

Author(s): Towards AI Editorial Team

Originally published on Towards AI.

Good morning, AI enthusiasts,

Shipping AI in 2026 is about operational discipline: catching data drift before users do, keeping inference fast as workloads grow, choosing architectures that survive real traffic, and understanding what’s actually happening inside modern models. That’s what this week’s issue is built around. We cover how teams detect and respond to data drift in production, walk through the mechanics of speeding up LLM inference with techniques like KV caching and FlashAttention, and revisit microservice design principles that matter when ML systems scale beyond a single pipeline. On the modeling side, we unpack variational autoencoders in plain language and introduce a spectral view of transformers that challenges how most of us think about embeddings. Let’s get into it.

What’s AI Weekly

This week, in What’s AI, I walk through our decision-making process when building AI systems. We will go through two real builds: a single-agent system for marketing content generation and a multi-agent pipeline for article writing. The two projects had different constraints and called for different architectural choices, and both delivered working systems. I’ll also share a cheatsheet we made to help you decide when and what to build in this new agentic era. By the end, you’ll know which questions to ask when designing AI agent systems so you can avoid architectural rework mid-project. Read the article here or watch the video on YouTube.

Louis-François Bouchard, Towards AI Co-founder & Head of Community

Learn AI Together Community Section!

Featured Community post from the Discord

Belocci has built Uni Trainer, a desktop-first AI training application that provides a modern GUI for training, fine-tuning, and running inference on computer vision, tabular machine learning, and small language models.
It provides a unified desktop interface for model training, dataset handling, live logs, and progress, all without touching the command line. Additionally, it supports local CPU and GPU execution, and optional cloud GPU execution via CanopyWave. Check it out on GitHub and support a fellow community member. If you have any questions or suggestions, share them in the thread!

AI poll of the week!

The room leans no: most of you don’t call LLMs “intelligent.” It’s a healthy split, which probably says more about the word than the models. “Intelligent” isn’t a useful shipping term. Teams ship on measurable behavior (can the model generalize to new tasks, recover from mistakes, use tools reliably, and hit latency/cost targets?) rather than on a label that mixes philosophy with engineering. What’s one measurable behavior you’d use instead of the word “intelligent” when deciding to deploy a model? Let’s talk in the thread!

Collaboration Opportunities

The Learn AI Together Discord community is flooded with collaboration opportunities. If you are excited to dive into applied AI, want a study partner, or even want to find a partner for your passion project, join the collaboration channel! Keep an eye on this section, too, since we share cool opportunities every week!

1. Lcorti is working on a diagnosis toolkit for language models and is looking for participants with industry experience in Natural Language Processing and language models for an ongoing user study. If you have experience with real-world applications of LLMs, find more details in the thread.

2. Matthewakkerhuis is looking for self-taught programmers who have built something and want to bounce project ideas off each other. If this sounds relevant to you, connect with them in the thread!

3. Devonburnedead is looking for team members with a technical background who want to be a part of building something for the industry. If that sounds like you, reach out to them in the thread!

Meme of the week!
Meme shared by aaditya_rx

TAI Curated Section

Article of the week

Data Drift in Production ML: The Complete Detection and Monitoring Guide By Rohan Mistry

When a machine learning model’s accuracy declines in production, data drift is a likely cause. This happens when the statistical properties of the input data shift away from those of the original training set. The article presents a practical framework for detection, using statistical methods such as the KS-test and the Population Stability Index to measure these shifts. It recommends addressing drift through continuous monitoring and a systematic retraining strategy using recent data, emphasizing the need for versioning to enable safe rollbacks.

Our must-read articles

1. Variational Autoencoders in simple language By Sachin Soni

This overview explains the mechanics of Variational Autoencoders (VAEs), generative models designed to create new data variations. It outlines the VAE architecture, in which an encoder maps the input to a probability distribution (not a fixed point), which lets the model sample new variations. It details the training process, which balances two objectives: a reconstruction loss to ensure accuracy and a KL divergence term to organize the latent space for smooth, continuous outputs. It also clarifies the reparameterization trick, a technique essential for making these models trainable by addressing the issue of randomness in the network.

2. From Spatial Navigation to Spectral Filtering By Erez Azaria

The common spatial analogy for transformer models, where concepts are points on a map, struggles to explain key behaviors during inference. Specifically, it doesn’t account for why embedding magnitudes grow while their directional similarity remains high across layers. The article explores an alternative framework based on spectral filtering, treating embeddings as signals rather than coordinates. In this model, the attention mechanism selects signal channels, and the feed-forward network acts as a mixer.
This perspective explains the observed phenomena as the modulation of a primary carrier signal and reframes next-token prediction as a filtering process to find the most resonant signal.

3. Principles of Microservice Architecture By TechwidSush

This article provides a guide to microservice architecture, outlining 14 key design principles, including single responsibility, decentralized data management, and independent deployment. It contrasts synchronous and asynchronous communication methods and explains when to use each. The text also details critical communication patterns, such as API Gateway, message queues, and event-driven architecture. To ensure system reliability, it highlights best practices such as the circuit breaker pattern, idempotency, and distributed tracing.

[…]
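
For readers who have not met the circuit breaker pattern mentioned in the microservices summary, here is a minimal Python sketch of the idea. It is not taken from the article: the class name `CircuitBreaker`, the exception `CircuitOpenError`, and the parameters `max_failures`, `reset_after`, and `clock` are all hypothetical names chosen for illustration, and a production system would more likely rely on an existing resilience library.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the breaker is open."""


class CircuitBreaker:
    """Hypothetical minimal breaker: closed -> open -> half-open -> closed."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures  # consecutive failures before opening
        self.reset_after = reset_after    # cool-down in seconds before a trial call
        self.clock = clock                # injectable so the breaker is testable
        self.failures = 0
        self.opened_at = None             # None means the breaker is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                # Still cooling down: fail fast instead of hitting the service.
                raise CircuitOpenError("downstream call skipped; breaker is open")
            # Cool-down elapsed: half-open, so one trial call is allowed through.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.max_failures:
                # Open the breaker, or re-open it after a failed trial call.
                self.opened_at = self.clock()
            raise
        # A success closes the breaker and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

In use, a service would wrap each outbound request, for example `breaker.call(fetch_profile, user_id)` with a hypothetical `fetch_profile` function, and treat `CircuitOpenError` as a signal to return a fallback response rather than wait on a dependency that is already failing.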