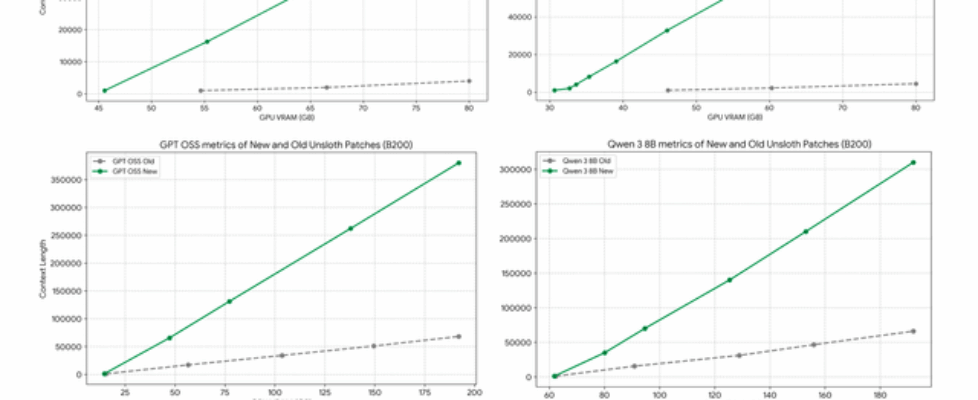

Hey RL folks! We’re excited to show how Unsloth now enables 7x longer context lengths (up to 12x) for Reinforcement Learning compared with setups that have all optimizations turned on (kernels lib + FA2 + chunked cross-entropy kernel)!

Using 3 new techniques we developed, you can now train gpt-oss-20b with QLoRA at up to 20K context on a 24GB card, all with no accuracy degradation.
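Part of why long-context RL runs out of memory is the log-prob / cross-entropy step: a full `[seq_len, vocab]` logits table gets materialized. The baseline above already chunks this step. As a rough illustration of that general idea only (not Unsloth's kernel and not one of the 3 new techniques), here is a pure-Python sketch assuming a plain linear LM head; every function name here is hypothetical.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def project(hidden: Vector, lm_head: Matrix) -> Vector:
    """Logits for one position: one dot product per vocabulary row of the LM head."""
    return [sum(w * h for w, h in zip(row, hidden)) for row in lm_head]


def log_prob_of(logits: Vector, target: int) -> float:
    """Numerically stable log-softmax, evaluated only at the target token."""
    m = max(logits)
    return logits[target] - (m + math.log(sum(math.exp(x - m) for x in logits)))


def full_cross_entropy(hiddens: List[Vector], lm_head: Matrix, targets: List[int]) -> float:
    # Materializes the whole [seq_len, vocab] logits table before reducing it.
    logits = [project(h, lm_head) for h in hiddens]
    return -sum(log_prob_of(row, t) for row, t in zip(logits, targets)) / len(targets)


def chunked_cross_entropy(
    hiddens: List[Vector], lm_head: Matrix, targets: List[int], chunk_size: int
) -> float:
    # Same loss, but only a [chunk_size, vocab] slice of logits exists at a time.
    total = 0.0
    for start in range(0, len(targets), chunk_size):
        stop = start + chunk_size
        chunk_logits = [project(h, lm_head) for h in hiddens[start:stop]]
        total -= sum(log_prob_of(row, t) for row, t in zip(chunk_logits, targets[start:stop]))
        del chunk_logits  # drop this chunk's logits before building the next one
    return total / len(targets)


def logits_gib(rows: int, vocab_size: int, bytes_per_elem: int = 4) -> float:
    """Size in GiB of a logits buffer with `rows` positions (default: fp32)."""
    return rows * vocab_size * bytes_per_elem / 2**30
```

Both functions return the same loss; only peak logits memory differs. Assuming a vocabulary of about 201K tokens (roughly gpt-oss's), `logits_gib(20_000, 201_088)` comes to about 15 GiB in fp32 for a single 20K-token sequence, versus under 1 GiB for a 1,024-row chunk, which hints at why this step matters on a 24GB card.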

Unsloth GitHub: https://github.com/unslothai/unsloth

- For larger GPUs, Unsloth now trains gpt-oss QLoRA with 380K context on a single 192GB NVIDIA B200 GPU.

- Qwen3-8B GRPO reaches 110K context on an 80GB VRAM H100 via vLLM + QLoRA, and 65K for gpt-oss with BF16 LoRA.

- Unsloth GRPO RL runs with Llama, Gemma, and all other models automatically support longer contexts.

All features in Unsloth can also be combined and work well together:

- Unsloth’s weight-sharing feature with vLLM and our Standby Feature in Memory Efficient RL

- Unsloth’s Flex Attention for long context gpt-oss and our 500K Context Training

- Float8 training in FP8 RL and Unsloth’s async gradient checkpointing, and much more

You can read our educational blogpost for detailed analysis, benchmarks and more:

https://unsloth.ai/docs/new/grpo-long-context

And you can of course train any model using our new features and kernels via our free fine-tuning notebooks:

https://docs.unsloth.ai/get-started/unsloth-notebooks

Here are some free Colab notebooks with the 7x longer context support baked in:

- gpt-oss-20b GSPO Colab

- Qwen3-VL-8B Vision RL

- Qwen3-8B – FP8 L4 GPU

To update Unsloth and automatically get the faster training, run:

```bash
pip install --upgrade --force-reinstall --no-cache-dir --no-deps unsloth
pip install --upgrade --force-reinstall --no-cache-dir --no-deps unsloth_zoo
```
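After upgrading, one quick way to confirm which versions actually ended up installed is to read the package metadata. This is a minimal standard-library sketch (nothing Unsloth-specific; `installed_version` is a hypothetical helper name):

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version string of `package`, or None if it isn't installed."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    # The two distributions upgraded by the pip commands above.
    for pkg in ("unsloth", "unsloth_zoo"):
        print(f"{pkg}: {installed_version(pkg) or 'not installed'}")
```

Run it in the same environment (or notebook kernel) you train in; a stale kernel may still have the old version imported until it is restarted.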

And to set up a model for GRPO runs in Unsloth (with Standby enabled), do:

```python
import os
# Set this before importing Unsloth so vLLM Standby is picked up
os.environ["UNSLOTH_VLLM_STANDBY"] = "1" # Standby = extra 30% context lengths!

from unsloth import FastLanguageModel
import torch

max_seq_length = 20000 # Can increase for longer reasoning traces
lora_rank = 32 # Larger rank = smarter, but slower

model, tokenizer = FastLanguageModel.from_pretrained(
    model_name = "unsloth/Qwen3-4B-Base",
    max_seq_length = max_seq_length,
    load_in_4bit = False, # False for LoRA 16bit
    fast_inference = True, # Enable vLLM fast inference
    max_lora_rank = lora_rank,
)
```
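For anyone new to GRPO: instead of training a separate value model, it samples a group of completions per prompt, scores each with reward functions, and normalizes each reward against its own group. Unsloth and TRL do this internally; the pure-Python sketch below only illustrates a common form of that normalization, (reward - group mean) / (group std + eps). `format_reward` and `group_relative_advantages` are hypothetical names, and the reward assumes plain-string completions.

```python
import math
from typing import List, Sequence


def format_reward(completions: Sequence[str], **kwargs) -> List[float]:
    # Toy reward in the (completions, **kwargs) -> list[float] shape used by
    # TRL-style GRPO reward functions; assumes plain-string completions.
    return [1.0 if "<answer>" in c and "</answer>" in c else 0.0 for c in completions]


def group_relative_advantages(
    rewards: Sequence[float], group_size: int, eps: float = 1e-4
) -> List[float]:
    """Normalize each reward against the other completions for the same prompt.

    `rewards` is laid out prompt by prompt: the first `group_size` entries
    belong to prompt 0, the next `group_size` to prompt 1, and so on.
    """
    if len(rewards) % group_size != 0:
        raise ValueError("rewards must contain a whole number of groups")
    advantages: List[float] = []
    for start in range(0, len(rewards), group_size):
        group = rewards[start:start + group_size]
        mean = sum(group) / group_size
        # Population standard deviation of the group's rewards
        std = math.sqrt(sum((r - mean) ** 2 for r in group) / group_size)
        advantages.extend((r - mean) / (std + eps) for r in group)
    return advantages


if __name__ == "__main__":
    completions = ["<answer>4</answer>", "four", "<answer>5</answer>", "idk"]
    rewards = format_reward(completions)
    print(rewards, group_relative_advantages(rewards, group_size=4))
```

One consequence of this design: if every completion in a group gets the same reward, all its advantages are zero, so that prompt contributes no learning signal for that step.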

Hope you have a lovely day and let me know if you have any questions.