The Context Advantage: How Palantir AIP Operates the Modern Enterprise

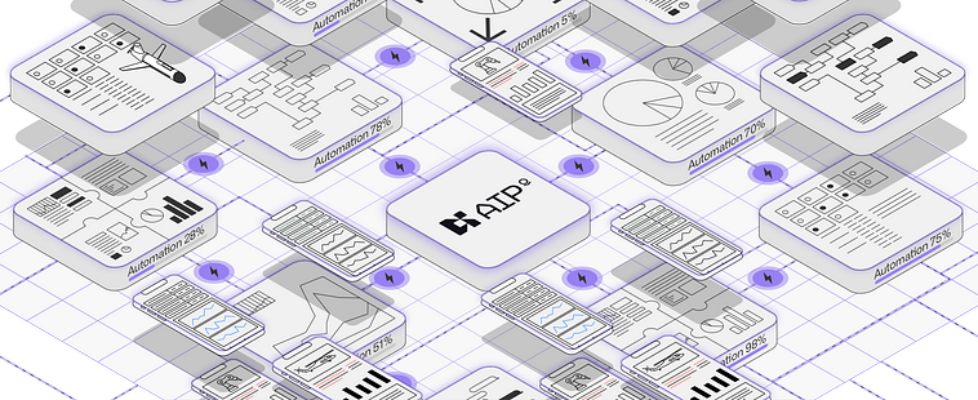

Author(s): Sainath Palla

Originally published on Towards AI.

Over the last couple of years, most conversations about AI have focused on model size, speed, or how many parameters a system can fit into memory. These are useful metrics, but they do not explain why some organisations see operational results while others remain stuck in experimentation. The difference is not the model. The difference is context.

It is similar to how we once compared phones by processor speed. Faster chips looked impressive, but they never explained why one device felt more capable than another. The real difference came from the applications built on top of that hardware. Enterprise AI follows the same pattern. Bigger models may look powerful, but real impact comes from the context they work with and how well that context captures the business.

In the organisations that already use AIP deeply, the pattern is consistent. The model did not get stronger. The context around it did. That is where the advantage lives.

What Context Means Inside AIP

In most AI systems, context is a short prompt or a set of instructions that help the model understand what the user is asking for. In AIP, context is something much deeper. It is not a sentence or a description. It is the structure of the business itself.

Foundry’s ontology is where this structure lives. It is the place where data is shaped into objects that carry meaning, relationships, and constraints. An asset is connected to the events that changed it. A shipment is connected to its orders, routes, and delays. A supplier is connected to the performance history that describes how reliable they have been over time. These relationships form the grounding that AIP uses when it reasons.

In the supply-chain use case I presented at the FuturOps Rodeo, this pattern became very clear. We passed specific ontology objects such as the shortage part, the affected production orders, and the performance history of alternative suppliers.
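
To make the idea of passing specific ontology objects more concrete, here is a minimal Python sketch. It is not the Foundry or AIP API: the class names, fields, the `build_context` helper, and all sample values are hypothetical, chosen only to show objects that carry their own relationships and history, and a context assembled strictly from the objects handed in.

```python
from dataclasses import dataclass, field


# Hypothetical stand-ins for ontology objects; not the Foundry or AIP API.
@dataclass
class Supplier:
    name: str
    deliveries_on_time: list[bool]  # performance history, one entry per delivery

    def reliability(self) -> float:
        # Share of past deliveries that arrived on time.
        return sum(self.deliveries_on_time) / len(self.deliveries_on_time)


@dataclass
class ProductionOrder:
    order_id: str
    part_id: str
    quantity: int


@dataclass
class Part:
    part_id: str
    in_shortage: bool
    alternative_suppliers: list[Supplier] = field(default_factory=list)


def build_context(part: Part, orders: list[ProductionOrder]) -> dict:
    """Assemble context only from the objects passed in and their links."""
    affected = [o for o in orders if o.part_id == part.part_id]
    return {
        "part": part.part_id,
        "affected_orders": [o.order_id for o in affected],
        "units_at_risk": sum(o.quantity for o in affected),
        "suppliers": {s.name: s.reliability() for s in part.alternative_suppliers},
    }


# Illustrative sample data (made up for this sketch).
part = Part(
    part_id="P-100",
    in_shortage=True,
    alternative_suppliers=[
        Supplier("Supplier A", [True, True, True, False]),
        Supplier("Supplier B", [True, False]),
    ],
)
orders = [
    ProductionOrder("PO-1", "P-100", 100),
    ProductionOrder("PO-2", "P-200", 40),
    ProductionOrder("PO-3", "P-100", 50),
]
context = build_context(part, orders)
print(context)
```

The order for the unrelated part `P-200` never reaches the resulting context. Nothing outside the objects that were passed in is considered.
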
AIP reasoned only within that defined context. It was not trying to understand the entire supply chain. It focused on the variables we provided and produced a recommendation grounded in those relationships.

This grounding is what separates AIP from model-centric approaches. A typical LLM can only infer context from text. AIP does not need to guess. When it receives ontology objects, it receives the meaning behind them. It knows how they relate to the rest of the business and what limits or dependencies they carry. This is what allows AIP to operate with accuracy. It begins with context that is already structured, governed, and connected.

How AIP Reasons

AIP does not magically scan the entire ontology or attempt to understand the full enterprise at once. It reasons inside the boundaries you give it. Ontology objects passed into AIP Logic act like variables, and these variables define what the model should consider and what it should ignore. This keeps the reasoning focused, reliable, and aligned with the exact scenario the user is working with.

A simple analogy helps. When you ask ChatGPT to plan a meal, you might say, “You have tomatoes, basil, and pasta, and you want something Italian.” The ingredients and the cuisine become the variables. Add dietary restrictions, cooking style, personal preferences, or time constraints, and the reasoning becomes richer because the context becomes richer. The model did not change. The inputs did.

AIP works the same way, except the variables are ontology objects with relationships, history, and constraints already attached to them. You might pass a component that is in shortage, a supplier with known performance issues, or an asset with a long maintenance history. AIP then reasons with the context carried by those objects. It understands the relationships they have across the enterprise and the limits they operate within.

The scale changes when decades of enterprise data enter the ontology.
Instead of text-based hints, AIP receives objects that carry the memory of the organisation. The reasoning does not become better because the model is larger. It becomes better because the context is deeper.

The Context Flywheel

AIP becomes more capable as the context around it grows. When new data enters Foundry, it is shaped into ontology objects that carry meaning, relationships, and constraints. That ontology becomes the context AIP uses to reason. Better context leads to smarter decisions, and each decision creates new signals that flow back into the system. The flywheel is simple.

Data → Ontology → Context → Smarter Decisions → Compounds

AIP does not improve because the model changes. It improves because the grounding becomes richer. Context builds on itself and creates value every time the system is used.

Flywheel image generated using AI

AIP Components and the Learning Loop

AIP is built around a small set of components that work together to turn context into action. Each one serves a specific purpose, but the value comes from how they connect. Combined with the ontology, they form a loop that improves decision quality over time.

AIP Logic

Logic is where grounded reasoning happens. Logic blocks receive ontology objects, reason over the relationships they carry, and produce structured outputs that drive workflows. It is a no-code environment for building AI-powered functions that use both structured and unstructured ontology data. Logic lets you automate and orchestrate decisions inside the context of the business.

AIP Agent Studio

Agent Studio lets you create interactive agents that work with enterprise-specific context. These agents can call tools, edit ontology objects, automate manual actions, or complete multi-step tasks. They are not the operating layer. They provide an interface into Logic and the ontology.

AIP Evals

Evals make AIP reliable. They let teams test how reasoning behaves across scenarios.
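
As a rough picture of what testing reasoning across scenarios can look like, the sketch below runs a decision function against a handful of scenarios and reports a pass rate. It is a plain-Python illustration, not the AIP Evals interface: the `run_evals` helper, the scenario format, and the `pick_supplier` rule (a deterministic stand-in where a real setup would call a model) are all hypothetical.

```python
from typing import Callable

# Hypothetical scenario format: (input facts, expected recommendation).
Scenario = tuple[dict, str]


def run_evals(decide: Callable[[dict], str], scenarios: list[Scenario]) -> float:
    """Return the fraction of scenarios where `decide` gives the expected answer."""
    passed = 0
    for facts, expected in scenarios:
        actual = decide(facts)
        if actual == expected:
            passed += 1
        else:
            # Surface the failing case so it can be debugged.
            print(f"FAIL {facts}: expected {expected!r}, got {actual!r}")
    return passed / len(scenarios)


def pick_supplier(facts: dict) -> str:
    # Stand-in for a reasoning step: choose the supplier with the
    # highest reliability score. A real step would involve a model.
    suppliers = facts["suppliers"]
    return max(suppliers, key=suppliers.get)


# Illustrative scenarios (made up for this sketch).
scenarios = [
    ({"suppliers": {"A": 0.75, "B": 0.5}}, "A"),
    ({"suppliers": {"A": 0.4, "B": 0.9}}, "B"),
]
pass_rate = run_evals(pick_supplier, scenarios)
print(f"pass rate: {pass_rate:.0%}")
```

Because `run_evals` takes any callable with the same shape, two candidate decision functions can be scored on the same scenarios and compared side by side.
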
You can set up test cases, compare models, and debug reasoning steps. Evals turn LLM behaviour into something measurable and accountable.

AIP Threads

Threads provide state. When a task spans multiple steps or needs a longer window of interaction, threads preserve context so the model can build on previous reasoning.

The Learning Loop

A decision is […]