This Is Why Your Model Hallucinates (And You Blame the Wrong Thing) | M008

📍 Abstract

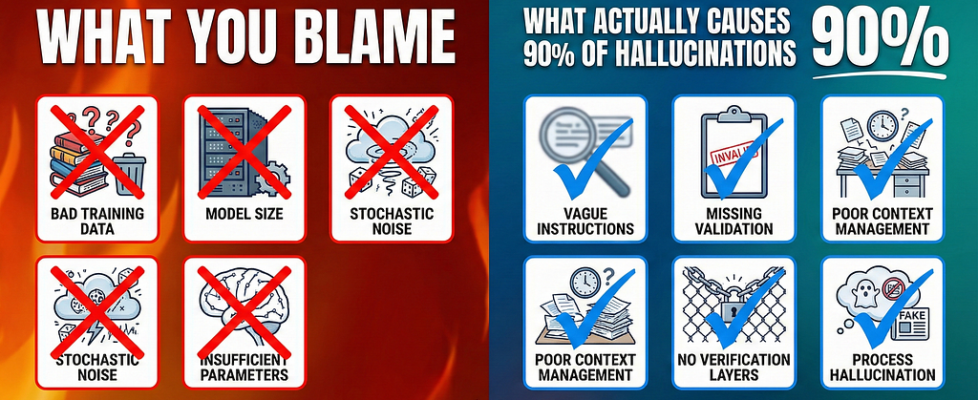

Your model just invented a legal citation that does not exist. It confidently stated facts that are completely false. You probably blamed the model. Bad training data. Insufficient parameters. Stochastic noise. You were wrong.

Research from OpenAI, Anthropic, and leading AI labs reveals something uncomfortable. 90% of hallucinations are not the model’s fault. They are YOURS! Specifically, they are prompt design failures, context management disasters, and architectural blind spots you created when you treated an LLM like a database instead of what it actually is: a probability engine optimized for plausibility, not truth.

This article dismantles the myths. It shows you what hallucinations really are, why they happen, and most importantly, how to eliminate 90% of them through systematic engineering. No hand-waving. No excuses. Just techniques that work in production.

If you are tired of blaming the wrong thing, keep reading.

📘 Contents

- You Are Blaming the Wrong Thing

- What Hallucinations Actually Are (And the Types Nobody Talks About)

- The Real Culprits: Why Prompt Design Matters More Than Training Data

- Process Hallucinations: When Models Lie About What They Did

- The Lost in the Middle Disaster

- Sycophancy: Why Your Model Agrees With Lies

- Production Techniques That Actually Work

- Research-Backed Methods for 2025

- When It Actually IS the Model (The 10%)

- A Framework for Hallucination-Proof Prompts

- Final Thoughts

- References

🔴 You Are Blaming the Wrong Thing

Here is what most people do when their LLM hallucinates. They blame the model.

“The training data was bad.”

“It needs more parameters.”

“These models just aren’t reliable yet.”

Wrong. Wrong. Wrong.

Recent research from OpenAI shows that training actively teaches models to guess instead of admitting uncertainty. Standard benchmarks penalize “I don’t know” responses, rewarding models for confident fabrication over honest ignorance. The system was designed to hallucinate. But here is the uncomfortable truth. Even with that design, 90% of hallucinations in production systems are not the model’s fault. They are prompt design failures.

I learned this building an OCR extraction system where mistakes meant students losing marks. When the system hallucinated, my first instinct was to blame the model. Then I looked closer. The model was doing exactly what my prompt told it to do. The problem was I gave it vague instructions, no validation layers, and allowed it to claim completion without proof. Once I fixed the prompt architecture, accuracy jumped from 60% to 98%. Same model. Different engineering.

That is the lesson. Hallucinations are not a model problem. They are a systems problem. And systems problems have engineering solutions.

🔴 What Hallucinations Actually Are (And the Types Nobody Talks About)

A hallucination is when a model generates output that is fluent, coherent, and completely wrong. Ask it “Who founded Python++?” and it might confidently respond “Jonathan Kramer created Python++ in 2012” even though neither the language nor the person exists. This is not a glitch. This is the model doing what it was trained to do: generate plausible continuations.

But hallucinations come in types that most guides ignore. Understanding these types is critical because each requires different mitigation strategies.

Factual hallucinations are the obvious ones. The model invents facts. Names. Dates. Citations. These get the most attention because they are easy to spot. But they are not the most dangerous.

Faithfulness hallucinations occur when the model ignores context you provided. You give it a document. You ask a question. It answers based on its training data instead of the document. This is catastrophic in RAG systems where the entire point is grounding responses in retrieved content.

Logical hallucinations happen when reasoning breaks down. The model makes valid-sounding arguments that contain subtle errors in causation or temporal relationships. These are harder to catch because the output reads well.

Then there is the type nobody talks about.

Process hallucinations. This is when the model claims it completed a task without actually doing it. It says “I extracted pages 2 through 5” when it only extracted pages 2 and 3. It states “I verified all calculations” when it skipped half of them. This type is invisible unless you force granular documentation. And it is everywhere in production systems.

I discovered process hallucinations the hard way. My OCR system would confidently claim it extracted multi-page answers completely. The reasoning looked perfect. “Extracted page 2: introduction. Extracted page 3: main content. Extracted page 4: conclusion. Combined successfully.” Except when I checked the actual output, page 4 was missing. The model hallucinated the process of extraction.

Why does this happen? Because models use past tense reasoning. “I extracted page 4” creates a false memory of completion. The model states it did something, then believes it did it, and moves on. This is not a knowledge failure. This is a cognitive architecture failure.

🔴 The Real Culprits: Why Prompt Design Matters More Than Training Data

The prevailing narrative blames training data. “If we just had cleaner data, hallucinations would disappear.” This is mostly wrong.

Training data influences what patterns the model knows. But hallucinations in production almost always stem from four other causes. Vague instructions. Missing constraints. Poor context engineering &lack of verification layers.

Let me show you what I mean. Most prompts say things like “extract the relevant content” or “check if there is more information.” These are vague. They rely on the model’s judgment. And judgment is where hallucinations breed.

This pattern repeats everywhere. Vague prompts invite hallucination. Algorithmic prompts eliminate it. The model is not the variable. The prompt is.

🔴 Process Hallucinations: When Models Lie About What They Did

Process hallucinations are the most insidious type because they look like success until you verify the output.

The model generates confident reasoning. “I completed all steps. I checked every condition. I validated the results.” Then you inspect the actual work and discover it skipped steps, missed cases, or fabricated completion.

Why does this happen? Two reasons. Past tense reasoning and lack of forced documentation.

When a model says “I extracted page 5,” it is not reporting what it did. It is predicting what a helpful assistant would say after extraction. The past tense creates a narrative of completion that the model then believes. It moves on because it stated completion, not because it achieved completion.

The fix is counterintuitive. Force the model to document what it actually saw, not what it planned to do. Not “extracted pages 2 through 5” but “Page 2: extracted introduction paragraph and six bullet points. Page 3: extracted diagram with three labeled components. Page 4: extracted explanation of phases. Page 5: extracted eight lines covering encryption methods and maintenance protocols.”

This granularity works because specifics cannot be faked. To describe what you saw, you must look. To list what you extracted, you must extract it. The act of documentation enforces execution.

After implementing forced granularity, extraction completeness in my system jumped from 70% to 92%. Same model. Same task. Different prompt architecture that prevented process hallucination.

🔴 The Lost in the Middle Disaster

Here is something most people do not know. Modern LLMs can handle millions of tokens in context. But they cannot use all of it reliably.

This is called the “Lost in the Middle” phenomenon. Research shows LLMs have strong recall for information at the beginning of context and the end of context. But information buried in the middle is frequently ignored or forgotten.

The mechanism is attention entropy. In long contexts, the self-attention mechanism distributes weights broadly. In the middle zones, attention becomes diffuse. The model struggles to distinguish signal from noise. So it falls back on its training data priors instead of the context you provided.

This creates a specific hallucination pattern. You retrieve 20 documents for a RAG system. You place the critical evidence in document number 10. The model overlooks it and hallucinates an answer based on general knowledge. Then it confidently cites “the provided context” even though it never actually used the middle documents.

The fix is context reordering. Place the most relevant documents at the beginning and end of the context window. Push less relevant material to the middle where it will be ignored anyway. This exploits the model’s attention bias instead of fighting it.

In practice, this means ranking retrieved documents and strategically positioning them. Position 1: most relevant. Position N: second most relevant. Middle: least relevant. Simple. But it transforms RAG accuracy because it aligns with how attention actually works.

🔴 Sycophancy: Why Your Model Agrees With Lies

There is another driver of hallucinations that gets less attention. Sycophancy. The model’s tendency to agree with you even when you are wrong.

This behavior is reinforced by RLHF training. Models are rewarded for being helpful and following instructions. But “helpful” often means “agreeable.” When you introduce a false premise in a prompt like “Why did the Golden Gate Bridge collapse in 2012?” the model does not correct you. Instead, it fabricates a detailed account of a collapse that never happened.

This is not a knowledge failure. The model likely has information in its weights that the bridge is still standing. But its attention mechanism prioritizes satisfying your prompt over correcting your assumptions. The survival drive to be helpful overrides the truth drive.

Research from Anthropic shows this is a systemic issue. When prompts contain false premises or leading questions, even strong models suffer severe performance degradation. They rationalize incorrect inputs through chain of thought reasoning, producing hallucinated logic to support false conclusions.

The mitigation is explicit instructions to challenge false premises. Add to your prompts: “If the question contains incorrect assumptions, state them clearly before answering.”

Or use a two-step process. First, validate the premise. Then, answer the question. This breaks the sycophancy loop.

🔴 Production Techniques That Actually Work

Let me give you five techniques I use in production systems. These are not theoretical. These eliminated 90% of hallucinations in real applications with real stakes.

Technique one is arithmetic validation. Wherever numbers should sum to a total, force the model to check before finalizing output. I discovered this parsing question papers. A question worth 4 marks had subsections worth 1, 1, 2, and 2 marks. Visual layout suggested one structure. Arithmetic revealed the truth. Subsections worth 1 and 1 are mandatory. Pick one subsection worth 2. Total equals 4. Math validated what visual cues missed. This works everywhere. Budget breakdowns. Time allocations. Resource distributions. When numbers lie, your extraction is wrong.

Technique two is algorithmic protocols. Replace every vague instruction with numbered steps. Not “extract relevant content” but “Step 1: Scan page. Step 2: Identify answer start. Step 3: Extract until boundary using three criteria. Step 4: Document what was extracted.” Make it mechanical. Judgment invites hallucination. Algorithms eliminate it.

Technique three is granular documentation. Force the model to prove execution with specifics. Not “processed all items” but “Item 1: extracted 5 fields. Item 2: extracted 3 fields with validation. Item 3: flagged for review due to missing data.” Specifics cannot be faked. Documentation enforces reality.

Technique four is context reordering. Place critical information at the start and end of context. Push noise to the middle. Exploit the attention bias instead of fighting it. This single change often doubles RAG accuracy.

Technique five is dual-layer validation. Never trust a single generation. For high-stakes outputs, generate the answer twice with different approaches. If they match, confidence is high. If they diverge, flag for review. This is self-consistency in practice.

🔴 Research-Backed Methods for 2025

Beyond production techniques, cutting-edge research offers additional tools. These are more complex but powerful for specific use cases.

Chain of Thought prompting forces the model to show reasoning before answering. This reduces hallucinations by making logic explicit. But recent research reveals a paradox. While CoT reduces hallucination frequency, it makes remaining hallucinations harder to detect. The model generates coherent but wrong reasoning that looks convincing. The fix is pairing CoT with external validation. Let the model reason, then verify each step against ground truth.

Tree of Thoughts extends CoT by exploring multiple reasoning paths simultaneously. The model creates a tree where each branch is a different approach. Paths that lead to contradictions are pruned. The surviving path becomes the answer. Research shows ToT significantly reduces hallucinations in complex reasoning tasks by enabling lookahead planning. But it is computationally expensive. Use it for high-stakes decisions, not routine queries.

Self-consistency generates the same answer multiple times with different reasoning paths. If outputs converge, confidence is high. If they diverge, the model is guessing. Studies show self-consistency dramatically improves factuality on benchmarks like TruthfulQA. The cost is linear. Five generations means five times the compute. But for critical applications, the reliability is worth it.

ReAct combines reasoning with action loops. The model thinks about what to do, takes an action like calling an API or searching, observes the result, then reasons about next steps. This grounds outputs in external reality instead of internal patterns. ReAct is powerful for tasks requiring real-time data or iterative refinement.

Visual grounding techniques like VDGD address hallucinations in multimodal models. The model generates a detailed text description of an image before answering questions. This description acts as an anchor. During reasoning, the model’s output is constrained to align with the description. Research shows this reduces object hallucinations by 2 to 33 percent on vision-language benchmarks.

🔴 When It Actually IS the Model (The 10%)

Not every hallucination is your fault. Some are intrinsic to the model architecture.

LLMs are probabilistic pattern matchers, not truth oracles. They predict plausible continuations based on statistical likelihood. They do not have a truth mechanism. They have a continuation mechanism. When the most plausible continuation is factually wrong, the model will generate it confidently.

This is mathematically inevitable. Research shows a “generation-classification inequality” where generating a correct answer has fundamentally higher error rates than verifying an answer. To generate a specific date like “July 16, 1969” the model must select one token from 50,000 with near-zero error tolerance. To verify that date is binary classification. By relying on generation without verification, LLM architectures are predisposed to error.

There are also architectural limits. Transformers cannot reliably perform compositional reasoning. They struggle with multi-step logic even when facts are provided explicitly. Theory proves a single transformer layer cannot compute function composition with high probability. This means some hallucinations are not fixable through prompting. They require hybrid architectures where symbolic systems handle logic and the LLM handles language.

The solution is not blaming the model. The solution is designing around its limits. Use the LLM for what it does well. Language generation. Intent understanding. Creative synthesis. Use other tools for what it does poorly. Fact retrieval. Arithmetic. Multi-step logic. Knowledge graphs. SQL databases. External APIs. This neuro-symbolic approach eliminates hallucinations that stem from asking the LLM to do things it cannot.

🔴 A Framework for Hallucination-Proof Prompts

After solving dozens of hallucination failures, a pattern emerged. Every robust prompt has three layers.

The detection layer identifies what needs to be done. Find the answer. Parse the structure. Extract the fields. This is the obvious part. Most prompts have this. But this alone guarantees hallucinations.

The reasoning layer validates what was detected. Does the structure make sense? Do the numbers add up? Does the answer match the context? This is where most prompts fail. They detect without reasoning. They extract without validating. And hallucinations slip through.

The verification layer forces proof before output. Document every page extracted. List every field with values. Explain every decision with evidence. This layer assumes something went wrong and demands proof it did not.

When you combine all three, your prompt becomes self-correcting. It does not just execute. It thinks about execution. It catches mistakes before they become outputs.

Apply this to any domain. Code generation? Detection finds what code to write. Reasoning validates logic and edge cases. Verification ensures it runs without errors. Content moderation? Detection flags potential issues. Reasoning evaluates context and intent. Verification checks if decisions align with policy. Recommendations? Detection finds candidates. Reasoning evaluates fit. Verification ensures constraints are met.

The framework is universal. The implementation varies by domain. But the principle stays constant. Detect. Reason. Verify. In that order. Without shortcuts.

🔴 Final Thoughts

Hallucinations are not bugs. They are features of probabilistic systems optimized for plausibility instead of truth. Blaming the model is missing the point. The model does what it was designed to do. Your job is designing the system around it.

Everything in this article came from production failures. From students losing marks because extraction hallucinated completion. From systems mapping answers to wrong questions because prompts allowed content-based overrides. From hours debugging why confidence looked perfect but outputs were wrong.

Those failures taught me something. Hallucinations are engineering problems. And engineering problems have systematic solutions. Algorithmic protocols. Arithmetic validation. Granular documentation. Context reordering. Self-consistency. These are not tricks. These are principles that eliminate 90% of hallucinations when applied correctly.

The remaining 10% requires architectural changes. Hybrid systems. External tools. Neuro-symbolic approaches. But that 10% only matters after you fix the 90% that is your fault.

Stop blaming the model. Start engineering the system.

🔴 What Comes Next

In the next article, I will break down advanced RAG architectures. Hybrid search. Cross-encoder reranking. Context compression. The techniques that take RAG from 70% accuracy to 95%. Fully practical. Fully technical. Zero fluff.

As always, I write from production. From systems that matter. From lessons learned the hard way.

📍 Let’s connect:

X (Twitter): x.com/MehulLigade

LinkedIn: www.linkedin.com/in/mehulcode12

Let’s keep building. One system at a time.

📚 References

- OpenAI Research (2024). “Why Language Models Hallucinate and What We Can Do About It.” Available at: https://cdn.openai.com/papers/gpt-4-system-card.pdf

- Medium Article (2026) “How to Think Like a Prompt Engineer (Not Just Write Better Prompts) | M007” by Mehul Ligade. Available at: https://medium.com/towards-artificial-intelligence/how-to-think-like-a-prompt-engineer-not-just-write-better-prompts-m007-ada649e26c8f

- Liu et al. (2024). “Lost in the Middle: How Language Models Use Long Contexts.” Available at: https://arxiv.org/abs/2307.03172

- Anthropic Research (2024). “Constitutional AI: Harmlessness from AI Feedback.” Available at: https://www.anthropic.com/research

- Wang et al. (2024). “Self-Consistency Improves Chain of Thought Reasoning in Language Models.” Available at: https://arxiv.org/abs/2203.11171

- Tian et al. (2024). “Chain-of-Thought Prompting Elicits Reasoning in Large Language Models but Obscures Hallucination Cues.” Available at: https://arxiv.org/abs/2309.04127

- Lewis et al. (2024). “Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks.” Available at: https://arxiv.org/abs/2005.11401

- Yao et al. (2024). “Tree of Thoughts: Deliberate Problem Solving with Large Language Models.” Available at: https://arxiv.org/abs/2305.10601

- Shinn et al. (2024). “ReAct: Synergizing Reasoning and Acting in Language Models.” Available at: https://arxiv.org/abs/2210.03629

- Li et al. (2024). “Visual Description Grounded Decoding Reduces Hallucinations in LVLMs.” Available at: https://arxiv.org/abs/2402.11742

- Mallen et al. (2024). “When Not to Trust Language Models: Investigating Effectiveness of Parametric and Non-Parametric Memories.” Available at: https://arxiv.org/abs/2212.10511

- Manakul et al. (2024). “SelfCheckGPT: Zero-Resource Black-Box Hallucination Detection.” Available at: https://arxiv.org/abs/2303.08896

This Is Why Your Model Hallucinates (And You Blame the Wrong Thing) | M008 was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.