TTS LATENCY JUST DIED: This One Generates Perfect Speech in ONE STEP (10X Faster Than ElevenLabs)

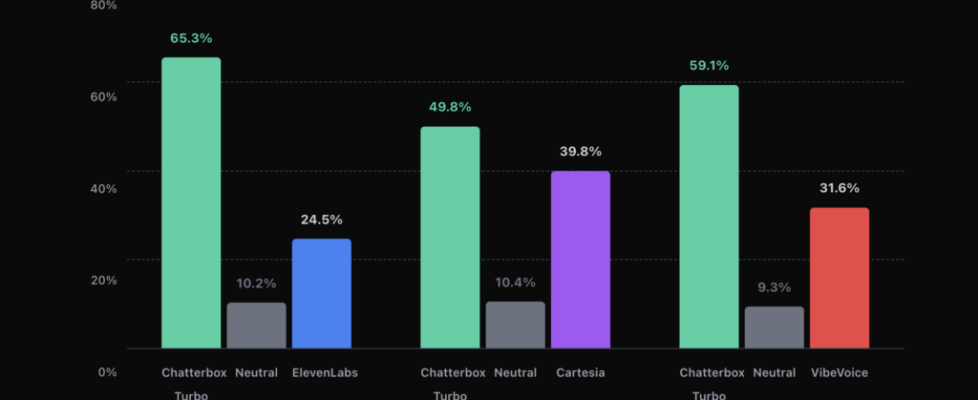

Author(s): Gowtham Boyina

Originally published on Towards AI.

How This Open-Source Voice Agent Model Kills the 10-Step TTS Bottleneck Forever: Real-Time Conversations Under 200ms with Natural Laughter, Coughs & Zero-Shot Voice Cloning

I’ve worked with text-to-speech models for voice agents, and they share a persistent latency problem: producing high-fidelity audio takes multiple decoder steps, typically 10 or 20 and sometimes 50 iterations. Each step adds 20–50ms of latency. For real-time voice agents, where every millisecond counts, this compounds quickly. You either accept the latency hit or sacrifice audio quality for speed.

This article discusses Chatterbox-Turbo, an open-source text-to-speech model developed by Resemble AI that reduces audio-generation latency by decoding in a single step. That makes voice agents more responsive and allows more realistic speech, including natural expressions such as laughter and coughing, while maintaining high audio quality. The model is suited to real-time applications, supports zero-shot voice cloning, and has a simplified architecture that makes it efficient for developers building responsive, human-like voice interfaces.
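The latency arithmetic from the introduction can be checked with a quick back-of-the-envelope sketch. The step counts, the 20–50ms per-step range, and the 200ms target are the figures quoted in the article, not measurements. The function name `decoder_latency_ms` is hypothetical, and the sketch assumes a single-step decoder pays the same per-step cost as a multi-step one, which the article does not confirm.

```python
def decoder_latency_ms(steps, per_step_ms=(20, 50)):
    """Return (best, worst) decoder latency in ms for a given step count.

    per_step_ms is the per-step range quoted in the article (20-50 ms);
    treating every step as equally expensive is a simplifying assumption.
    """
    low, high = per_step_ms
    return steps * low, steps * high


# Real-time target taken from the article's subtitle ("under 200ms").
BUDGET_MS = 200

for steps in (1, 10, 20, 50):
    best, worst = decoder_latency_ms(steps)
    # Compare the worst case against the budget, since a voice agent
    # has to hold its latency target on every turn.
    verdict = "within" if worst <= BUDGET_MS else "over"
    print(f"{steps:>2} steps: {best}-{worst} ms ({verdict} the {BUDGET_MS} ms budget)")
```

Under these assumptions, 10 steps already cost 200–500ms in the decoder alone, while a single step costs 20–50ms. That leaves room in a 200ms budget for the rest of the voice pipeline.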