Fine-Tuning Large Language Models (LLMs) Without Catastrophic Forgetting

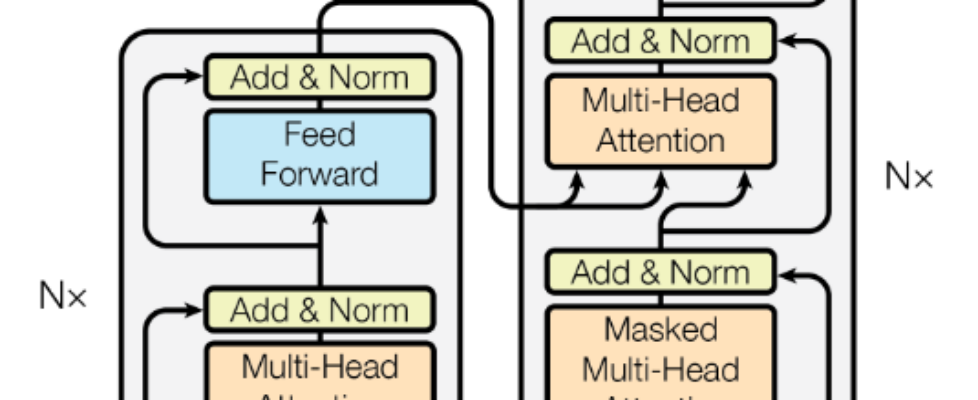

Author(s): Sachchida Nand Singh

Originally published on Towards AI.

Introduction

Fine-tuning large language models (LLMs) is now the standard way to adapt foundation models to domains such as healthcare, finance, legal text, customer support, or internal enterprise data. However, naive fine-tuning often leads to catastrophic forgetting, where the model learns the new task but degrades on previously learned capabilities.

This article provides a practical, engineering-focused guide to:

- Fine-tuning strategies
- Preventing catastrophic forgetting
- Where and how to apply LoRA / QLoRA
- Layer-by-layer tuning strategies for GPT-style and encoder–decoder models
- Choosing between LoRA, adapters, and prefix tuning

Why Fine-Tuning Matters

Large Language Models (LLMs) like GPT, LLaMA, and T5 are trained on massive amounts of general text. Out of the box, they know language, but they don't know your domain, your task, or how you want them to behave.

Fine-tuning is the process of adapting these models so they:

- Understand your domain (medical, legal, finance, etc.)
- Perform specific tasks (QA, summarization, classification)
- Follow instructions reliably
- Behave safely and consistently

However, fine-tuning must be done carefully, otherwise the model may forget what it already learned.

Note: Fine-Tuning Comes Before Pruning

Pretrained Model → Fine-Tuning (Full / PEFT) → (Optional) Pruning / Quantization → Deployment (Edge / Cloud)

Pruning removes model capacity. Fine-tuning needs that capacity to adapt effectively. Therefore, pruning should be applied after fine-tuning, especially for edge deployment.

The Big Risk: Catastrophic Forgetting (In Simple Terms)

Catastrophic forgetting occurs when gradient updates overwrite weights that encode general language knowledge.
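To see the overwriting effect in miniature, here is a toy sketch in plain Python. It is not an LLM: it assumes a one-weight linear model, a made-up "general" task (y = 2x), and a made-up "domain" task (y = 3x), and all names (`mse`, `sgd`, `general`, `domain`) are hypothetical. It only illustrates how aggressive updates on new data can erase what a shared weight had learned, while gentler updates erase less.

```python
def mse(w, data):
    """Mean squared error of the one-weight model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def sgd(w, data, lr, steps):
    """Plain full-batch gradient descent on the MSE loss."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


xs = [0.5, 1.0, 1.5, 2.0]
general = [(x, 2.0 * x) for x in xs]  # stand-in for pretraining data (assumption)
domain = [(x, 3.0 * x) for x in xs]   # stand-in for fine-tuning data (assumption)

# "Pretrain" on the general task until the weight has converged.
w_pre = sgd(0.0, general, lr=0.1, steps=200)

# Aggressive fine-tuning: high learning rate, many steps.
w_aggressive = sgd(w_pre, domain, lr=0.1, steps=200)

# Gentle fine-tuning: low learning rate, few steps.
w_gentle = sgd(w_pre, domain, lr=0.01, steps=5)

for name, w in [("pretrained", w_pre), ("aggressive", w_aggressive), ("gentle", w_gentle)]:
    print(f"{name:>10}: w={w:.3f}  loss on general task={mse(w, general):.4f}")
```

In this toy, the aggressive run ends with a much higher loss on the general task than the gentle run. A real model has many more weights to spread the change across, but the same trade-off between how far the weights move and how much old behaviour survives applies.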
In simple words: “The model learns the new thing, but forgets the old things.”

This happens when:

- Learning rates are too high
- All layers are updated aggressively
- The dataset is small
- Important layers are overwritten

Preventing forgetting is largely about where, how much, and how aggressively we update parameters.

Learning Rate: Why Warmup + Cosine Decay Is Important

Warmup

Training starts with a very small learning rate and slowly increases it. Why?

- Prevents sudden weight changes
- Protects pretrained knowledge
- Stabilizes early training

Cosine Decay

After reaching the peak, the learning rate gradually decreases. Why?

- Learn faster early
- Fine-tune gently later
- Reduce forgetting

This schedule is a widely used default for transformer fine-tuning.

Understanding Transformer Layers

LLMs are built from many stacked Transformer layers (for example, the base GPT-2 model has 12 decoder-only layers). Each layer tends to learn different features. For example, in a transformer trained on an English summarization task, the layers might roughly specialize as follows:

| Layer | What it learns |
| --- | --- |
| Embeddings | Word meaning |
| Lower layers | Grammar, syntax |
| Middle layers | Meaning, relationships |
| Upper layers | Task behavior, style |

Key idea: lower layers hold the basics of language, so change them as little as possible. Think of a child who has learned a language and now needs to become an expert in one field: the basic language skills should stay intact while the specialist knowledge is added on top. In practice this means training the higher layers and, unless you are doing full fine-tuning, freezing the lower ones in most cases.

Example: GPT-style model with 12 layers

- Embeddings → frozen
- Layers 1–6 → frozen
- Layers 7–9 → lightly trainable
- Layers 10–12 → fully adaptable

Parameter-Efficient Fine-Tuning (PEFT)

Instead of training billions of parameters, PEFT methods add small trainable components.
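To make "small trainable components" concrete, here is a minimal pure-Python sketch of one such component: a low-rank pair of matrices added next to a frozen weight, which is the form LoRA uses. The toy sizes, the helper names (`matvec`, `lora_param_count`, `forward`), and the initialisation values are assumptions for illustration, not code from any library.

```python
import random


def matvec(matrix, vector):
    """Multiply a list-of-rows matrix by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def lora_param_count(d_in, d_out, r):
    """Trainable parameters in a rank-r pair: A is (r x d_in), B is (d_out x r)."""
    return r * d_in + d_out * r


# Toy sizes chosen for illustration only.
d, r, alpha = 8, 2, 4
rng = random.Random(0)

W = [[rng.gauss(0, 1) for _ in range(d)] for _ in range(d)]     # frozen pretrained weight
A = [[rng.gauss(0, 0.01) for _ in range(d)] for _ in range(r)]  # trainable, small random init
B = [[0.0] * r for _ in range(d)]                               # trainable, zero init


def forward(x):
    """Frozen path plus scaled low-rank correction: W x + (alpha / r) * B (A x)."""
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))
    return [b + (alpha / r) * dl for b, dl in zip(base, delta)]


x = [1.0] * d
# With B initialised to zero, the adapted layer starts out identical to the frozen one.
starts_unchanged = forward(x) == matvec(W, x)

# Size comparison for a 768 x 768 projection (the hidden size of base GPT-2).
full = 768 * 768
adapter = lora_param_count(768, 768, r=8)
print(starts_unchanged, full, adapter)
```

For the 768 x 768 case the full matrix has 589,824 weights while the rank-8 pair has 12,288, about 2% of the original. Only the pair receives gradient updates; the frozen matrix is never touched.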
Benefits:

- Faster training
- Less memory
- Much less forgetting

LoRA Explained Simply

LoRA (Low-Rank Adaptation) works by:

- Freezing original model weights
- Adding small trainable matrices
- Learning how to adjust behavior, not rewrite knowledge

LoRA is often applied only to:

- Attention Query (Q)
- Attention Value (V)

This changes what the model focuses on and what it outputs.

Where LoRA Is Placed in a Transformer

Inside each transformer block:

- Attention → LoRA applied
- Feed-forward network → frozen

This protects stored knowledge while allowing adaptation.

Layer-by-Layer LoRA Strategy (GPT Example)

- Embedding → frozen
- Layer 1–6 (lower) → frozen (no LoRA)
- Layer 7–9 (middle) → LoRA (low rank)
- Layer 10–12 (upper) → LoRA (higher rank)
- LM Head → trainable

Where LoRA is inserted:

- Self-attention only
- Q and V projections only

[Attention Block]

- Q_proj ← LoRA
- K_proj ← frozen
- V_proj ← LoRA
- O_proj ← frozen
- FFN ← frozen

GPT Example

| Layer Range | Role | Rank (r) | Alpha |
| --- | --- | --- | --- |
| 1–6 | frozen | – | – |
| 7–9 | middle | 4–8 | 16 |
| 10–12 | upper | 8–16 | 32 |
| LM head | task | train | – |

Encoder–Decoder Models (T5, BART)

For encoder–decoder models, the priority order is:

1. Decoder self-attention
2. Cross-attention
3. Encoder self-attention (optional)

| Component | Rank |
| --- | --- |
| Decoder self-attn | 8–16 |
| Cross-attn | 8–16 |
| Encoder self-attn | 4–8 (optional) |

HuggingFace + PEFT Code

Decoder only

```python
from transformers import AutoModelForCausalLM
from peft import LoraConfig, get_peft_model

# Load the pretrained base model; its weights stay frozen under LoRA.
model = AutoModelForCausalLM.from_pretrained(
    "gpt2",
    torch_dtype="auto",
)

lora_config = LoraConfig(
    r=16,
    lora_alpha=32,
    target_modules=["c_attn"],        # GPT-2 uses a fused QKV projection
    fan_in_fan_out=True,              # GPT-2's Conv1D stores weights as (fan_in, fan_out)
    lora_dropout=0.05,
    bias="none",
    task_type="CAUSAL_LM",
    layers_to_transform=[9, 10, 11],  # upper layers only (0-based, i.e. layers 10-12)
)

# Wrap the model with LoRA adapters and report how many parameters will train.
model = get_peft_model(model, lora_config)
model.print_trainable_parameters()
```

Note: c_attn contains the fused Q, K, and V projections. PEFT wraps the whole fused layer, so in GPT-2 the LoRA update covers K as well as Q and V.

Encoder Decoder Type (T5/BART)

```python
from transformers import AutoModelForSeq2SeqLM
from peft import LoraConfig, get_peft_model

model = AutoModelForSeq2SeqLM.from_pretrained("t5-base")

lora_config = LoraConfig(
    r=8,
    lora_alpha=16,
    target_modules=["q", "v"],  # T5 names its query and value projections "q" and "v"
    lora_dropout=0.05,
    bias="none",
    task_type="SEQ_2_SEQ_LM",
)

model = get_peft_model(model, lora_config)
model.print_trainable_parameters()
```

QLoRA (Same Logic, Less Memory)

QLoRA combines several techniques to balance memory efficiency with high performance:

- 4-bit NormalFloat (NF4): a data type designed to be information-theoretically optimal for normally distributed data. It stores the frozen pretrained weights in 4-bit precision, drastically reducing their memory footprint.
- Double Quantization: the quantization constants are themselves quantized, which saves additional memory.
- Paged Optimizers: QLoRA uses the NVIDIA unified memory feature for the optimizer states. It acts like virtual memory, automatically offloading data to CPU RAM when the GPU runs out of memory and paging it back when needed, which helps avoid out-of-memory errors.
- Low-Rank Adaptation (LoRA) Adapters: while the main model weights are frozen in 4-bit, small trainable “adapter” layers are injected into each layer of the LLM. Only these adapter weights are trained, in a higher precision (e.g., bfloat16), and gradients are backpropagated through the quantized model to update them.

Key Benefits

Memory Efficiency: QLoRA can fine-tune multi-billion parameter models on […]