Tencent Built a Billion-Parameter Model That Generates 3D Motion From Text

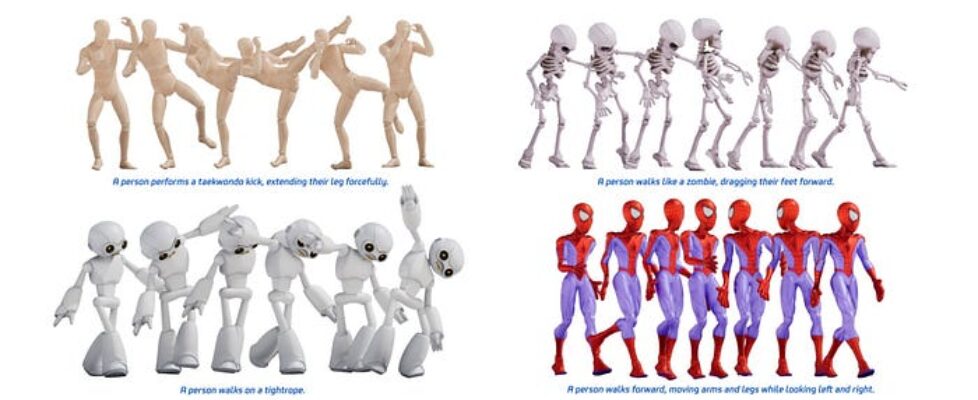

Author(s): Gowtham Boyina. Originally published on Towards AI.

And It’s the First to Scale DiT This Far

I’ve watched text-to-motion generation struggle with the same problem for years: models either understand prompts decently but generate stiff, unnatural movement, or they produce smooth motion but can’t follow complex instructions. The trade-off has been frustrating: you get one or the other, rarely both.

HY-Motion 1.0 generates skeleton-based 3D character animations from text descriptions. You write “A person performs a squat, then pushes a barbell overhead using the power from standing up,” and it outputs motion data you can plug directly into animation pipelines.

Tencent’s HY-Motion 1.0 uses a billion-parameter Diffusion Transformer (DiT) to improve both motion quality and instruction following. It is trained in three stages: broad training on extensive datasets, followed by fine-tuning, and then reinforcement learning from human feedback. The result is naturalistic 3D animation with applications in game development, film production, and AI companions. The release also comes with hardware limitations and focuses exclusively on humanoid characters.