AI-Powered Real-Time Egyptian Sign Language Translator

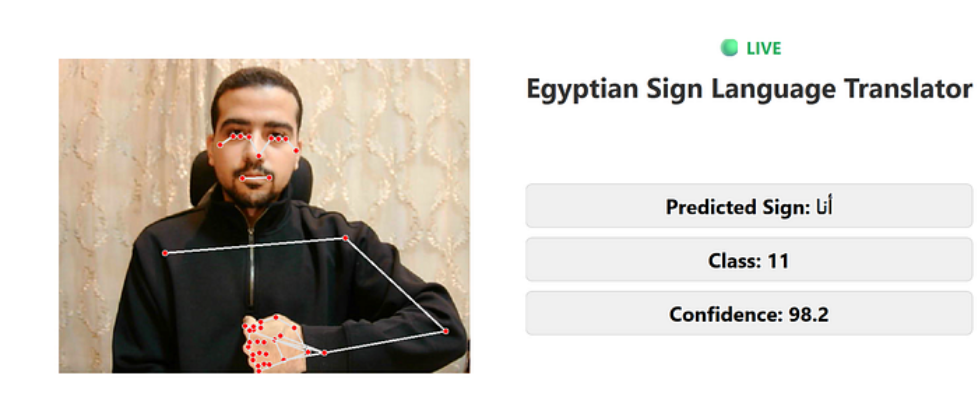

Author(s): Ahmed Ashraf. Originally published on Towards AI.

Figure 1: Real-Time Egyptian Sign Language translator

1. Introduction: Breaking Communication Barriers

Communication is a fundamental human right, yet millions of Deaf and Hard-of-Hearing (DHH) people around the world face communication barriers every day. In Egypt, the scarcity of accessibility tools for Egyptian Sign Language (ESL) makes communicating with non-signers particularly difficult, affecting education, work, and social life.

To address this challenge, we developed, for the first time, a real-time Egyptian Sign Language translator using AI and computer vision. Unlike static recognition systems that classify individual gestures in isolation, our approach analyzes sequences of hand, body, and facial movements to recognize complete words and phrases. By converting these sequences into Arabic text and speech, the system makes communication smoother and promotes the inclusion of the DHH community. The project shows how modern AI can help bridge language barriers and have a tangible social impact on everyday life.

2. Project Overview: Real-Time Translation

The core challenge in Egyptian Sign Language translation is that signs are rarely isolated: a single word or phrase may consist of several gestures performed in sequence. Recognizing them in real time therefore requires modeling temporal dependencies, not just static hand poses. Our solution uses a sequence-based recognition pipeline so that fluent signing can be translated naturally.

Key Features:

- Real-Time Recognition: Captures gesture sequences using MediaPipe Holistic, which tracks the hands, face, and body.
- Sequence Modeling: A two-layer Bidirectional LSTM classifies each complete sequence as a word or phrase.
- Context-Aware Translation: Individual gestures are combined into meaningful Arabic words and phrases.
- User Interface: Displays predictions on screen immediately, with optional Text-to-Speech output for auditory feedback.

Figure 2: Dataset Collection

Figure 3: Real-Time Recognition

This sequence-based design lets translations follow the fluidity of natural signing, making communication intuitive and efficient.

3. Technical Highlights: AI Meets Accessibility

3.1 Why MediaPipe Holistic?

Traditional computer vision approaches struggle with real-time sign recognition under varying conditions such as lighting changes, occlusion, and fast hand movements. We adopted MediaPipe Holistic, which provides fast and accurate 3D landmark detection for the face, hands, and body, making it well suited to gesture-based systems.

Advantages:

- Lighting Robustness: Works reliably under shadows and brightness changes.
- Background Invariance: Ignores background clutter by relying on a unified pose-and-hand landmark pipeline.
- Real-Time Performance: The GPU-accelerated pipeline runs at 30+ FPS.
- High-Resolution Keypoints: Extracts 543 landmarks per frame (33 pose, 468 face, and 21 per hand) for a robust gesture representation.

Figure 4: Full Pipeline Diagram

3.2 Dataset Collection and Preprocessing

To train the system, we built a custom Egyptian Sign Language dataset covering 400 distinct signs.

- Video Samples: 20 recordings per sign (16 for training, 4 for testing).
- Sequence Length: 30 frames per sample to capture the gesture's motion.
- Feature Extraction: 225 features per frame are computed from the MediaPipe Holistic landmarks.
- Storage: Features are saved as .npy files, organized by train/test split.

Data Collection Procedure:

- Automated Recording: Python scripts control webcam recording and MediaPipe landmark extraction.
- Label Mapping: Each gesture is linked to an Arabic word through an Excel sheet.
- Quality Control: Users can skip poor recordings or restart sessions.
- Diversity: Signs are performed with both the dominant and the non-dominant hand to improve model robustness.
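The 225-feature figure above is consistent with keeping the (x, y, z) coordinates of the 33 pose landmarks and of the 21 landmarks on each hand (33×3 + 2×21×3 = 225), which leaves out the 468 face landmarks. The article does not spell out the layout, so the sketch below simply assumes it. It reads the landmark attributes of the `results` object returned by MediaPipe's legacy `Holistic.process` (`pose_landmarks`, `left_hand_landmarks`, `right_hand_landmarks`), fills an undetected part with zeros (also an assumption), and saves each 30-frame sample as a `.npy` array under a hypothetical `root/split/label/index.npy` layout. The helper names `extract_keypoints` and `save_sample` are illustrative, not the project's actual code.

```python
import os

import numpy as np

# Assumed per-frame layout: 33 pose + 21 left-hand + 21 right-hand landmarks,
# each stored as (x, y, z). Face landmarks (468) are left out under this assumption.
N_POSE, N_HAND = 33, 21
FEATURES_PER_FRAME = N_POSE * 3 + 2 * N_HAND * 3  # 99 + 126 = 225
SEQUENCE_LENGTH = 30  # frames per sample, as in the dataset description


def _flatten(landmark_list, n_points):
    """Flatten a MediaPipe landmark list into x, y, z values; zeros if not detected."""
    if landmark_list is None:
        return np.zeros(n_points * 3, dtype=np.float32)
    return np.array(
        [[lm.x, lm.y, lm.z] for lm in landmark_list.landmark], dtype=np.float32
    ).flatten()


def extract_keypoints(results):
    """Turn one frame of Holistic results into a fixed-length 225-value vector."""
    pose = _flatten(results.pose_landmarks, N_POSE)
    left = _flatten(results.left_hand_landmarks, N_HAND)
    right = _flatten(results.right_hand_landmarks, N_HAND)
    return np.concatenate([pose, left, right])


def save_sample(frames, root, split, label, sample_idx):
    """Stack 30 per-frame vectors and save them to root/split/label/sample_idx.npy."""
    sequence = np.stack(frames)  # expected shape: (30, 225)
    if sequence.shape != (SEQUENCE_LENGTH, FEATURES_PER_FRAME):
        raise ValueError(f"unexpected sequence shape {sequence.shape}")
    out_dir = os.path.join(root, split, label)  # hypothetical directory layout
    os.makedirs(out_dir, exist_ok=True)
    path = os.path.join(out_dir, f"{sample_idx}.npy")
    np.save(path, sequence)
    return path
```

In a recording loop, each frame's `results` would go through `extract_keypoints`, and once 30 vectors have been collected they would be passed to `save_sample(frames, "dataset", "train", label, i)`. Zero-filling keeps every vector the same length even when a hand leaves the frame, which a fixed-input sequence model requires.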
Figure 5: Sample of Dataset

3.3 Model Architecture: Two-Layer LSTM for Sequences

The core model uses a two-layer LSTM to capture temporal dependencies across gesture sequences:

- Sequence Modeling: Learns both short-term movements and longer-term context.
- Dense + Dropout Layers: Dense layers learn generalizable patterns, while dropout reduces overfitting.
- Training: The Adam optimizer with early stopping avoids wasting epochs once performance stops improving.
- Performance: Achieved 93% training accuracy and 97% test accuracy.

Figure 6: LSTM Architecture Diagram

Advantages:

- Models sequences of gestures, not static frames.
- Real-time prediction with minimal latency.
- Stable training: LSTM gating mitigates the vanishing-gradient problem of plain RNNs.

3.4 Real-Time Inference Pipeline

Our inference pipeline continuously processes live video to produce fluent translations:

- Frame Capture: The webcam streams frames continuously.
- Keypoint Extraction: MediaPipe Holistic detects 3D landmarks in real time.
- Sliding Window: The last 30 frames are kept as the current gesture sequence.
- Model Inference: The LSTM classifies the full sequence as a word or phrase.
- Arabic Rendering: Letter reshaping and BiDi (bidirectional text) handling ensure correct right-to-left display.
- Confidence Thresholds: Only high-confidence predictions are displayed.
- Threading: Detection and prediction run in separate threads to keep the UI responsive.

Performance:

- Fast CPU inference, suitable for standard laptops.
- Lightweight system designed for accessibility without specialized hardware.

4. Social Impact: Empowering the Deaf Community

This project is more than a technical demonstration; it has real-world implications:

- Supports sequence-based sign communication in schools, workplaces, and public spaces.
- Bridges the gap between signers and non-signers, promoting social inclusion.
- Provides a foundation for Arabic sign language AI research.
- Supports future accessibility initiatives in Egypt and potentially other Arabic-speaking regions.

Observation: Early testing confirmed accurate, immediate translation of gesture sequences, enabling real-time conversational interactions.

5. Challenges and Solutions

- Variability in signing styles: a diverse dataset and preprocessing normalization.
- Limited annotated ESL data: custom data acquisition with automated landmark extraction.
- Real-time latency: a two-layer LSTM, a sliding window, and threaded prediction.

These solutions help the system work reliably across different signers, varying conditions, and real-world scenarios.

6. Future Directions

- Mobile Deployment: Bring real-time ESL translation to smartphones.
- Expanded Dataset: Include complex phrases and regional dialects.
- Collaboration: Partner with NGOs, schools, and universities for real-world adoption.
- Multilingual Support: Extend beyond Arabic for broader accessibility.
- Research & Development: Explore more advanced sequence models and AI-assisted sign prediction.

7. Conclusion

The Real-Time Egyptian Sign Language Translator demonstrates how AI can break down communication barriers and empower the Deaf community. By capturing gesture sequences and translating them into words and phrases, the system enables real-time, natural communication. Its combination of MediaPipe Holistic, LSTM sequence modeling, a responsive interface, and optional Text-to-Speech output makes it a practical, high-impact tool for accessibility and inclusion.

Contact for collaboration or demonstration:

- Ahmed Ashraf, ahmedashrafmohamed74@gmail.com, LinkedIn
- Mohamed Ibrahim, Mohibrahim2001fekry@gmail.com, LinkedIn

License: © 2025 Ahmed Ashraf. All content related to the "Real-Time Egyptian Sign Language Translator" project, including articles, tutorials, screenshots, and diagrams, is licensed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License (CC BY-NC-ND 4.0).

You are free to:
- Share: copy and redistribute this material in any medium or format.

Under the following terms:
- Attribution: You must give appropriate credit to Ahmed Ashraf, provide a link […]