LLM API Token Caching: The 90% Cost Reduction Feature When Building AI Applications

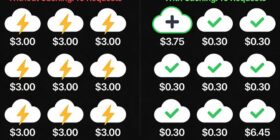

Author(s): Nikhil

Originally published on Towards AI.

If you've used Claude, GPT-4, or any modern LLM API without caching the system prompt, or any other prompt content that stays static across API calls, you've been spending far more than necessary on token processing.

Cost comparison: 10x savings on cached token reads

Token caching provides substantial cost benefits by allowing reuse of […]