How Tolan builds voice-first AI with GPT-5.1

Tolan built a voice-first AI companion with GPT-5.1, combining low-latency responses, real-time context reconstruction, and memory-driven personalities for natural conversations.

Fairness in machine learning is increasingly critical, yet standard approaches often treat data as static points in a high-dimensional space, ignoring the underlying generative structure. We posit that sensitive attributes (e.g., race, gender) do not merely shift data distributions but causally warp the geometry of the data manifold itself. To address this, we introduce Causal Manifold Fairness (CMF), a novel framework that bridges causal inference and geometric deep learning. CMF learns a latent representation where the local Riemannian […]

Let’s say an environmental scientist is studying whether exposure to air pollution is associated with lower birth weights in a particular county. They might train a machine-learning model to estimate the magnitude of this association, since machine-learning methods are especially good at learning complex relationships. Standard machine-learning methods excel at making predictions and sometimes provide uncertainties, like confidence intervals, for these predictions. However, they generally don’t provide estimates or confidence intervals when determining whether two variables are related. […]

Preliminaries: ‘steepest descent algorithm’, Linear Algebra, Calculus 1, 2. Newton’s Method: The Taylor series gives us the conditions for minimum points based on both the first-order terms and the second-order term. The first-order approximation of a performance index function produced a powerful algorithm for locating minimum points, which we call the ‘steepest descent algorithm’. Now we want to look into the second-order approximation of a function to find out whether there is an algorithm that can also work […]
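As a rough illustration of where the second-order approximation leads, here is a minimal sketch of a Newton step for minimizing a one-dimensional function. It assumes the standard update x ← x − f′(x)/f″(x), obtained by minimizing the second-order Taylor approximation around the current point. The function name `newton_minimize` and the test function f(x) = eˣ − 2x are illustrative choices, not taken from the original post.

```python
import math


def newton_minimize(grad, hess, x0, tol=1e-10, max_iter=50):
    """Minimize a 1-D function given its first and second derivatives.

    Each step minimizes the local second-order Taylor approximation,
    which gives the update x <- x - grad(x) / hess(x).
    """
    x = x0
    for _ in range(max_iter):
        g = grad(x)
        if abs(g) < tol:  # first-order condition for a stationary point
            break
        h = hess(x)
        if h <= 0:
            # The quadratic model has no minimum here, so the step is unsafe.
            raise ValueError("second derivative must be positive")
        x -= g / h
    return x


# Illustrative function: f(x) = exp(x) - 2x, whose minimum is at x = ln(2).
x_min = newton_minimize(lambda x: math.exp(x) - 2.0, math.exp, x0=0.0)
print(x_min)
```

Unlike steepest descent, this update uses curvature to choose the step length, so no separate learning rate is needed in this sketch.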

An explanation of how YOLOv1 measures the correctness of its object detection and classification predictions. The post “YOLOv1 Loss Function Walkthrough: Regression for All” appeared first on Towards Data Science.

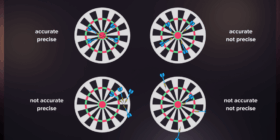

Effective evaluation metrics are crucial in assessing the performance of machine learning models. One such metric is the F1 score, which is widely used for classification problems, information retrieval, and NLP tasks. In this blog post, we’ll explore the foundational concepts of the F1 score, discuss its limitations, and look at use cases across diverse domains. What is the F1 score in machine learning? The performance of ML algorithms is measured using a set of evaluation metrics, […]
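For orientation, the F1 score is the harmonic mean of precision and recall. The following is a minimal sketch of that computation for binary labels. The function name `f1_score_binary` and the sample labels are made up for illustration and do not come from the original post.

```python
def f1_score_binary(y_true, y_pred, positive=1):
    """Compute the F1 score for one positive class from paired labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp == 0:
        # No true positives: precision or recall is zero (or undefined),
        # so this sketch reports 0.0 by convention.
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Illustrative labels: 2 true positives, 1 false positive, 1 false negative.
y_true = [1, 1, 1, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0]
score = f1_score_binary(y_true, y_pred)
print(score)
```

Returning 0.0 when there are no true positives is one common convention; other tools may warn or handle that case differently.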

In the first part, I talked about what Data Quality, Anomaly Detection, and Outlier Detection are, and about the difference between outlier detection and novelty detection. In this part, I will talk about a well-known and easy method for detecting outliers called the Interquartile Range. Introduction: The Interquartile Range method, also known as IQR, was developed by John Wilder Tukey, an American mathematician best known for the development of the FFT algorithm and the box plot.
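As a minimal sketch of the IQR method, the snippet below flags values that lie more than 1.5 × IQR below the first quartile or above the third quartile. The 1.5 multiplier is the conventional choice and is an assumption here, as are the function name `iqr_outliers`, the sample data, and the "inclusive" quartile method; other quartile definitions can give slightly different bounds.

```python
import statistics


def iqr_outliers(values, k=1.5):
    """Return the values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    # statistics.quantiles with n=4 returns the three quartile cut points.
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower = q1 - k * iqr
    upper = q3 + k * iqr
    return [v for v in values if v < lower or v > upper]


# Illustrative data with one obviously extreme reading.
data = [10, 12, 12, 13, 12, 11, 14, 13, 15, 102]
print(iqr_outliers(data))
```

Because the bounds depend only on the quartiles, a single extreme value barely moves them, which is what makes the method robust for this kind of check.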

A practical guide to Hugging Face Transformers and to how you can analyze the sentiment of your résumé in seconds with AI. The post “Hugging Face Transformers in Action: Learning How To Leverage AI for NLP” appeared first on Towards Data Science.

This article is devoted to the construction of fractional powers of operators and their matrix approximations. A key feature of this study is the use of a spectral approach that remains applicable even when the base operator does not generate a semigroup. Our main results include the convergence rate of the matrix approximation, derived from resolvent estimates, and a practical algorithm for constructing matrix approximations. The theory is supported by examples.