On Information Theoretic Bounds for SGD

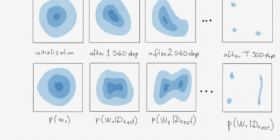

A few days ago we had a talk by Gergely Neu, who presented his recent work, "Information-Theoretic Generalization Bounds for Stochastic Gradient Descent". I’m writing this post mostly to annoy him, by presenting this work using super hand-wavy intuitions and cartoon figures. If this isn’t enough, I will even find a way to mention GANs in this context. But truthfully, I’m just excited because for once, there is a little bit of learning theory that I half-understand, […]